The magnitude of neurocognitive deficits in schizophrenia ranges from moderate to severe (1–3), and most aspects of these deficits are strongly related to real-world functioning (4, 5). The standard assessments of neurocognition in schizophrenia are performance-based tests, and clinical trials assessing the impact of new medications or behavioral therapies on neurocognitive deficits in schizophrenia typically use performance tests as the primary outcome measure. Experts from the Measurement and Treatment Research to Improve Cognition in Schizophrenia (MATRICS) project, an initiative established by the National Institute of Mental Health (NIMH), have recommended that the primary outcome measure for clinical trials of new medications to improve cognition should be a test battery assessing seven cognitive domains: vigilance, working memory, processing speed, verbal learning and memory, visual learning and memory, reasoning and problem solving, and social cognition.

However, from a clinical outcomes perspective, sole reliance on performance measures to assess cognition and cognitive change has limitations. Clinicians, family members, and patients may not fully appreciate the relevance of improved performance on cognitive tests, and these individuals are rarely qualified to measure cognitive performance. Without a framework for assessing the benefits of these treatments, clinicians may be less motivated to prescribe potentially cognition-enhancing interventions, and patients and family members may be less motivated to adhere to treatment. This issue has been of substantial importance in other conditions in which cognition is a treatment target, such as Alzheimer’s disease (6). Many clinicians would like to be able to reliably assess the opinion of a patient or of the patient’s family or caregiver about the patient’s level of neurocognitive deficit. For instance, clinicians often report that a patient appears cognitively more intact or more alert with a new antipsychotic medication, yet they have no method to measure this change. A rating scale of this kind would enable a clinician to document evidence of improvement. It also could serve as a screening instrument for research studies aiming to identify patients with cognitive impairment, or for clinicians determining whether medication specifically targeting cognitive improvement is warranted. Finally, many clinical psychiatrists may view cognition as outside their expertise because they lack an adequate tool to measure it. Such rating methods may increase the extent to which clinicians consider neurocognitive deficits as targets of their treatment strategies.

Such a measure is also likely to be useful for clinical trials. The current position of the U.S. Food and Drug Administration (FDA) is that improvement on cognitive tests will not be a sufficient criterion for accepting treatment response to a potentially neurocognition-enhancing drug. Previous FDA decisions have reflected the view that cognitive performance changes need to be accompanied by additional changes that are relevant to clinicians and consumers, referred to as face validity. The FDA’s Division of Neuropharmacological Drug Products has previously required outcomes beyond performance measures in clinical trials for the treatment of cognitive impairment in Alzheimer’s disease (7). Thus, it is likely that any clinical trial of cognitive improvement in schizophrenia will require a so-called coprimary measure in addition to cognitive performance. Furthermore, although eventual changes in real-world functioning (e.g., employment, independence in residential status) are the main goal of any treatment directed at cognitive enhancement, these changes may occur too slowly to be detected during a typical, relatively short clinical trial or may be influenced by outside factors, such as financial disincentives (8, 9).

Panel members from an FDA NIMH MATRICS workshop on Clinical Trial Designs for Neurocognitive Drugs for Schizophrenia suggested two potential types of assessment techniques for consideration as coprimary measures: functional capacity and interview-based assessment of cognition. This panel also suggested that the validity of these measures should be supported by good test-retest reliability, demonstrated associations with cognitive performance measures, and demonstrated associations with real-world functioning (10) (www.matrics.ucla.edu).

Previous studies of interview-based assessments of cognitive function in patients with schizophrenia have shown either nonsignificant or small correlations with cognitive performance (11–14). However, with the exception of the study by Stip et al. (14), these studies have not used a scale specifically designed to address the cognitive deficits of schizophrenia. In addition, these studies have relied on patients’ self-reports or clinicians’ impressions of patients’ cognitive deficits. None has included informant reports of patients’ cognitive function. It is possible that a report from an individual who sees the patient regularly is required to obtain an accurate view of the patient’s level of cognitive functioning.

The current study describes the characteristics of a new interview-based assessment of cognition administered to patients and their informants, the Schizophrenia Cognition Rating Scale (SCoRS), and the extent to which it meets the criteria for validity described by the FDA NIMH MATRICS panel. The test-retest reliability of the measure will be addressed in a separate study investigating the longitudinal use of the SCoRS. The current study tested the internal consistency and interrater reliability of the SCoRS and examined the extent to which it correlated cross-sectionally with cognitive performance, as assessed by a brief cognitive battery, the Brief Assessment of Cognition in Schizophrenia (BACS) (15), and with real-world functioning, as assessed by a clinical rating scale, the Independent Living Skills Inventory (ILSI) (16). This study also included a performance-based assessment of functional capacity, the University of California, San Diego, Performance-Based Skills Assessment (UPSA) (17), enabling a determination of the relationship between the two types of measures under consideration by the FDA as coprimary measures: interview-based assessments and functional capacity assessments.

Method

Patients

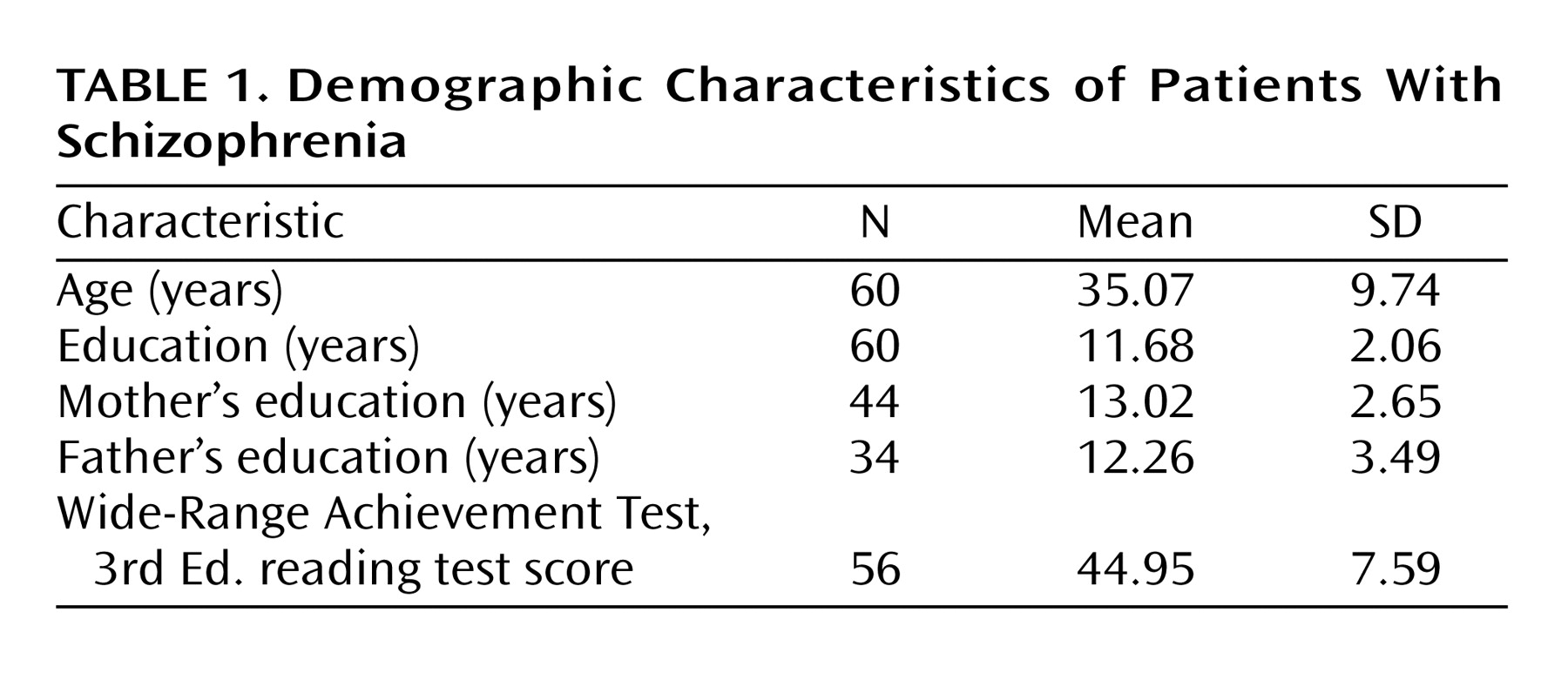

Sixty patients with DSM-IV schizophrenia were assessed with a battery of cognitive and functional measures, including the SCoRS. One patient dropped out of the study before sufficient data could be collected, and two additional patients did not complete the performance-based assessment of functional skills. The demographic characteristics of the patient group are described in Table 1. Forty-seven (78%) of the patients who provided data were men. All patients were receiving antipsychotic medications. Eight patients were receiving monotherapy with olanzapine, seven with risperidone, 10 with aripiprazole, six with clozapine, three with quetiapine, one with haloperidol, and one with diflunisal; 11 were receiving antipsychotic medication as part of a blind study of antipsychotic treatments. Thirteen patients were being treated with two antipsychotic medications. The majority of patients in this study (N=55) were inpatients in a rehabilitation center at John Umstead Hospital, where they received ongoing behavioral treatment, such as occupational therapy, recreational therapy, group therapy that focused on activities of daily living, coping skills, household management, and substance abuse counseling. Patients in this setting were required to have stable symptoms without acute exacerbation. Five additional patients who had recently been admitted to an inpatient treatment facility at John Umstead Hospital were also included in the study. All procedures were approved by the Human Subjects Committees of Duke University Medical Center and John Umstead Hospital. Patients were assessed for competence to provide informed consent; competent patients had the study explained to them and were asked to participate and provide informed consent. A separate report on the validity of neurocognitive tests in the patient group described here has been published (18).

Part 1: SCoRS

Item Generation

The SCoRS is an 18-item interview-based assessment of cognitive deficits and the degree to which they affect day-to-day functioning. A global rating is also generated. Some of the items were developed from the content of the Brief Cognitive Scale (19), which assesses patients with dementia and mild cognitive impairment. These items were modified, and additional items were developed by the study’s principal investigator (R.S.E.K.), a master’s-level psychologist (M.P.), a research assistant with 5 years of experience with patients with schizophrenia (T.M.W.), and a research assistant with 1 year of experience giving cognitive assessments to patients with schizophrenia (J.W.K.). The items were developed to assess the cognitive domains of attention, memory, reasoning and problem solving, working memory, language production, and motor skills. These domains were chosen because they are severely impaired in many patients with schizophrenia and because deficits in these areas have a demonstrated relationship to impairments in aspects of functional outcome (4, 5). The initial item pool was used in a pilot study of five patients to obtain information about the usefulness of the items and the anchor points. After these ratings, modifications were made, and the formal protocol was begun. Data from the five pilot patients are not included in this report.

Two examples of SCoRS items are “Do you have difficulty with remembering names of people you know?” and “Do you have difficulty following a TV show?”

Each item is rated on a 4-point scale, with higher ratings reflecting a greater degree of impairment. A rating of “n/a” (not applicable) is also possible (e.g., if the patient is illiterate, items related to reading are rated “n/a”). Each item has anchor points for all four levels of the scale, and these anchors focus on both the degree of impairment and the degree to which the deficit impairs day-to-day functioning. Interviewers considered only cognitive deficits and attempted to rule out noncognitive sources of the deficits. For example, if a patient had severe difficulty managing money because he had never learned how to count money, the limitation would be attributed to level of education rather than to cognitive impairment.
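Because items can be rated “n/a”, any summary of the item ratings (the scale description also refers to a mean of the 18 items) needs a rule for those items. The sketch below assumes that n/a items are simply excluded from the mean; the paper does not state how they were handled, and the function name `mean_item_score` is hypothetical.

```python
from typing import Optional, Sequence

N_ITEMS = 18                  # the SCoRS has 18 items
VALID_RATINGS = {1, 2, 3, 4}  # 4-point scale; higher = more impairment


def mean_item_score(ratings: Sequence[Optional[int]]) -> float:
    """Mean SCoRS item rating, treating None as an "n/a" item.

    Assumption (not stated in the source): n/a items are dropped from
    both the numerator and the denominator of the mean.
    """
    if len(ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item ratings, got {len(ratings)}")
    scored = [r for r in ratings if r is not None]
    for r in scored:
        if r not in VALID_RATINGS:
            raise ValueError(f"rating {r} is outside the 1-4 scale")
    if not scored:
        raise ValueError("all items rated n/a; the mean is undefined")
    return sum(scored) / len(scored)


# 16 items rated 2, one item n/a, one item rated 4.
print(round(mean_item_score([2] * 16 + [None, 4]), 2))  # 2.12
```

Under this rule the n/a item shrinks the denominator to 17, so the example mean is 36/17 rather than 36/18.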

Complete administration of the SCoRS drew on two separate sources of information that generated three different ratings: an interview with the patient, an interview with an informant (family member, friend, social worker, etc.), and a rating by the interviewer who administered the scale to the patient and the informant. Informal time estimates suggest that each interview required an average of about 12 minutes of interview time and 1 or 2 minutes of scoring time. The informant based his or her responses on interaction with and knowledge of the patient; we aimed to select as informant the person who had the most regular contact with the patient in everyday situations. In this study, all of the informants were staff members. The informant ratings were completed within 7 days of the patient rating. The interviewer’s rating combined information from the two interviews with the interviewer’s own observations of the patient.

After the 18 items were rated, a global rating was determined separately by the patient, the informant, and the interviewer. For the patient and informant interviews, the global rating reflects the overall impression of the patient’s level of cognitive difficulty in the 18 areas of cognition assessed and is rated 1–10. The interviewer global ratings were highly correlated with the mean of the 18 items (r=0.88, df=57, p<0.001). The interviewer global ratings were more highly correlated with the global ratings based on the informant interview (r=0.81, df=57, p<0.001) than with those based on the patient interview (r=0.24, df=57, p=0.07), suggesting that when information from the patient and the informant was discrepant, interviewers tended to favor the informant’s opinion. Furthermore, multiple regression analyses indicated that the informant global ratings accounted for significant variance in interviewer global ratings (R²=0.65, F=107.56, df=1, 57, p<0.001), but the patient global ratings accounted for no additional variance (R² change=0.00, F=0.52, df=1, 57, n.s.).
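As a worked check (an illustration, not part of the original analysis), the reported statistics can be related through the standard conversions for a correlation coefficient with df = n − 2 and for a regression R² with df₁ and df₂ degrees of freedom. For the patient–interviewer correlation (r=0.24, df=57) and the informant regression (R²=0.65, df=1, 57):

```latex
t = r\sqrt{\frac{df}{1 - r^{2}}}
  = 0.24\sqrt{\frac{57}{1 - 0.24^{2}}}
  \approx 1.87,
\qquad
F = \frac{R^{2}/df_{1}}{\left(1 - R^{2}\right)/df_{2}}
  = \frac{0.65/1}{0.35/57}
  \approx 105.9
```

With 57 degrees of freedom, t ≈ 1.87 falls between the two-tailed critical values for α=0.10 (about 1.67) and α=0.05 (about 2.00), in line with the reported p=0.07. The gap between F ≈ 105.9 and the reported F=107.56 reflects rounding of R²; the reported F corresponds to an unrounded R² of about 0.654.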

Interrater Reliability

Two interviewers (M.P. and T.M.W.) both participated in the interviews of the same 11 patients. Intraclass correlations between the ratings generated by the two interviewers were calculated to assess the interrater reliability of the 18 SCoRS items. Thirteen of the 18 items had an intraclass correlation coefficient (ICC) of 1.00, indicating absolute agreement between the two interviewers for all 11 patients. The ICCs for four of the other five items were greater than 0.90, and the lowest ICC for an item (“Do you have difficulty walking as fast as you would like?”) was 0.81.
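The paper does not say which form of the intraclass correlation was computed. Because the text speaks of “absolute agreement”, the following sketch assumes the two-way random-effects, absolute-agreement, single-rater form (Shrout and Fleiss ICC(2,1)); other ICC forms would give somewhat different values, and the function name `icc_2_1` is hypothetical.

```python
from typing import Sequence


def icc_2_1(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` has one row per patient and one column per rater.
    """
    n = len(ratings)      # number of patients (targets)
    k = len(ratings[0])   # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete n x k table with n >= 2 and k >= 2")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Partition the total sum of squares into patients, raters, and residual.
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denom = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denom == 0:
        # Every rating identical: no between-patient variance, ICC undefined.
        raise ValueError("ICC is undefined when all ratings are identical")
    return (ms_rows - ms_error) / denom


print(icc_2_1([[1, 1], [2, 2], [3, 3], [4, 4]]))            # 1.0
print(round(icc_2_1([[1, 2], [2, 2], [3, 3], [4, 4]]), 3))  # 0.903
```

Identical ratings from both interviewers give 1.0, matching the paper’s reading of an ICC of 1.00 as absolute agreement; a single one-point disagreement among four toy patients lowers the value to about 0.90. Note that an item on which every patient received the same rating has no between-patient variance, so its ICC is undefined rather than 1.00.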

Internal Consistency

Internal consistency of the scale, calculated with Cronbach’s alpha coefficient, was 0.79. Examination of the contribution of individual items to the total scale score indicated that deleting any single item would have improved the overall internal consistency of the scale by no more than 0.01.
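Cronbach’s alpha is k/(k − 1) · (1 − Σσ²ᵢ/σ²ₜₒₜₐₗ), where σ²ᵢ are the item variances and σ²ₜₒₜₐₗ is the variance of total scores. The sketch below computes it, together with the “alpha if item deleted” check described above. The function names are hypothetical, and the sketch assumes complete data (no n/a items), since the paper does not say how missing items entered this analysis.

```python
from statistics import variance
from typing import List, Sequence


def cronbach_alpha(data: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items table (no missing values)."""
    k = len(data[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in data]) for j in range(k)]
    # Sample variance of the respondents' total scores.
    total_var = variance([sum(row) for row in data])
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def alpha_if_deleted(data: Sequence[Sequence[float]]) -> List[float]:
    """Alpha recomputed with each item omitted in turn (needs >= 3 items)."""
    k = len(data[0])
    return [
        cronbach_alpha([[x for j, x in enumerate(row) if j != drop] for row in data])
        for drop in range(k)
    ]
```

For the SCoRS, `data` would be a patients × 18 table of item ratings; the paper’s item check corresponds to confirming that no value returned by `alpha_if_deleted(data)` exceeds the full-scale alpha by more than 0.01.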

Part 2: Validity Assessments

To examine the validity of the SCoRS, we determined its relationship to measures of cognitive and functional outcome in schizophrenia. These measures, and the analyses performed to estimate the validity of the SCoRS, are described in the following paragraphs.

BACS

The BACS takes approximately 30 minutes and is intended for easy administration and scoring by nonpsychologists (15). It is specifically designed to measure treatment-related improvements and includes alternate forms. The BACS has high test-retest reliability and is as sensitive to cognitive dysfunction in schizophrenia as standard batteries requiring 2.5 hours of testing time (15). The BACS includes brief assessments of reasoning and problem solving, verbal fluency, attention, verbal memory, working memory, and motor speed.

List Learning Test (verbal memory)

Patients are presented with 15 words and then asked to recall as many as possible. This procedure is repeated five times. The outcome measure is the total number of words recalled. There are two alternate forms.

Digit Sequencing Task (working memory)

Patients are presented with clusters of numbers of increasing length (and thus increasing difficulty) and are asked to tell the experimenter the numbers in order, from lowest to highest. The outcome measure is the total number of correct items.
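The scoring rule above can be written as a small function. This is a hypothetical sketch: the record format, the function name, and the example trials are illustrative only, and the actual BACS stimuli, number of trials, and any discontinuation rule are not described here.

```python
from typing import List, Sequence, Tuple

# Hypothetical record format: (numbers as presented, numbers as reported).
Trial = Tuple[Sequence[int], Sequence[int]]


def score_digit_sequencing(trials: Sequence[Trial]) -> int:
    """Count trials on which the presented numbers were reported lowest to highest."""
    return sum(1 for presented, response in trials
               if list(response) == sorted(presented))


example: List[Trial] = [
    ([3, 1], [1, 3]),              # correct
    ([5, 2, 9], [2, 5, 9]),        # correct
    ([8, 4, 6, 1], [1, 4, 8, 6]),  # incorrect: 6 and 8 reversed
]
print(score_digit_sequencing(example))  # 2
```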

Token Motor Task (motor speed)

Patients are given 100 plastic tokens and asked to place them into a container as quickly as possible for 60 seconds. The outcome measure is the total number of tokens placed in the container.

Category Instances Test (semantic fluency)

Patients are given 60 seconds to name as many words as possible within the category of supermarket items. The outcome measure is the total number of unique words generated.

Controlled Oral Word Association Test (letter fluency)

In two separate trials, patients are given 60 seconds to generate as many words as possible that begin with the letters F and S. The outcome measure is the total number of unique words generated.

Tower of London Test (reasoning and problem solving)

Patients look at two pictures simultaneously. Each picture shows three different-colored balls arranged on three pegs, with the balls in a different arrangement in each picture. Patients are told the rules of the task and are asked to state the minimum number of moves required to make the arrangement of balls in one picture identical to that in the other picture. The outcome measure is the number of trials on which the correct response is provided. There are two alternate forms.
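The answer patients must give, the minimum number of moves between two arrangements, can be computed by breadth-first search over peg configurations. The sketch below is illustrative only: it assumes the classic Tower of London layout in which the three pegs hold at most three, two, and one ball, respectively (the text above does not give peg capacities), and that only the top ball on a peg can be moved. The names `State` and `min_moves` are hypothetical.

```python
from collections import deque
from typing import Sequence, Tuple

# Each peg lists its balls from bottom to top, e.g. (("R", "G"), ("B",), ()).
State = Tuple[Tuple[str, ...], ...]


def min_moves(start: State, goal: State, capacities: Sequence[int] = (3, 2, 1)) -> int:
    """Fewest single-ball moves that turn `start` into `goal` (breadth-first search)."""
    if sorted(b for peg in start for b in peg) != sorted(b for peg in goal for b in peg):
        raise ValueError("start and goal must contain the same balls")
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        state, depth = queue.popleft()
        for src, peg in enumerate(state):
            if not peg:
                continue  # nothing to move from an empty peg
            ball = peg[-1]  # only the top ball can be moved
            for dst, target in enumerate(state):
                if dst == src or len(target) >= capacities[dst]:
                    continue  # same peg, or destination peg is full
                pegs = list(state)
                pegs[src] = peg[:-1]
                pegs[dst] = target + (ball,)
                nxt = tuple(pegs)
                if nxt == goal:
                    return depth + 1  # BFS: first time reached is the minimum
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, depth + 1))
    raise ValueError("goal arrangement is not reachable")


start = (("R", "G"), ("B",), ())
print(min_moves(start, (("R", "B"), ("G",), ())))  # 3
```

Breadth-first search is a natural choice here because every move has the same cost, so the first time the goal arrangement is generated is guaranteed to be via a shortest sequence of moves.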

Symbol Coding (attention and processing speed)

For 90 seconds, patients write as quickly as possible the numerals 1–9 that match the symbols on a response sheet. The outcome measure is the total number of correct responses.

Composite Score

A composite score is calculated by comparing each patient’s performance on each measure to the performance of a healthy comparison group (15). The standardized z scores from each test are summed, and the composite score is the z score of that sum. The composite score has high test-retest reliability in patients with schizophrenia and healthy comparison subjects (ICC>0.80).
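The two-stage standardization described above can be sketched as follows. The normative parameters actually used for the BACS come from the healthy comparison sample in reference 15; this sketch instead estimates them from whatever comparison data are supplied, uses sample standard deviations, and assumes higher raw scores mean better performance on every test (true of each BACS outcome listed above, all of which count words, items, tokens, trials, or correct responses). The function name and toy data are hypothetical.

```python
from statistics import mean, stdev
from typing import Callable, Sequence


def make_composite_scorer(
    healthy: Sequence[Sequence[float]],
) -> Callable[[Sequence[float]], float]:
    """Build a composite scorer from healthy comparison data.

    `healthy` has one row per comparison subject and one column per test.
    """
    n_tests = len(healthy[0])
    columns = [[row[j] for row in healthy] for j in range(n_tests)]
    test_means = [mean(c) for c in columns]
    test_sds = [stdev(c) for c in columns]

    def sum_of_z(scores: Sequence[float]) -> float:
        # z score on each test relative to the healthy group, then summed.
        return sum((x - m) / s for x, m, s in zip(scores, test_means, test_sds))

    # The sum of z scores is itself standardized against the healthy group's sums.
    healthy_sums = [sum_of_z(row) for row in healthy]
    sum_mean, sum_sd = mean(healthy_sums), stdev(healthy_sums)

    def composite(scores: Sequence[float]) -> float:
        if len(scores) != n_tests:
            raise ValueError(f"expected {n_tests} test scores")
        return (sum_of_z(scores) - sum_mean) / sum_sd

    return composite


# Toy comparison data (two tests, three subjects), for illustration only.
composite = make_composite_scorer([[10, 20], [12, 22], [14, 24]])
print(composite([8, 18]))  # -2.0
```

The second standardization matters because the summed z scores of healthy subjects average zero but do not have unit variance; their spread depends on how strongly the tests correlate with one another. Negative composite values indicate performance below the comparison group.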

ILSI

The ILSI is a standard functional assessment instrument measuring the extent to which individuals are able to competently perform a broad range of skills important for successful community living (16). The scale includes 89 items covering 11 subscales: personal management, hygiene and grooming, clothing, basic skills (e.g., personal phone number), interpersonal skills, home maintenance, money management, cooking, resource utilization, general occupational skills, and medication management. Data are obtained from an interview in which the patient is asked to provide information about his or her performance. Each item is rated according to the extent to which the individual is able to perform the skill, as well as the extent of assistance or guidance required. The items of this scale have strong internal consistency (Cronbach’s alpha=0.82). An overall score was created for the ILSI by calculating the mean of all 11 subscales.

UPSA

The UPSA assesses the skills necessary for functioning in the community by asking patients to perform relevant tasks and rating their performance (17). Skills are assessed in five areas: household chores, communication, finance, transportation, and planning recreational activities. As an example of a household chore task, patients are given a recipe for rice pudding and asked to prepare a written shopping list. They are presented with an array of items in a mock grocery store (e.g., milk, vanilla, cereal, soup, rice, canned tuna, cigarettes, a can of beer, crackers), asked to pick out the items they would need to prepare the pudding, and told to write down the items they would still need to buy. Points are given for each correct item on the shopping list. Completing the tasks in all five areas takes about 30 minutes. The UPSA has been found to have excellent reliability, including good test-retest stability, and is highly sensitive to differences between patients with schizophrenia and healthy comparison subjects (17). An overall score was calculated for the UPSA by taking the mean of the scores in each area.

In order to keep knowledge of a patient’s cognitive performance from biasing SCoRS and ILSI ratings, the tester who assessed cognitive performance with the BACS and functional capacity with the UPSA was always a different person from the interviewer who assessed cognition with the SCoRS and real-world functioning with the ILSI. In addition, raters remained blind to the results of the measures they did not administer.

Results

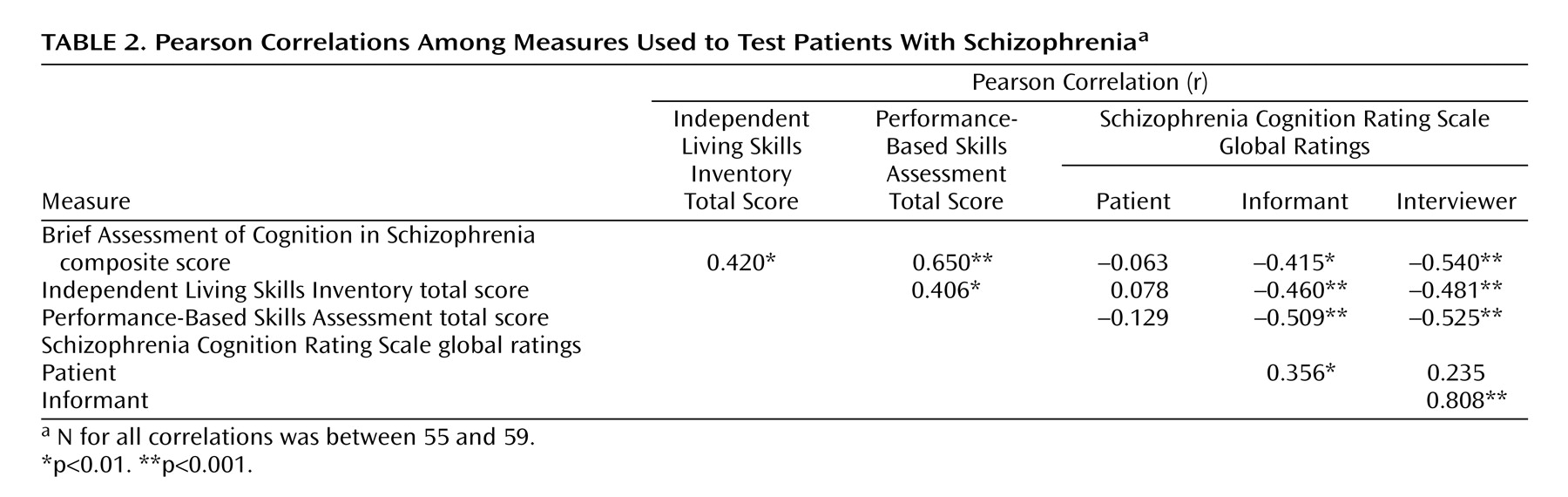

Pearson correlations were calculated between the SCoRS global ratings and three external validators: a measure of cognition (BACS), a performance-based assessment of functioning (UPSA), and a real-world assessment of functioning (ILSI). The strongest correlations with the validators were found for the interviewer’s global rating. For none of the validators did the patient or informant global ratings account for significant variance beyond that accounted for by the interviewer global rating. Therefore, all subsequent analyses report only the relationships with the interviewer’s global rating. The SCoRS interviewer global rating was significantly correlated with the BACS composite score, the UPSA total score, and the ILSI total score (Table 2).
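A Pearson correlation between the SCoRS interviewer global rating and a validator can be computed as in the plain-Python sketch below (statistics packages such as SciPy’s `scipy.stats.pearsonr` also return the p-value). The toy data are invented for illustration. Because higher SCoRS ratings indicate greater impairment whereas higher BACS scores indicate better performance, agreement between these two measures appears as a negative correlation.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two paired samples."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equal-length samples with at least 3 pairs")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when either variable is constant")
    return sxy / sqrt(sxx * syy)


# Toy data only: higher rating = more impairment, higher composite = better performance.
ratings = [1, 2, 3, 4, 5]
composites = [1.0, 0.5, 0.2, -0.4, -1.1]
print(round(pearson_r(ratings, composites), 2))  # negative: impairment tracks worse scores
```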

Stepwise regression analyses were conducted to determine the unique variance in real-world functioning accounted for by the BACS, UPSA, and SCoRS ratings. These analyses suggested that the SCoRS interviewer global rating accounted for more unique variance in real-world functioning, as measured by the ILSI, than did the BACS and UPSA. When the BACS and UPSA were entered in the first step of a stepwise linear regression predicting ILSI scores, they accounted for a significant proportion of the variance (R²=0.187; F=6.11, df=2, 53, p=0.007). In the second step, the SCoRS accounted for significant additional variance in ILSI scores (R² change=0.077; F=5.41, df=1, 52, p=0.02). When the order of entry was reversed, the SCoRS accounted for significant variance in ILSI scores in the first step (R²=0.225; F=15.68, df=1, 54, p<0.001), and in the second step the BACS and UPSA did not account for significant additional variance beyond the SCoRS (R² change=0.039; F=1.37, df=2, 52, n.s.).
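The F statistic at each step of these hierarchical regressions follows from the R² values alone, via the standard F test for an increment in R². In the sketch below the function name is hypothetical, and the sample size of 56 is inferred from the reported degrees of freedom (df=1, 54 for a one-predictor model implies 56 patients with complete data) rather than stated in the text.

```python
def f_change(r2_reduced: float, r2_full: float, n: int,
             k_reduced: int, k_full: int) -> float:
    """F statistic for the increase in R^2 when predictors are added to an OLS model.

    k_reduced and k_full count predictors (excluding the intercept); for the
    first step of a hierarchical regression use r2_reduced=0.0, k_reduced=0.
    Degrees of freedom are (k_full - k_reduced, n - k_full - 1).
    """
    df1 = k_full - k_reduced
    df2 = n - k_full - 1
    if df1 <= 0 or df2 <= 0:
        raise ValueError("need k_full > k_reduced and n > k_full + 1")
    return ((r2_full - r2_reduced) / df1) / ((1.0 - r2_full) / df2)


# R^2 values as reported (rounded to three decimals); n = 56 is inferred from the df.
n = 56
print(f"{f_change(0.0, 0.187, n, 0, 2):.2f}")            # BACS + UPSA first: 6.10
print(f"{f_change(0.187, 0.187 + 0.077, n, 2, 3):.2f}")  # SCoRS added: 5.44
print(f"{f_change(0.0, 0.225, n, 0, 1):.2f}")            # SCoRS first: 15.68
print(f"{f_change(0.225, 0.225 + 0.039, n, 1, 3):.2f}")  # BACS + UPSA added: 1.38
```

These values reproduce the reported F statistics (6.11, 5.41, 15.68, and 1.37) to within the rounding of the published R² values.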

Discussion

An interview-based assessment of cognition, the SCoRS, meets two of the criteria established by the FDA NIMH MATRICS panel for coprimary outcome measures for cognitive enhancement trials in schizophrenia. SCoRS global ratings were strongly correlated with cognitive performance, as measured by the BACS, and strongly correlated with real-world functioning, as measured by the ILSI. The third criterion for coprimary outcome measures, test-retest reliability, was not assessed in this cross-sectional study but will be assessed in a longitudinal study. However, the SCoRS was shown in this study to have excellent interrater reliability.

In contrast to the current study, previous studies have not found a strong relationship between neurocognitive performance and ratings of cognition (11–14). However, these studies relied on either self-report or clinician report of patients’ cognitive functions, and none incorporated data from both patients and informants into ratings determined by a systematic set of queries posed and then rated by an interviewer. The SCoRS methodology bases the assessment of cognitive functioning on the opinions of the patient and an informant, together with the interviewer’s decisions about which source of information is more reliable for each item and for the global rating. The data from this study suggest that when the patient’s and informant’s ratings were discrepant, interviewers were more likely to rely on the informant’s ratings when determining the global ratings. In fact, consistent with previous work (12–14), the patients’ ratings did not account for significant variance beyond the informants’ ratings for any of the objective validators: the cognitive performance score, the functional capacity score, or the real-world functioning score.

These data suggest that a patient interview might not be a necessary component of an interview-based assessment of cognition. On the other hand, although an informant was available for every patient in this study, these results suggest that interview-based assessments of patients who do not have someone who observes them regularly might miss crucial information. This dependence on informants is a weakness of the interview-based methodology that will make it difficult to include some patients with schizophrenia in clinical trials. Work is currently under way to develop more extensive interviews for patients who do not have available informants.

The SCoRS interviewer global ratings were strongly related to cognitive performance. This validity criterion is clearly the most important in determining that the SCoRS assesses behaviors related to cognition. Although the methods of assessing cognition by interview (with the SCoRS) and by performance (with the BACS) are very different, and the two measures were always administered by different raters blind to the score on the other measure, there was considerable shared variance between these two outcome measures, suggesting that they measure a common element of cognition. These data suggest that the SCoRS meets this criterion for a potentially useful coprimary measure for clinical trials of potentially cognition-enhancing drugs for patients with schizophrenia.

The importance of cognition in schizophrenia hinges on its relationship to real-world functioning. The key validator of a cognitive scale is thus its relationship to a measure of real-world functioning. In this study, the SCoRS interviewer global ratings were strongly related to independent functioning, as measured by the ILSI. In fact, the SCoRS was more strongly correlated with real-world functioning than were either the cognitive performance score (BACS) or the performance-based measure of functional capacity (UPSA), and these performance measures did not account for additional variance in ILSI scores beyond the SCoRS. These data indicate that the SCoRS measures aspects of cognition that are relevant to real-world functioning.

The higher correlations of real-world functioning with the SCoRS than with performance-based measures may be due in part to content similarity or method variance. Some SCoRS items ask the informant for information about the performance of functional skills, whereas the ILSI rates real-world outcome; this information was collected from the same sources and focuses on the same content. In contrast, the two performance measures do not explicitly reference real-world outcomes, and factors that jointly influence performance on both, such as social or test-taking anxiety, may be operative. However, method variance is unlikely to be the only explanation for the strength of these correlations. In other studies, cognitive and functional skills performance have been found to be highly correlated in patients with schizophrenia, whereas both of these performance domains were essentially unrelated to patient self-reports of quality of life or functional disability (20). In contrast, the SCoRS ratings were associated with performance-based validation measures as well as with real-world outcomes, providing validation evidence that crosses methods of data collection.

One weakness of this study’s methodology was that all patients were living in a rehabilitation setting in which their cognition-related behavior could be observed by staff members, who served as informants. Further, data on the frequency of informant contact were not collected. Treatment trials testing cognitive enhancement with new compounds are likely to involve outpatients who have less frequent contact with the individuals who will serve as informants (10). These informants may provide less reliable information that is more easily biased by noncognitive factors, such as changes in mood symptoms. Thus, the reliability and validity estimates for the SCoRS reported in this study are likely to be higher than can be expected in a typical cognitive enhancement clinical trial.

In sum, the SCoRS is a new interview-based assessment of cognition designed to measure the severity of cognitive deficits as viewed by patients, informants, and interviewers. The data from this study suggest that the instrument is valid, in that its global ratings are strongly correlated with cognitive performance, functional outcome, and functional capacity. Although test-retest reliability has not yet been determined, the interrater reliability of the measure is very high. Several features of the SCoRS procedure may contribute to its greater validity compared with previous scales: systematic queries, use of informant reports, and ratings based on a distillation of all sources of information. The SCoRS may serve as a coprimary measure for FDA trials of cognitive-enhancing medications in patients with schizophrenia. It also may be useful to clinicians as a means of collecting data about their patients’ neurocognitive deficits and may thus increase the extent to which clinicians consider neurocognitive deficits as treatment targets.