Although emotion processing deficits have been implicated in schizophrenia since its original description (1), with symptoms including a “flat” and “inappropriate” affect, the extent and nature of emotion processing impairments in schizophrenia are still unclear. Several studies have demonstrated that patients with schizophrenia have deficits in processing emotional facial expressions (2, 3), and these studies have included patients from a wide variety of cultural backgrounds (4). The deficits seem to affect mainly the ability to name and discriminate expressions (5, 6). Furthermore, performance correlates with symptom severity (7–9), which indicates that deficits in discriminating emotional aspects of facial expressions have clinical significance. Finally, functional imaging studies have shown abnormal activation in patients with schizophrenia during emotion processing tasks (10, 11). Comparing the results of behavioral and functional imaging studies is difficult because the tasks differ. Behavioral studies use complex emotion discrimination tasks, whereas functional imaging studies use simple response modalities because of technical limitations and the need to probe specific neural systems. Few tasks have been used both in psychometric assessment and in functional imaging.

Notwithstanding the increasing evidence for emotion processing deficits in schizophrenia, it remains controversial whether a differential deficit can be established for processing emotional compared to nonemotional features (7, 12–17). Notably, neutral and happy faces elicited different scan path patterns in patients (18). However, studies varied in the extent to which emotion discrimination and control tasks were equated, and the issue has been further complicated by the use of varying ranges of emotions and intensities. A differential deficit has been reported in patients’ recognition accuracy for disgusted, fearful, and neutral expressions (8). However, different emotions were intermixed in that study, and therefore specificity of emotion discrimination could be assessed only for neutral expressions (8). Such a design does not permit a clear separation between deficits in sensitivity and deficits in specificity.

In the present study, we sought to examine the ability of patients with schizophrenia, compared to healthy individuals, to discriminate emotional facial expressions. We used the same facial stimuli to examine performance in two nonemotional domains: age discrimination and recognition memory. Studies examining episodic memory in schizophrenia (19–21) have consistently indicated deficits (22), including deficits in face memory (23). Age discrimination has been used as a control task in earlier studies (7, 10, 11, 24–26). We aimed to compare deficits in these nonemotional aspects of face processing with the deficit in identifying emotional expressions. An additional aim was to examine whether the deficit in facial emotion processing has specific performance characteristics with respect to sensitivity (correctly identifying a target emotion) and specificity (correctly rejecting an expression that does not show the target emotion). Therefore, we used a blocked design in which the target emotion differs for each block, so that specificity can be determined for each emotion separately. Finally, the task was designed so that it could be administered during functional imaging with both blocked and event-related analysis. To equate and simplify responses, all tasks required a binary forced-choice response on one of two keys.

Method

Subjects

The study was performed on 20 (10 male, 10 female) inpatients with a DSM-IV diagnosis of schizophrenia and 20 (10 male, 10 female) healthy volunteers who were matched to the patients by gender, age, and years of parental education (within 2 years of the mean). After the procedure was fully explained, written informed consent was obtained from all subjects. The mean age of the patients was 37.7 years (SD=12.6). They had a mean parental education of 9.3 years (SD=3.5). The local institutional review board approved the protocol.

Symptom severity was assessed with the Positive and Negative Syndrome Scale (27). Mean ratings were 13.0 (SD=7.1) on the positive symptom scale and 16.9 (SD=7.7) on the negative symptom scale. All patients were taking antipsychotic medication: 12 were receiving atypical agents, four typical agents, and three both. One patient’s medication was unknown because he was participating in a double-blind psychopharmacology study. Patients with any other psychiatric disorder or neurological illness were excluded. The patients were free of recreational drugs and alcohol, as ascertained by regular urine drug screenings.

The healthy volunteers were recruited through local advertisements, followed by a detailed screening. Inclusion was based on the absence of any neurological disease or psychiatric disorder, including current substance abuse. The mean age of the healthy comparison subjects was 37.2 years (SD=11.4), and their mean parental education was 9.9 years (SD=3.0). Two-tailed t tests confirmed that the patients and the healthy participants did not differ in age (t=0.79, df=19, n.s.) or parental education (t=–0.37, df=19, n.s.).

Procedure

The participants were presented with a modified version of the Facial Emotions for Brain Activation Test (25) by using the PRESENTATION software package (Neurobehavioral Systems Inc., San Francisco). The stimuli consist of color photographs of actors and actresses of various ethnicities (African American, Caucasian, Asian, and Hispanic). The detailed task construction is reported elsewhere (25). Briefly, an original pool of photographs was presented to a group of raters (N=64) who judged the displayed emotion and its intensity in each picture, and the pictures with the highest identification accuracy were selected. In the emotion discrimination task, the photographs express one of four emotions (happiness, sadness, anger, fear) or no emotion (neutral). Disgust was omitted because it had been difficult to judge for both patients and comparison subjects in an earlier study (8), perhaps because of ambiguous facial expressions.

Task

The discrimination task consisted of four runs, one for each target emotion. The order of runs was randomly assigned across subjects. Each run consisted of 120 faces (32 targets, 32 nontargets, and 56 neutral faces). The presentation order was fixed within each run, but the response side (a left or right button press for the correct answer) was randomly assigned across subjects. Each stimulus was presented for 3 seconds without an interstimulus interval. Within each run, blocks of face presentations alternated with crosshair (baseline) blocks. Each face presentation block lasted 90 seconds, and each crosshair block lasted 24 seconds. Although longer than needed for blocked analyses, the 90-second blocks were chosen to permit subsequent event-related (bottom-up) analysis of cerebral correlates of specific emotions. The 32 nontarget faces were slightly unequally distributed across the three nontarget emotions (N=11, 11, and 10), with the distribution varying across trials so that all emotion categories were affected comparably. This was necessary to keep the number of presentations of each face (emotional as well as neutral) equal during emotion discrimination, i.e., all targets were presented exactly five times before the facial recognition phase. The participants had to decide whether the presented face showed the respective target emotion or any other emotion/no emotion, and they responded by pressing the left or right control button on a computer keyboard.

There were two cognitive control tasks. The age discrimination task consisted of 120 stimuli divided into four blocks, with face and crosshair presentations in alternating order. Sixty-four of the 120 faces were emotional, and 56 were neutral. Again, each stimulus was presented for 3 seconds with no interstimulus interval. In this task, the subjects were asked to decide, by pressing a button, whether the presented face was older or younger than 30 years. A similar control task has proven adequate and effective in earlier studies (24–27). Finally, a facial recognition task was administered in which the faces presented beforehand in the discrimination tasks were shown again, intermixed with new faces; however, all facial expressions in this task were neutral. As in the discrimination tasks, face and crosshair phases alternated, with the memory blocks lasting 108 seconds and the crosshair blocks 24 seconds. The subjects had to judge for each face whether or not they had seen it before in the discrimination task by pressing the right or left control button, respectively. The task consisted of 144 faces: 96 old faces that had been shown before (targets) and 48 new faces (nontargets).
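The stimulus counts and block durations above can be cross-checked with simple arithmetic: at 3 seconds per face with no interstimulus interval, a 90-second block holds 30 faces and a 108-second block holds 36. The minimal Python sketch below uses only figures stated in the text (the helper names `faces_per_block` and `n_blocks` are hypothetical) and confirms that each 120-face emotion run and the 144-face recognition phase divide evenly into four face blocks. The 90-second duration of the age discrimination blocks is inferred here from 120 faces in four blocks; it is not stated in the text.

```python
# Cross-check of the trial counts and block durations stated in the Task section.
# Only figures given in the text are used; the helper names are hypothetical.

STIMULUS_S = 3  # each face is shown for 3 s with no interstimulus interval


def faces_per_block(block_s: int, stimulus_s: int = STIMULUS_S) -> int:
    """Number of faces that fill one face-presentation block exactly."""
    if block_s % stimulus_s:
        raise ValueError("block duration is not a whole number of stimuli")
    return block_s // stimulus_s


def n_blocks(total_faces: int, block_s: int) -> int:
    """Number of face blocks for a run, requiring that no block is partial."""
    per_block = faces_per_block(block_s)
    if total_faces % per_block:
        raise ValueError("faces do not divide evenly into blocks")
    return total_faces // per_block


# Emotion discrimination run: targets + nontarget emotional faces + neutral faces.
emotion_faces = 32 + 32 + 56
nontarget_split = (11, 11, 10)  # three nontarget emotions, slightly unequal

# Age discrimination: 64 emotional + 56 neutral faces in four blocks.
age_faces = 64 + 56
age_block_s = (age_faces // 4) * STIMULUS_S  # inferred, not stated in the text

# Recognition phase: 96 old faces (targets) + 48 new faces (nontargets).
recognition_faces = 96 + 48

print(emotion_faces, sum(nontarget_split), n_blocks(emotion_faces, 90))  # 120 32 4
print(age_faces, age_block_s)                                            # 120 90
print(recognition_faces, n_blocks(recognition_faces, 108))               # 144 4
```

The check raises an error rather than rounding, so any inconsistency between face counts and block durations would surface immediately.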

Statistical Analysis

The dependent measure of performance in emotion discrimination for each emotion, age discrimination, and recognition was percent correct responses. To analyze performance in emotion discrimination in further detail, we computed sensitivity (true positives/[true positives plus false negatives]) and specificity (true negatives/[true negatives plus false positives]) for each emotion condition. The true positives included the number of targets correctly recognized in each run, whereas the true negatives consisted of the number of nontargets correctly classified as such. False positive errors for each run (emotion) were calculated as the number of times a nontarget face was rated as a target out of the total number of nontargets per run. False negative errors were the number of targets falsely categorized as nontargets. Sensitivity is defined as the probability of correctly identifying a target, whereas specificity refers to the probability of correct rejection of a nontarget.
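The definitions above can be expressed as a short calculation per run. The Python sketch below is illustrative only: the `RunCounts` container is hypothetical, and the counts are invented for a single 120-face run, treating every face that does not show the target emotion as a nontarget (an assumption; the text does not spell out how neutral faces entered the nontarget count). By construction, specificity and the false positive rate sum to 1, which is why the false positive ANOVA decomposes the specificity effect.

```python
from dataclasses import dataclass


@dataclass
class RunCounts:
    """Response counts for one emotion discrimination run (hypothetical container)."""
    true_pos: int   # targets correctly identified as the target emotion
    false_neg: int  # targets wrongly rejected as nontargets
    true_neg: int   # nontargets correctly rejected
    false_pos: int  # nontargets wrongly accepted as the target emotion

    def sensitivity(self) -> float:
        # true positives / (true positives + false negatives)
        return self.true_pos / (self.true_pos + self.false_neg)

    def specificity(self) -> float:
        # true negatives / (true negatives + false positives)
        return self.true_neg / (self.true_neg + self.false_pos)

    def false_positive_rate(self) -> float:
        # nontargets rated as targets out of all nontargets (= 1 - specificity)
        return self.false_pos / (self.true_neg + self.false_pos)

    def percent_correct(self) -> float:
        total = self.true_pos + self.false_neg + self.true_neg + self.false_pos
        return 100 * (self.true_pos + self.true_neg) / total


# Invented counts: 32 targets and 88 nontargets in one 120-face run (not study data).
run = RunCounts(true_pos=28, false_neg=4, true_neg=80, false_pos=8)
print(f"{run.sensitivity():.3f} {run.specificity():.3f} {run.false_positive_rate():.3f}")
# prints "0.875 0.909 0.091"
print(run.percent_correct())  # 90.0
```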

Because the numbers of emotion discrimination tasks (four) and nonemotional tasks (two) were unequal, we used generalized estimating equation modeling (SAS Proc Genmod, SAS Institute, Cary, N.C.) rather than multivariate analysis of variance. The generalized estimating equation approach is also more robust against violation of the sphericity assumption. Diagnosis and sex were grouping variables, and we tested the significance of a diagnosis-by-task type interaction term contrasting emotional (four emotions) with nonemotional (age discrimination, facial recognition) tasks, with performance defined as percent correct. To contrast sensitivity and specificity within the emotional tasks, the generalized estimating equation tested a diagnosis × sensitivity versus specificity interaction term, with group (patients versus comparison subjects) and gender as between-subject factors and emotion as a within-subject factor. For the detailed analysis of false positives suggested by the significant interaction, we performed an analysis of variance (ANOVA) (SAS Proc GLM, SAS Institute, Cary, N.C.) on the false positive errors, with diagnosis and sex as between-subject factors and emotion as a within-subject factor.

Results

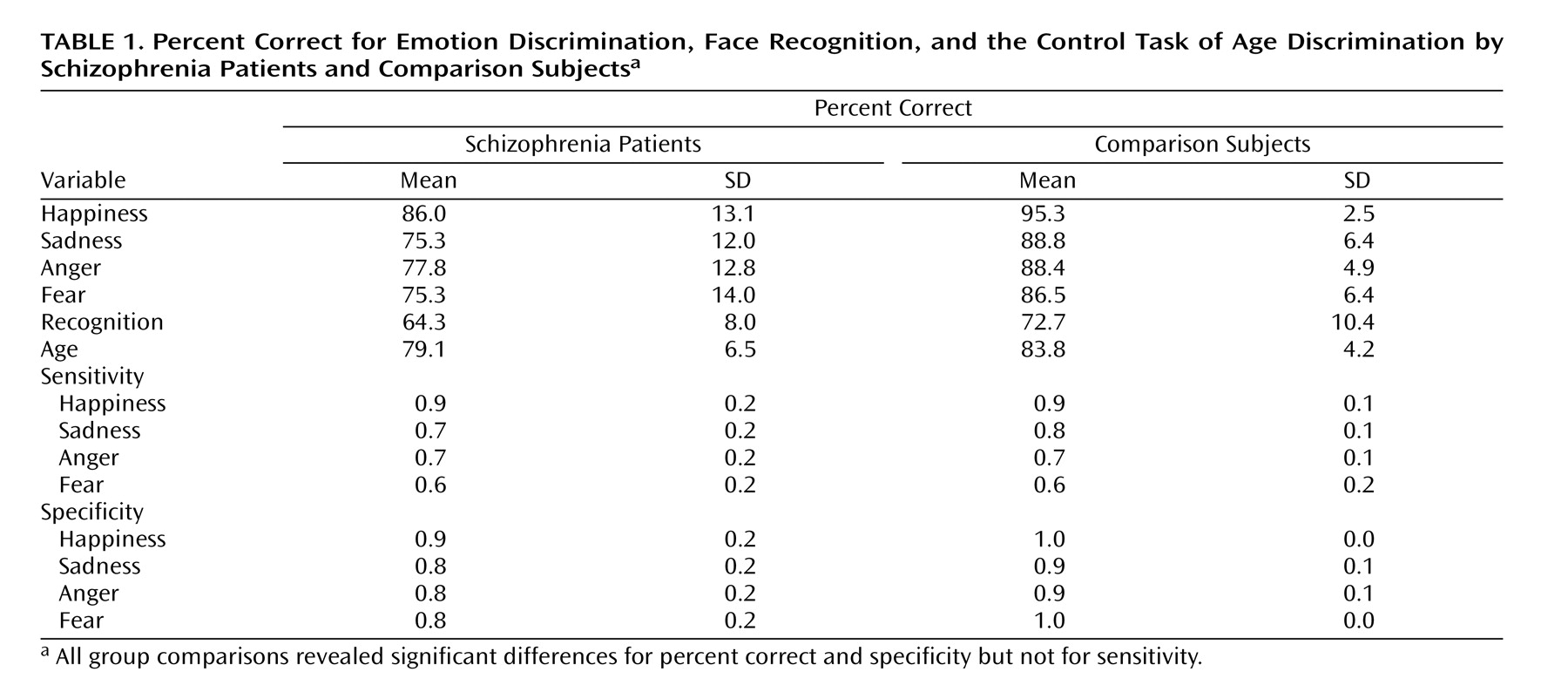

Figure 1 shows the means of patients and comparison subjects for the four emotion discrimination tasks and the two cognitive tasks. Both male and female patients were impaired across tasks, but the difference appears more pronounced for the emotional tasks. Indeed, the generalized estimating equation revealed a significant group effect (χ²=15.18, df=1, p<0.0001) and a diagnosis × emotional versus nonemotional task interaction (χ²=21.06, df=2, p<0.0001). No main effects or interactions involving gender were significant. Post hoc Scheffé comparisons within the emotion tasks revealed significant group differences for anger (diffcrit=6.34), fear (diffcrit=7.07), happiness (diffcrit=6.10), and sadness (diffcrit=6.33). Group differences in the percentage of correct responses were also significant for the recognition task (F=8.46, df=1, 36, p=0.006) and the age discrimination task (F=7.38, df=1, 36, p=0.01) (see also Table 1).

As Chapman and Chapman (28) have proposed, significant diagnosis-by-measure interactions need to be scrutinized for level of difficulty and true score variance before one can safely conclude that a differential deficit is present. Patients may show impairment on one task because it is more difficult, not because it taps a different ability. To evaluate task difficulty, we compared percent correct on the age discrimination and facial recognition tasks with percent correct on the emotion discrimination task in the healthy comparison subjects. Contrary to a task-difficulty artifact, both control tasks were more difficult than the emotion discrimination task: age discrimination (t=4.86, df=19, p=0.0001) and facial recognition (t=6.63, df=19, p=0.0001). This strengthens the evidence for a differential deficit in emotion discrimination: patients were more impaired on the easier emotion discrimination task, whereas they processed a nonemotional aspect of faces and memorized faces at a level closer to that of the comparison subjects.
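The task-difficulty check rests on a paired comparison within the healthy comparison subjects (df=19 for 20 subjects, i.e., n–1). The Python sketch below computes a paired-samples t statistic; the function name `paired_t` is hypothetical, the scores are invented for five hypothetical subjects and do not reproduce the study data, and the p value, which requires the t distribution (available, for example, through `scipy.stats.ttest_rel`), is not computed here.

```python
import math
from statistics import mean, stdev


def paired_t(x: list[float], y: list[float]) -> tuple[float, int]:
    """Paired-samples t statistic for mean(x - y) and its degrees of freedom (n - 1)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)  # sample SD of differences (n - 1 denominator)
    return mean(diffs) / standard_error, n - 1


# Invented percent-correct scores for five hypothetical comparison subjects (not study data).
emotion_pct = [92.0, 95.0, 90.0, 94.0, 89.0]
age_pct = [85.0, 88.0, 86.0, 84.0, 87.0]

t, df = paired_t(emotion_pct, age_pct)
# A positive t means accuracy was lower on the control task, i.e., it was harder.
print(f"t={t:.2f}, df={df}")  # t=4.35, df=4
```

Pairing by subject removes between-subject variance in overall accuracy, which is why the comparison is made within the same comparison subjects rather than between groups.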

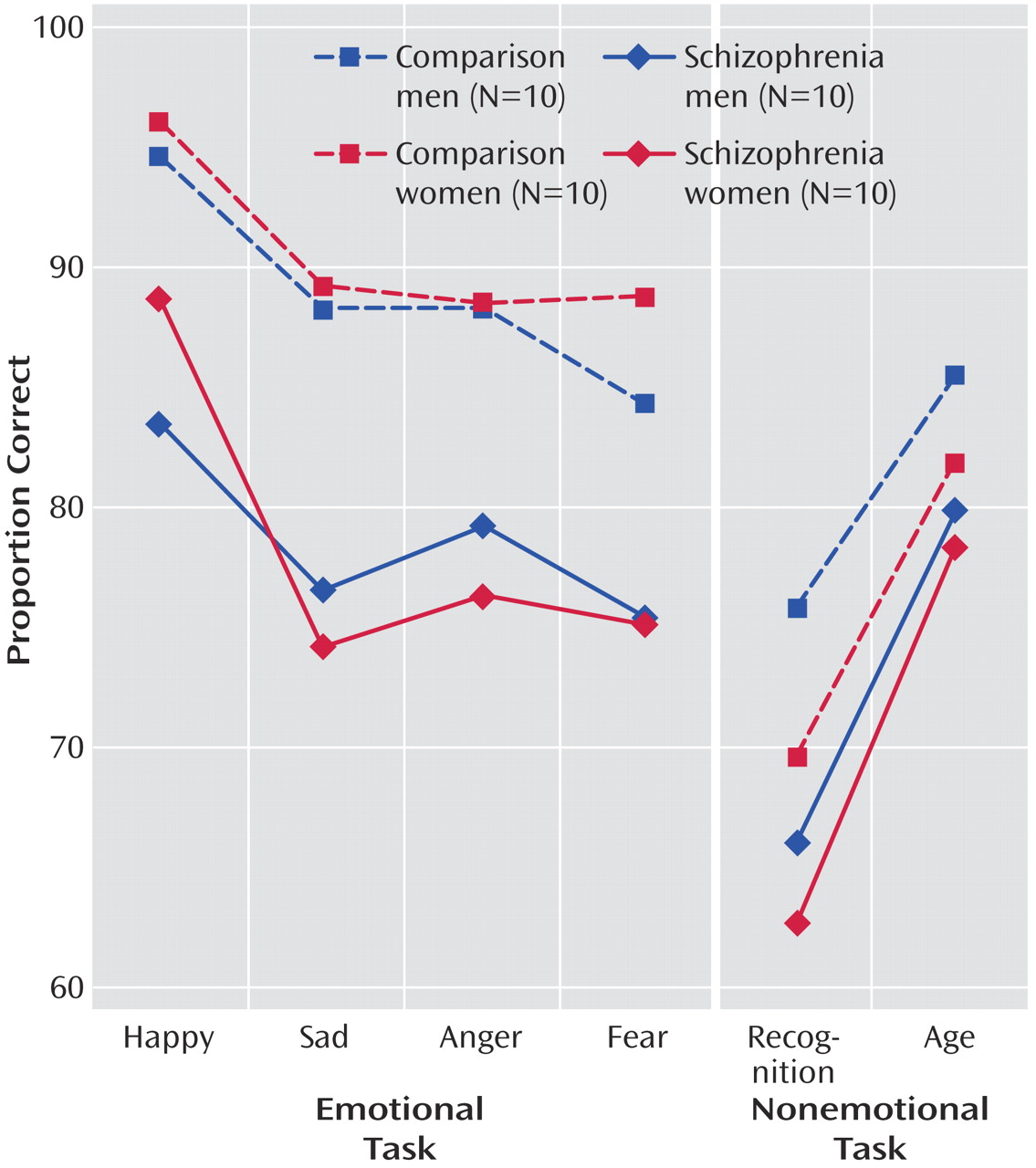

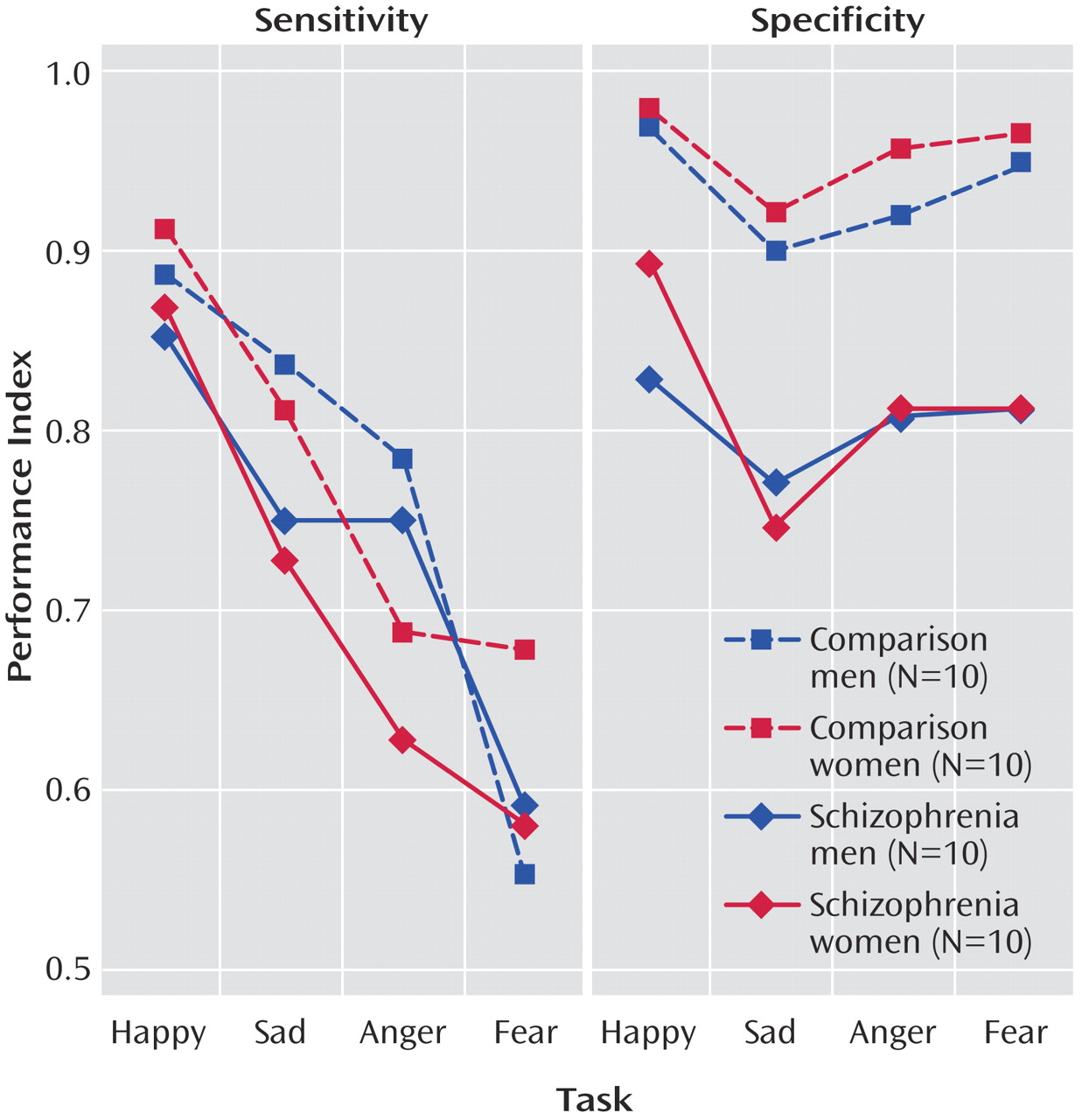

When we compared sensitivity and specificity (Table 1, Figure 2), patients showed sensitivity rates similar to those of the comparison subjects for the four emotions but performed worse on the specificity measures. The generalized estimating equation showed a main effect of diagnostic group (χ²=8.87, df=1, p<0.003) and a diagnosis × sensitivity versus specificity interaction (χ²=22.12, df=2, p<0.0001). Post hoc comparisons of means confirmed a significant difference between patients and healthy volunteers in specificity for anger (diffcrit=0.09), fear (diffcrit=0.08), happiness (diffcrit=0.07), and sadness (diffcrit=0.08). We also found a marginal gender-by-emotion interaction for sensitivity (p=0.03). Post hoc Scheffé tests indicated that, across groups, men discriminated angry faces with significantly higher sensitivity than women (diffcrit=0.09).

To further decompose the specificity effect, we performed an ANOVA on the false positive errors. The group difference was significant (F=17.12, df=1, 36, p=0.0002), indicating more false positives in patients regardless of emotion (happiness diffcrit=0.08, sadness diffcrit=0.11, fear diffcrit=0.09, anger diffcrit=0.09). No other effects were significant.

Discussion

Consistent with earlier studies (17, 29, 30), we found impaired emotion discrimination performance in patients with schizophrenia compared to healthy persons. Using the same stimuli, we also found that patients are relatively less impaired in processing nonemotional features of the face, specifically age, and in face memory. It is noteworthy that patients were more impaired on the emotion discrimination task even though the nonemotional tasks were harder. This result further underscores the need to study emotion processing deficits in schizophrenia, particularly in view of their association with clinical severity and course found in previous studies (7, 8, 31).

By presenting the specific emotions in blocks and revealing the target emotion for each block (i.e., at the beginning of each block, the participants were informed about the target emotion to be identified), the present design also permitted an evaluation of whether patients are primarily impaired in sensitivity or specificity for recognizing emotions. The results indicated that under these conditions, specificity is impaired rather than sensitivity. Thus, patients had more difficulty deciding that a nontarget face did not show the target emotion. Impaired specificity of emotion discrimination could have severe consequences for social functioning. Our results suggest that when patients with schizophrenia seek to identify a target emotion, they tend to perceive that emotion even when it is not present in the observed expression. Such errors could contribute to delusions, affective isolation, and withdrawal.

Several previous studies found no indication of impaired specificity but rather of reduced sensitivity (5, 6). Our earlier cross-cultural study (4) found lower sensitivity and specificity in German and American patients. The present paradigm differs from these studies in that the target emotion was identified for each block, eliminating the need to subclassify emotions and thereby perhaps facilitating sensitivity. Establishing a differential deficit in emotional compared to nonemotional processing of faces does not mean that patients will be more impaired for every emotion relative to every nonemotional processing task. Note, however, that even for the easily recognizable emotion of happiness, patients showed a performance deficit, whereas for age discrimination, which was significantly more difficult than emotion recognition for comparison subjects, the deficit was less apparent. Patients performed better on age discrimination than on recognition of each negative emotion, whereas comparison subjects performed worse on age discrimination than on any negative emotion. Further research is needed to examine whether impairment of sensitivity or specificity of emotion recognition in patients with schizophrenia depends on situational factors that modulate expectancy.

Gender differences in sensitivity were indicated by a significant gender-by-emotion interaction: irrespective of group, men displayed greater sensitivity than women in recognizing angry faces. Together with previously reported gender differences in emotion recognition (32), this may point to a differential evolutionary significance of different emotions for men and women. Anger as a signal of aggression may carry greater biological significance for men than for women.

The present study has several limitations. Conclusions with respect to a specific versus generalized deficit must be drawn with great caution, and our results require replication and placement in the larger context of deficits. It is extremely challenging to find adequately matched control tasks for emotion recognition tasks. Our control tasks matched the emotional task in stimulus material and task construction parameters, yet they proved slightly but significantly more difficult. The present paradigm is also limited in that it does not provide measures of differential patterns of misidentification of target emotions. When a happy face is misclassified, is it more likely to be seen as neutral, angry, or sad? Such error patterns would be of interest, and an earlier study (8) showed that misattribution of negative emotions to neutral faces was the main outcome measure differentiating patients with schizophrenia from comparison subjects. However, the present paradigm was designed to separate sensitivity from specificity by using a binary separation of targets from foils. Thus, during each block, the participant indicates only whether the face shows the target emotion and is not asked to specify the emotion if it is not the target. Adding a query for specific misattributions would have compromised the psychometric integrity of the task, and analysis of error patterns requires a different testing paradigm.

Another limitation of the study is that the order of two conditions (age discrimination and memory) was fixed, and the impact of task order needs to be determined. Furthermore, the recognition phase showed only neutral faces because it was used as a nonemotional control condition, and a proper balance of emotional and nonemotional faces during recognition would have made the task prohibitively long. It would be useful to include both emotional and nonemotional faces in the facial recognition task; however, such an examination, although of clear merit, is beyond the scope of the present study. The study also lacks a condition maximizing specificity of search. Target emotions may be easier to recognize in the present task because the participant is required to focus on only one target emotion during each block. This is similar to tasks in which priming is used: the target emotion serves as the prime, and seeing a target emotion requires only a correct assignment. It would be interesting to compare the performance of patients and comparison subjects when a decision on the correct emotion is required for each stimulus without any prime, although this may pose its own conceptual difficulties. We found no evidence of floor or ceiling effects in sensitivity in the present study, so the absence of a sensitivity deficit is unlikely to reflect a ceiling artifact; patients could have shown greater impairment in sensitivity. Furthermore, because happy faces were easier to recognize in both groups, the heterogeneity of responses within the emotion recognition task may have accentuated the performance differences relative to the control tasks. However, because this was similar in both groups and group differences were the focus of interest in this study, we believe this factor does not affect our main conclusions.

The basis for deficits in facial emotion processing in schizophrenia is unclear. Deficient visual scanning has been hypothesized (18). Several studies using chimeric faces, which are known to elicit a perceptual bias toward the left hemiface in healthy persons, have found a significantly weaker bias in patients with schizophrenia (33, 34). Given evidence that emotions are expressed more intensely in the left hemiface (35, 36), deficient facial scanning and perhaps eye-tracking mechanisms merit further examination.

The deficits found in emotion recognition likely relate to impairments observed in “theory of mind” tasks. Patients with schizophrenia have deficits in theory of mind problems (37), which require conscious interpretation of another person’s mental state (empathy). Arguably, facial emotion recognition requires theory of mind. Further study can help establish whether these impairments are related to or independent of general cognitive deficits and whether they reflect dysfunction in partly overlapping cerebral networks.

The present results encourage further functional imaging studies of the neural systems for emotion processing, which have shown abnormal activation in schizophrenia (10, 11), and the present task can provide a probe that also yields informative performance data.