Despite the importance of cognitive deficits in schizophrenia, no drug has been approved for treatment of this aspect of the illness. The absence of a consensus cognitive battery has been a major impediment to standardized evaluation of new treatments to improve cognition in this disorder (1, 2). One of the primary goals of the National Institute of Mental Health’s (NIMH’s) Measurement and Treatment Research to Improve Cognition in Schizophrenia (MATRICS) initiative was to develop a consensus cognitive battery for use in clinical trials in schizophrenia. The development of a standard cognitive battery through a consensus of experts was designed to establish an accepted way to evaluate cognition-enhancing agents, thereby providing a pathway for approval of such new medications by the U.S. Food and Drug Administration (FDA). It would also aid in standardized evaluation of other interventions to treat the core cognitive deficits of schizophrenia.

The desirable characteristics of the battery were determined through an initial survey of 68 experts (3). The MATRICS Neurocognition Committee then reviewed and integrated results from all available factor-analytic studies of cognitive performance in schizophrenia to derive separable cognitive domains (4). An initial MATRICS consensus conference involving more than 130 scientists from academia, government, and the pharmaceutical industry led to agreement on seven cognitive domains for the battery and on five criteria for test selection (1). The criteria emphasized characteristics required for cognitive measures in the context of clinical trials: test-retest reliability; utility as a repeated measure; relationship to functional status; potential changeability in response to pharmacological agents; and practicality for clinical trials and tolerability for patients. The seven cognitive domains included six from multiple factor-analytic studies of cognitive performance in schizophrenia: speed of processing; attention/vigilance; working memory; verbal learning; visual learning; and reasoning and problem solving (4). The seventh domain, social cognition, was included because it was viewed as an ecologically important domain of cognitive deficit in schizophrenia that shows promise as a mediator of neurocognitive effects on functional outcome (5, 6), although studies of this domain in schizophrenia are too new for such measures to have been included in the various factor-analytic studies. Participating scientists initially provided more than 90 nominations of cognitive tests that might be used to measure performance in the seven cognitive domains.

In this article, we describe the procedures and data that the MATRICS Neurocognition Committee employed to select a final battery of 10 tests—the MATRICS Consensus Cognitive Battery (MCCB)—from among the nominated cognitive tests. These procedures involved narrowing the field to six or fewer tests per cognitive domain; creating a database of existing test information; using a structured consensus process involving an interdisciplinary panel of experts to obtain ratings of each test on each selection criterion; selecting the 20 most promising tests for a beta version of the battery; administering the beta battery to individuals with schizophrenia at five sites to directly compare the 20 tests (phase 1 of the MATRICS Psychometric and Standardization Study); and selecting the final battery based on data from this comparison.

A second article in this issue (7) describes the development of normative data for the MCCB using a community sample drawn from the same five sites, stratified by age, gender, and education (phase 2 of the MATRICS Psychometric and Standardization Study). This step was critical to making the consensus battery useful in clinical trials.

During the MATRICS process, it became apparent that the FDA would require that a potential cognition-enhancing agent demonstrate efficacy on a consensus cognitive performance measure as well as on a “coprimary” measure that reflects aspects of daily functioning. Potential coprimary measures were therefore evaluated, as reported in a third article in this issue (8).

Because of space limitations, a brief description of the methods used to evaluate the nominated cognitive measures is presented here; more details are available in a data supplement that accompanies the online edition of this article.

From Test Nominations to a Beta Battery

Summary of Methods and Results

Initial evaluation

The MATRICS Neurocognition Committee, cochaired by Drs. Nuechterlein and Green and including representatives from academia (Drs. Barch, Cohen, Essock, Gold, Heaton, Keefe, and Kraemer), NIMH (Drs. Fenton, Goldberg, Stover, Weinberger, and Zalcman), and consumer advocacy (Dr. Frese), initially evaluated the extent to which the 90 nominated tests met the test selection criteria based on known reliability and validity as well as feasibility for clinical trials. Because the survey established that the battery would optimally not exceed 90 minutes, individual tests with high reliability and validity that took less than 15 minutes were sought. In this initial review, 36 candidate tests across seven cognitive domains were selected.

Expert panel ratings

Procedures based on the RAND/UCLA appropriateness method were used to systematically evaluate the 36 candidate tests (9, 10). This method starts with a review of all relevant scientific evidence and then uses, iteratively, methods that help increase agreement among members of an expert panel that represents key stakeholder groups. A summary of available published and unpublished information on each candidate test was compiled into a database by the MATRICS staff, including information relevant to each test selection criterion (see the Conference 3 database at www.matrics.ucla.edu).

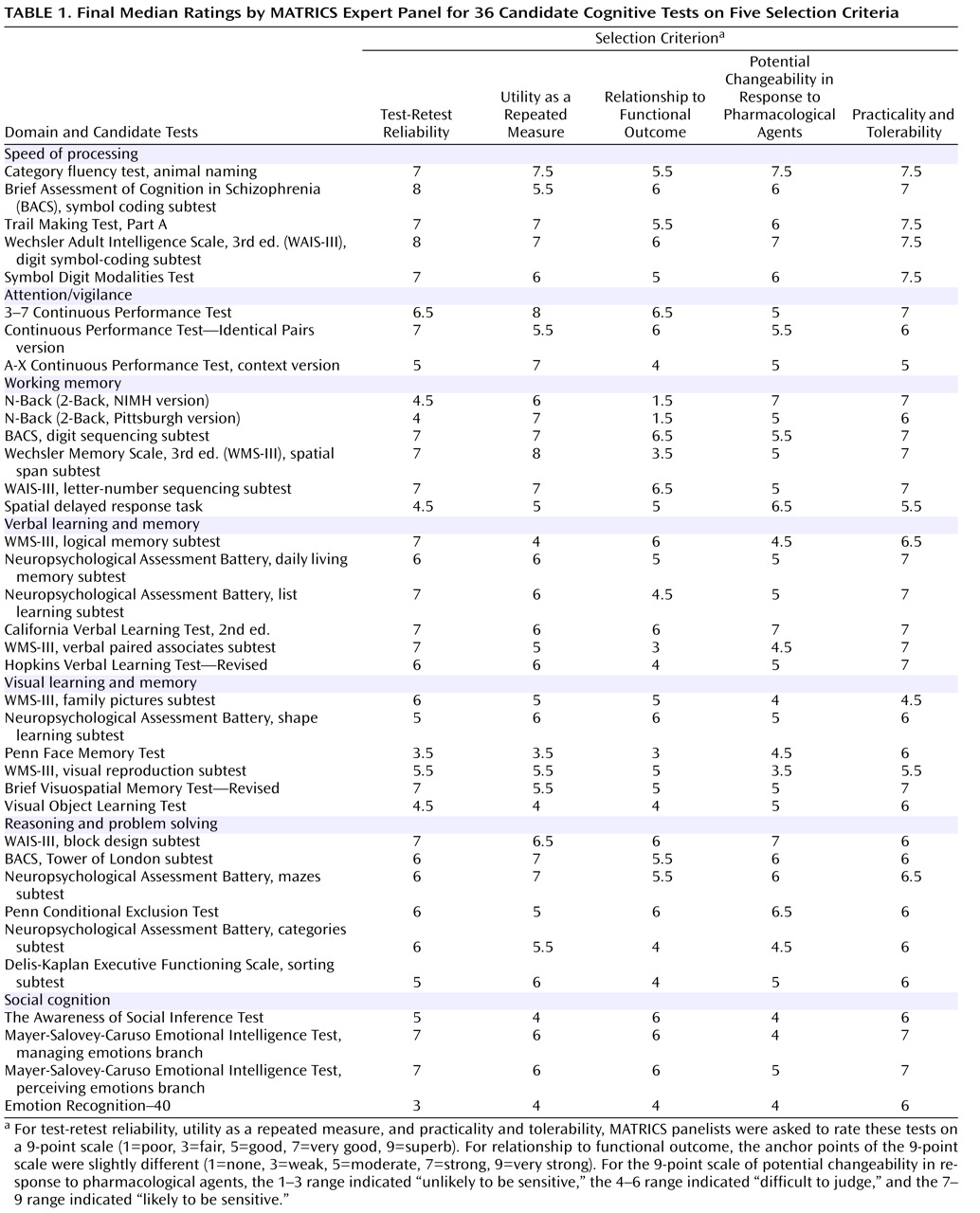

Using this database, an expert panel then evaluated and rated the extent to which each of the 36 candidate tests met each of the five selection criteria. The panel included experts on cognitive deficits in schizophrenia, clinical neuropsychology, clinical trials methodology, cognitive science, neuropharmacology, clinical psychiatry, biostatistics, and psychometrics. These preconference ratings were then examined to identify any that reflected a lack of consensus. Twenty of the 180 ratings indicated a notable lack of consensus, so the expert panel discussed each of these and completed the ratings again. Dispersion decreased, and the median values for nine ratings changed. The median values of all final ratings are presented in Table 1, grouped by cognitive domain.

Selection of beta battery

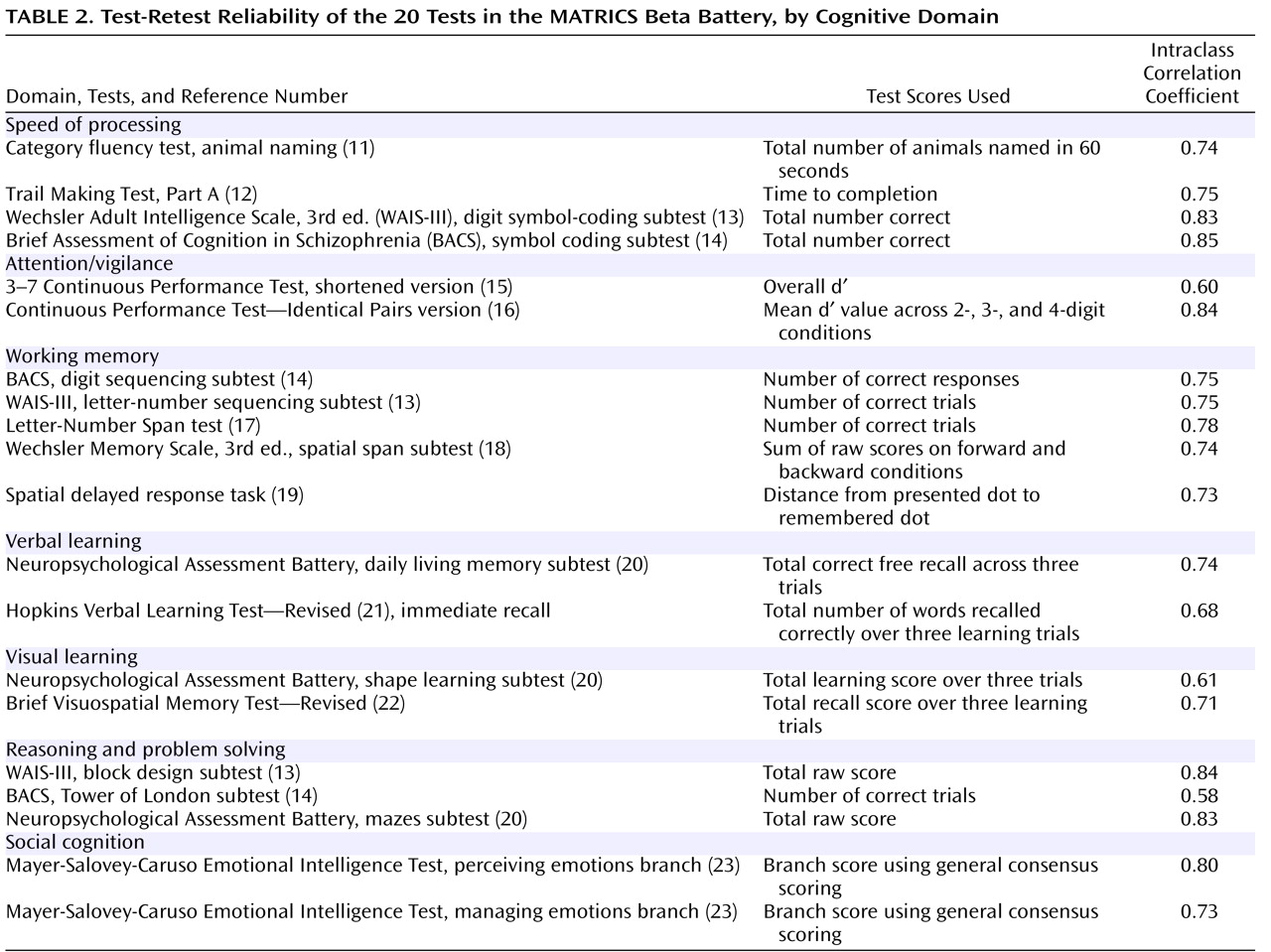

The Neurocognition Committee used the expert panel ratings to select the beta version of the battery for the MATRICS Psychometric and Standardization Study. The goal was to select two to four measures per domain. The resulting beta version of the MCCB included 20 tests (see Table 2; for more details, see the online data supplement and http://www.matrics.ucla.edu/matrics-psychometrics-frame.htm).

From Beta Battery to Final Battery

Summary of Methods

The MATRICS Psychometric and Standardization Study was conducted to directly compare the tests’ psychometric properties, practicality, and tolerability to allow the best representative(s) of each domain to be selected for the final battery. Details of the study’s methods are provided in the online data supplement.

Sites and Participants

The study sites had extensive experience with schizophrenia clinical trials and expertise in neuropsychological assessment: University of California, Los Angeles; Duke University, Durham, N.C.; Maryland Psychiatric Research Center, University of Maryland, Baltimore; Massachusetts Mental Health Center, Boston; and University of Kansas, Wichita. Each site contributed at least 30 participants with schizophrenia or schizoaffective disorder, depressed type, who were tested twice, 4 weeks apart.

Study Design and Assessments

Potential participants received a complete description of the study and then provided written informed consent, as approved by the institutional review boards of all study sites and the coordinating site. Next, the Structured Clinical Interview for DSM-IV (24) was administered to each potential participant. If entry criteria were met, baseline assessments were scheduled. Participants were asked to return 4 weeks later for a retest.

In addition to the 20 cognitive performance tests, data collected included information about clinical symptoms (from the Brief Psychiatric Rating Scale [BPRS; 25, 26]), self-report measures of community functioning (from the Birchwood Social Functioning Scale [27] supplemented with the work and school items from the Social Adjustment Scale [28]), measures of functional capacity, and interview-based measures of cognition (8, 29, 30). See the online data supplement for descriptions of alternate cognitive test forms and staff training for neurocognitive assessments, symptom ratings, and community functioning measures.

Results

Participants

Across the five study sites, 176 patients were assessed at baseline, and 167 were assessed again at the 4-week follow-up (a 95% retention rate). Participants’ mean age was 44.0 years (SD=11.2), and their mean educational level was 12.4 years (SD=2.4). Three-quarters (76%) of the participants were male. The overall ethnic/racial distribution of the sample was 59% white (N=104), 29% African American (N=51), 6% Hispanic/Latino (N=11), 1% Asian or Pacific Islander (N=2), <1% Native American or Alaskan (N=1), and 4% other (N=7).

Based on the diagnostic interviews, 86% of participants received a diagnosis of schizophrenia and 14% a diagnosis of schizoaffective disorder, depressed type. At assessment, 83% were taking a second-generation antipsychotic, 13% a first-generation antipsychotic, and 1% other psychoactive medications only; current medication type was unknown for 3%. Almost all participants were outpatients, but at one site patients in a residential rehabilitation facility predominated.

As expected for clinically stable patients, symptom levels were low. At the initial assessment, the mean BPRS thinking disturbance factor score was 2.6 (SD=1.3), and the mean BPRS withdrawal-retardation factor score was 2.0 (SD=0.9). Ratings were similar at the 4-week follow-up: the mean thinking disturbance score was 2.4 (SD=1.2), and the mean withdrawal-retardation score was 2.0 (SD=0.8).

Dimensions of Community Functional Status

A principal-components analysis with the seven domain scores from the Social Functioning Scale and a summary measure of work or school functioning from the Social Adjustment Scale yielded a three-factor solution (social functioning, independent living, and work functioning; see supplementary Table 1 in the online data supplement) that explained 59% of the variance and was consistent with previous findings (31, 32). Factor scores from these three outcome domains, as well as a summary score across domains, were used as dependent measures for functional outcome.

Site Effects

Cognitive performance was generally consistent across sites; only four of the 20 analyses of variance (ANOVAs) showed a significant site effect, and the differences were relatively small. In contrast, there were clear site differences in community functioning. ANOVAs revealed significant differences in social outcome (F=3.36, df=4, 170, p<0.02) and independent living (F=4.18, df=4, 170, p<0.01). Work outcome showed a similar tendency (F=2.35, df=4, 170, p=0.06), and three of the pairwise comparisons were significant.
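The site comparisons above rest on one-way ANOVA. As a minimal sketch of how the F statistic and its degrees of freedom are obtained, the following uses made-up scores for three small groups; the function name and the data are illustrative assumptions, not study data or the study's analysis code.

```python
from statistics import mean

def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical functioning scores for three groups (not study data).
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df_b, df_w = one_way_anova(groups)
print(round(f_stat, 2), df_b, df_w)
```

By the same bookkeeping, degrees of freedom of 4 and 170 correspond to five groups and 175 observations in total.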

Test-Retest Reliability

At MATRICS consensus meetings, high test-retest reliability was considered the most important test feature in a clinical trial. Test-retest reliability data are summarized in Table 2. We considered both Pearson’s r and the intraclass correlation coefficient, which takes into account changes in mean level (for Pearson’s r, see supplementary Table 2 in the online data supplement). Alternate forms were used for five of the tests. Test-retest reliabilities were generally good. The committee considered an r value of 0.70 to be acceptable test-retest reliability for clinical trials. Most of the tests achieved at least that level.
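The difference between the two coefficients can be illustrated with a small sketch. The scores below are made up, and the ICC variant shown (two-way, absolute agreement, single measure) is an assumption, since the text does not state which form was used.

```python
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def icc_absolute_agreement(x, y):
    """Two-way, absolute-agreement, single-measure ICC for two occasions."""
    n, k = len(x), 2
    values = list(x) + list(y)
    grand = mean(values)
    row_means = [(a + b) / 2 for a, b in zip(x, y)]   # per participant
    col_means = [mean(x), mean(y)]                    # per occasion
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for v in values)
    ss_err = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )

# Hypothetical scores: every participant gains exactly 5 points at retest.
baseline = [40, 45, 50, 55, 60, 65]
retest = [score + 5 for score in baseline]
r = pearson_r(baseline, retest)
icc = icc_absolute_agreement(baseline, retest)
print(round(r, 3), round(icc, 3))
```

In this toy case the rank order is perfectly preserved, so Pearson's r is 1.0, while the uniform shift in mean level lowers the ICC to 0.875.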

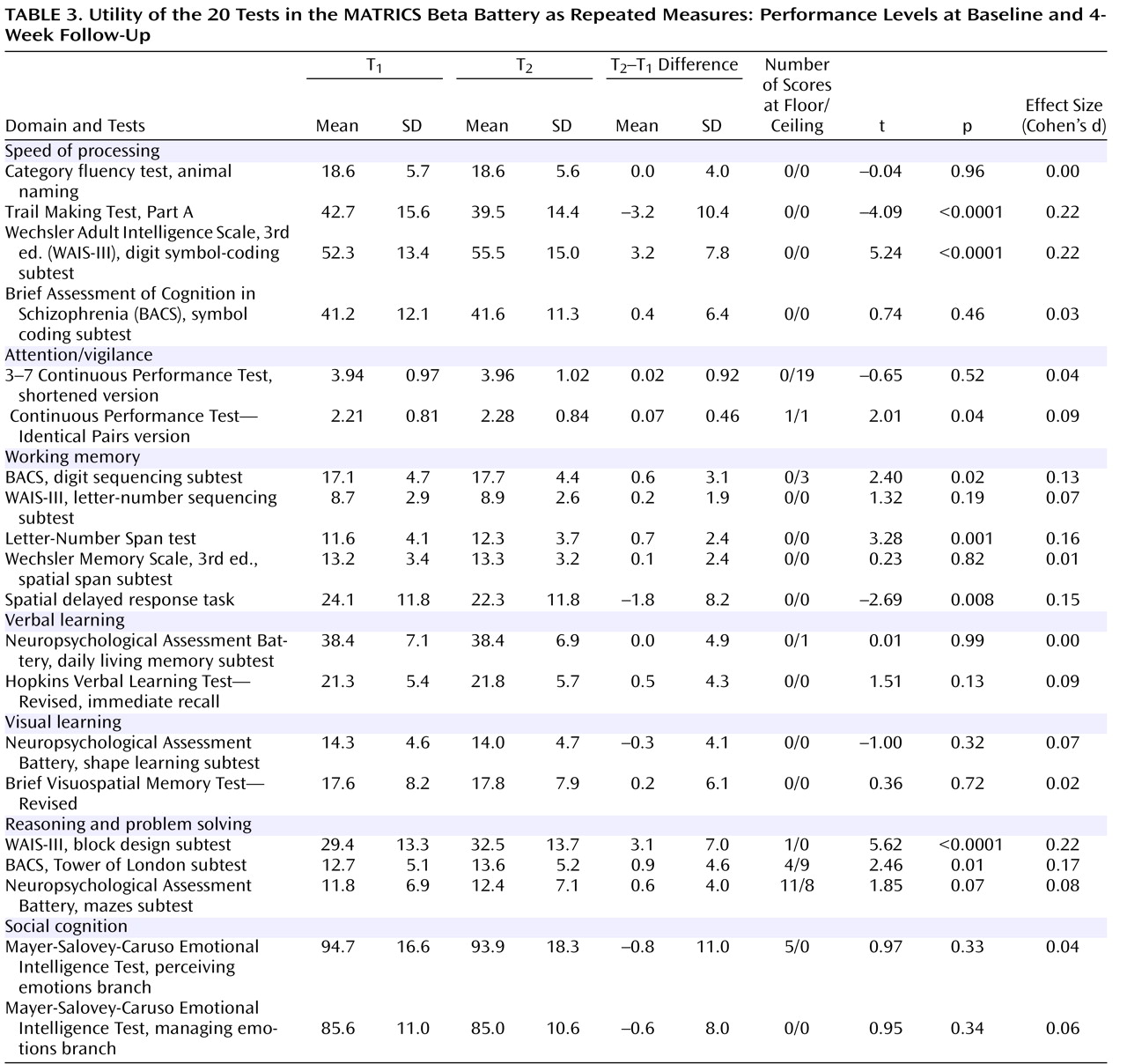

Utility as a Repeated Measure

Tests were considered useful for clinical trials if they showed relatively small practice effects or, if they had notable practice effects, scores did not approach ceiling performance. We considered performance levels at baseline and 4-week follow-up, as well as change scores, magnitude of change, and the number of test administrations with scores at ceiling or floor. Practice effects were generally quite small (Table 3), but several were statistically significant. Some tests in the speed of processing and the reasoning and problem-solving domains showed small practice effects (roughly one-fifth of a standard deviation), but even so, there were no noticeable ceiling effects or constrictions of variance at the second testing.
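One way to express a practice effect in standard deviation units, and to check for ceiling effects, is sketched below. The scores, the maximum score, and the choice of the baseline SD as the scaling unit are assumptions made for illustration; the text does not specify the exact computation used.

```python
from statistics import mean, stdev

def practice_effect(baseline, retest):
    """Mean change from baseline to retest, in baseline-SD units."""
    return (mean(retest) - mean(baseline)) / stdev(baseline)

def share_at_ceiling(scores, max_score):
    """Fraction of administrations scoring at the maximum possible score."""
    return sum(1 for s in scores if s >= max_score) / len(scores)

# Hypothetical scores for five participants on a test with a maximum of 60.
baseline = [30, 35, 40, 45, 50]
retest = [32, 36, 42, 46, 52]

effect = practice_effect(baseline, retest)
ceiling = share_at_ceiling(retest, max_score=60)
print(round(effect, 2), ceiling)
```

Here the mean gain of 1.6 points is about one-fifth of the baseline standard deviation, and no retest score reaches the ceiling.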

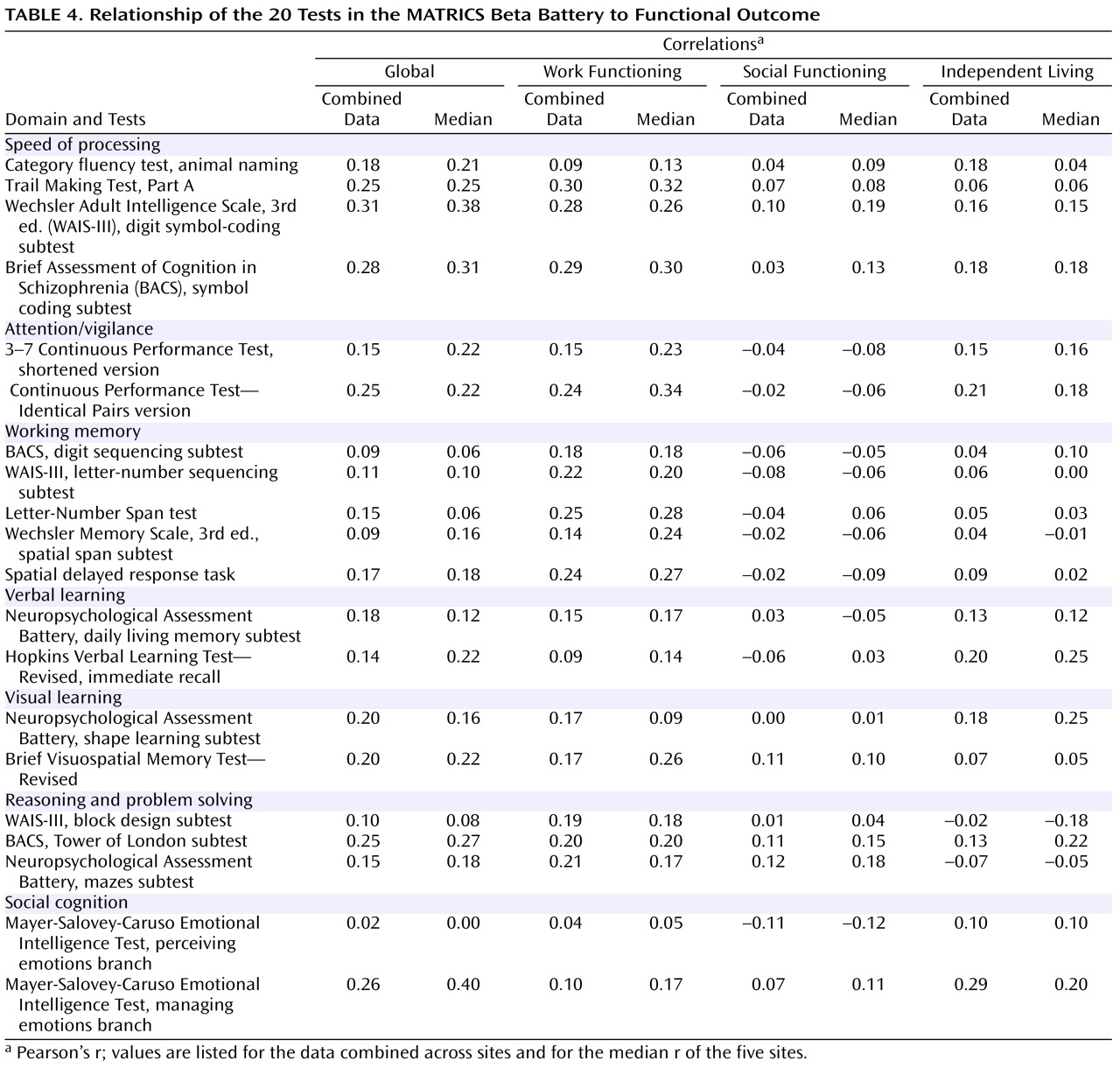

Relationship to Self-Reported Functional Outcome

As mentioned above, the sites differed substantially in participants’ functional status. At one site, only one participant was working, and another site largely involved patients in a residential rehabilitation program. As a result, correlations between cognitive measures and functional outcome showed considerable variation from site to site. Statistical analyses indicated that the heterogeneity of correlation magnitudes across sites was somewhat greater than would be expected by chance, particularly for the independent living factor. Pooled correlations weighted by sample size, however, were very similar to the overall correlations across sites. Given variability in strength of relationships, the MATRICS Neurocognition Committee examined the correlations by combining participants across sites and by looking at the five sites separately and considering the median correlation among sites. Both methods may underestimate the correlations that might be achieved without such site variations (e.g., nine of the tests had correlations >0.40 with global outcome at one or more sites). Furthermore, the restriction of the functional outcome measures to self-report data may have reduced the correlation magnitudes. However, the data did allow direct comparisons among the tests within the same sample, which was the primary goal (Table 4). The correlations tended to be larger for work outcome and smaller for social outcome, consistent with other studies (33, 34).
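The two summaries of site-level correlations described above, a pooled correlation weighted by sample size and the median across sites, can be sketched as follows. The per-site correlations and sample sizes are hypothetical, and pooling through the Fisher z transformation with weights of n − 3 is a conventional choice assumed here, not a method confirmed by the text.

```python
import math
from statistics import median

def pooled_correlation(site_rs, site_ns):
    """Pool correlations across sites via Fisher z, weighting by n - 3."""
    weights = [n - 3 for n in site_ns]
    z_bar = sum(w * math.atanh(r) for r, w in zip(site_rs, weights)) / sum(weights)
    return math.tanh(z_bar)

# Hypothetical per-site correlations and sample sizes (not study data).
site_rs = [0.10, 0.25, 0.30, 0.35, 0.40]
site_ns = [34, 35, 36, 35, 36]

pooled = pooled_correlation(site_rs, site_ns)
median_r = median(site_rs)
print(round(pooled, 2), median_r)
```

When site sample sizes are similar, as in this example, the pooled value stays close to the simple average of the site correlations, while the median is less sensitive to a single outlying site.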

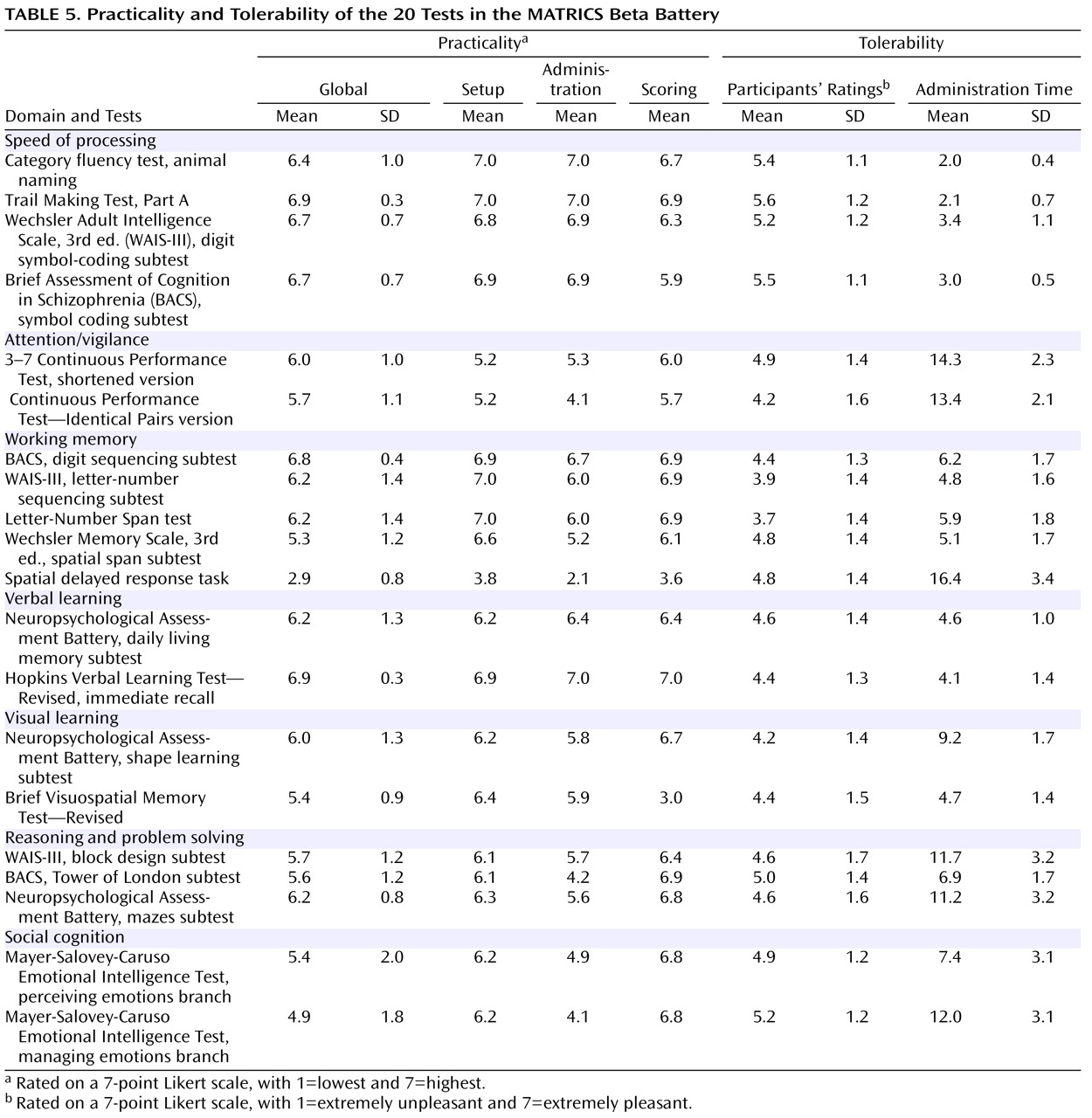

Practicality and Tolerability

Practicality refers to the test administrator’s perspective. It includes consideration of test setup, staff training, administration, and scoring. In assessing practicality, a 7-point Likert scale was used in three categories (setup, administration, and scoring) and in a global score. Ratings by each tester were made after data collection for the entire sample. Tolerability refers to the participant’s view of a test. It can be influenced by the length of the test and any feature making completing the test more or less pleasant, including an unusual degree of difficulty or excessive repetitiveness. We asked participants immediately after they took each test to point to a number on a 7-point Likert scale (indicated by unhappy to happy drawings of faces; 1=extremely unpleasant; 7=extremely pleasant) to indicate how unpleasant or pleasant they found the test.

Table 5 presents the practicality and tolerability results as well as the mean time it took to administer each test. Despite some variability, most tests were considered to be both practical and tolerable. This result likely reflects the efforts to take these factors into consideration in the earlier stages of test selection.

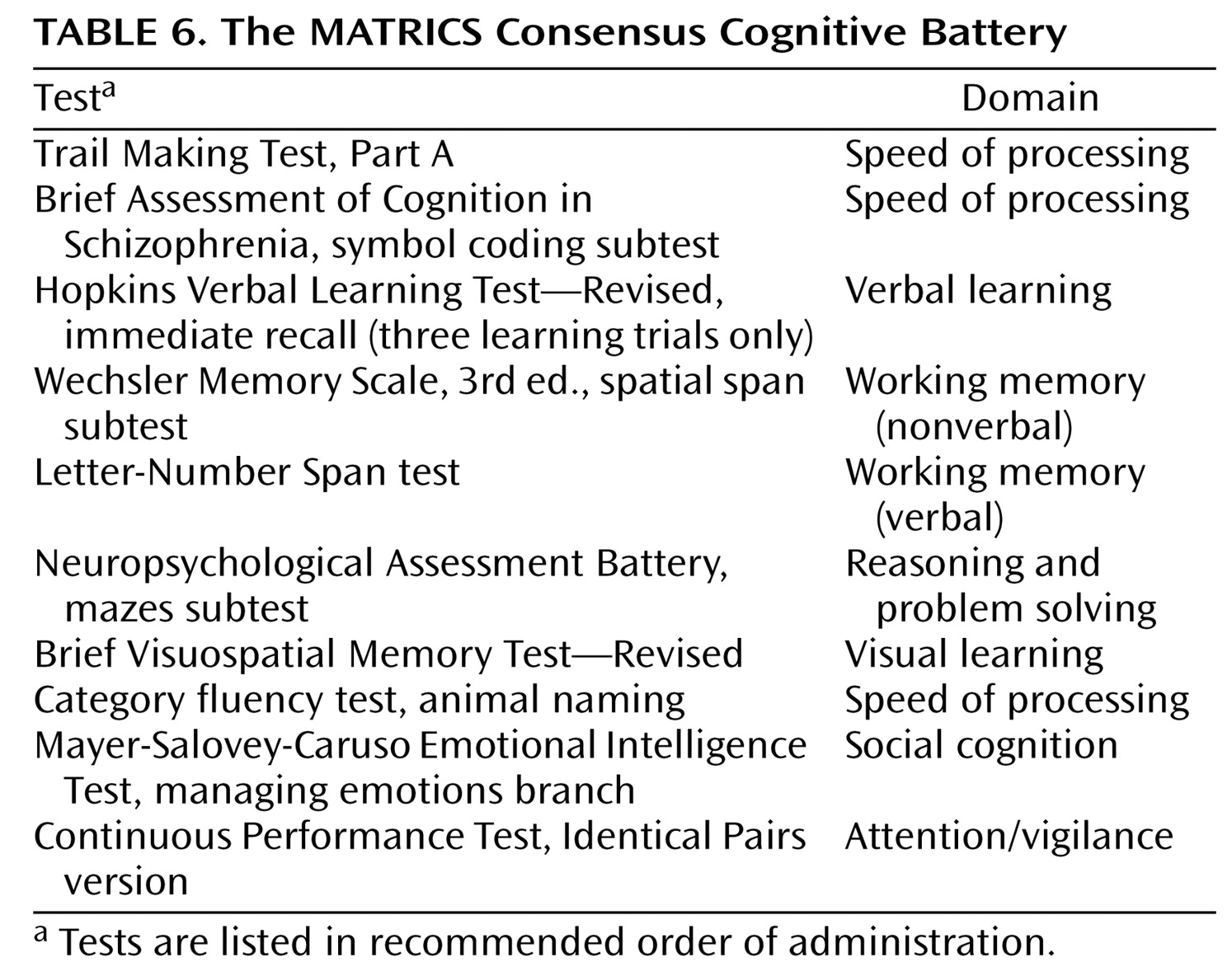

Selection of the Final Battery

The MATRICS Neurocognition Committee used the data in Tables 2–5 to select the tests that make up the final MCCB. Two site principal investigators of the Psychometric and Standardization Study who were not already part of the MATRICS Neurocognition Committee (Drs. Baade and Seidman) were added to the decision-making group to maximize input from experts in neurocognitive measurement. After a discussion of results in a given cognitive domain, committee members independently ranked each candidate test through an e-mail ballot. Members who had a conflict of interest with any test within a domain recused themselves from the vote on that domain. The 10 tests in the final battery are presented in Table 6 in the recommended order of administration. Based on the time the individual tests took to administer during the MATRICS Psychometric and Standardization Study, total testing time (without considering rest breaks) is estimated to be about 65 minutes. Training to administer these 10 tests should take no more than 1 day, including didactic instruction and hands-on practice. Below, we briefly summarize the reasons that these 10 tests were selected.

Speed of processing

The committee had planned to include two types of measures in this category: a verbal fluency measure and a graphomotor speed measure. The measures were psychometrically comparable in most respects. The Brief Assessment of Cognition in Schizophrenia symbol coding subtest was selected because it showed a smaller practice effect than the WAIS-III digit symbol coding subtest. Given the brief administration time and high tolerability of these measures, the committee decided to include an additional graphomotor measure with a different format (the Trail Making Test, Part A), for a total of three tests (the Trail Making Test, Part A; the Brief Assessment of Cognition in Schizophrenia symbol coding subtest; and the category fluency test).

Attention/vigilance

The Continuous Performance Test—Identical Pairs Version was selected for its high test-retest reliability and the absence of a ceiling effect.

Working memory

The spatial span subtest of the Wechsler Memory Scale, 3rd ed., was selected for nonverbal working memory because of its practicality, its brief administration time, and the absence of a practice effect. For verbal working memory, the Letter-Number Span test was selected because of its high reliability and its somewhat stronger relationship to global functional status.

Verbal learning

The committee considered the verbal learning tests to be psychometrically comparable. The Hopkins Verbal Learning Test—Revised was selected because of the availability of six forms, which may be helpful for clinical trials with several testing occasions.

Visual learning

The Brief Visuospatial Memory Test—Revised was selected because it had higher test-retest reliability, a brief administration time, and the availability of six forms.

Reasoning and problem solving

The Neuropsychological Assessment Battery mazes subtest was selected for its high test-retest reliability, small practice effect, and high practicality ratings.

Social cognition

The managing emotions component of the Mayer-Salovey-Caruso Emotional Intelligence Test was selected for its relatively stronger relationship to functional status.

With selection of the final battery, it became possible to calculate test-retest reliabilities for cognitive domain scores that involve multiple tests and for an overall composite score for all 10 tests. Test scores were transformed to z-scores using the schizophrenia sample and averaged to examine the reliability of the composite scores. Four-week test-retest intraclass correlation coefficients were 0.71 for the speed of processing domain score, 0.85 for the working memory domain score, and 0.90 for the overall composite score.
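The scoring step described above (standardize each test within the sample, then average) can be sketched as follows. The test names and scores are hypothetical, scores are assumed to be oriented so that higher is better, and the use of the sample standard deviation is also an assumption.

```python
from statistics import mean, stdev

def z_scores(scores):
    """Standardize raw scores using the sample's own mean and SD."""
    m, s = mean(scores), stdev(scores)
    return [(x - m) / s for x in scores]

def composite(test_scores):
    """Average each participant's z-scores across the given tests."""
    z_by_test = [z_scores(scores) for scores in test_scores.values()]
    return [mean(zs) for zs in zip(*z_by_test)]

# Hypothetical raw scores for five participants on two tests in one domain.
scores = {
    "test_a": [10, 12, 14, 16, 18],
    "test_b": [50, 40, 60, 70, 80],
}
domain = composite(scores)
print([round(z, 2) for z in domain])
```

The same averaging applied to all tests in the battery would give an overall composite; the composite computed at baseline and at retest can then be passed to any reliability coefficient.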

Discussion

Discussions among representatives of the FDA, NIMH, and MATRICS indicated that the absence of a consensus cognitive battery had been an overriding obstacle to regulatory approval of any drug as a treatment for the core cognitive deficits of schizophrenia. The steps and data presented here moved the process from an initial nomination list of more than 90 cognitive tests to the selection of the final 10 tests. The process involved the participation of a large number of scientists in diverse fields to achieve a consensus of experts. To ensure a fair and effective process for selecting the best brief cognitive measures for clinical trials, the methods included both a structured consensus process to evaluate existing data and substantial new data collection.

One clear point to emerge from the MATRICS consensus meetings was the importance of reliable and valid assessment of cognitive functioning at the level of key cognitive domains (1, 4). Although some interventions may improve cognitive functioning generally, evidence from cognitive neuroscience and neuropharmacology suggests that many psychopharmacological agents may differentially target a subset of cognitive domains (35, 36). A battery assessing cognitive performance at the domain level requires more administration time than one that seeks to measure only global cognitive change. One challenge was to select a battery that adequately assessed cognitive domains and was still practical for large-scale multisite trials. The beta battery was twice as long as the final battery yet was well tolerated by participants, with 95% returning as requested for retesting. Furthermore, no participant who started a battery failed to complete it. The final battery offers reliable and valid measures in each of the seven cognitive domains and should be even easier to complete. If a clinical trial involves an intervention hypothesized to differentially affect specific cognitive domains, our experience with the beta battery suggests that the MCCB could be supplemented with additional tests to assess the targeted domains without substantial data loss through attrition.

The psychometric study sites varied substantially in the level and variance of their participants’ functional status. In contrast, cognitive performance showed few significant site effects. Local factors may influence functional status in ways that are not attributable to cognitive abilities. Thus, cognitive abilities establish a potential for everyday functional level, while environmental factors (e.g., the local job market, employment placement aid, and housing availability) may influence whether variations in cognitive abilities manifest themselves in differing community functioning. In this instance, the sites varied in the extent to which treatment programs and local opportunities encouraged independent living and return to work, and this variation contributed to differences across sites in the magnitude of correlations between individual measures and functional outcome. To further examine variability across sites, we considered the correlation for each site between the overall composite cognitive score and global functional status. Three sites showed clear relationships (with r values of 0.36, 0.38, and 0.44) and two did not (r values of 0.03 and 0.11). Calculating the correlations across sites probably led to a low estimate of the true magnitude of this correlation in people with schizophrenia. Nevertheless, the correlations allowed a reasonable direct comparison of the tests.

Another factor in the magnitude of correlations observed between cognitive performance and functional outcome may have been the restriction of community functioning measures to self-report data, which may include biases. Use of informants to broaden the information base or use of direct measures of functional abilities in the clinic may yield stronger relationships to cognitive performance (8).

The final report of the MATRICS initiative identified the components of the MCCB and recommended its use as the standard cognitive performance battery for clinical trials of potential cognition-enhancing interventions. This recommendation was unanimously endorsed by the NIMH Mental Health Advisory Council in April 2005, and it was accepted by the FDA’s Division of Neuropharmacological Drug Products. To facilitate ease of use and distribution, the tests in the MCCB were placed into a convenient kit form by a nonprofit company, MATRICS Assessment, Inc. (37). The MCCB is distributed by Psychological Assessment Resources, Inc., Multi-Health Systems, Inc., and Harcourt Assessment, Inc.

The primary use of the MCCB is expected to be in clinical trials of potential cognition-enhancing drugs for schizophrenia and related disorders. Use of the MCCB in trials of cognitive remediation would facilitate comparison of results across studies. Another helpful application would be as a reference battery in basic studies of cognitive processes in schizophrenia, as it may aid evaluation of sample variations across studies.

The last step in the development of the MCCB was a five-site community standardization study to establish co-norms for the tests, examine demographic correlates of performance, and allow demographically corrected standardized scores to be generated. This stage is described in the next article (7).