In March 2023, an image of Pope Francis wearing a puffy white Balenciaga coat went viral, receiving coverage in The New York Times, CNN, and beyond. Some users criticized the pope for such an ostentatious demonstration of wealth. The actual issue, however, was that the image wasn’t real; it was created using Midjourney, an AI-powered image-generating tool.

You have likely now heard of ChatGPT. You and some of your patients may be using AI-powered technology for entertainment, increased efficiency with tasks, or general queries. In its most recent public presentation in November 2023, OpenAI highlighted that ChatGPT has 100 million weekly users.

As the pope’s image shows, popular generative AI tools are no longer solely text-generating tools; they are now multimodal. In the case of ChatGPT, this means that the model can both perceive and generate visual information. These capabilities build on similar models and technologies that were previously separate from ChatGPT. (DALL-E 3 is OpenAI’s image-generation model; it was rolled out to most users in October 2023 and has since been integrated into the ChatGPT interface.) In addition, other companies, like Midjourney, have focused solely on AI-powered text-to-image generation. Given ChatGPT’s number of weekly users, many of your patients are likely using these technologies, so it is important to understand them and discuss their pros and cons with patients.

Much has been written on generative AI’s ability to propagate and create disinformation. OpenAI has already collaborated with Georgetown and Stanford universities to identify risks and solutions regarding the ability of large language models (LLMs) to be misused for disinformation. They foresee that LLMs will decrease the cost of disinformation campaigns and increase the feasibility of personalized influence campaigns via generation of personally tailored content. OpenAI and the universities stated in a January 2023 report, “Our bottom-line judgment is that language models will be useful for propagandists and will likely transform online influence operations.” Anthropic and similar companies have also been the subject of public scrutiny for such risks.

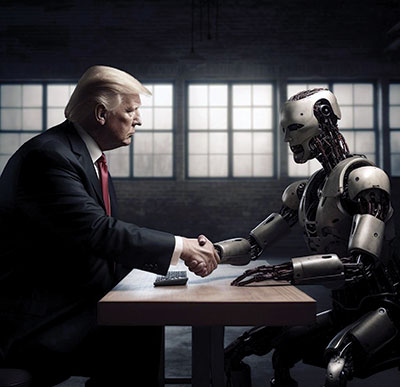

The image of the pope is a relatively benign example of such technology; however, one can easily imagine what a presidential campaign may look like with the power of generative AI. These technologies have already been used to generate images of politicians such as Vladimir Putin and Donald Trump being arrested. There is no tagging for such images that would allow users to know that an image was generated by AI. As such, AI-generated images (or “deepfakes”) are now being shared on social media with increasing frequency, and users are being inundated with vast amounts of increasingly convincing disinformation.

On the right are two images I generated using Midjourney. These relatively benign images are meant to show presidential hopefuls shaking hands with an AI-powered robot. If the creator of such images aims to persuade or anger an intended audience, however, one can imagine that the images would be more malicious and convincing. (These images took me only 15 seconds to generate on an iPhone.)

In addition to generating images, ChatGPT can now receive visual information and process it. For instance, you can take a picture of a broken dishwasher with your phone and upload it to ChatGPT. ChatGPT will analyze the image, draw on its training data, and propose repair solutions. The medical and psychiatric implications of such a technology are quite obvious. Researchers have investigated the intersection of AI and radiology for quite some time, but now patients have the ability to upload their radiologic images directly into ChatGPT and ask for interpretation. (OpenAI has obviously built safeguards into the technology.) The uses of this paradigm-shifting technology are endless. Individuals can upload pictures of their outfits and ask ChatGPT to opine on how fashionable they are and to suggest areas for improvement. Furthermore, users are taking pictures of their meals and asking for nutritional input.

Given ChatGPT’s number of weekly users, many of your patients are likely using these technologies and seeing AI-generated content, so it is important to understand them and discuss their pros and cons with patients. Generative AI could worsen patients’ anxieties, phobias, and delusions, especially in an election year with high stakes. It is of the utmost importance to counsel patients on the safe use of this technology and on the possibility of encountering dubious and even harmful images online.

However, we must take care not to be doomsdayers who criticize all technological development as anathema. There are benefits to the incredible ability to generate novel images with simple keystrokes. Research has consistently demonstrated that creativity can be an engine for happiness, and generative AI has now created an entire realm of creativity that was unimaginable just a few years ago. Patients can utilize such technologies to alleviate boredom, process stressful moments, and self-actualize in artistic pursuits.

Similarly, whether taking a picture of your fridge to ask for healthy recipes or taking a picture of a broken bike to ask how to fix it, it is clear that AI models that can process visual media will also benefit many individuals. It is our job as psychiatrists to stay up to date on how our patients are using the technology and to stay vigilant about its inherent risks as well as its perceived benefits. Psychiatrists can begin by using the technology themselves, speaking to their patients about it, and researching the best ways this technology can improve mental health. If we aren’t taking part in the conversation, it will happen without our input. ■