Development and Validation of a Computerized-Adaptive Test for PTSD (P-CAT)

Abstract

Objective:

Methods:

Results:

Conclusions:

Methods

Overview

Participants

Calibration sample.

Sample for field test validation.

Calibration Measures

Traumatic exposure screen.

PTSD item bank.

Validation Measures

Procedures

Item calibration.

Field test item validation.

Data Analysis

P-CAT Development and Psychometric Properties

Validation

Results

Sample Characteristics

| Characteristic | Calibration sample (N=1,085), N | % | Validation sample (N=203), N | % |
|---|---|---|---|---|
| Age | | | | |
| 18–30 | 109 | 10 | 29 | 14 |
| 31–45 | 386 | 36 | 44 | 22 |
| 46–60 | 246 | 23 | 72 | 35 |
| ≥61 | 344 | 32 | 58 | 29 |
| Gender | | | | |
| Male | 851 | 78 | 161 | 79 |
| Female | 234 | 22 | 42 | 21 |
| Race<sup>a</sup> | | | | |
| White | 821 | 76 | 147 | 72 |
| African American | 91 | 8 | 37 | 18 |
| Other nonwhite | 88 | 8 | 11 | 5 |
| Latino ethnicity | 78 | 7 | 15 | 7 |
| Education | | | | |
| Less than high school | 35 | 3 | 0 | — |
| High school or GED | 213 | 20 | 31 | 15 |
| Some college | 501 | 46 | 114 | 56 |
| Bachelor’s degree or higher | 335 | 31 | 58 | 29 |
| Employment<sup>a</sup> | | | | |
| Employed | 573 | 53 | 69 | 34 |
| Student | 63 | 6 | 24 | 12 |
| Homemaker | 46 | 4 | 5 | 2 |
| Unemployed or disabled | 233 | 21 | 97 | 48 |
| Retired | 305 | 28 | 52 | 26 |
| Self-reported diagnosis<sup>a</sup> | | | | |
| PTSD | 237 | 22 | 103 | 51 |
| Other mental health condition<sup>b</sup> | 404 | 37 | 135 | 67 |
| No mental health condition | 646 | 60 | 51 | 25 |

Factor Analysis and Item Calibration

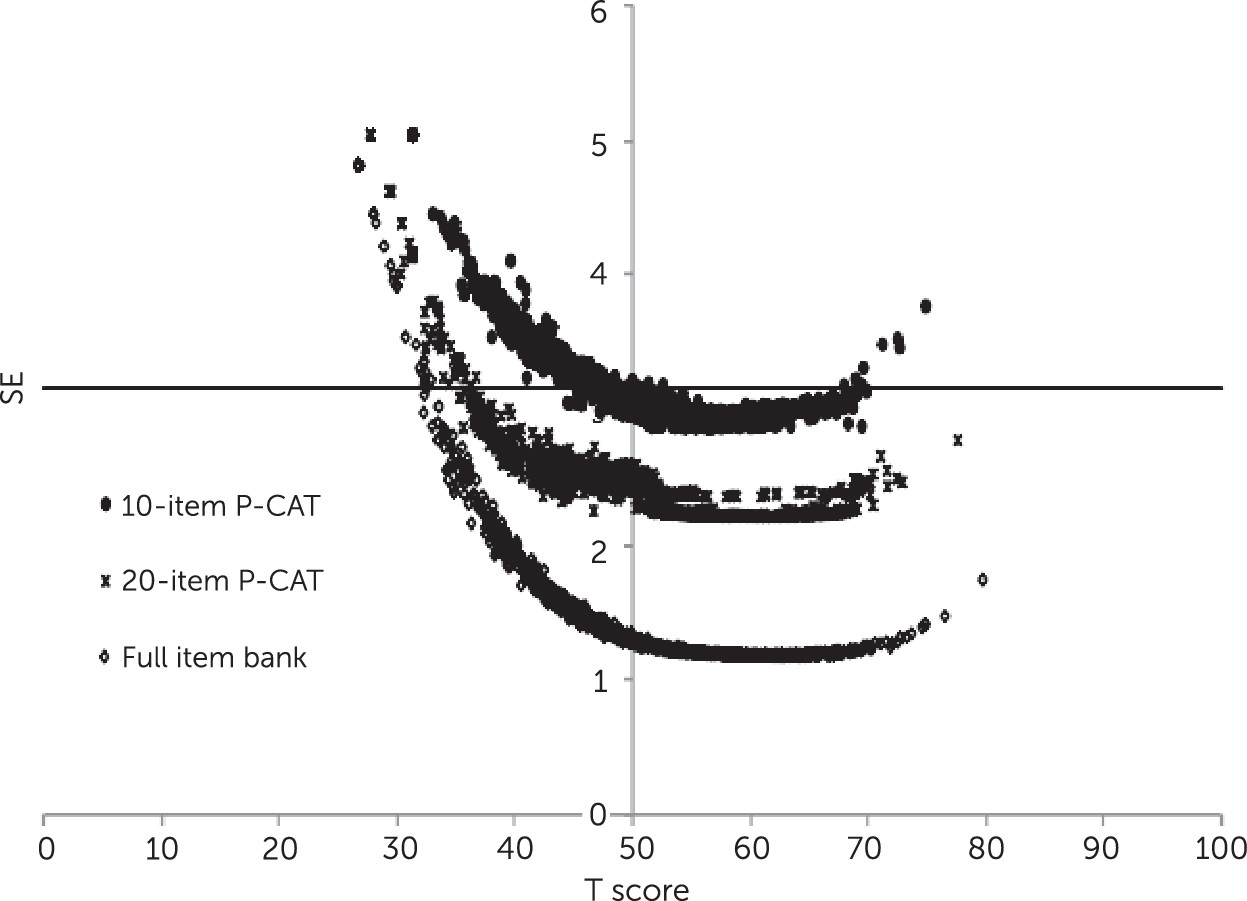

P-CAT Scoring and CAT Simulations

Concurrent and Discriminant Validity

| P-CAT total and domain | PTSD (N=91), M | SD | Other mental health condition (N=60), M | SD | No mental health condition (N=52), M | SD | F<sup>b</sup> |
|---|---|---|---|---|---|---|---|
| Total | 62.98 | 4.41 | 57.17 | 7.39 | 52.93 | 7.82 | 43.75 |
| Reexperiencing | 63.29 | 4.79 | 57.09 | 7.70 | 52.78 | 8.11 | 43.73 |
| Avoidance | 62.19 | 4.13 | 57.44 | 6.68 | 53.85 | 7.83 | 33.07 |
| Negative mood-cognitions | 62.49 | 4.47 | 56.99 | 7.54 | 52.09 | 7.65 | 45.64 |
| Arousal | 62.71 | 4.24 | 56.55 | 7.36 | 52.58 | 8.19 | 44.41 |
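The F values in this table appear to come from a one-way analysis of variance across the three diagnostic groups; footnote b, which defines the test, is not reproduced in this section, so that reading is an assumption. The Python sketch below recomputes F from the published group sizes, means, and SDs alone, assuming the SDs are sample SDs (n - 1 denominator). The names `GroupSummary` and `anova_f_from_summary` are hypothetical helpers, not part of any P-CAT software. Because the inputs are rounded to two decimals, the recomputed total-score F (about 43.7) only approximates the reported 43.75.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupSummary:
    """Published summary statistics for one group (hypothetical helper type)."""
    n: int
    mean: float
    sd: float


def anova_f_from_summary(groups):
    """One-way ANOVA F statistic from group sizes, means, and sample SDs.

    Assumes each SD uses the n - 1 denominator.
    Returns (F, df_between, df_within).
    """
    k = len(groups)
    n_total = sum(g.n for g in groups)
    grand_mean = sum(g.n * g.mean for g in groups) / n_total
    # Between-group sum of squares: size-weighted squared deviations of group means.
    ss_between = sum(g.n * (g.mean - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares recovered from each group's sample variance.
    ss_within = sum((g.n - 1) * g.sd ** 2 for g in groups)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# P-CAT total score by self-reported diagnostic group (values from the table above).
total_score_groups = [
    GroupSummary(n=91, mean=62.98, sd=4.41),  # PTSD
    GroupSummary(n=60, mean=57.17, sd=7.39),  # Other mental health condition
    GroupSummary(n=52, mean=52.93, sd=7.82),  # No mental health condition
]

f_stat, df_b, df_w = anova_f_from_summary(total_score_groups)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

With two groups the same calculation equals the squared pooled-variance t statistic; for example, the PTSD diagnosis yes (N=129) versus no (N=71) total-score summaries give roughly 138.5, close to the value reported in the next table.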

| P-CAT total and domain | PTSD diagnosis, yes (N=129), M | SD | PTSD diagnosis, no (N=71), M | SD | F<sup>b,c</sup> | PTSD screen, positive (N=140), M | SD | PTSD screen, negative (N=63), M | SD | F<sup>b,d</sup> |
|---|---|---|---|---|---|---|---|---|---|---|
| Total | 62.18 | 5.10 | 52.04 | 6.97 | 138.5 | 62.35 | 4.86 | 50.55 | 6.07 | 218.1 |
| Reexperiencing | 62.26 | 5.67 | 52.09 | 7.31 | 119.5 | 62.54 | 5.28 | 50.38 | 6.37 | 202.3 |
| Avoidance | 61.55 | 4.70 | 53.17 | 7.16 | 99.0 | 61.65 | 4.40 | 51.98 | 6.97 | 143.0 |
| Negative mood-cognitions | 61.77 | 5.24 | 51.39 | 6.76 | 145.4 | 61.85 | 5.04 | 50.09 | 6.09 | 206.9 |
| Arousal | 61.80 | 5.15 | 51.58 | 7.06 | 137.7 | 61.98 | 4.93 | 50.10 | 6.19 | 214.1 |

Sensitivity and Specificity

Example of P-CAT Administration

| Respondent and item | Domain | Response | Score<sup>a</sup> | SE |
|---|---|---|---|---|
| Low symptom severity | | | | |
| I felt upset when I was reminded of the trauma | Reexperiencing | Never | 45.3 | .66 |
| I had sleep problems | Arousal | Sometimes | 46.9 | .52 |
| I avoided situations that might remind me of something terrible that happened to me | Avoidance | Never | 46.1 | .48 |
| I felt distant or cut off from people | Negative mood-cognitions | Never | 44.9 | .44 |
| I found myself remembering bad things that happened to me | Reexperiencing | Rarely | 45.9 | .36 |
| I lost interest in social activities | Negative mood-cognitions | Never | 47.0 | .36 |
| I felt jumpy or easily startled | Arousal | Rarely | 47.0 | .31 |
| I had bad dreams or nightmares about the trauma | Reexperiencing | Never | 46.9 | .30 |
| I felt that if someone pushed me too far, I would become angry | Arousal | Rarely | 47.1 | .28 |
| I had flashbacks (sudden, vivid, distracting memories) of the trauma | Reexperiencing | Never | 47.0 | .28 |
| I had trouble concentrating | Arousal | Rarely | 47.4 | .26 |
| I felt that I had no future | Negative mood-cognitions | Never | 47.3 | .26 |
| High symptom severity | | | | |
| I felt upset when I was reminded of the trauma | Reexperiencing | Often | 62.5 | .50 |
| I had sleep problems | Arousal | Often | 62.1 | .45 |
| I tried to avoid activities, people, or places that reminded me of the traumatic event | Avoidance | Often | 62.7 | .37 |
| I lost interest in social activities | Negative mood-cognitions | Sometimes | 61.9 | .33 |
| I had flashbacks (sudden, vivid, distracting memories) of the trauma | Reexperiencing | Sometimes | 61.7 | .31 |
| I felt emotionally numb | Negative mood-cognitions | Often | 62.5 | .30 |
| Memories of the trauma kept entering my mind | Reexperiencing | Sometimes | 62.3 | .29 |
| I felt distant or cut off from people | Negative mood-cognitions | Often | 62.5 | .28 |
| I had bad dreams about terrible things that have happened to me | Reexperiencing | Sometimes | 62.4 | .28 |
| I felt jumpy or easily startled | Arousal | Often | 63.0 | .25 |
| Any reminder brought back feelings about the trauma | Reexperiencing | Sometimes | 63.4 | .24 |
| I felt that I had no future | Negative mood-cognitions | Rarely | 63.3 | .24 |

Discussion

Conclusions

Acknowledgments

Footnote

References
