The need for digital literacy training has become more apparent because of COVID-19 and increased reliance on technology in all facets of care. Although access to technology remains an issue for some, the second digital divide, in knowledge, skills, and confidence, is now greater than the first. Ensuring that all patients are able to engage with digital health is a matter of access to and equity in health care. In this report, we explore a digital literacy program for patients with mental illness who were receiving care in either a community mental health center or an inpatient psychiatric unit.

As more health care services are offered virtually, digital literacy has become recognized as a social determinant of health (1). Patients with limited digital literacy access telemedicine services at lower rates compared with patients with greater digital literacy (2). The issue is pressing in the mental health field because telehealth and virtual visits are expanding and will likely become a permanent facet of care as a result of changes sparked by COVID-19 (3). The ability to access digital health is a critical issue for patients with serious mental illness, who often need the most services but on average have less education than people without this condition and may experience cognitive impairment.

Access to digital health for patients with serious mental illness is most limited by digital literacy. Although computers are often associated with telepsychiatry, mobile devices such as smartphones are the primary modality through which patients access digital health services (4,5). It is already well established that people with mental illness own smartphones at rates nearly as high as those of the general population (6,7). This trend continues today. For example, a 2021 study of digital skills among people with schizophrenia and bipolar disorder showed that more than 85% of participants owned a digital device; however, 42% lacked the foundational skills to use those devices, as measured by the essential digital skills framework (8).

This lack of digital literacy has increasingly been recognized as a primary barrier to obtaining mental health services. Despite higher clinical needs due to the COVID-19 pandemic, reports suggest that individuals with schizophrenia are now attending fewer appointments (9); moreover, only 5% of Americans surveyed were connected to mental health services for the first time during the pandemic (10). Although access to care is a complex and multifaceted issue, gaps in technology literacy contribute greatly to disparities in care (11).

In an attempt to close this gap, our team has been offering digital literacy training for people with mental disorders, since before COVID-19, through a program called Digital Outreach for Obtaining Resources and Skills (DOORS) (12). DOORS offers 8 weeks of group-based digital skills training, with the goal of teaching participants skills using their smartphones (13). During COVID-19, the program expanded, and we created an online version (https://skills.digitalpsych.org).

We sought to further improve DOORS by researching its impact on participants, with the primary goal of assessing changes in self-reported digital literacy and the exploratory aim of assessing changes in self-reported functional skills. We also sought to assess whether learning digital literacy skills was associated with transdiagnostic improvements in problem solving, feelings of control, anxiety, and mood-related symptoms. Thus, in this report, we explore how targeted digital skills training affects functional and clinical outcomes.

Methods

DOORS was offered at in-person group sessions led by our team of trained digital navigators, who completed a 10-hour training (14) to ensure that they were able to facilitate and lead DOORS groups effectively.

All DOORS sessions contributing data to this report were conducted in facilities in Boston from July to November 2021. The program was offered in two settings: outpatient community mental health centers, known as “clubhouses,” and an inpatient psychiatric unit (IPU). Participants receiving treatment in the clubhouses (N=113) received the traditional 8-week curriculum, whereas participants in the IPU (N=74) received a modified curriculum consisting of a single lesson (on app evaluation), which was repeated weekly because of the high rate of patient turnover due to discharges. Clubhouse DOORS sessions were 90 minutes long, whereas IPU sessions were 45 minutes long to accommodate the unit’s workflow. In accordance with guidance from site leaders at the community and inpatient sites, no personally identifiable information was collected. The Beth Israel Deaconess Medical Center Institutional Review Board approved the study with verbal consent, given that the survey was anonymous and gathered no personal information (e.g., race or sex) other than age.

Past iterations of DOORS have focused on either wellness goals or functional outcomes (12,13). We adapted these survey tools to assess changes in skill acquisition, confidence, knowledge, and mental health–related outcomes (see the online supplement to this report). The survey questions that we used to measure mental health–related clinical changes were informed by scales used in a study of a single-session intervention (15). These scales measured social functioning, negative thought patterns, hope, mood, problem solving, and anxiety, with the goal of assessing symptoms as well as potential underlying factors related to symptoms (e.g., problem solving). Participants were instructed to answer the clinical survey questions on the basis of their current state, using a scale from 1 (strongly disagree or not at all) to 10 (strongly agree or a lot). Functional survey questions, rated on the same scale, were adapted from our previous survey measurement tool (13).

Pre- and postintervention survey data were analyzed with descriptive statistics and two-tailed t tests. To our knowledge, there is no gold standard, clear standard, or validated metric for measuring digital literacy proficiency among people with serious mental illness (defined here as a diagnosis of schizophrenia, bipolar disorder, or major depressive disorder), so our team set thresholds on the basis of our own research with self-reported scales. We defined digital literacy skill deficiency as a score less than 50% of the mean, sufficiency as a score within 1 SD of the deficiency threshold, and proficiency as a score more than 1 SD above the deficiency threshold.
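The report does not state which t test variant was used. Because the surveys were anonymous and the pre- and postintervention samples differ in size (e.g., 113 vs. 87 clubhouse surveys), responses cannot be paired, so an unpaired test is the natural reading. The Python sketch below assumes Welch's unequal-variance t test; the function name `welch_t` and the toy scores are hypothetical. It returns the t statistic and the Welch–Satterthwaite degrees of freedom. The two-tailed p-value is the probability of a t value at least this extreme in either tail, which `scipy.stats.ttest_ind(post, pre, equal_var=False)` computes directly.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(pre: list[float], post: list[float]) -> tuple[float, float]:
    """Welch's t statistic (post minus pre) and Welch-Satterthwaite df.

    Assumption: pre and post surveys are treated as independent samples,
    since anonymous responses cannot be matched to the same participant.
    """
    n1, n2 = len(pre), len(post)
    se1_sq = variance(pre) / n1   # squared standard error of the pre mean
    se2_sq = variance(post) / n2  # squared standard error of the post mean
    t = (mean(post) - mean(pre)) / sqrt(se1_sq + se2_sq)
    df = (se1_sq + se2_sq) ** 2 / (
        se1_sq ** 2 / (n1 - 1) + se2_sq ** 2 / (n2 - 1)
    )
    return t, df


# Hypothetical 1-10 ratings for one survey item, not study data.
print(welch_t([1, 2, 3, 4, 5], [3, 4, 5, 6, 7]))  # (2.0, 8.0)
```

Welch's form is used here rather than Student's pooled-variance test because it does not assume equal variances in the two survey waves; with unequal group sizes that assumption matters more.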

Results

A total of 113 preintervention surveys and 87 postintervention surveys were collected from the clubhouse sessions, whereas 74 preintervention surveys and 52 postintervention surveys were collected from the IPU sessions. Most participants in the clubhouse cohort were in the age group of 45–54 years, whereas most participants in the IPU cohort were in the age group of 35–44 years.

Participants in the IPU group self-reported significantly higher initial anxiety, depression, and stress scores compared with the clubhouse group (p<0.05). However, differences in answers to initial clinical survey questions regarding secondary control, problem solving, and internal motivation did not reach statistical significance.

Overall, there were no statistically significant pre- to postsession differences in any clinical outcome in either the clubhouse or the IPU group. Nonetheless, as shown in the online supplement, clubhouse participants reported feeling more motivated to use their smartphone as part of their recovery, better able to solve problems, and more optimistic. Survey results from participants in the IPU cohort revealed an average increase in optimism. Participants in both the clubhouse and IPU groups had average decreases in anxiety, anhedonia, and the feeling that things are outside of their control. Further details on pre- and postintervention average survey scores for these clinical outcome variables in each setting are available in the online supplement. The greatest difference in score changes between the IPU and clubhouse groups was seen in questions regarding stress and problem solving (online supplement).

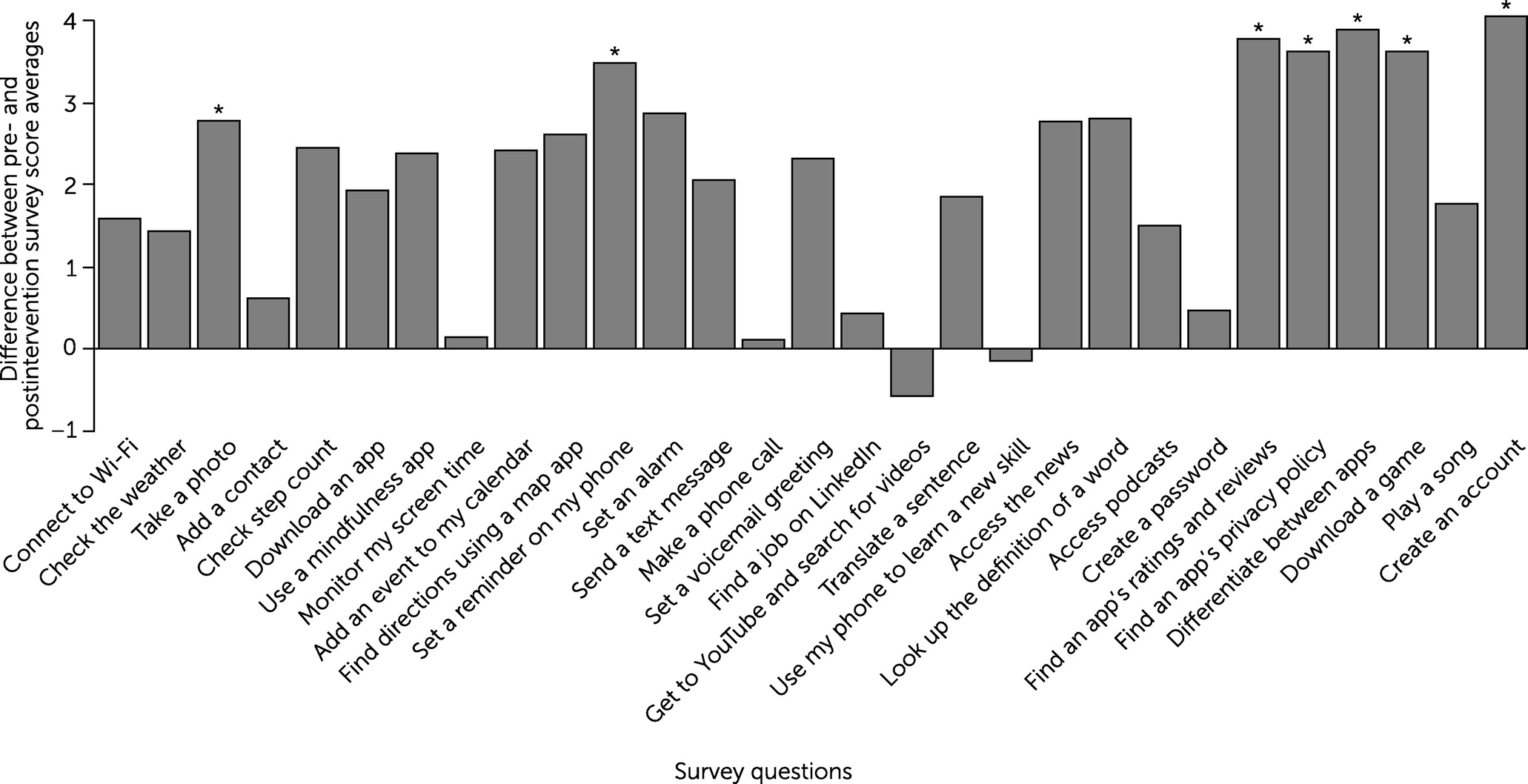

Clubhouse participants responded to 3–4 unique functional survey questions at each of the eight sessions. Changes between pre- and postintervention survey score averages for this population are shown in Figure 1. The average pre- and postintervention survey scores for the statistically significant functional survey questions are available in the online supplement. Of the 29 functional survey questions measuring changes in digital skills literacy, 27 showed overall improvements, and seven of these 27 improvements were statistically significant (p<0.05). Session 7 of the curriculum, which teaches participants how to navigate smartphones safely, elicited more improvement in functional skills than any other session. In that session, three skills showed statistically significant change scores: the ability to “find an app’s ratings and reviews,” “find an app’s privacy policy,” and “differentiate between apps that protect my data and apps that do not.”

Clubhouse participants initially scored poorly (i.e., displayed deficiencies) on 24 (83%) of the 29 functional skills taught, underscoring the urgent need for digital skills training to build the core competencies required to integrate technology into care. After the clubhouse participants received our digital literacy curriculum, 25 (86%) of the 29 functional skills were assessed as sufficient; of those 25 skills, 12 (48%) were assessed as proficient.
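Applying the Methods thresholds to per-item averages can be sketched in Python as follows. The report states the rule only in words, so the numeric deficiency threshold and SD are left as hypothetical parameters (`cutoff`, `sd`), and the banding (below the threshold is deficient, up to 1 SD above it is sufficient, beyond that is proficient) is our reading, not the authors' code. Because the text counts the 12 proficient skills among the 25 "sufficient" ones, the sketch also reports an "at least sufficient" total. The `share` helper reproduces the whole-number percentages reported above.

```python
from collections import Counter


def classify(item_mean: float, cutoff: float, sd: float) -> str:
    """Label one skill item's average score on the 1-10 scale.

    Assumption: `cutoff` (deficiency threshold) and `sd` are hypothetical
    inputs; the report does not publish their numeric values.
    """
    if item_mean < cutoff:
        return "deficient"
    if item_mean <= cutoff + sd:  # within 1 SD of the deficiency threshold
        return "sufficient"
    return "proficient"  # more than 1 SD above the deficiency threshold


def summarize(item_means: list[float], cutoff: float, sd: float) -> dict[str, int]:
    """Count skill items per category, plus the 'at least sufficient' total."""
    counts = Counter(classify(m, cutoff, sd) for m in item_means)
    summary = {k: counts[k] for k in ("deficient", "sufficient", "proficient")}
    summary["at_least_sufficient"] = summary["sufficient"] + summary["proficient"]
    return summary


def share(part: int, whole: int) -> int:
    """Whole-number percentage, as reported in the Results."""
    return round(100 * part / whole)


# Hypothetical item means and thresholds, not study data.
print(summarize([3.0, 5.5, 7.5], cutoff=5.0, sd=2.0))
# {'deficient': 1, 'sufficient': 1, 'proficient': 1, 'at_least_sufficient': 2}
print(share(24, 29), share(25, 29), share(12, 25))  # 83 86 48
```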

IPU participants, however, showed no deficiencies in functional skills: all preintervention survey score averages were at least 6.5. Although overall improvements were seen between pre- and postintervention functional survey scores, no statistically significant differences were observed, as shown in a table available in the online supplement.

Discussion

As the need for digital literacy training for people with mental disorders increases, programs such as DOORS offer a ready-to-use solution. Our results suggest that DOORS can improve functional digital literacy skills among patients with serious mental illness and patients receiving treatment in an IPU. Although our results do not support the notion that DOORS can confer clinical benefit as a single-session intervention, they do suggest the program’s feasibility, with trends that should be explored in studies with greater power. In future studies, researchers should consider other designs, because the immediate pre-post design of the current study made it difficult to measure improvements in clinical outcomes.

Our results highlight the importance of digital literacy training. Clubhouse participants demonstrated substantial deficiencies in their initial digital skills knowledge. Given the flexible nature of DOORS, we can use these results to adapt the program to make it more effective. Our results also suggest that some functional skills, such as adding a contact or making a telephone call, may be too simple and self-evident to be worth teaching to most people. Omitting a skill from the program, however, presents a dilemma, because any single skill may be the most important one to a particular individual, and such a preference cannot be captured in averages. One solution we plan to explore is a separate “key to DOORS” course that would teach only the most basic and fundamental skills to those who need them; the standard DOORS curriculum would then teach only more advanced skills, such as downloading apps.

Our findings regarding the functional skills of IPU participants must be interpreted differently because we taught one session (vs. eight for the clubhouse group) in a repeated fashion to match the flow of patients on and off the unit. These IPU participants had high preintervention average survey scores for all functional skills, so their lack of improvement may in part be related to a ceiling effect. This possibility suggests that teaching more advanced skills than those taught in the clubhouse group sessions may be appropriate. However, patients’ short lengths of stay and frequent inability to attend an entire session pose challenges to the program’s effectiveness in the IPU setting.

Some results are more challenging to understand. For example, certain survey results revealed a decrease in reported digital skill comfort and knowledge after an educational session. Although we cannot determine which factors caused this decrease in patients’ self-reported perception of their digital skill set, potential factors include an initial overestimation of skills, challenges with group learning, and external disturbances that pulled participants away from the session. This unexpected finding highlights the need for better assessment tools for digital literacy beyond the self-report methods used here.

Our study also had several limitations that must be acknowledged. Because the postintervention survey was conducted immediately after the educational session, long-term knowledge retention and real-world impact were not measured. Of note, we developed our own survey scales and thresholds because validated scales and metrics to assess digital literacy among patients with serious mental illness are lacking; the need to create and validate such scales and metrics is evident. Another limitation was the lack of a control group. Participants who left during a group session account for some missing postintervention survey data, which may have affected outcomes. Finally, this study was not designed to be adequately powered to detect differences in outcomes.

Despite these limitations, our approach has several strengths. The material to run DOORS groups is publicly available and easy to customize. The real-world use of DOORS reported in this study, even amid the challenges of the COVID-19 pandemic, suggests that the program is flexible enough to be offered in diverse settings. In future iterations of this study, we aim to add control groups that will complete the curriculum online to compare the utility of this delivery modality with that of in-person sessions.

Conclusions

The DOORS digital literacy curriculum can help to ensure that all patients are able to engage with digital health by reducing the digital divide, and it can improve functional skills among people with serious mental illness receiving treatment in community mental health settings.