In recent years evidence-based care and the implementation of evidence-based guidelines have been increasingly emphasized as standards of care and as cornerstones for improving the quality and effectiveness of mental health care. In this context "evidence" is interpreted as knowledge generated by developing an intervention, testing its efficacy in randomized trials in controlled research settings, modifying it somewhat for "real-world" settings and patient populations (1,2,3), and then conducting effectiveness studies (4,5,6,7). Once this work is published in peer-reviewed journals, it is considered "evidence."

Considering the substantial resources required to undertake these lengthy yet critical research processes, it is unlikely that adequate funding and research capacity will ever be available to subject all valuable interventions to investigations of efficacy and effectiveness. Perhaps more importantly, even when interventions have been shown to be both clinically effective and cost-effective (6,8,9), they are often not adopted widely, and often are not sustained beyond the period of external funding even within the very systems in which they were tested and found to be effective.

Although it is important that researchers continue to develop new interventions and test these interventions' efficacy and effectiveness, it may be possible to generate valuable evidence by using other approaches that are more likely to be adopted and sustained in routine practice (10). Sustainability has been associated with decision-making processes that are participatory, equitable, and accountable. Consequently, factors related to sustainability include compatibility of the innovation with clients' needs and resources, a sense of ownership of the innovation (11,12), collaboration (13), adequate resources, and user-friendly communication and evaluation (14).

All these factors suggest that providers' involvement at the grassroots level may enhance program sustainability. However, evidence-based interventions are often seen as top-down impositions by clinicians who are expected to adopt and sustain them but who do not have an active role in their development and evaluation. In addition, practitioners often rightly question whether or not the "evidence" generated in research studies conducted elsewhere is applicable to their own practices, because their local circumstances may differ significantly from those in research studies (15,16).

Duan and colleagues (16) recently argued that another approach to generating clinical evidence might be to "harvest" new and innovative ideas from clinicians. They suggested that frontline clinicians be engaged in developing and evaluating clinical innovations on the basis of their clinical experience. Similar in many ways to strategies adopted in "participatory" research or "community-participatory" research in the public health field (17), this bottom-up approach for generating clinical evidence has the advantage of actively engaging providers at the outset of the research process, thereby enhancing the relevance of the evidence generated, and perhaps also enhancing program sustainability. The bottom-up approach would not replace the conventional top-down approach but would occur as a parallel process.

Many top-down approaches for translation and dissemination of interventions of known effectiveness have allowed for modifications and have involved clinicians in making these modifications (18,19,20,21). Because they seek local input and allow some adaptation to local conditions, these efforts are similar to the bottom-up approach described here. However, the bottom-up approach is fundamentally different from translation or dissemination approaches in that the interventions themselves are proposed by nonresearcher clinicians and are carried out by clinicians with the support of researchers. Also, the two approaches represent opposite ends of a spectrum: standardization (fidelity to a specified model) is emphasized in translation and dissemination models, whereas local preferences (models designed for the local system and culture) are emphasized in the bottom-up approach. To illustrate this point, consider the case of hamburgers. Consumers who buy their hamburgers at McDonald's can rely on obtaining a standardized product. However, local hamburger stands may produce tastier hamburgers. The primary trade-off between these two options is that standardization creates predictable products, whereas adaptation results in local creativity rather than predictability.

A bottom-up approach may hold the promise of a more equitable partnership between researchers and clinicians. Indeed, one of the key features and challenges of the bottom-up approach is that services researchers will be required to assume a different role. Rather than leading with their own predetermined interventions, services researchers will be required to serve as facilitators and collaborators with clinicians. They will do so by bringing their research and evaluation skills to assist clinicians in testing their own interventions. Also, if a bottom-up approach is successful, clinicians are likely to become more familiar with research and evaluation methods.

To illustrate an exploratory effort to develop and implement the bottom-up approach, this article describes a program undertaken by the South Central Mental Illness Research, Education, and Clinical Center (MIRECC), a research and education center funded by the Veterans Health Administration (VHA) within the South Central VHA network. The goals of this program, called the clinical partnership program, are to harvest intervention ideas from frontline clinicians, to see these interventions realized and evaluated with assistance from services researchers, and to gain and retain support of the mental health leadership for this process. The program seeks to build the evaluation and research capacity of frontline providers and to enhance the capacity of services researchers to share power and work in a collaborative style with frontline providers. Such partnerships may be critical if the intervention is to be sustained over time (22,23).

Setting

The South Central VHA network encompasses all or parts of Louisiana, Arkansas, Oklahoma, Alabama, Mississippi, Florida, Texas, and Missouri. This network serves the largest population of any of the 21 VHA networks and includes the largest percentage of veterans who live at or below the poverty level. About 43 percent of these veterans live in nonmetropolitan areas.

Within this network, ten VHA medical centers and 30 freestanding clinics offer mental health care, provided by more than 1,000 specialty mental health clinicians (24). Mental health care for the network as a whole is coordinated by the mental health product line manager in consultation with the product line advisory council. This council consists of the administrative leaders from each of the ten VHA medical centers. The South Central MIRECC is a separately funded program that works directly with the mental health product line manager to promote research and provide educational opportunities.

One advantage of conducting demonstration projects in VHA settings is that the VHA routinely maintains large administrative databases, both locally and nationally (25), and the South Central network has recently created a user-friendly data warehouse that integrates a number of local sources of administrative data, such as patient utilization, provider, and pharmacy data (unpublished data, Bates J, 2002). Although these data have decided limitations (like most administrative data, they consist primarily of utilization measures), the data source is an inexpensive tool available to assist in evaluation efforts.

Goals of the program

The clinical partnership program was designed with four process goals in mind: to involve top leadership in developing the program's clinical themes; to encourage clinicians to propose specific projects relevant to the theme to be implemented at their sites; to review, select, and fund two to five projects; and to provide assistance to clinicians in developing study designs and conducting evaluations. How we addressed each of these goals is described below.

Developing a theme

We initiated this program by involving the top mental health leadership at each of the ten facilities in this VHA network. In large organizations, engaging top leadership in a "decision-making coalition" at the outset is often critical to the success of an entire project (26). We wanted to protect providers' latitude in choosing and proposing an intervention, but we also wanted a cohesive theme that the leadership could agree was relevant to current issues in the network. We asked each member of the product line advisory council to identify areas of mental health needs or concerns that could be addressed in a clinical partnership project. The ten council members generated a list of topics, most of which were contained within the theme of "improving treatment adherence." This theme not only has the support of the network leadership but also is broad enough to allow clinicians latitude in developing innovative interventions. Adherence was broadly defined as following specific treatments: for example, taking medications, attending scheduled outpatient visits, or remaining engaged in care.

Encouraging clinician participation

To encourage the participation of clinicians, we first designed a user-friendly Request for Proposals (RFP). Experience and familiarity with research design and procedures vary greatly across clinicians in the network. Even though it was critical to require certain design and methodologic features, we recognized that many design and methodologic concepts that are familiar to researchers might not be familiar to clinicians. With this in mind, we carefully designed our RFP and application forms by using language that was as simple and straightforward as possible. To further encourage clinicians' participation, we offered the assistance of services researchers as consultants for the design and evaluation plan of the individual projects. The application required that the applicants include the following features in their proposed projects: objectives of the intervention, a description of a target population for the intervention, listings of inclusion and exclusion criteria for intervention participants, an estimation of the number of participants, identification of a comparison group that would not receive the intervention but that would be assessed, and an evaluation plan including specific process or outcome measures, or both. The RFP was disseminated through the mental health leadership and through the MIRECC electronic newsletter.

Reviewing, selecting, and funding projects

We subjected all applications to three stages of review. First, we conducted a scientific review with the help of three services researchers with national reputations. These reviewers scored each project for scientific merit by using a specified format. Second, three clinical and administrative leaders from the product line advisory council scored each application in terms of its relevance to network mental health goals and its perceived potential to improve care. To reach the pool of finalists, applications had to score in the top half of each of these two reviews. A final selection committee consisting of both MIRECC members and mental health product line leaders made the final selection. This three-tiered approach attempted to balance clinical relevance with scientific rigor. Wherever possible, we favored applications from medical centers whose clinicians had little or no research experience.
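The screening rule for the first two review stages can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the scoring procedure the reviewers actually used: it assumes that higher scores are better, that "top half" means scoring at or above the median of a review, and that each application receives a single combined score per review. The function name `finalists` and the application labels and scores are hypothetical.

```python
from statistics import median


def finalists(scientific, relevance):
    """Return application IDs in the top half of BOTH reviews.

    Assumptions (not stated in the article): higher scores are better,
    and "top half" means at or above the median score for that review.
    """
    def top_half(scores):
        cutoff = median(scores.values())
        return {app for app, score in scores.items() if score >= cutoff}

    # An application advances only if it clears the cutoff in both reviews.
    return sorted(top_half(scientific) & top_half(relevance))


# Hypothetical scores for four made-up applications.
scientific_scores = {"A": 9, "B": 7, "C": 5, "D": 3}
relevance_scores = {"A": 4, "B": 8, "C": 9, "D": 2}

print(finalists(scientific_scores, relevance_scores))
```

In this made-up example only application B advances: A is strong scientifically but falls below the relevance cutoff, and C shows the reverse pattern. Requiring the intersection rather than an average keeps either review from compensating for a weak score on the other.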

We received nine applications from seven medical centers and funded four of these projects. Two of the four projects selected will be led by clinicians who have some research experience, and two will be conducted by clinicians who have very little familiarity with research or evaluation techniques. The four projects, all of which are now under way, proposed four quite different interventions. The first involves enhancing attendance at mental health specialty appointments by using a modified collaborative care model (9,27,28) and a nurse care manager. The second project involves use of brief motivational enhancement in a group format (29) for veterans with posttraumatic stress disorder (30). The third involves the creation of a peer-support "buddy system" in which patients who are enrolled in the clinic provide orientation to new patients (31). Finally, the fourth project involves the use of a combination of motivational interviewing and contingency management for veterans who have primary substance use disorders. Each of these projects, although often based on the work of others, can be viewed as a pilot project of a new intervention designed to be responsive to local needs and systems of care.

With the assistance of network leadership, we were able to secure approximately $1 million to initiate this program. We expected each intervention to last a minimum of two years. Applicants were allowed to request up to two full-time-equivalent (FTE) personnel to conduct the intervention. At least one-half of an FTE was required to assist with data collection and management. MIRECC research and evaluation staff will support data analysis for the evaluation, either as consultants in cases in which providers are able to conduct their own data analyses or as primary data analysts in cases in which providers do not have such capability. The local facilities contributed the time of the local principal investigators.

Facilitating projects

To assist with the MIRECC clinical partnership program, we hired approximately 1.5 FTEs of "facilitators"—individuals who were experienced in research methods and evaluation techniques. Each of the four projects has been assigned a facilitator who will have regular contact with the clinicians and will work directly with frontline providers. Facilitators were selected partly on the basis of their interpersonal skills, ability to convey information effectively, and interest in participatory research. We did not develop a "cookbook" approach to facilitation, because we expected that flexibility would be essential.

Project facilitators visit the intervention sites periodically and, when necessary, create linkages with MIRECC research personnel who have specific expertise needed for the pilot project. The role of the facilitators is broad, varying from project to project depending on the knowledge, skills, and research background of the local clinical team. In general, the facilitator's job is to ensure the integrity of the design and the evaluation components of the project. In some cases, facilitators are working initially with the clinicians to refine their proposed interventions or protocols. For clinicians who have more research experience—for example, those who are familiar with the development and implementation of protocols and with standardized clinical measures—the facilitators may focus on identifying research expertise needed to analyze data. In all cases facilitators assist with the selection of assessment instruments and approaches to collect assessment data for both intervention and comparison groups.

Although this program aims to encourage clinicians to become more familiar with empirical assessments, we do not expect that clinicians will emerge from this program able to evaluate interventions entirely on their own. Rather, we view participation in the project as a first step toward becoming more skilled in research or evaluation methods. Bringing together the clinical and research worlds such that most clinicians can independently learn in a systematic and organized way from their own empirical data will require long-term changes in education that are beyond the scope of this program.

Program evaluation

Two levels of evaluation will be conducted. The first is a quantitative evaluation of the outcomes of the interventions, as outlined in each applicant's original proposal. As noted above, facilitators will assist the clinicians with this evaluation. A second, qualitative evaluation will also be conducted to determine whether our goal of creating a participatory relationship between clinicians and researchers is achieved for the program as a whole and for each individual project. It is worth pointing out that we do not know the extent to which the outcomes of the two evaluations might be related. For example, it is possible that an individual pilot project would be judged as being clinically effective in the quantitative evaluation with or without its having achieved the "participatory" goals that will be assessed with qualitative methods.

Clinicians, administrators, and facilitators for each project will serve as subjects for the qualitative evaluation. We will utilize approaches used in participatory research for this evaluation (

32,

33,

34). In particular, we have chosen to model our qualitative evaluation after the approach proposed by Naylor and colleagues (

34) to assess the extent to which a "participatory process" actually occurs in a program (

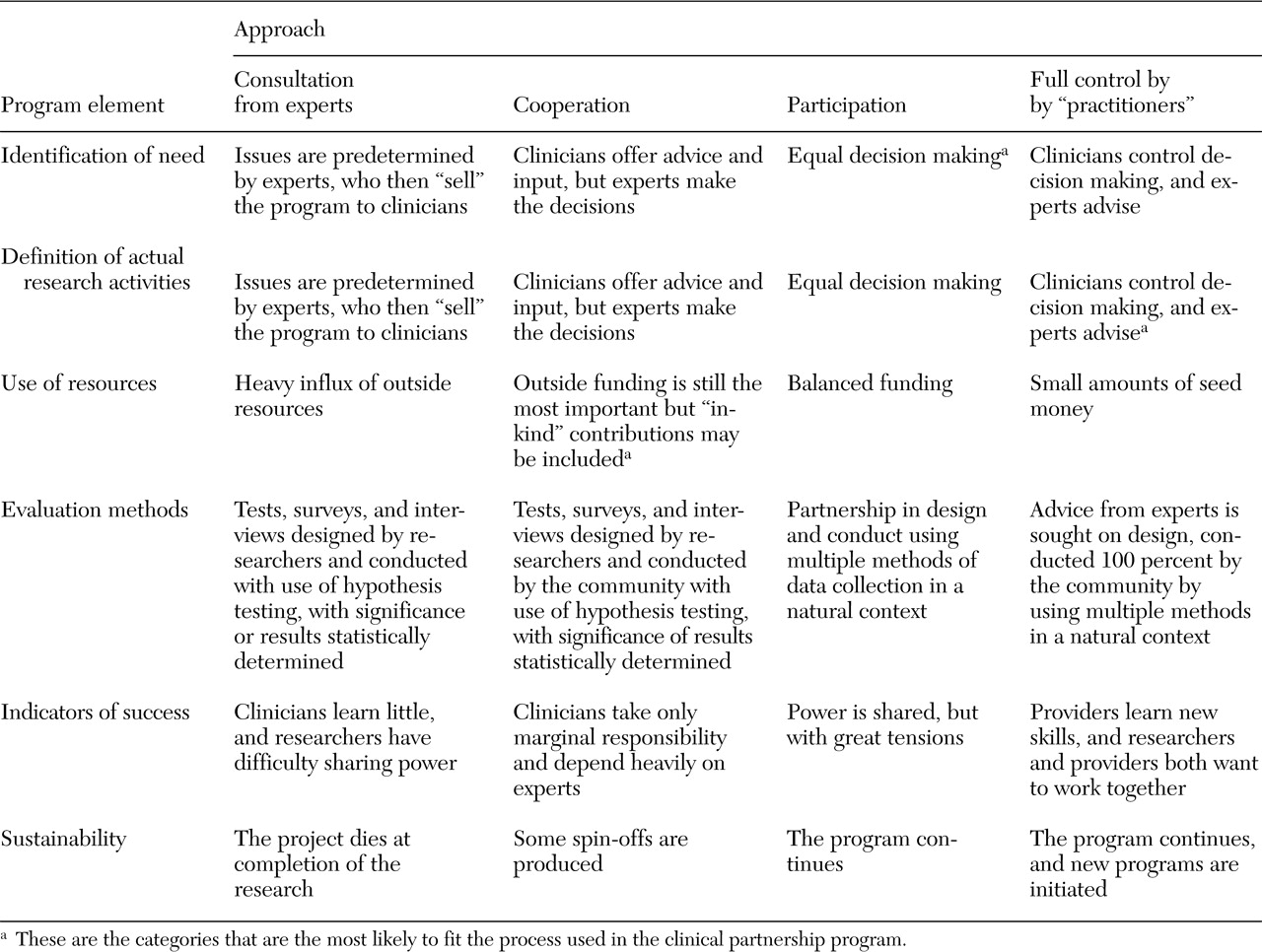

Table 1). This approach involves the assessment of six elements: identification of a need or a theme, definition of actual activities, origin of resources, evaluation methods, indicators of success, and sustainability.

For each project, these elements will be rated along a continuum developed to characterize the range of processes from being fully expert driven to being fully controlled by participants. We expect some features to be common to the program as a whole. For example, themes for the clinical partnership program were identified as described above, and this process would most likely fall under "participation" in Table 1. Although there is some variation among individual projects in terms of the "in-kind" contribution made, the program as a whole is probably best characterized by the "cooperation" approach in the table. We expect considerable variability in other dimensions, such as success indicators and degree of sustainability.
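One way to record such ratings is sketched below in Python. It is a hypothetical data layout, not an instrument from Naylor and colleagues or from this program. The six element names come from the text; the four level names and, in particular, the ordering of "cooperation" before "participation" are assumptions made for illustration, as are the identifiers `Level`, `ELEMENTS`, `rate_project`, and `shared_elements` and the two example projects.

```python
from enum import IntEnum


class Level(IntEnum):
    """Assumed continuum; only the two endpoints are described in the text."""
    EXPERT_DRIVEN = 0
    COOPERATION = 1      # position on the continuum is an assumption
    PARTICIPATION = 2    # position on the continuum is an assumption
    PARTICIPANT_CONTROLLED = 3


# The six elements named in the evaluation approach.
ELEMENTS = (
    "need_or_theme",
    "activities",
    "resources",
    "evaluation_methods",
    "success_indicators",
    "sustainability",
)


def rate_project(ratings):
    """Validate that every element has a rating and return a complete record."""
    missing = [e for e in ELEMENTS if e not in ratings]
    if missing:
        raise ValueError(f"unrated elements: {missing}")
    return {e: Level(ratings[e]) for e in ELEMENTS}


def shared_elements(projects):
    """List elements that received the same rating in every project."""
    return [e for e in ELEMENTS if len({p[e] for p in projects}) == 1]


# Two made-up projects that share program-wide ratings for theme and resources.
common = {"need_or_theme": Level.PARTICIPATION, "resources": Level.COOPERATION}
project_1 = rate_project({**common, "activities": 3, "evaluation_methods": 1,
                          "success_indicators": 2, "sustainability": 1})
project_2 = rate_project({**common, "activities": 2, "evaluation_methods": 1,
                          "success_indicators": 1, "sustainability": 3})

print(shared_elements([project_1, project_2]))
```

Storing one rating per element for each project makes it straightforward to separate features common to the program as a whole from those that vary among individual projects.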

In participatory research in public health, multiple stakeholder groups are often included as participants. Our definition of "participants," by contrast, is relatively narrow, including only mental health administrators, frontline providers, and project facilitators. Other participatory research conducted in mental health settings has included consumers as participants (35). Although there are numerous examples involving large numbers of participant groups or stakeholders in public health research, very few such studies can be found in the mental health literature.

Discussion and conclusions

This article briefly describes the concepts and procedures guiding the South Central MIRECC clinical partnership program. Although the promotion of care on the basis of evidence from the literature has undoubtedly been a major contribution to improving clinical care, there are both theoretical and practical limitations to this approach. Perhaps most disturbing is that, even when evidence-based interventions demonstrate clinical effectiveness and cost-effectiveness, these interventions are, with few exceptions (36), rarely sustained or adopted. The bottom-up approach for the production of evidence might result in the development of more relevant interventions and a greater likelihood of sustainability. However, this approach will require that experts from academic centers be willing and able to share power and decision making.

In any case, we are not arguing for the superiority of one approach over another but, rather, for the coexistence of these two approaches. To assess the extent to which such bottom-up programs truly foster collaboration, evaluation approaches developed in the public health sector, particularly those involving community-participatory research, may be useful. The success of the MIRECC clinical partnership program will depend not only on the outcome of each individual pilot project but also on the degree to which true collaboration between clinicians and researchers is achieved and on the extent to which these programs are sustained in local settings.