Without question, the Diagnostic and Statistical Manual of Mental Disorders (DSM)¹ functions as the bible of psychiatric diagnosis. DSM establishes the scope of psychopathology; it demarcates the boundary between the merely eccentric and the truly ill. Its power and influence can hardly be overstated, for how we choose to define an illness necessarily shapes the very questions that we ask in furthering our knowledge about that illness.

DSM-IV classifies a belief as a delusion if and only if it meets four conditions. These require that the ideation be

1. False.
2. Based on a faulty inference from reality (i.e., not simply false because of ignorance or wrong information).
3. Sustained in spite of clear evidence to the contrary.
4. Discordant with beliefs accepted by one's culture.

The negation of any one of these four elements is sufficient to rule out a diagnosis of delusional ideation. The first three conditions are intuitive; the last condition, we will argue below, is not at all obvious and makes evident some of our most fundamental assumptions regarding the nature of psychiatric illness. Thus, even if the condition of cultural nonconformity turns out to be necessary—and we will make a case here that it is necessary—it is so for nontrivial reasons that deserve critical examination.

We first present below some of the problems raised by the “cultural condition.” We then offer a preliminary response to this critique within the standard framework of DSM and point out the shortcomings of this response. We next present a theory of delusions that is based on Johnson-Laird's experimental studies on what we call “conceptual model restriction” in normal cognition²,³ and that addresses the cultural condition from within the context of model restriction. Finally, we present some case examples to examine whether, in fact, model restriction is useful in diagnosing delusional ideation, particularly in cases that would be considered ambiguous by current DSM criteria.

PROBLEMS WITH CULTURAL SUBJECTIVITY

The most striking feature of the cultural condition is that it is expressly relativistic. The only symptom of delusional ideation is the set of beliefs that the patient holds. However, a pattern of beliefs presented by one person may be evidence for mental illness, whereas the identical pattern of beliefs presented by another person within a different cultural context may not be. On a superficial level, this makes it hard to conceive of delusional ideation as a medical condition that is reducible to a physiological pathology. After all, a heart murmur is evidence of a heart abnormality whether it is diagnosed in New York or in Bangladesh. Although other facts about a patient may be relevant in deciding on a diagnosis in medicine (a prior myocardial infarction, for instance, in the case of the murmur), they are so only in the context of symptom underdetermination. That is, when a particular symptom may be evidence for more than one underlying cause, the symptom by itself presents insufficient information to distinguish among the possible causes.

The distinction between symptom underdetermination and symptom relativism is subtle but nonetheless real. The former concerns incomplete information about a pathology; additional information therefore shifts us from one candidate diagnosis to another. The latter concerns not the nature of the diagnosis, but the nature of the actual pathology that underlies that diagnosis. In medicine, a one-to-one correlation between a pathology and its diagnosis is presumed to exist; in other words, one bodily state should not, in principle, produce two different and mutually incompatible diagnoses. A person's neurobiological framework (i.e., actual pathology) presumably is not affected by whether that person lives in Bangladesh or New York. Therefore, a potential paradox in establishing delusions as a medical (versus, say, a sociological) disease emerges if the same person can be diagnosed correctly in different and mutually incompatible ways simply because of his or her social context.

The cultural condition can be defended from the problem of physiological irreducibility in two ways. First, one can argue that the heart murmur example misses the point by presenting a false analogy. What is relevant about diagnosing delusions in Bangladesh versus New York is that the systems of beliefs in which those delusions occur, their context, is different. A direct analogy to the heart murmur case, therefore, would require that the standard heart be different in New York and Bangladesh. If so, it might indeed be difficult (if not theoretically impossible) to characterize a heart in one location as diseased from the vantage point of the other location, since the standard for each is different. Even if one heart is intrinsically less efficient than the other, we would be hard pressed to label as “sick” a characteristic that is the norm. For example, we do not consider the basset hound, an animal of astonishingly inefficient design, to be a “diseased dog,” but rather a separate breed.

A second response to the issue of physiological irreducibility is to suggest that the underlying problem, precisely that to which the delusions are physiologically reducible, causes one to believe in things that are not accepted by one's culture. The fourth condition in the DSM criteria (the cultural condition) is really only a consequence of the third condition (perseverance in the face of disconfirmation) if we make the probabilistic assumption that typically the greater the number of people who endorse a factual belief, the greater the likelihood of its truth. Thus, the very fact that a belief's negation is endorsed by many people should count as “disconfirmation” for that belief. We shall elaborate on this view in a later section.

The second problem with the cultural condition, vagueness, is related to the first. In principle one cannot determine whether a belief conforms to a person's “culture or subculture” unless one can characterize the boundaries of that culture or subculture. Yet there is no precise method for distinguishing a subculture's ideology from a communally held delusional belief. While it is easy to define two categories of beliefs, those that are held by most of the members of a homogeneous culture and those that are held solely by one individual, the vast majority of rationally unjustifiable beliefs fall in between the two. To name just a few interesting examples, consider folie à deux, millennial cults, national hysteria, and most, if not all, organized religions. If DSM can consider the two partners in a folie à deux to share a delusion, then why not a folie à trois? Or a folie à trente, for that matter? If DSM can consider families to share in delusions, then why not several families or entire groups of people who are genetically unrelated but who consider themselves to be family? For that matter, why should the number of people who share a symptom be theoretically relevant at all? The person with the heart murmur still has a heart murmur if his entire town does as well. Although, as we noted, a characteristic may very well not be classified as a disease if it is pervasive enough to be considered as occurring “by default” (as with the unfortunate basset hound), the characteristic obviously exists, independent of diagnosis.

On the other hand, if some objective measure of minimal functioning can indeed be defined (“working” versus “not working,” for instance), then a characteristic can be classified in terms of whether it impedes or promotes functioning. We will argue here that such an objective measure exists in the case of belief formation. Just as we can plausibly define a “functional heart” as one that permits an organism to go out and perform the activities that are required to sustain that organism, we can plausibly define a “functional rational structure” as one that preserves truth-value enough of the time to allow the individual to 1) survive physically and 2) communicate socially—the two being usually linked.

These two desiderata, physical survival and social communication, are objective even though they admit of degree. Because they are objective criteria, we would expect them to fall outside of the domain of culture. It will be objected that social communication is itself social, of course, and thus might well vary according to culture. Physical survival might also vary, for that matter, since the physical requirements of different cultures are different; consider a primitive agrarian culture versus one in which the majority of people sit in offices. Yet this is to miss the point. What we are looking at in the case of rational structure is something much more fundamental than the content of beliefs; it is the ability to think (regardless of particular content) in a manner that preserves truth-value. “Preservation of truth-value” may be defined as the ability of individuals to draw conclusions that are consistently true (i.e., that correspond with “reality” in the purely factual sense) from premises that are also true.

The connection between thinking in ways that preserve truth-value and our two desiderata of minimal functioning (physical survival and social communication) is commonsensical. Individuals who have true knowledge that they are in front of a hole are less likely to fall into it. Having true knowledge of the fact that winter will inevitably follow summer, one can plan ahead and stockpile food. By extension, a group of individuals who have true knowledge will have a shared frame of reference that will allow them to communicate. This point is less obvious because we can easily imagine a large group of people who have shared false knowledge that acts as a shared frame of reference, but this, as we shall argue later, is a special case. The relevant premise here is that one can be wrong in a very large number of ways, but one can be right in only one way. For example, if my telephone is black, then there is only one true statement about its color: My telephone is black. However, there are a very large number of statements about its color that are false: My telephone is white, My telephone is brown, My telephone is chartreuse, etc. Since the false statements are all equally wrong, we would not expect the choice of one to be favored over another. Therefore, the probability that more than one person would choose the same wrong statement is exceedingly small because the possibilities from among which one can choose are very large, and with each additional person the probability that everyone has chosen the same wrong statement becomes smaller still. We can imagine that if each person in a society had a different view about the color of the telephone (and a different view about everything else as well), the members of that society would find it impossible to communicate with one another. Therefore, mutual and consistent access to truth provides the most efficient manner of communicating.
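The telephone argument can be made concrete with a short calculation. This is a minimal sketch, assuming (as the paragraph does) that each person independently picks one of k equally likely false statements; the function name and the parameters `k` and `n` are our own illustrative choices. The probability that all n people land on the same wrong statement is k · (1/k)^n = (1/k)^(n−1), so it shrinks by a further factor of k with every additional person.

```python
from fractions import Fraction


def prob_all_share_same_false_belief(k: int, n: int) -> Fraction:
    """Probability that n people, each choosing independently and uniformly
    among k equally wrong statements, all choose the same one.

    Illustrative assumption: choices are independent and uniform.
    """
    if k < 1 or n < 1:
        raise ValueError("k and n must be positive")
    # Any of the k wrong statements can be the shared one, and each of the
    # n people must independently pick it with probability 1/k.
    return k * Fraction(1, k) ** n


# With 10 possible wrong colors for the telephone:
for people in (1, 2, 3, 5):
    print(people, prob_all_share_same_false_belief(10, people))
```

With ten wrong colors, two people agree on the same wrong color with probability 1/10, three with 1/100, and five with 1/10000; exact fractions are used so the shrinking factor is visible directly.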

Because “what is factually true” exists independently of the cultural vantage point from which one stands, access to that knowledge is not culturally relative. If there is a hole somewhere in Bangladesh, it is just as much a hole whether I am a native or a tourist. Knowledge of its existence will prevent me from falling into it whether I am a native or a tourist. We will be able to discuss its dangers only if we all recognize it as a hole, and that recognition is independent of our cultural backgrounds. Far from being culturally relativistic, recognition of truth is perhaps the only thing that cuts across cultures and allows us to see each other as common creatures of rationality. Cultures may have different values, but presumably they do not have different formal systems of logic.

PRAGMATIC MOTIVATIONS FOR CULTURAL SUBJECTIVITY

Perhaps this is too abstract. The likely implicit motivation for the manner in which delusions are defined in DSM is twofold: the need to identify pathology in the face of widespread nonrational or false beliefs (diseases being, by definition, low in prevalence), and an emphasis on functionality. The cultural condition presumably addresses both. Or does it?

Consider two cases. The first is a student who believes that he will perform well on his exams only if he is in possession of a certain “lucky” rabbit's foot during these exams. Since he owns the rabbit's foot, bringing it to his exams does not present a problem. He continues to hold this belief even though his performance on exams varies in direct correspondence to the amount of time that he spends studying in preparation for these exams and is uncorrelated with whether the rabbit's foot is in his possession. Instances in which he has the rabbit's foot and does well reinforce his belief, but instances in which he does well without the rabbit's foot or does poorly with the rabbit's foot are discounted as insignificant aberrations.

The second case concerns another student, who believes that she will perform well on her exams only if she sees nine parked red cars on the day that she takes her exam. This belief causes problems for the student, for she often spends hours before her exams running around trying to find the nine parked red cars and sometimes is unable to find them even after an exhaustive search. This student also continues to hold on to her belief in spite of the fact that her performance varies in direct correspondence to the amount of time that she spends studying in preparation for these exams and is uncorrelated with whether she has, in fact, found the nine parked red cars. Again, instances in which she has found the nine parked red cars and does well reinforce her belief, while instances in which she cannot find them but does well, or finds them but does poorly, are perceived as insignificant aberrations.

Both students hold false beliefs in spite of evidence to the contrary. The first student's false belief is not uncommon, while the second student's belief is considered extremely odd. The first student's belief improves his level of functioning by providing an easily attainable (false) sense of security. The second student's belief impairs her level of functioning by making the object of (false) security beyond her control. So, is one case delusional and one not delusional because of considerations of cultural acceptance, or because of considerations of functionality? This is a misleading question, for it assumes that in only one case are the beliefs delusional. We will argue that by the criteria for model restriction given below, both students are delusional, but that one of the delusions is more common and socially acceptable precisely because it does not have a significant impact on functioning. Although considerations of functionality may determine who receives treatment on a purely practical basis, the underlying mechanism of immunity to contradictory evidence remains the same. Presumably the more immune one is to contradictory evidence, the more extreme one's delusions will be, and therefore the more likely they are to affect one's functioning and be culturally unacceptable. But to look solely at cultural acceptance and functioning is to mistake the symptom for the cause. We will look at cases of culturally shared rationally unjustifiable belief after a brief presentation of a model of delusions that is based on immunity to contradictory evidence (“model restriction”) in the following section. We will then refine the formulation of the cultural condition in terms of that model.

A NEW DEFINITION OF DELUSIONAL IDEATION IN TERMS OF MODEL RESTRICTION

Johnson-Laird's experimental studies on the cognitive functioning of healthy individuals suggest that rather than starting from a few premises and deriving conclusions in a systematic deductive manner, most people take in information, form mental models (hypotheses) with respect to that information, and then gradually amend (“restrict”) their models through interaction with contradictory evidence.²,³ Thus, if a little boy has an experience with a cat who scratches, he may form the hypothesis that all cats scratch and that the only animals that scratch are cats. Later experience with affectionate cats and surly hamsters may cause him to amend his model: some cats scratch and some do not; some hamsters scratch also. In this manner, our ideas are constantly affected by the increasing information to which we are exposed. As the boy grows up, he presumably incorporates into his rational structure more fundamental principles that act as a filter on new experiences: just because one x does y doesn't mean that all x do y, for instance. These principles alert him to the likely presence of counterexamples to his original hypothesis. Johnson-Laird's work has shown that simply being presented with counterexamples to one's theories is not sufficient for reasoning well; counterexamples must first be recognized for what they are.
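The restriction step in the boy's example can be sketched as a filter over his generalizations. This is a deliberately minimal illustration, assuming each hypothesis is a universal claim checked against individual observations; it is not Johnson-Laird's own formalism, and `Observation`, `restrict`, and the `recognizes_counterexamples` flag are hypothetical names. The flag stands in for the failure to recognize counterexamples that the text associates with delusions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Observation:
    animal: str
    scratched: bool


# A generalization pairs a name with a test that returns False when an
# observation contradicts it (i.e., when the observation is a counterexample).
Generalization = tuple[str, Callable[[Observation], bool]]

initial_model: list[Generalization] = [
    ("all cats scratch", lambda o: o.animal != "cat" or o.scratched),
    ("only cats scratch", lambda o: not o.scratched or o.animal == "cat"),
]


def restrict(model: list[Generalization],
             observations: list[Observation],
             recognizes_counterexamples: bool = True) -> list[Generalization]:
    """Drop every generalization contradicted by at least one observation.

    If counterexamples are not recognized, the model comes back unchanged,
    however much contrary experience has accumulated.
    """
    if not recognizes_counterexamples:
        return list(model)
    return [(name, test) for name, test in model
            if all(test(o) for o in observations)]


experience = [
    Observation("cat", True),       # the first, scratching cat
    Observation("cat", False),      # an affectionate cat
    Observation("hamster", True),   # a surly hamster
]

print([name for name, _ in restrict(initial_model, experience)])
print([name for name, _ in restrict(initial_model, experience, False)])
```

With recognition switched on, both universal claims are discarded, which leaves room for the weaker model the boy adopts ("some cats scratch and some do not"); with it switched off, the original model survives untouched.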

Delusions may be viewed as the natural consequence of a failure to distinguish conceptual relevance: irrelevant information, in the form of disconnected experiences, is taken to be relevant in a manner that suggests false causal connections, whereas relevant information, in the form of counterexamples, is ignored. Interestingly, there is a substantial amount of evidence that individuals with schizophrenia have a similar problem in distinguishing between relevant and irrelevant sensory stimuli.⁴,⁵ The classic case of sensory filtering is the “cocktail party phenomenon,” in which one can focus on the conversation of one's partner amid the loud drone of a hundred other conversations. It could be hypothesized that the tendency to view disparate and unconnected pieces of information as all equally important, gathered within the context of a heightened sense of alarm, is responsible for the sort of conspiratorial delusions that are so common among paranoid patients. Garety⁶ has suggested a similar model of belief formation.

The advantage of considering delusions within the context of model restriction is that it provides a coherent framework from which the first three DSM conditions often follow (falsity, faulty inference, immunity to contradictory evidence) and preserves the core of the fourth condition without the relativism and vagueness that characterize its current formulation. We would argue that the consequences of the cultural condition are intuitively maintained by making a distinction between beliefs that are arational and beliefs that are irrational. “Arational” beliefs are those that are held without rational justification, in the absence of information to support them. “Irrational” beliefs are those that are held in spite of evidence to the contrary; the problem here is not lack of information, but rather refusal to acknowledge it. The little boy who initially concludes that “all cats scratch and the only animals that scratch are cats” on the basis of his limited experience is not delusional in making this hypothesis. The world does not necessarily lay all of its relevant premises before us at once. He is only doing what all of us do regularly in the absence of full information. But the boy who grows up into a man and still maintains that “the only animals that scratch are cats,” in spite of experience with long-clawed surly hamsters, is delusional, by our definition. It may be a delusion that has little or no impact on his ability to function (in which case his delusion may never be diagnosed as such), or it may be totally incapacitating. It may be a delusion that is his alone, or it may be a delusion shared by a whole slew of cat-hating activists. But both of these judgments (as to functioning and social commonality) merely function as modifiers to the diagnosis; they do not define the diagnosis itself.

Because model restriction provides an objective set of criteria for diagnosis, it is independent of cultural setting. On the other hand, it also neatly excludes a whole region of arational belief that is largely culturally influenced. Common religious beliefs such as the “second coming,” God, or heaven cannot be considered “delusional” because they admit of no counterexample: future events haven't yet occurred and thus cannot be contradicted, and nonphysical entities cannot be contradicted by physical evidence. Belief in the power of prayer, for instance, may be labeled as either arational or irrational, depending upon the ground rules that one uses to characterize possible responses to the prayer. Obviously, if the answer to a prayer must always be “yes,” then any instance in which one prays for x and x does not occur must constitute a counterexample. Because it is often the case that people who pray do not receive what they prayed for, the possibility of having “no” as an answer to their prayers permits them to go on praying without having to face disconfirmatory evidence. Therefore, in evaluating an individual who is markedly religious (who fervently prays, for instance) for evidence of delusional ideation, it is necessary to determine whether or not the individual's maintenance of his or her religious beliefs requires ignoring empirical counterexamples to those beliefs (in this case, by answering the question, “is ‘no’ a possible answer to prayer?”).

A potential difficulty is that many “bizarre” delusions (i.e., delusions defined by DSM as those whose content is not physically possible) would also seem to evade diagnosis on similar grounds. For the patient who considers himself to be an ambassador from Alpha Centauri, what counts as a relevant counterexample? And for the patient who believes that his thoughts are being controlled by an outside source, what counts as a relevant counterexample? For many delusions, the intuitive problem is not that we have reason to believe that they are false, but rather that we have no reason to believe that they are true. Yet these would appear to be “arational” and thus, by our earlier description, would not be considered “delusional.”

This is the case only if we neglect contextual restrictors. We suggested earlier that the little boy, as he grows older and more experienced at having his hypotheses contradicted, might eventually filter his hypotheses through a set of conceptual rules that automatically restrict his models. These rules would include probabilistic maxims of the following type: the future is likely to resemble the past; information that is gathered from many sources is more likely to be true than information gathered from only one source; one should give higher credence to events of intrinsically higher probability and lower credence to events of intrinsically lower probability; some coincidental events should be judged as being random rather than causal because they share no other relevant features; the likelihood of events occurring randomly is inversely proportional to the number of times that they occur coincidentally; and so on. The fact that these are identified as “common sense” is hardly accidental. They form the basis of our communal experience of the world. These contextual premises themselves provide preliminary counterexamples and serve to restrict models at perhaps an even more fundamental level than do empirical counterexamples. The hypothesized failure of delusional patients to make adequate use of contextual restrictors is consistent both with studies that have shown a tendency for them to “jump to conclusions” in forming judgments⁷,⁸ and with patients' focus on present information to the exclusion of relevant past experience.⁹

Contextual restrictors may provide the needed distinction between bizarre delusions and commonly shared arational beliefs. This is because arational beliefs that run counter to the opinions of one's peers are prima facie examples of immunity to (contextual) counterexample, since the experiences and conclusions of others are a valuable tool in preserving truth-value. Bizarre delusions typically violate not only the criterion of “peer review,” but other contextual restrictions that govern causation, regularity, and randomness as well. What is perhaps most critical here is not just that patients' beliefs are of low probability, but that the patients' “investment” in these beliefs is disproportionate to their probability of being true. Thus, a woman who believes in the Virgin Mary's potential to perform miracles is not necessarily delusional if her arational belief does not prevent her from taking steps to act on her own behalf. The same believer, on the other hand, might very well be recognized as delusional if she gives away all of her possessions and takes to sea in a leaky boat in the expectation that she will be saved. Of course, these cases are not limited to the religious domain; a similar example might be drawn with an optimistic buyer of lottery tickets. It is important to note that probabilistic considerations are relevant only in the case of arational beliefs; irrational beliefs are always, on this model, considered to be delusions, regardless of functional or social considerations. Rational false beliefs, on the other hand, are always considered to be nondelusional, again regardless of functional or social considerations. Rational false beliefs are defined here as those that would have been likely to be true given contextual premises, and for which no counterexample has yet been presented. An example would be the parent who believes that his or her child is in school because the child is usually in school during the day, unaware of the fact that the child has been taken to a hospital.
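The classification rules in this paragraph can be summarized as a small decision procedure. The sketch below is our own illustrative encoding, not a reconstruction of Table 1; the names `Basis` and `is_delusional`, and the numeric `investment` and `probability` parameters, are hypothetical simplifications of qualitative clinical judgments for which the text gives no scale. Note that the truth of the belief is deliberately not an input.

```python
from enum import Enum


class Basis(Enum):
    """How a belief is held, following the text's three-way distinction."""
    RATIONAL = "rational"      # likely given contextual premises; no counterexample yet presented
    ARATIONAL = "arational"    # held without information to support it
    IRRATIONAL = "irrational"  # held in spite of evidence to the contrary


def is_delusional(basis: Basis,
                  violates_contextual_restrictors: bool = False,
                  investment: float = 0.0,
                  probability: float = 1.0) -> bool:
    """Hypothetical encoding of the classification rules described above.

    `investment` and `probability` are illustrative values in [0, 1].
    """
    if basis is Basis.IRRATIONAL:
        return True   # always a delusion, regardless of function or culture
    if basis is Basis.RATIONAL:
        return False  # rational false beliefs are never delusions
    # Arational beliefs: only probabilistic (contextual) considerations decide,
    # i.e., a clash with contextual restrictors such as peer opinion, or an
    # investment out of proportion to the belief's probability of being true.
    return violates_contextual_restrictors or investment > probability


# Illustrative cases from the text; the numbers are arbitrary placeholders.
print(is_delusional(Basis.RATIONAL))                                    # parent assuming the child is at school
print(is_delusional(Basis.ARATIONAL, investment=0.1, probability=0.2))  # believer who still acts on her own behalf
print(is_delusional(Basis.ARATIONAL, investment=1.0, probability=0.2))  # believer in the leaky boat
print(is_delusional(Basis.IRRATIONAL))                                  # "only cats scratch," despite the hamsters
```

The four calls print False, False, True, True. Reducing "investment" and "probability" to two comparable numbers is the main simplification here; in practice both would be estimated rather than measured.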

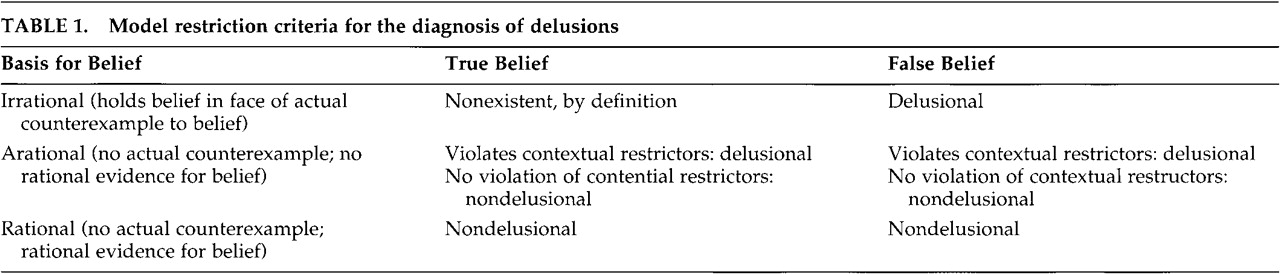

Because assessment of immunity to counterexamples requires two variables that are evaluated with respect to one another (the presence of counterexamples versus the recognition of counterexamples), it follows that the severity of a delusion can be described along that continuum. (The model is set forth in Table 1.) In most circumstances, the degree of immunity is not neatly quantifiable because the presence of counterexamples must be estimated. However, intuitive distinctions can still be made. The woman who believes that her neighbor hates her is presumably more delusional if she bases her judgment on two interactions consistent with her belief and fifteen interactions inconsistent with her belief than if she bases her judgment on two consistent interactions and three inconsistent interactions. The critical issue is how much information must be ignored in order to maintain the belief, where the amount of information one must ignore is directly proportional to the severity of the delusion.
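The neighbor example can be expressed as a simple count. This is a minimal sketch, assuming severity is indexed by the number of counterexamples that are present but not recognized, per the text's claim of direct proportionality; the function name is hypothetical, and the count leaves out the two supporting interactions because they are identical in both scenarios.

```python
def ignored_counterexamples(present: int, recognized: int) -> int:
    """Counterexamples that must be ignored in order to keep a belief.

    Under the text's hypothesis, severity scales directly with this number.
    Both arguments are counts of relevant observations.
    """
    if not 0 <= recognized <= present:
        raise ValueError("need 0 <= recognized <= present")
    return present - recognized


# The neighbor example: she recognizes none of the inconsistent interactions.
severe = ignored_counterexamples(present=15, recognized=0)
milder = ignored_counterexamples(present=3, recognized=0)
print(severe, milder, severe / milder)
```

On this index the first woman ignores 15 counterexamples and the second 3, so the first belief rates five times as severe. Contextual counterexamples would enter the same count, but at a far higher rate, since rules about causation and randomness are tested in almost every situation.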

This “mathematics of counterexamples” would account for our perception that bizarre delusions are symptoms of a more severe pathology than non-bizarre delusions. For any given false belief, the number of actual counterexamples is relatively small because the opportunities for testing the belief are limited by its content. For example, if I believe that my neighbor hates me, then the possibilities for my confronting counterexamples are limited to the number of my possible interactions with that neighbor. On the other hand, contextual counterexamples, being much more general guidelines for how the world works, are found at every turn, and their applicability is not limited by exact content. Contextual rules about causation and randomness, for instance, are reinforced within a wide variety of situations, from the machinations of a job promotion to the catching of a cold. Since the opportunities for counterexample are so much more prevalent, a person who violates contextual restrictors must necessarily ignore a much larger share of the world's available information. Therefore, by our hypothesis, the delusions that such violations produce would predictably be much more severe.

From this viewpoint, then, the distinction between bizarre and non-bizarre delusions is one of degree, rather than one of kind. For both, the underlying mechanism is a failure to adequately restrict one's mental models. For bizarre delusions the failure is more diffuse, encompassing recognition not only of concrete counterexamples to the model, but of contextual ones as well. The hypothesis that bizarre delusions exist as a proper subset of non-bizarre delusions is obviously testable, for it predicts that patients with bizarre delusions will have the cognitive characteristics of patients with non-bizarre delusions, while the converse will not be true.

CONCLUSIONS AND CASE EXAMPLES OF SHARED BELIEFS

It may be objected that this is only a question of redefinition by means of added modifiers, that what we call “delusions that are false and impair functioning,” DSM simply calls “delusions.” Moreover, since generally only people with delusions that are “false and impair functioning” will even be seen for treatment, why not restrict the set, at least for clinical purposes? We have argued and will argue that “delusions” need neither be false nor impair functioning to be the product of pathological reasoning and to appear intuitively deviant. We have emphasized above that broadening the diagnosis to avoid socially relativistic criteria simplifies the task of finding a biological correlate to delusions and gains much-needed precision. Our argument began from the observation that if symptoms of psychosis are to be considered brain-based, then their presence must signify an impairment of cognitive functioning, where “adequate cognitive functioning” can be objectively defined. We have made the claim that such a standard exists. This is the preservation of truth-value, analogous to other medical standards of functioning, in that it makes the survival of the organism primary. We have said that reasoning in the real world cannot be straightforwardly deductive because of the lack of a prespecified set of relevant premises. A persuasive alternative to deductive inference was suggested in the form of Johnson-Laird's theory of model restriction, which gives a large role to recognition of counterexamples in creating true belief systems about the world. We are then able to use a revised theory of model restriction to classify beliefs as being either pathological or nonpathological symptoms by reference to their violation of either actual (i.e., concrete) or contextual (i.e., probabilistic) counterexamples.

This revised diagnostic criterion shifts the focus from whether the belief is true and culturally accepted to the question of how the belief was arrived at and maintained. It is only the latter issue that is dictated by neurobiological function; the objective state of the outside world and of its culture is not. Since we believe that only factors that have biological effects can be relevant in making a medical diagnosis, we believe that the diagnostic criterion suggested above is a useful alternative to the traditional manner of viewing delusions. The usefulness of the cultural condition, in this view, is that it makes evident one of our strongest contextual premises: that beliefs held by many people are more likely to be true than beliefs held by few.

The Trembling Flower (arational; true; reduced functioning). A 19-year-old college student in New York is diagnosed with mania. He believes that his girlfriend, a student at Berkeley, is cheating on him. He states his reason for this belief in the following manner: “The trembling of the petals on this flower prove to me that my lover is betraying me.” The flower in question is a lily that she has given him during a visit. The girlfriend confides privately to the psychiatrist that she is, in fact, having an affair in California with a classmate and that she has not told her boyfriend.

Comments: This case qualifies as a delusion by model-restriction criteria (because of contextual counterexamples), but not by DSM criteria (because the content of the belief is true). This type of example points out the limitations of the DSM requirement that a belief be false and supports our argument that what is pathological about delusional ideation is not the content of the belief, per se, but the method by which it is reached and maintained.

The Practice of Voodoo (irrational; false; uncertain effect on functioning). Voodoo is a culturally accepted religion in the Caribbean Islands, as well as in areas of New York City. A common practice is to attempt to affect a person, either positively or maliciously, by manipulating effigies that contain fragments of the person's clothing, nails, or hair. The type of manipulation done to the effigy is supposed to correspond to the type of benefit or injury done to the person it represents. Because the benefit or injury seldom follows the manipulation, disconfirmation is nearly universal.

Comments: From a simplistic point of view, this case qualifies as a delusion by model-restriction criteria (because of actual counterexamples), but not by DSM criteria (because the belief is shared by a culture). What complicates the matter, however, is that not all members of the culture can be characterized uniformly, since some have personal experience with actual counterexamples while others have only confirmational experience based on biased hearsay. The degree to which individual members of the culture do or do not suffer from delusion would depend on the degree to which they have had to ignore counterexamples in order to maintain their beliefs. DSM's cultural condition ignores this variety of experience. It treats such beliefs as normal by virtue of cultural immunity, whether the subject in question is a witch doctor who has a large amount of personal experience from which to draw conclusions, or a person with no such experience who simply holds the beliefs as part of his or her upbringing.

The God Box (arational; uncertain truth; reduced functioning). A 20-year-old female college student in a city in Maryland was told in her church youth group to create a “God Box.” Whenever she has a problem, she is supposed to write it on a small piece of paper and put it in the God Box. She can then “forget about it and let God take care of it as is His will.”

Comments: Since God presumably works in ways that cannot be predicted, there is no way to disconfirm this hypothesis. It does not flagrantly violate any contextual counterexamples, since the correspondence of passivity with negative outcomes is acceptable (negative outcomes can always be interpreted as “His will”). Therefore, this does not qualify as a delusion by model-restriction criteria, even if it does seem to be pathological for other reasons. DSM's treatment of this case would seem to depend on whether a church group of unspecified size would qualify as a “subculture” or not.

The Family of Opus Dei Holocaust Revisionists (arational; false; uncertain effect on functioning). A 22-year-old man, a successful graphic artist in the suburbs of Washington, DC, believes that Jewish “Elders” fabricated the Holocaust for profit and to cover up the Jews' extermination of ethnic Ukrainians. When asked how he reconciles this belief with the large amount of literature that contradicts his thesis, he points out that Jews control the publishing industry. When asked how he reconciles this belief with the contrary information that he learned in his college history class, he points out that Jews also control academia. He claims to have learned these beliefs from his parents and family. His parents are members of the small Catholic sect Opus Dei and teach in an Opus Dei high school. The family is apparently stockpiling arms in their basement in preparation for a “race war.”

Comments: This case qualifies as a delusion by model-restriction criteria because of contextual counterexamples, given how unlikely such a vast and secret conspiracy is in light of other facts about the way the world works. This example beautifully illustrates the tendency of patients to salvage their beliefs against actual counterexamples at the expense of contextual counterexamples. DSM's treatment of this case would seem to depend on whether the young man's family would qualify as a “subculture” or not.

ACKNOWLEDGMENTS

This work was supported by funding from the National Institute of Mental Health and the National Alliance for Research on Schizophrenia and Depression.