Clinical and experimental evidence suggests that the left hemisphere of the brain is specialized for speech activity and the right hemisphere is specialized for many nonlinguistic functions. Jackson (1) related the hemispheric linguistic differences to differences in cognitive activity, suggesting that the left hemisphere is specialized for analytical organization, while the right hemisphere is adapted for direct associations among stimuli and responses. Modern researchers have substantially generalized this differentiation to encompass a wide range of behaviors in normal subjects (2, 3). Experimental (4–6) and clinical (7, 8) investigators of hemispheric asymmetry appear to agree on the fundamental nature of the processing differences between the two sides of the brain: the left hemisphere is specialized for propositional, analytic, and serial processing of incoming information, while the right hemisphere is more adapted for the perception of appositional, holistic, and synthetic relations.

Up to now, the perception of music has been a well-documented exception to this differentiation. Melodies are composed of an ordered series of pitches, and hence should be processed by the left hemisphere rather than the right. Yet the recognition of simple melodies has been reported to be better in the left ear than the right (9, 10). This finding is prima facie evidence against the functional differentiation of the hemispheres proposed by Jackson; rather, it seems to support the view that the hemispheres are specialized according to stimulus-response modality, with speech in the left, vision and music in the right, and so forth (10, 11). In this report we present evidence that such conclusions are simplistic since they do not consider the different kinds of processing strategies that listeners use as a function of their musical experience (12).

Psychological and musicological analysis of processing strategies resolves the difficulty for a general theory of hemispheric differentiation posed by music perception. It has long been recognized that the perception of melodies can be a gestalt phenomenon. That is, the fact that a melody is composed of a series of isolated tones is not relevant for naive listeners; rather, they focus on the overall melodic contour (13). The view that musically experienced listeners have learned to perceive a melody as an articulated set of relations among components rather than as a whole is suggested directly by Werner (14, p. 54): “In advanced musical apprehension a melody is understood to be made up of single tonal motifs and tones which are distinct elements of the whole construction.” This is consistent with Meyer’s (15) view that recognition of “meaning” in music is a function not only of perception of whole melodic forms but also of concurrent appreciation of the way in which the analyzable components of the whole forms are combined. If a melody is normally treated as a gestalt by musically naive listeners, then the functional account of the differences between the two hemispheres predicts that melodies will be processed predominantly in the right hemisphere for such subjects. It is significant that the investigator who failed to find a superiority of the left ear for melody recognition used college musicians as subjects (16); the subjects in other studies were musically naive (or unclassified).

If music perception is dominant in the right hemisphere only insofar as musical form is treated holistically by naive listeners, then the generalization of Jackson’s proposals about the differential functioning of the two hemispheres can be maintained. To establish this we conducted a study with subjects of varied levels of musical sophistication that required them to attend to both the internal structure of a tone sequence and its overall melodic contour.

We found that musically sophisticated listeners could accurately recognize isolated excerpts from a tone sequence, whereas musically naive listeners could not. However, musically naive people could recognize the entire tone sequences, and did so better when the stimuli were presented in the left ear; musically experienced people recognized the entire sequence better in the right ear. This is the first demonstration of the superiority of the right ear for music and shows that it depends on the listener’s being experienced; it explains the previously reported superiority of the left ear as being due to the use of musically naive subjects, who treat simple melodies as unanalyzed wholes. It is also the first report of ear differences for melodies with monaural stimulation.

We recruited two groups of right-handed subjects (17), 15 to 30 years old, from the New York area; 14 were musically naive listeners, who had less than 3 years of music lessons at least 5 years before the study; 22 were musically experienced (but nonprofessional) listeners, who had at least 4 years of music lessons and were currently playing or singing; each group of subjects was balanced for sex.

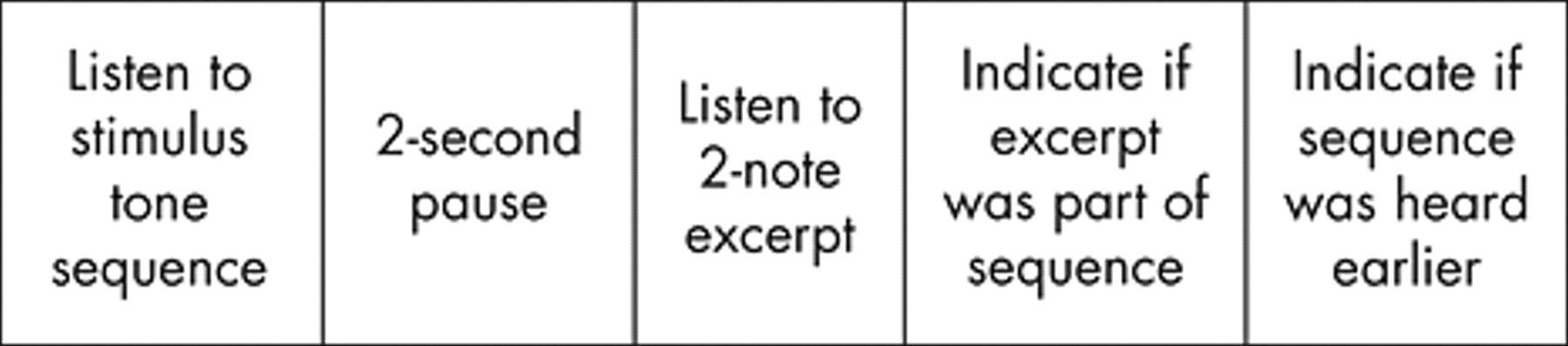

The listener’s task is outlined in Fig. 1. The two-note excerpt recognition task provided a measure of whether the listener could analyze the internal structure of a melody. The sequence recognition task provided a measure of the listener’s ability to discriminate the entire configuration of the tone sequence. Each listener responded to a set of 36 tonal melodies ranging in length from 12 to 18 notes, and a parallel set of materials in which the tone sequences were a rearrangement of the notes in each melody so that the melodic line was disrupted somewhat. A well-tempered 1½-octave scale was used (starting from the note C with a frequency of 256 hertz). Each tone in a melodic sequence was exactly 300 msec long, and was equal in intensity to the other tones. Two seconds after each stimulus melody there was a two-note excerpt; three-fourths of the excerpts were drawn from the stimulus sequence, one-fourth were not. One-fourth of the melodies reoccurred as later stimuli: as the next stimulus, two stimuli later, or three stimuli later.
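As a rough illustration of the stimulus parameters just described, the following sketch computes the pitch frequencies of a 1½-octave scale starting at C = 256 hertz and the duration of a 12- to 18-note sequence of 300-msec tones. It rests on assumptions not stated in the text: "well-tempered" is read as 12-tone equal temperament, every semitone step is listed (the report does not say which steps the melodies used), and tones are taken to follow one another without gaps. The function names are hypothetical.

```python
# Sketch only. Assumption: "well-tempered" is read as 12-tone equal temperament,
# so each semitone step multiplies the frequency by 2 ** (1 / 12).
BASE_HZ = 256.0             # starting note C (from the text)
SEMITONES_PER_OCTAVE = 12   # assumption, see above
TONE_MS = 300               # duration of each tone in msec (from the text)


def scale_frequencies(octaves=1.5, base_hz=BASE_HZ):
    """Return the frequency in hertz of every semitone from base_hz up to
    `octaves` octaves above it, inclusive of both ends."""
    steps = int(round(octaves * SEMITONES_PER_OCTAVE))
    return [base_hz * 2 ** (k / SEMITONES_PER_OCTAVE) for k in range(steps + 1)]


def sequence_duration_s(n_notes, tone_ms=TONE_MS):
    """Return the total duration in seconds of n_notes equal-length tones,
    assuming no silence between tones (not stated in the text)."""
    return n_notes * tone_ms / 1000.0


if __name__ == "__main__":
    freqs = scale_frequencies()
    for step, hz in enumerate(freqs):
        print(f"semitone {step:2d}: {hz:7.1f} Hz")
    print("shortest sequence (12 notes):", sequence_duration_s(12), "s")
    print("longest sequence (18 notes):", sequence_duration_s(18), "s")
```

Under these assumptions the scale spans 19 pitches from 256 hertz to roughly 724 hertz, and each stimulus sequence lasts between 3.6 and 5.4 seconds.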

Subjects were asked to listen to each stimulus sequence, to write down whether the following two-note excerpt was in the stimulus sequence, and then to write down whether they had heard the sequence before in the experiment. The stimuli were played over earphones at a comfortable listening level, either all to the right ear or all to the left ear for each subject. One-half of the subjects in each group heard the 36 melodic sequences first, and then the 36 rearranged sequences, with a rest period between the groups. Before each set of materials there was a recorded set of instructions which included four practice stimuli.

The musically experienced subjects discriminated the presence of the two-note excerpts in both ears (see Table 1) [P < .01 across subjects and across stimuli, on scores corrected for guessing (18)]. No significant differences occurred according to whether the sequence was melodic or rearranged. The musically naive subjects did not discriminate the excerpts in either ear.

All groups of subjects successfully discriminated instances when a sequence was a repetition from instances when it was not. However, this discrimination was better in the right ear for experienced listeners ( P < .01 across subjects and P < .05 across stimuli) and better in the left ear for inexperienced listeners ( P < .025 across subjects and P < .001 across stimuli). These differences were numerically consistent for both melodic and rearranged sequences. Most of the differences between naive and experienced listeners can be attributed to the superior performance of the right ear in experienced listeners ( P < .025 across subjects and P < .025 across stimuli); performance in the left ear does not differ significantly between the two groups of subjects.

Confirming the results of previous studies, the musically naive subjects have a left ear superiority for melody recognition. However, the subjects who are musically sophisticated have a right ear superiority. Our interpretation is that musically sophisticated subjects can organize a melodic sequence in terms of the internal relation of its components. This is supported by the fact that only the experienced listeners could accurately recognize the two-note excerpts as part of the complete stimuli. Dominance of the left hemisphere for such analytic functions would explain dominance of the right ear for melody recognition in experienced listeners: as their capacity for musical analysis increases, the left hemisphere becomes increasingly involved in the processing of music. This raises the possibility that being musically sophisticated has real neurological concomitants, permitting the utilization of a different strategy of musical apprehension that calls on left hemisphere functions.

We did not find a significant right ear superiority in excerpt recognition among experienced listeners. This may be due to the overall difficulty of the task and insensitivity of excerpt recognition as a response measure. Support for this interpretation comes from a more recent study in which we compared the response time for excerpt recognition in boys aged 9 to 13 who sing in a church choir (19) with the response time in musically naive boys. In this study, recognition accuracy did not differ by ear, but response times were faster in the right ear than the left for choirboys. Furthermore, the relative superiority of the right ear in choirboys compared with other boys of the same age increased progressively with experience in the choir.

In sum, our subjects have demonstrated that it is the kind of processing applied to a musical stimulus that can determine which hemisphere is dominant. This means that music perception is now consistent with the generalization suggested initially by Jackson that the left hemisphere is specialized for internal stimulus analysis and the right hemisphere for holistic processing.

Acknowledgments

Reprinted (abstracted/excerpted) with permission from Bever TG and Chiarello RJ. Science 1974; 185:537–539