Consumers, clinicians, administrators, and researchers have expressed concern regarding the lack of stability in the U.S. behavioral health workforce (1,2), although empirical data on the nature of these problems and their relationship to the quality of mental health services are lacking (1). Research from the past few decades indicates that turnover rates in mental health agencies are high, approximately 25%–50% per year (3–9). Turnover can contribute to reduced productivity, financial stress in the organization, fractured relationships with clinical clients, and fragile clinical teams (1,10,11). State mental health authorities and agency personnel are attempting to disseminate and implement complex, evidence-based practices within this difficult human resources landscape (12,13). To our knowledge, only one exploratory study has examined the relationship between team instability and implementation of evidence-based practices (13).

In light of this gap in the literature, this study used data from a large evidence-based practice demonstration project to examine team turnover and its relationship to implementation processes and outcomes. We first describe rates of team turnover during the 24 months of implementation. We then report the quantitative relationship between team turnover and measures of implementation success. Next, we explore the perceived effect of turnover on implementation through analysis of qualitative data gathered from key informants. Finally, we discuss the results of blending qualitative and quantitative data to further elucidate the relationship between team turnover and implementation outcomes.

Methods

Implementation intervention

Fifty-two sites across eight states initiated participation in the project titled Implementing Evidence-Based Practices in Routine Mental Health Settings. The project included an agency- and clinician-level intervention designed to facilitate implementation of five evidence-based practices between 2002 and 2005 (14). Forty-nine teams participated in the intervention for the full 24 months. Reliable turnover data were available for 42 teams, which are the focus of this article.

Integrated dual disorders treatment (14,15), supported employment (15), family psychoeducation (16), assertive community treatment (17), and illness management and recovery (18) were the five evidence-based practices implemented. The intervention included three complementary elements: training materials that aimed to engage interest and teach relevant skills, training on the practices and their implementation, and year-long consultation with a trainer, with occasional follow-up for an additional year. The primary outcome measure of the intervention was fidelity to the evidence-based practice model, scored on a 5-point summary scale. A secondary outcome measure was penetration, the proportion of eligible clients receiving the practice, converted to a 5-point scale. The study was approved by the institutional review board (IRB) at Dartmouth Medical School and by each local site's affiliated IRB. All participants for whom potentially identifying information was collected gave informed consent.

Measures

Turnover. Complete staff turnover data were available for 42 teams. To derive an aggregate team turnover measure, we divided the total number of people leaving the team during the 24-month implementation period by the total number of original team positions. Reasons for staff departures included resignation (57%, N=106), termination (12%, N=23), and intra-agency transfer (29%, N=55). No reason was given in 1.6% (N=3) of the cases. Cases in which turnover occurred because the program closed were excluded.
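The turnover measure can be made concrete with a minimal sketch, assuming nothing beyond the definition above: departures over the 24 months divided by original team positions. The function names and the example team are hypothetical; the departure-reason counts are the ones reported above, and the percentages are computed over the 187 departures they sum to.

```python
from collections import Counter


def team_turnover_rate(departures: int, original_positions: int) -> float:
    """Aggregate 24-month turnover as a percentage of original team positions.

    The rate can exceed 100% when positions turn over more than once.
    """
    if original_positions <= 0:
        raise ValueError("original_positions must be positive")
    return 100.0 * departures / original_positions


def reason_breakdown(reasons: list) -> dict:
    """Percentage of all departures attributed to each reason."""
    counts = Counter(reasons)
    total = sum(counts.values())
    return {reason: 100.0 * n / total for reason, n in counts.items()}


# Hypothetical team: 6 original positions, 7 departures over 24 months.
print(f"{team_turnover_rate(7, 6):.1f}%")  # about 116.7%

# Departure counts reported in the text (program closures already excluded).
reasons = (["resignation"] * 106 + ["termination"] * 23
           + ["transfer"] * 55 + ["not given"] * 3)
for reason, pct in reason_breakdown(reasons).items():
    print(f"{reason}: {pct:.1f}%")
```

Because the denominator is fixed at the original number of positions, a team that loses and replaces the same role several times accumulates turnover above 100%, which is why the Results can report teams beyond that threshold.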

Fidelity. The core components of the five evidence-based practices have been empirically validated through several clinical trials. Before the study began, clearly defined practice models were designated as evidence-based practices through an expert consensus review of the empirical evidence (12). Fidelity, or adherence, to these practice models was the primary intervention outcome. A fidelity instrument was designed for each evidence-based practice to operationalize measurement of the components essential to implementation. Each item was rated on a 5-point scale by two trained raters. Items were averaged to derive a mean score between 1 and 5, with higher values indicating greater fidelity to the evidence-based model. Fidelity was measured every six months for two years.
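The scoring step can be sketched as follows. The source does not say how the two raters' ratings were combined, so this sketch assumes they are averaged item by item before the item scores are averaged; the function name and the ratings are hypothetical.

```python
from statistics import mean


def fidelity_score(rater_a: list, rater_b: list) -> float:
    """Mean fidelity score on the 1-5 scale.

    ASSUMPTION: each item's two ratings are averaged, then the item
    scores are averaged; the text states only that items were averaged.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty item list")
    for rating in rater_a + rater_b:
        if not 1 <= rating <= 5:
            raise ValueError(f"rating {rating} is outside the 1-5 scale")
    item_scores = [(a + b) / 2 for a, b in zip(rater_a, rater_b)]
    return mean(item_scores)


# Hypothetical ratings on a five-item instrument.
print(fidelity_score([4, 5, 3, 4, 2], [4, 4, 3, 5, 2]))  # 3.6
```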

Penetration. Penetration rate was defined as the number of clients served in the specific evidence-based practice divided by either the number of eligible clients at the agency (if systematically tracked) or the estimated number of eligible clients, derived by multiplying the total target population by the corresponding estimated percentage of eligible clients on the basis of the literature. Penetration was scored on a scale of 1 to 5, with higher scores indicating greater penetration of the practice at the agency. A score of 5 indicated that at least 80% of eligible clients were receiving the evidence-based practice; a score of 1 indicated that the agency was providing the practice to no more than 20% of eligible clients. Penetration was also measured at six-month intervals for two years.
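A minimal sketch of the penetration calculation follows, covering both denominators described above. Only the anchors for scores 5 (at least 80%) and 1 (no more than 20%) come from the text; the sketch assumes equal 20-point bands for scores 2 through 4, and the function names and agency figures are hypothetical.

```python
from typing import Optional


def penetration_rate(served: int,
                     eligible_tracked: Optional[int] = None,
                     target_population: Optional[int] = None,
                     est_eligible_fraction: Optional[float] = None) -> float:
    """Proportion (0-1) of eligible clients receiving the practice."""
    if eligible_tracked is not None:
        eligible = eligible_tracked  # agency tracks eligibility systematically
    elif target_population is not None and est_eligible_fraction is not None:
        # Estimated denominator: target population x literature-based eligible share.
        eligible = target_population * est_eligible_fraction
    else:
        raise ValueError("need a tracked count or a population-based estimate")
    if eligible <= 0:
        raise ValueError("eligible population must be positive")
    return served / eligible


def penetration_score(rate: float) -> int:
    """Convert a penetration rate to the 1-5 scale.

    Anchors from the text: 5 = at least 80%, 1 = no more than 20%.
    ASSUMPTION: scores 2-4 use equal 20-point bands.
    """
    pct = 100 * rate
    if pct >= 80:
        return 5
    if pct > 60:
        return 4
    if pct > 40:
        return 3
    if pct > 20:
        return 2
    return 1


# Hypothetical agency without systematic tracking: 600 clients in the target
# population, an assumed 20% of them eligible, and 45 receiving the practice.
rate = penetration_rate(45, target_population=600, est_eligible_fraction=0.20)
print(f"{rate:.1%} -> score {penetration_score(rate)}")  # 37.5% -> score 2
```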

Analytic procedures

Statistical analysis. Team workforce characteristics are reported with descriptive statistics. Team size at baseline and 24-month team turnover were each compared across evidence-based practices with one-way analyses of variance (ANOVAs). Initial bivariate analyses suggested that fidelity and penetration outcomes were related to team turnover during the implementation period. We examined these relationships further using two multivariate regression models, with 24-month fidelity or penetration score as the dependent variable; both models controlled for baseline score and team size. We used linear regression to model the effect of turnover on 24-month fidelity scores. Because the penetration score is ordered and noncontinuous, we used ordered logistic regression to examine the effect of turnover on 24-month penetration scores. The standard errors of both models were adjusted for clustering within state by using a Huber-White estimator of variance (19,20). All analyses were conducted with Stata statistical software (StataCorp, release 9.2).
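The study's models were fit in Stata, and the following pure-Python sketch is not that code. It illustrates, for a single predictor only, how a Huber-White variance with clustering by state is assembled: the "bread" (X'X)^-1 on either side of a "meat" built from per-cluster sums of score vectors, scaled by the finite-sample factor G/(G-1)·(N-1)/(N-K) that Stata applies for `regress` with clustered errors. The data and function names are hypothetical, and the study's models also included baseline score and team size as covariates.

```python
import math
from collections import defaultdict


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][m] * b[m][j] for m in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def inv2(m):
    """Invert a 2x2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("singular design matrix")
    return [[d / det, -b / det], [-c / det, a / det]]


def ols_cluster_robust(x, y, clusters):
    """OLS of y on an intercept and one predictor, with cluster-robust SEs.

    Returns ([intercept, slope], [se_intercept, se_slope]).
    """
    n, k = len(x), 2
    rows = [[1.0, float(xi)] for xi in x]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [[sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(k)]
    bread = inv2(xtx)  # (X'X)^-1
    beta = [row[0] for row in matmul(bread, xty)]

    # Meat: sum over clusters of (X_g' u_g)(X_g' u_g)'.
    cluster_scores = defaultdict(lambda: [0.0] * k)
    for r, yi, cl in zip(rows, y, clusters):
        resid = yi - (beta[0] * r[0] + beta[1] * r[1])
        for i in range(k):
            cluster_scores[cl][i] += r[i] * resid
    meat = [[sum(s[i] * s[j] for s in cluster_scores.values()) for j in range(k)]
            for i in range(k)]

    g = len(cluster_scores)
    if g < 2 or n <= k:
        raise ValueError("need 2+ clusters and more observations than parameters")
    # Finite-sample factor G/(G-1) * (N-1)/(N-K).
    factor = g / (g - 1) * (n - 1) / (n - k)
    vcov = matmul(matmul(bread, meat), bread)
    # max() guards against tiny negative values from floating-point rounding.
    ses = [math.sqrt(max(0.0, factor * vcov[i][i])) for i in range(k)]
    return beta, ses


# Hypothetical teams: turnover (%) and 24-month fidelity, clustered by state.
turnover = [20, 45, 60, 90, 110, 150, 35, 80, 125]
fidelity = [4.3, 4.0, 3.9, 3.6, 3.4, 3.0, 4.1, 3.7, 3.3]
state = ["A", "A", "A", "B", "B", "B", "C", "C", "C"]
beta, se = ols_cluster_robust(turnover, fidelity, state)
print(f"slope = {beta[1]:.4f} per point of turnover, cluster-robust SE = {se[1]:.4f}")
```

The point estimates are ordinary OLS coefficients; clustering changes only the standard errors, which allow residuals to be correlated among teams in the same state.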

Qualitative analysis. Observational qualitative data were collected throughout the implementation period by an implementation observer trained in qualitative methods. Key events, such as introductory training and supervision, were documented via narrative summaries. Interviews with program managers, consultant-trainers, and community service program directors were conducted by implementation observers at six-month intervals throughout the project. These interviews were transcribed before being imported into ATLAS.ti qualitative software and were then summarized in written reports with dimensional displays by the implementation observer.

Dimensions hypothesized to be important to the implementation process, nested within broad domains, were designated a priori through consultation of relevant literature and discussion among experts (21). The domain of importance for this article is workforce issues, and the two dimensions isolated within this domain are personnel action and staffing. Themes related to patterns of staffing instability as well as strategies and facilitators used to support the implementation of the evidence-based practices were extracted by the first author. Two-year turnover patterns were examined via staffing timelines, timelines of key events, and qualitative field notes. Sites were initially categorized as "turnover relevant to implementation" or "turnover irrelevant to implementation" on the basis of qualitative reports. Because turnover was not always viewed as a completely negative event, sites for which turnover seemed to be a relevant factor in implementation were then subcoded as turnover positive, turnover negative, or mixed. Aside from calculation of turnover rates, all qualitative analyses were conducted before the quantitative analysis.

Mixed-methods analysis. To explore the question "What amount of turnover is important to implementers?" the designation of whether or not turnover was deemed a relevant theme in the implementation story, based on comments from staff, leaders, and consultants, was merged with the turnover rates. Low-, medium-, and high-turnover categories were created on the basis of staff perceptions of relevance of turnover. Next we examined the relationship between these turnover categories and implementation outcomes via simple tabulation of fidelity and penetration means for each category. We repeated this procedure for examining the relationship between implementation outcomes and qualitative designation of positive, negative, and mixed influence of turnover on outcomes.
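The tabulation step can be sketched as below. The cut points are the ones reported in the Results (under 33%, 33% to under 100%, and over 100%); the text does not place a rate of exactly 100%, so the sketch assumes it counts as high. The team records are hypothetical, not study data.

```python
from collections import defaultdict
from statistics import mean


def turnover_category(turnover_pct: float) -> str:
    """Low (<33%), medium (33% to <100%), or high turnover.

    ASSUMPTION: exactly 100% is treated as high.
    """
    if turnover_pct < 33:
        return "low"
    if turnover_pct < 100:
        return "medium"
    return "high"


def tabulate_means(teams):
    """Mean fidelity, mean penetration, and team count per turnover category."""
    groups = defaultdict(list)
    for team in teams:
        groups[turnover_category(team["turnover_pct"])].append(team)
    return {
        cat: (mean(t["fidelity"] for t in members),
              mean(t["penetration"] for t in members),
              len(members))
        for cat, members in groups.items()
    }


# Hypothetical teams.
teams = [
    {"turnover_pct": 20, "fidelity": 4.2, "penetration": 4},
    {"turnover_pct": 60, "fidelity": 3.9, "penetration": 3},
    {"turnover_pct": 80, "fidelity": 3.5, "penetration": 3},
    {"turnover_pct": 140, "fidelity": 3.1, "penetration": 2},
]
summary = tabulate_means(teams)
for cat in ("low", "medium", "high"):
    if cat in summary:
        fid, pen, n = summary[cat]
        print(f"{cat}: fidelity {fid:.2f}, penetration {pen:.2f} (n={n})")
```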

Results

Team characteristics

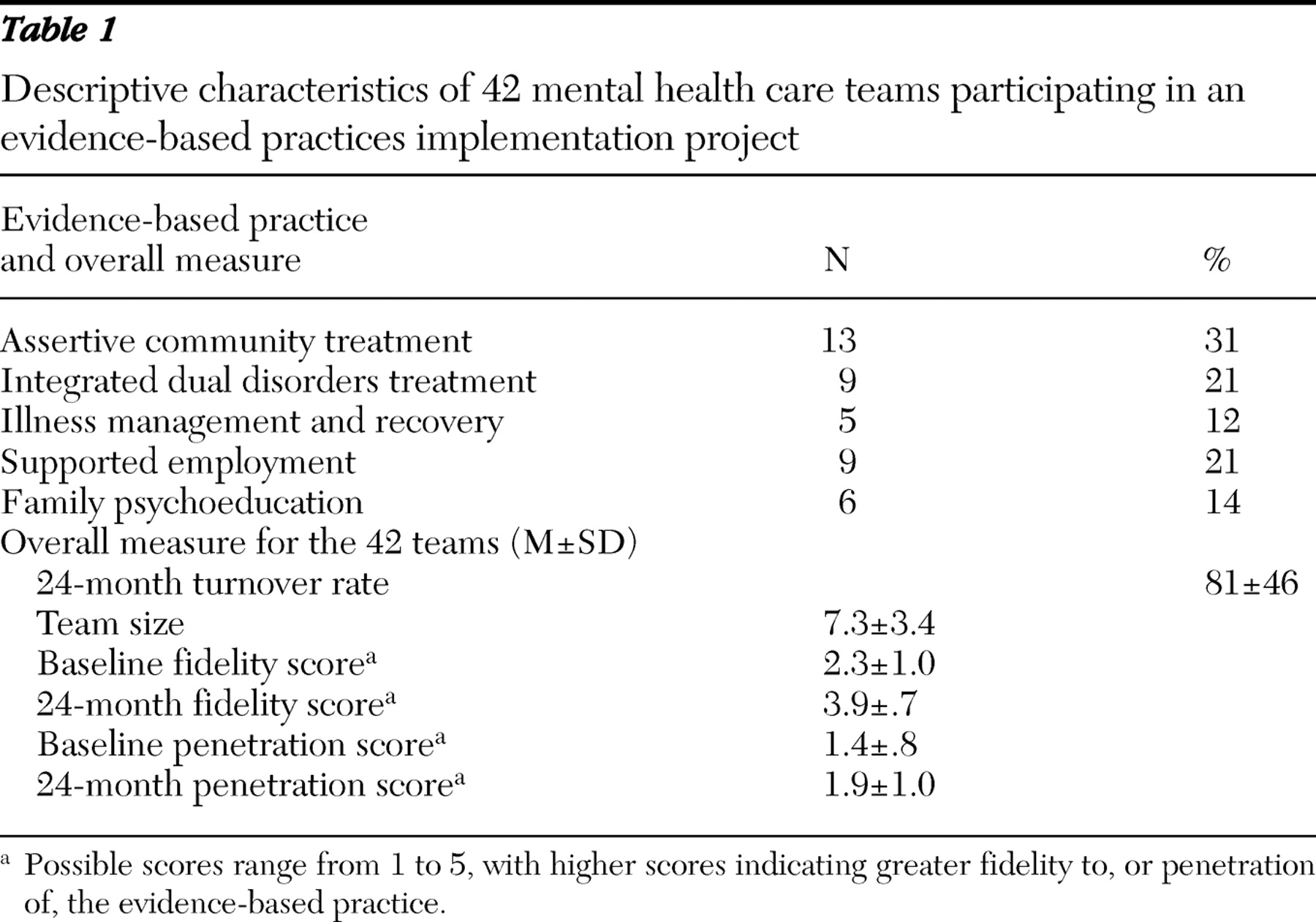

Table 1 reports descriptive characteristics for the 42 sites included in the analyses. A one-way ANOVA indicated significant differences in mean team size by evidence-based practice, a finding consistent with the different staffing requirements of the practices (F=12.44, df=4 and 37, p<.001). Post hoc comparisons indicated that teams providing assertive community treatment and integrated dual disorders treatment were both significantly larger than teams providing family psychoeducation and supported employment (all multiple-comparison-corrected differences were significant at p<.001). A second one-way ANOVA found no statistically significant differences in turnover rates between evidence-based practices.
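The degrees of freedom reported above follow from the one-way ANOVA layout: with k = 5 practices and N = 42 teams, df = (k - 1, N - k) = (4, 37). The sketch below computes the F statistic from between- and within-group sums of squares; the team sizes are hypothetical, so the F value will not reproduce the 12.44 reported. A p value would additionally require the F distribution (for example, SciPy's `scipy.stats.f_oneway` returns both).

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    groups maps a group label (here, an evidence-based practice) to its values.
    """
    values = [v for vals in groups.values() for v in vals]
    n, k = len(values), len(groups)
    grand_mean = sum(values) / n
    group_means = {g: sum(vals) / len(vals) for g, vals in groups.items()}
    ss_between = sum(len(vals) * (group_means[g] - grand_mean) ** 2
                     for g, vals in groups.items())
    ss_within = sum((v - group_means[g]) ** 2
                    for g, vals in groups.items() for v in vals)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical baseline team sizes for 42 teams across the five practices.
team_sizes = {
    "ACT": [10, 12, 9, 11, 14, 10, 13, 8, 12],
    "IDDT": [9, 11, 12, 10, 8, 13, 9, 10, 11],
    "FPE": [4, 5, 3, 6, 4, 5, 4, 3],
    "SE": [3, 4, 5, 3, 4, 2, 4, 3],
    "IMR": [6, 5, 7, 4, 6, 8, 5, 6],
}
f_stat, df1, df2 = one_way_anova(team_sizes)
print(f"F = {f_stat:.2f}, df = {df1} and {df2}")  # df = 4 and 37
```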

Quantitative relationships

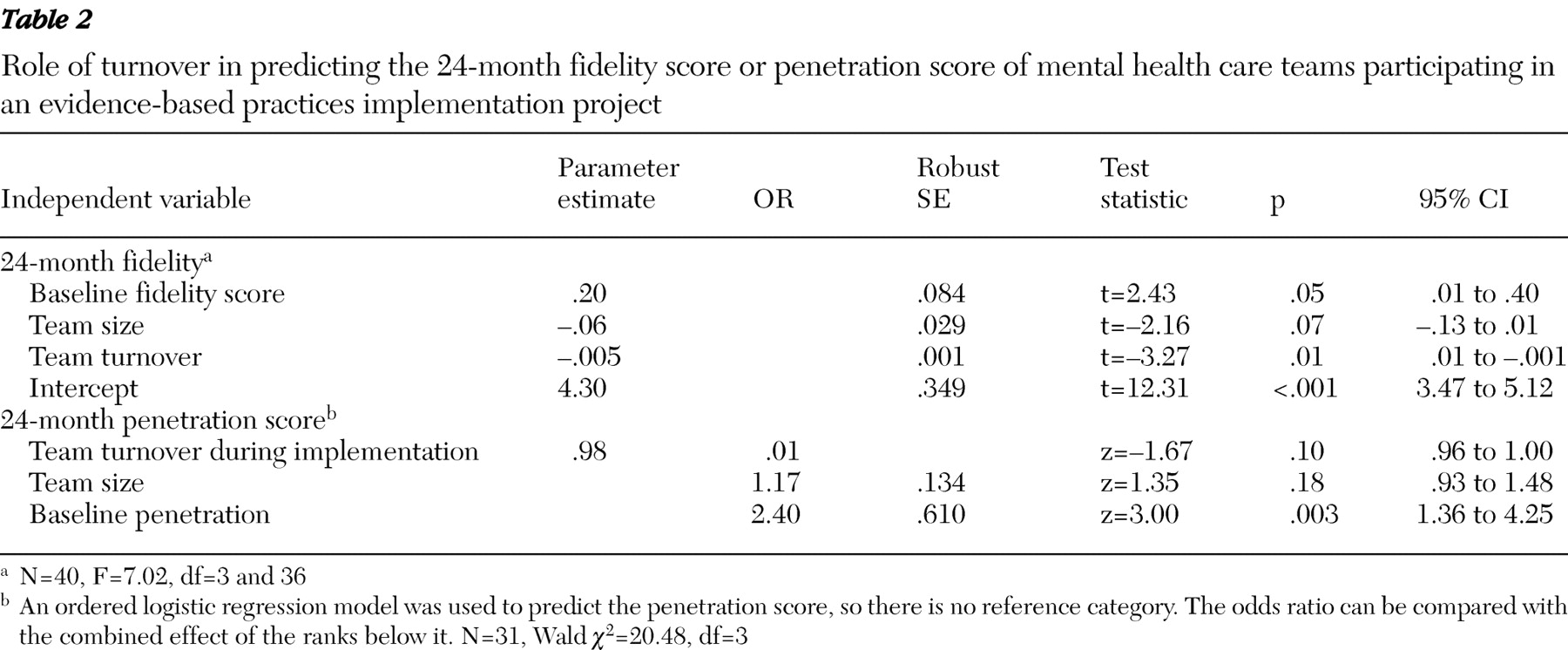

Fidelity. Multivariate linear regression was used to examine the relationship between 24-month fidelity outcomes and turnover during the implementation, with controls for baseline score and team size (Table 2). The overall model was statistically significant and explained approximately 14% of the variance in fidelity score (p=.02, R²=.14). Team turnover was a significant negative predictor of 24-month fidelity scores.

Penetration. The ordered logistic regression model significantly predicted 24-month penetration scores (p=.001). An approximate likelihood ratio test of coefficient equivalence across categories indicated that the proportional odds assumption of the model was not violated. Only 31 cases were available for this model because penetration data were missing at either baseline or the final endpoint. Only baseline penetration score significantly predicted the 24-month penetration score, although a nonsignificant trend was observed for turnover rate. The odds ratios in Table 2 can be interpreted as follows: for every 1% increase in turnover, the odds of being in the highest category of penetration score (≥80% penetration) are approximately 2% lower than the odds of being in the lower four categories combined. Because the odds are proportional between categories, the interpretation of the odds ratio is the same for each category.
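Why one odds ratio applies at every category boundary can be checked numerically. In the proportional-odds parameterization used by Stata's `ologit`, the odds of scoring above cutpoint j are exp(xβ - κ_j), so the ratio of odds for x + 1 versus x is exp(β) whatever κ_j is. The sketch assumes an odds ratio of 0.98 per percentage point of turnover (read from "approximately 2% lower"); the cutpoints are hypothetical.

```python
import math


def odds_above(x: float, beta: float, cutpoint: float) -> float:
    """Odds of scoring above a cutpoint: P(Y > j) / P(Y <= j) = exp(x*beta - cut_j)."""
    return math.exp(x * beta - cutpoint)


beta = math.log(0.98)             # ASSUMPTION: OR of ~0.98 per point of turnover
cutpoints = [-1.0, 0.0, 1.0, 2.0]  # hypothetical; 4 cutpoints separate 5 score levels

for j, cut in enumerate(cutpoints, start=1):
    ratio = odds_above(51, beta, cut) / odds_above(50, beta, cut)
    print(f"above category {j}: odds ratio {ratio:.3f}")  # 0.980 for every j

# Per-point odds ratios compound multiplicatively over larger changes.
print(f"10-point increase in turnover: odds ratio {0.98 ** 10:.3f}")
```

The last line shows that under this assumed odds ratio, a 10-point rise in turnover multiplies the odds by about 0.82 rather than lowering them by a flat 20%.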

Qualitative relationships

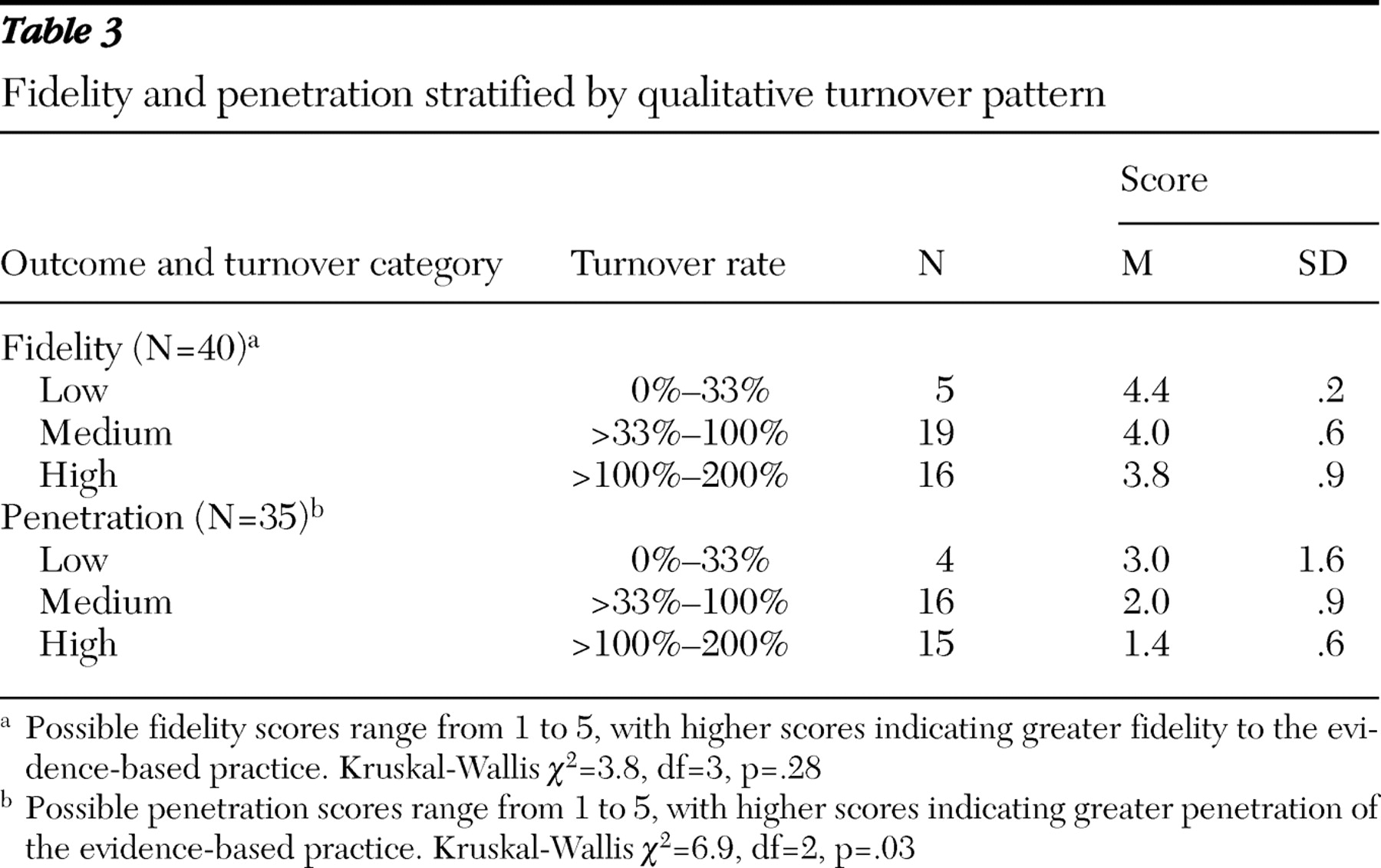

The quantitative data revealed substantial team instability during the two-year project period. However, a systematic examination of the qualitative data provides insights into the complexity of the relationship between turnover and implementation outcomes. Seventy-one percent of teams (30 of 42) reported that turnover was a significant factor in implementing the evidence-based practices over the 24 months. Further, a pattern emerged when comparing actual turnover rates with the qualitative reporting of the significance of turnover. None of the five teams with turnover below 33% reported it to be a substantial factor in implementation. Teams with turnover from 33% to less than 100%, considered a medium range, were mixed in whether they experienced turnover as significant; 74% of these teams (14 of 19) felt that it was a factor. Most teams with turnover rates over 100% noted that turnover was a substantial factor in implementation (89%, or 16 of 18 teams). These themes, described below, illustrate the interplay between workforce issues and implementation of evidence-based practices.

Negative turnover. Sixteen of the 30 teams mentioning turnover as a factor viewed it as a barrier to implementation outcomes. Teams frequently noted that they struggled to implement the practice when staffing turnover was high primarily because of difficulty in having enough trained workers to deliver the practice. Agencies often made the decision to prioritize fidelity (high-quality service) over penetration (delivery of service to more consumers), at times serving very few clients. This decision was not made in isolation but was the advice generally given by the consultants in regard to which outcomes to focus on when staffing resources were unstable. Low clinical skill, difficulty in changing practice orientations, and problematic interpersonal team dynamics were reasons often noted for staffing turnover.

Positive turnover. Twelve teams described turnover as having a primarily positive influence on implementation. Two of these teams attributed the positive influence to the fortuitous replacement of less qualified staff with more qualified staff. A more frequent theme was a realignment of the team by both direct care staff and agency administrators, with the support of the consultants. Direct care and supervisory workers sometimes selected themselves out by leaving the agency, which allowed administrators to reevaluate training and hiring practices. Less frequently, poorly performing workers were fired or forced to resign.

Mixed turnover. One theme present in two teams was that worker turnover jeopardized the implementation for many months, yet in the end a stronger clinical team emerged. This happened through several channels, including intervention by the consultant, changes in agency personnel policies, and chance occurrences. In these cases, it was difficult to classify turnover as positive or negative for implementation.

Relationship between turnover and implementation outcomes

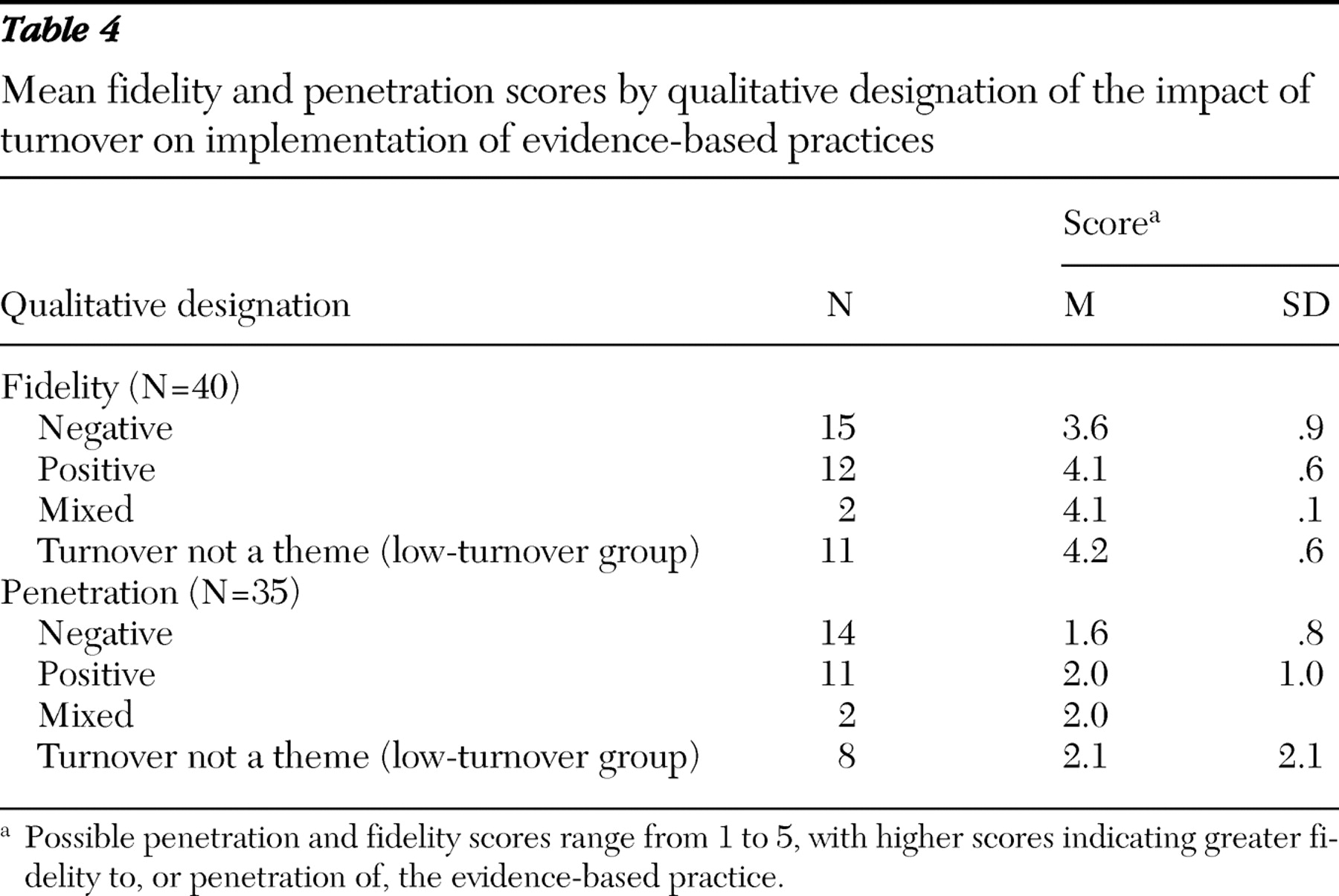

The qualitative data described above highlight the perspectives of agencies and staff on the important role of workforce issues in the successful delivery of psychosocial evidence-based practices. We therefore blended qualitative and quantitative data to examine whether these perspectives were related to 24-month implementation outcomes, comparing our qualitative designations of turnover salience and direction of effect with quantitative implementation measures. When fidelity and penetration scores were stratified by turnover category (low, medium, or high), both outcomes were higher in each successively lower turnover category (Table 3). Similarly, when mean fidelity and penetration scores were grouped by negative turnover, positive turnover, mixed turnover, or turnover that was not a salient theme for the team, the lowest penetration and fidelity scores were observed among teams whose leaders and observers reported that turnover was a substantial barrier to implementation (Table 4).

Discussion

This study indicates that turnover among staff attempting to implement evidence-based practices was common. Further, team turnover did not appear to differ substantially by evidence-based practice. Our findings suggest an overall inverse relationship between turnover and implementation outcomes during the implementation period.

Perhaps the most illuminating finding of this study comes from marrying the parallel quantitative and qualitative data. Of the teams with turnover above 100% in 24 months, all but two reported that turnover had a substantial impact on implementation. Further, teams with very high turnover (>100%) described it as negative for implementation more frequently than teams with lower turnover. This suggests that although some turnover may have benefited teams, depending on circumstances, very high turnover was almost always a hindrance to implementation. Our examination of fidelity and penetration rates by qualitative turnover category further supports this conclusion.

To our knowledge, this is the first study of implementation of evidence-based mental health practices to focus on the effect of team instability on implementation outcomes. However, our study is congruent with others reporting high turnover rates in community mental health (3–9). Our study also indicates that practice change was dynamic and that not all personnel turnover was undesirable. This finding is supported by several organizational studies reporting that when people recognize that their job does not fit their skill levels, turnover can be positive for organizations (22,23). On the one hand, the process of turnover appears to disrupt the implementation of complex, service-based evidence-based practices through a training intervention. On the other hand, the outcome of turnover is sometimes positive for implementation when agencies implementing the evidence-based practices take measures to retool training strategies or hire more qualified team members. Finally, the mixed-methods findings substantiate much of the rhetoric regarding difficulties in the mental health care system's workforce (1,2,24–26).

Limitations

Several limitations are noteworthy. This demonstration project did not have a nonimplementing control group for comparison, so we are unable to comment on the effect of an organizational change effort itself on team turnover. Our study design also did not allow us to definitively tease out the direction of effect between implementation of a new evidence-based practice and turnover. Further, the sites in this study were not randomly selected; rather, they responded to a national call to participate that was initiated by each state's mental health authority. Finally, although this demonstration project involved a large number of community mental health workers, the primary unit of analysis was the team, and the resulting small sample (42 teams) precluded more robust statistical analyses.

Future directions

This study points to several directions for future research. Researchers in other fields have estimated that the economic costs of employee turnover are high (27–30). Further, some reports have suggested that lack of provider continuity caused by turnover can fragment care and lower its quality (31,32). Although antecedents of turnover in mental health have been studied (5,7,32), prospective research examining the relationship of workforce turnover to delivery of high-quality mental health services is critical. Future research should tease out patterns and consequences of turnover that we were unable to address in our study, such as length of vacancy times and permanent team reduction (22).

A second area of needed research includes studies that are able to tease out the interaction between policy- and clinical practice-level variables that drive mental health workforce turnover. Several studies have suggested that psychological burnout, low pay, young age, and lack of mentoring are antecedents to worker turnover in the community mental health context (5,27). Our qualitative data suggest that departures from the team or the entire agency were, at face value, due to lack of preexisting clinical skills, inadequate supports, difficult interpersonal team dynamics, or practitioners' unwillingness to change their practices. This finding is also supported by literature in other fields (33,34). Research is needed that blends detailed information on the experiences of consumers and providers with salient macro-level influences, such as the specific effects of state and federal policy mandates, wages, and labor market variables.

Finally, in light of political and economic forces that make investment in the behavioral health workforce a low priority, models of implementation that are adaptable to chronic turnover of employees are critically needed. Use of consumer and clinician electronic decision supports and other technologies that are not human resource dependent may be critical. Empirical testing of innovative hiring, training, and mentoring models may provide better quality of care for consumers.

Conclusions

This study indicates that public mental health agencies attempting to implement complex psychosocial evidence-based practices can expect to experience significant personnel change during implementation. Our quantitative analyses suggest that, on average, turnover is a hindrance to implementation of evidence-based mental health service interventions. However, putting in place a well-qualified team, examining hiring practices for the team when staff members turn over, and realigning policies to create better teams as turnover occurs can benefit implementation. Finally, it appears that practitioner perspectives on the impact of turnover on implementation are in line with the quantitative outcomes data suggesting that behavioral health workforce stability plays a vital role in delivery of high-quality services.

Acknowledgments and disclosures

This research was supported by the West Family Foundation, the Center for Mental Health Services at the Substance Abuse and Mental Health Services Administration, the Robert Wood Johnson Foundation, the MacArthur Foundation, and the Johnson & Johnson Charitable Trust, as well as by a predoctoral institutional training grant to the first author from the Agency for Healthcare Research and Quality.

The authors report no competing interests.