The mental health field has made strides in developing effective services for people with severe mental illnesses; however, only 5% of these individuals receive evidence-based care (1,2,3). To facilitate the use of evidence-based practices in routine care, the Substance Abuse and Mental Health Services Administration (SAMHSA) sponsored the development of program-specific toolkits for the National Evidence-Based Practices Project (4,5). Six toolkits were developed by researchers, clinicians, administrators, consumers, and family members; each includes informational materials for every stakeholder group, training materials (such as videotapes, practitioner workbooks, and research articles), implementation recommendations, and fidelity scales (the toolkits and other information are available at mentalhealth.samhsa.gov/cmhs/communitysupport/toolkits/about.asp). These toolkits were used in the SAMHSA project, along with training and consultation, to examine strategies for and barriers to successful implementation of these practices in eight states.

In addition to emphasizing evidence, national policy has moved toward endorsing treatments that facilitate recovery from mental illness (2). Although recovery is individualized, one useful definition asserts that "recovery involves the development of new meaning and purpose in one's life as one grows beyond the catastrophic effects of mental illness" (6). In this view, recovery can be thought of as managing one's illness in order to develop or regain a sense of self and place in society.

The illness management and recovery program was designed to address both agendas: to provide an evidence-based intervention while promoting recovery. The program was developed for the national project as a curriculum-based approach to teaching consumers how to set and achieve personal recovery goals and how to acquire the knowledge and skills to manage their illnesses independently (7). Illness management and recovery uses educational, cognitive-behavioral, and motivational interventions across ten modules: recovery, mental illness, stress-vulnerability, social support, medication management, relapse prevention, coping with stress, coping with problems and symptoms, getting needs met in the mental health system, and drug and alcohol use. All participants are taught this core curriculum, but teaching strategies (for example, role playing and selection of homework assignments) are tailored to the individual.

Although the illness management and recovery program has been identified as an evidence-based practice and its components are supported by rigorous research, studies of the package as a whole are only now being published, and their findings are positive. Two small pilot programs (uncontrolled pre-post evaluations) showed positive changes in illness self-management and knowledge (8,9), and a recent randomized controlled trial in Israel found positive change in self-management and coping, but not in social support, when the program was compared with usual care (10). The purpose of this project was to conduct a statewide evaluation of illness management and recovery as part of the second round of national implementation projects funded by SAMHSA.

The recent focus on implementation has grown out of the recognition that wide gaps exist between research and actual practice and that passive dissemination of research does not result in better services (11,12,13). Several models, such as Rogers' diffusion of innovations model (14), have been applied to health care to describe effective implementation processes. Our implementation approach fits well with Simpson's transfer model (15), which conceptualizes the transfer of research into practice as occurring in four related stages: exposure to the practice (for example, via videos and training), adoption of the practice (commitment and mobilization of resources), implementation (actually trying the practice), and practice (sustained use of the practice with internalized capacity to monitor practice and support the program).

Our project attempted to expose agencies to the illness management and recovery program and assist them in adopting and implementing the model, with the long-term goal of sustained practice. We closely followed the approach of the National Evidence-Based Practices Project (5), using SAMHSA's toolkits, intensive training and consultation, monitoring of fidelity and consumer outcomes, and a strong collaborative partnership between Indiana's Division of Mental Health and Addiction and a state-funded technical assistance center, the ACT Center of Indiana (16). The study focused on the implementation process, fidelity monitoring, and important consumer outcomes relevant to recovery, such as illness management, hope, and satisfaction with services. The results will inform future large-scale implementations that bring this practice into routine mental health settings.

Methods

Participants

We sent details of the study to the directors of the 30 community mental health centers in Indiana and asked interested sites to send a letter of commitment. Six responded, and they were selected to participate. In determining order of training, we considered readiness of sites (whether there was consensus among administrators and a specific program and leader identified for implementation), stated preference, and location. In year 2, before training began, administrators from one site decided not to participate, partly because the site was concurrently implementing two other new practices. Two additional sites contacted us and expressed strong interest in participating. We added these two sites and expanded implementation to seven sites.

Each of the sites identified one or more agency programs serving people with severe mental illness to implement illness management and recovery. Each site then identified a program leader and staff to be trained. After clinicians fully described the study to interested consumers, informed consent was obtained. Consumers could receive illness management and recovery services without participating in the study.

Implementation process

We initially hired two part-time trainers—one doctoral-level clinician and one consumer. We later added two master's-level clinicians (one full-time) and another consumer trainer. The initial pair of trainers participated in an intensive train-the-trainer workshop with Susan Gingerich, M.S.W., one of the developers of the illness management and recovery toolkit (17), and received phone consultation from her throughout year 1. The trainers then worked with agencies to help implement illness management and recovery, including kick-off presentations, in-depth skills training, ongoing monitoring and supervision, and consultation as needed.

We originally proposed to have kick-off meetings before training. However, we observed that such meetings generated excitement and eagerness before the program was ready to begin serving consumers. Thus we began scheduling kick-off events after clinicians had been trained and were ready to provide illness management and recovery. The kick-off event included a presentation by one of our trainers, the introductory video from the toolkit, and a description of the implementation plan by the agency's program leader. In addition, two consumers who had completed the illness management and recovery program in an earlier pilot study (9) discussed their experiences. During the kick-off, sites distributed sign-up sheets to identify consumers interested in the program. Three sites (sites 4, 5, and 7 in the tables) chose not to host kick-off events.

Skills training took place over two days and included didactic materials on the evidence base, recovery concepts, core values and qualifications of practitioners, logistics, and descriptions of the modules. Trainees also received an overview of educational, motivational, and cognitive-behavioral techniques used in the illness management and recovery program. Trainees observed videotaped sessions and used role play to practice the materials and skills, such as interactive exercises aimed at improving goal setting. To date, more than 500 individuals across Indiana and surrounding states have completed the two-day skills training, 306 of whom were trained in the sites selected for this study.

Consultation from our trainers included technical assistance as needed through phone calls, e-mails, and semiannual site visits to assess fidelity. Program leaders were invited to participate in monthly conference calls with other program leaders (hosted by a trainer). Participation was variable, but some leaders expressed interest, and these calls were held monthly for approximately 12 months. In addition, clinicians requested more guidance on clinical techniques. Consequently, we hosted two case conference workshops (in years 2 and 3) for more advanced didactic training, in which clinicians and trainers presented specific cases and received feedback on them. In addition, we hosted several motivational-interviewing workshops and two cognitive-behavioral workshops specifically for clinicians in the illness management and recovery program. Although not part of the initial proposal, we provided fidelity assessment training directly to two sites (sites 2 and 4) by request so that they could better oversee and sustain high-quality programs internally over time.

Measures

Fidelity. The Illness Management and Recovery Fidelity Scale is a 13-item scale to assess the degree of implementation (18). Each item is rated on a 5-point, behaviorally anchored scale, with a score of 5 indicating full implementation, 4 indicating moderate implementation, and the remaining scale points representing increasingly larger departures from the model. This scale has been used in several state implementation projects and shows sensitivity through increased scores with training and consultation (19).

In addition to fidelity, we measured general agency characteristics with the General Organizational Index (GOI). The GOI is a 12-item scale designed to measure common agency practices that support evidence-based practices, such as the extent to which the practice is offered (that is, the "penetration") or whether the agency offers training or weekly supervision in the practice. The GOI was developed as a part of the National Evidence-Based Practices Project (5,19).

Fidelity assessments and the GOI were completed by two trained raters (a trainer and a research assistant). The raters reviewed charts, conducted interviews with staff and consumers, and observed the illness management and recovery intervention when possible. At the end of the day, the raters independently assessed the program and compared ratings. Discrepancies were resolved through discussion, and additional data were gathered as needed. Following each fidelity assessment, sites received a report with item-level scores and specific recommendations.
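The reconciliation step can be sketched in Python. This is a minimal, hypothetical illustration, not the procedure the raters actually used (they compared ratings by hand and resolved discrepancies through discussion). It assumes each rater's scores are stored as a dictionary mapping item labels to ratings from 1 to 5; the labels `item_1` through `item_13` and the function names are invented here. The sketch lists the items whose ratings differ so that those items can be discussed, and reports the proportion of exact agreement.

```python
# Hypothetical sketch: flag fidelity items on which two independent raters
# disagree, as a starting point for a consensus discussion.
# Item labels and the dictionary layout are illustrative assumptions.
from typing import Dict, List


def discrepant_items(rater_a: Dict[str, int], rater_b: Dict[str, int]) -> List[str]:
    """Return the sorted labels of items that the two raters scored differently."""
    if rater_a.keys() != rater_b.keys():
        raise ValueError("Both raters must score the same set of items")
    return sorted(item for item in rater_a if rater_a[item] != rater_b[item])


def exact_agreement(rater_a: Dict[str, int], rater_b: Dict[str, int]) -> float:
    """Proportion of items given identical ratings by both raters."""
    if rater_a.keys() != rater_b.keys() or not rater_a:
        raise ValueError("Both raters must score the same, non-empty set of items")
    matches = sum(rater_a[item] == rater_b[item] for item in rater_a)
    return matches / len(rater_a)


if __name__ == "__main__":
    # Invented ratings for illustration only (not study data).
    a = {f"item_{i}": 4 for i in range(1, 14)}
    b = dict(a, item_3=3, item_11=5)
    print(discrepant_items(a, b))           # ['item_11', 'item_3']
    print(round(exact_agreement(a, b), 2))  # 0.85
```

Returning the disagreeing items, rather than averaging the two raters, mirrors the consensus approach described above, in which differences are talked through and more data are gathered as needed.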

Consumer outcomes. Consumer outcomes were evaluated with consumer- and clinician-rated questionnaires. We assessed illness self-management as a direct outcome of intervention, and we assessed hope because it is often described as being central to recovery (20). We also measured satisfaction, hypothesizing that as consumers made greater progress in recovery, they would express greater satisfaction with services.

Illness self-management was measured with the Illness Management and Recovery Scale (21), developed specifically for assessing outcomes in the program. The scale includes parallel clinician and consumer versions, each with 15 items rated on 5-point behaviorally anchored scales. Items assess progress toward goals, knowledge about mental illness, involvement with significant others and self-help, time in structured roles, impairment in functioning, symptom distress and coping, relapse prevention and hospitalization, use of medications, and alcohol and drug use. Both versions of the scale have demonstrated adequate internal reliability and good test-retest reliability and have shown convergent validity with the Multnomah Community Ability Scale (22), the Colorado Symptom Inventory (23), and the Recovery Assessment Scale (24) in a sample of consumers (25). The clinician and consumer versions of the Illness Management and Recovery Scale have also been found to be sensitive to changes over time (8,10).

The Satisfaction With Services (SWS) scale is an 11-item consumer satisfaction checklist adapted from the Client Satisfaction Questionnaire (26). The SWS was designed specifically for consumers with severe mental illness and has been used in several large-scale studies (27,28,29).

Hope was assessed with the Adult State Hope Scale (30), a six-item self-report measure with items rated from 1, definitely false, to 4, definitely true. A series of studies demonstrated internal consistency, high levels of convergent and discriminant validity, and sensitivity (30). This scale is appropriate for use with consumers with severe mental illness (31,32) and has been used as an outcome indicator in a controlled study of supported employment (33).

Procedures

We implemented illness management and recovery at three sites in 2004–2005 and at the remaining sites during 2005–2006. Baseline fidelity assessments occurred before each site's implementation start date and semiannually thereafter. Clinicians distributed self-report surveys to participating consumers, who returned them directly to the research team in self-addressed, stamped envelopes. Clinician ratings were also returned directly to the research team. The research team tracked when follow-up surveys were due and prompted clinicians to distribute them. Consumers and their illness management and recovery clinicians were asked to complete the questionnaires described above at baseline and at six and 12 months after baseline. All procedures were approved by the institutional review board at Indiana University-Purdue University Indianapolis.

Data analysis

Data were double-entered to ensure accuracy and analyzed with SPSS, version 16.0. Program-level fidelity data were not analyzed statistically because of the small number of sites. For consumer-level data, descriptive statistics are presented for demographic characteristics. Means were calculated for each outcome scale, and a participant's scale score was used only if he or she had completed at least 80% of the items. Paired t tests were used to compare scale means at baseline with the means at six and at 12 months.
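The scoring rule and the paired comparison described above can be sketched in Python with only the standard library. This is an illustrative reconstruction, not the authors' SPSS syntax. It assumes that missing item responses are recorded as `None`, that a scale score is the mean of the answered items, and that only consumers with valid scores at both time points enter the paired test; the function names are hypothetical. The sketch returns the t statistic and its degrees of freedom; a p value would come from the t distribution (for example, `scipy.stats.ttest_rel` performs the same paired test).

```python
# Illustrative sketch (not the authors' SPSS procedure): score a scale only
# when at least 80% of its items were answered, then run a paired t test on
# consumers who have valid scores at both baseline and follow-up.
import math
from statistics import mean, stdev
from typing import List, Optional, Sequence, Tuple


def scale_score(responses: Sequence[Optional[float]],
                min_completion: float = 0.8) -> Optional[float]:
    """Mean of answered items, or None if too few items were answered.

    Missing responses are assumed (hypothetically) to be recorded as None.
    """
    answered = [r for r in responses if r is not None]
    if not responses or len(answered) / len(responses) < min_completion:
        return None
    return mean(answered)


def complete_pairs(baseline: Sequence[Optional[float]],
                   follow_up: Sequence[Optional[float]]) -> Tuple[List[float], List[float]]:
    """Keep only consumers with a valid score at both time points."""
    pairs = [(b, f) for b, f in zip(baseline, follow_up)
             if b is not None and f is not None]
    return [b for b, _ in pairs], [f for _, f in pairs]


def paired_t(baseline: Sequence[float], follow_up: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for follow-up minus baseline."""
    if len(baseline) != len(follow_up) or len(baseline) < 2:
        raise ValueError("Need at least two complete pairs of equal length")
    diffs = [f - b for b, f in zip(baseline, follow_up)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("All differences are identical; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1
```

Under this rule, a five-item response set with one missing answer (80% complete) is scored, whereas one with two missing answers (60% complete) is set aside rather than imputed.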

Results

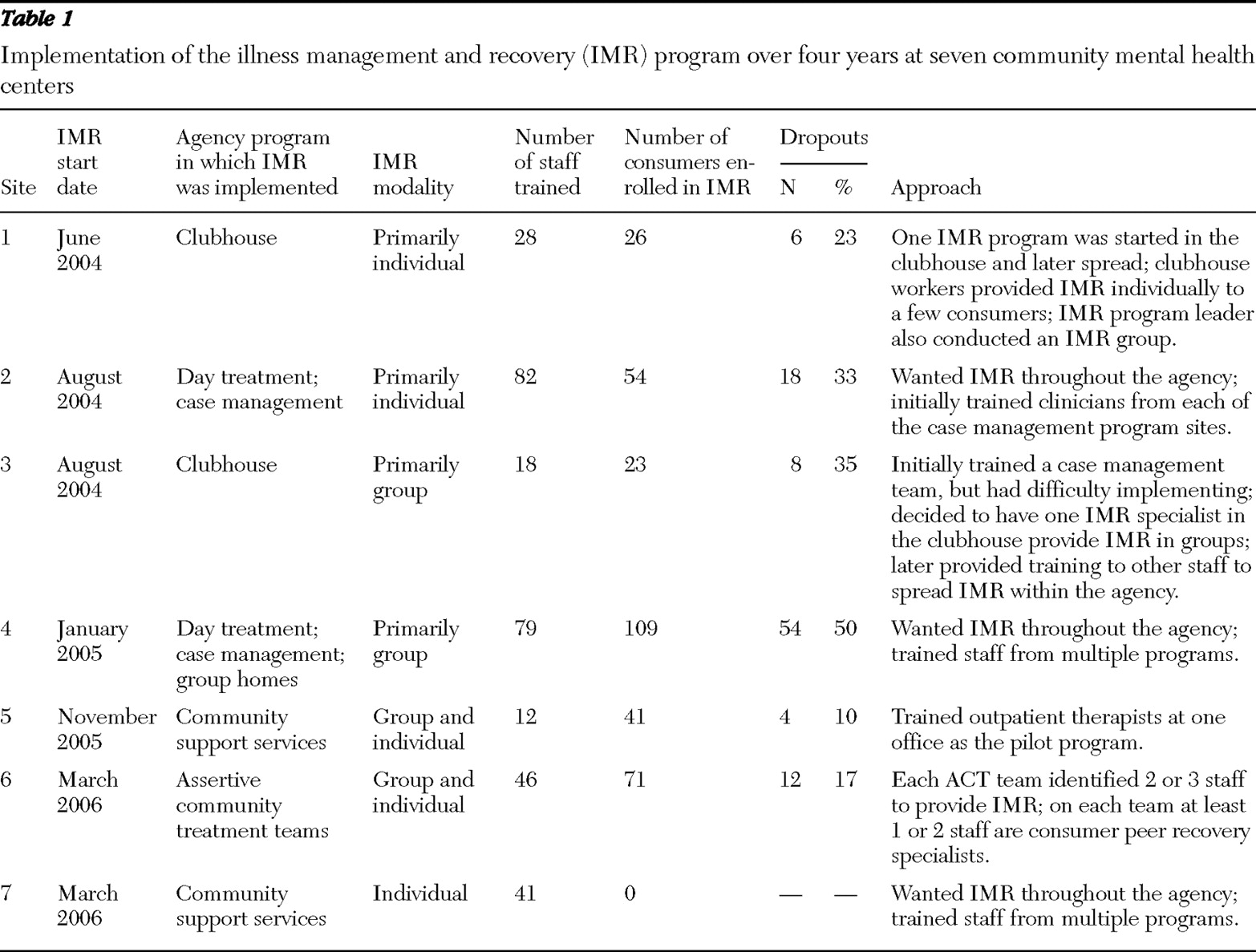

Table 1 shows the start dates, type of program selected by the agency to be trained in the intervention, type of modality (individual versus group), number of staff trained, number of consumers served, and the approach used for implementation.

Program implementation

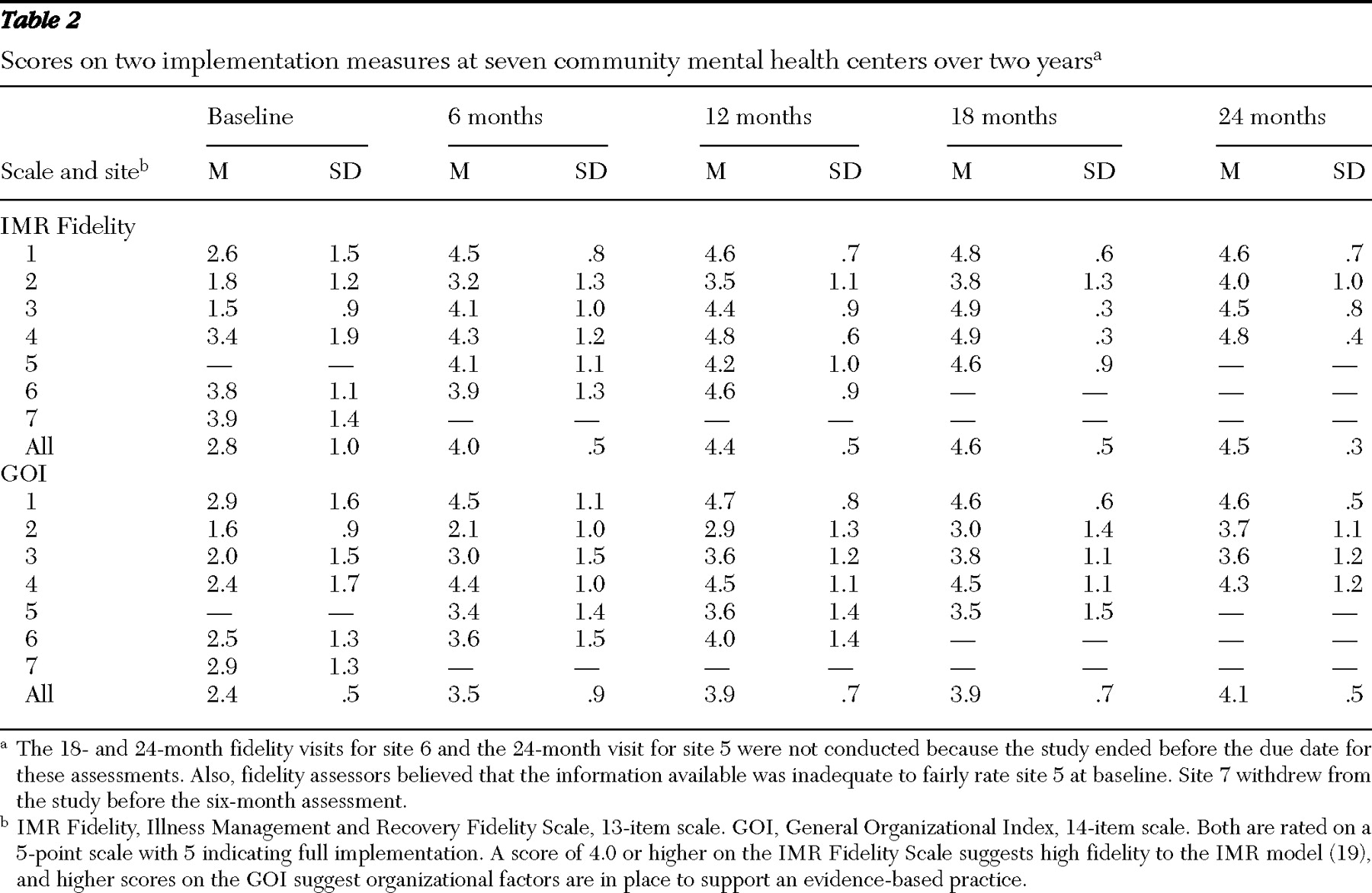

We began working with seven sites, but one site (site 7) had difficulty implementing the program and did not participate beyond the baseline fidelity assessment. Across the remaining agencies, fidelity scores increased over time, and fidelity scores specific to illness management and recovery increased more rapidly than those related to organizational factors (GOI scores) (Table 2). The largest improvement in program fidelity scores was noted between baseline and six months, which is similar to the findings of the National Implementing Evidence-Based Practices Project (19). A slight dip in illness management and recovery fidelity was noted from 18 to 24 months, which is partly attributable to more refined fidelity assessment procedures. All four of the sites that received a 24-month assessment were judged as reaching high illness management and recovery fidelity, defined as a score of 4.0 or above (19).
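The high-fidelity criterion can be expressed as a short Python check. The article does not spell out how item ratings are combined into an overall score, so this sketch assumes the overall score is the mean of the 13 item ratings (each from 1 to 5), which places it on the same metric as the 4.0 cutoff. The function and constant names are hypothetical.

```python
# Sketch under a stated assumption: the overall Illness Management and
# Recovery Fidelity Scale score is taken to be the mean of its 13 item
# ratings (1 to 5), and 4.0 or above is classified as high fidelity.
from statistics import mean
from typing import Sequence

N_ITEMS = 13                 # number of items on the fidelity scale
HIGH_FIDELITY_CUTOFF = 4.0   # threshold for "high fidelity" used in the text


def fidelity_score(item_ratings: Sequence[int]) -> float:
    """Mean item rating; assumes all 13 items were rated on the 1-5 scale."""
    if len(item_ratings) != N_ITEMS:
        raise ValueError(f"Expected {N_ITEMS} item ratings, got {len(item_ratings)}")
    if any(not 1 <= r <= 5 for r in item_ratings):
        raise ValueError("Item ratings must fall between 1 and 5")
    return float(mean(item_ratings))


def is_high_fidelity(item_ratings: Sequence[int]) -> bool:
    """True when the mean item rating reaches the 4.0 cutoff."""
    return fidelity_score(item_ratings) >= HIGH_FIDELITY_CUTOFF
```

Under this assumption, a program rated 5 on seven items and 3 on six items averages about 4.08 and would count as high fidelity, while one rated 4 on twelve items and 3 on one averages about 3.92 and would not.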

Consumer outcomes

We received a baseline self-report questionnaire from 324 consumers. Of these, 267 (82%) were white, 159 (49%) were men, and 268 (83%) had at least a high school diploma or a GED. The mean±SD age was 43.7±11.6 years. State administrative data for all adults served at these agencies (N=50,955) indicated that 84% were white, 37% were men, 65% had at least a high school diploma, and the average age was 41.8 years. Within sites, men and consumers with higher levels of education were more likely to receive illness management and recovery. As shown in Table 1, the number of consumers per site ranged from 23 to 109, with dropout rates ranging from 10% to 50%. However, because clinicians distributed the self-report questionnaires, participation in the evaluation varied, and actual dropout rates are unknown. Consumers could participate in the program and receive the intervention without completing the questionnaires. Therefore, we could identify dropouts only among consumers who both received the intervention and completed the questionnaires.

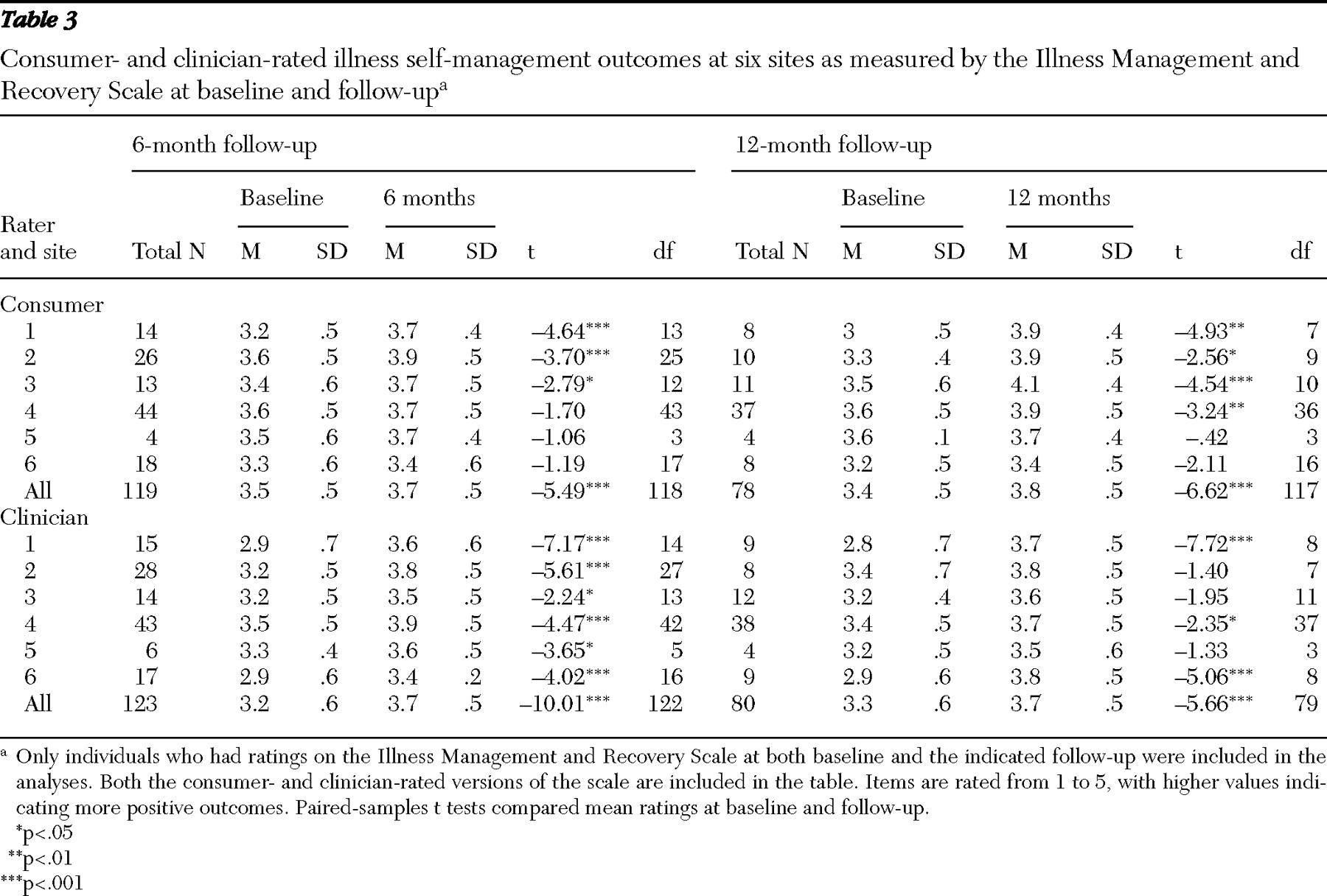

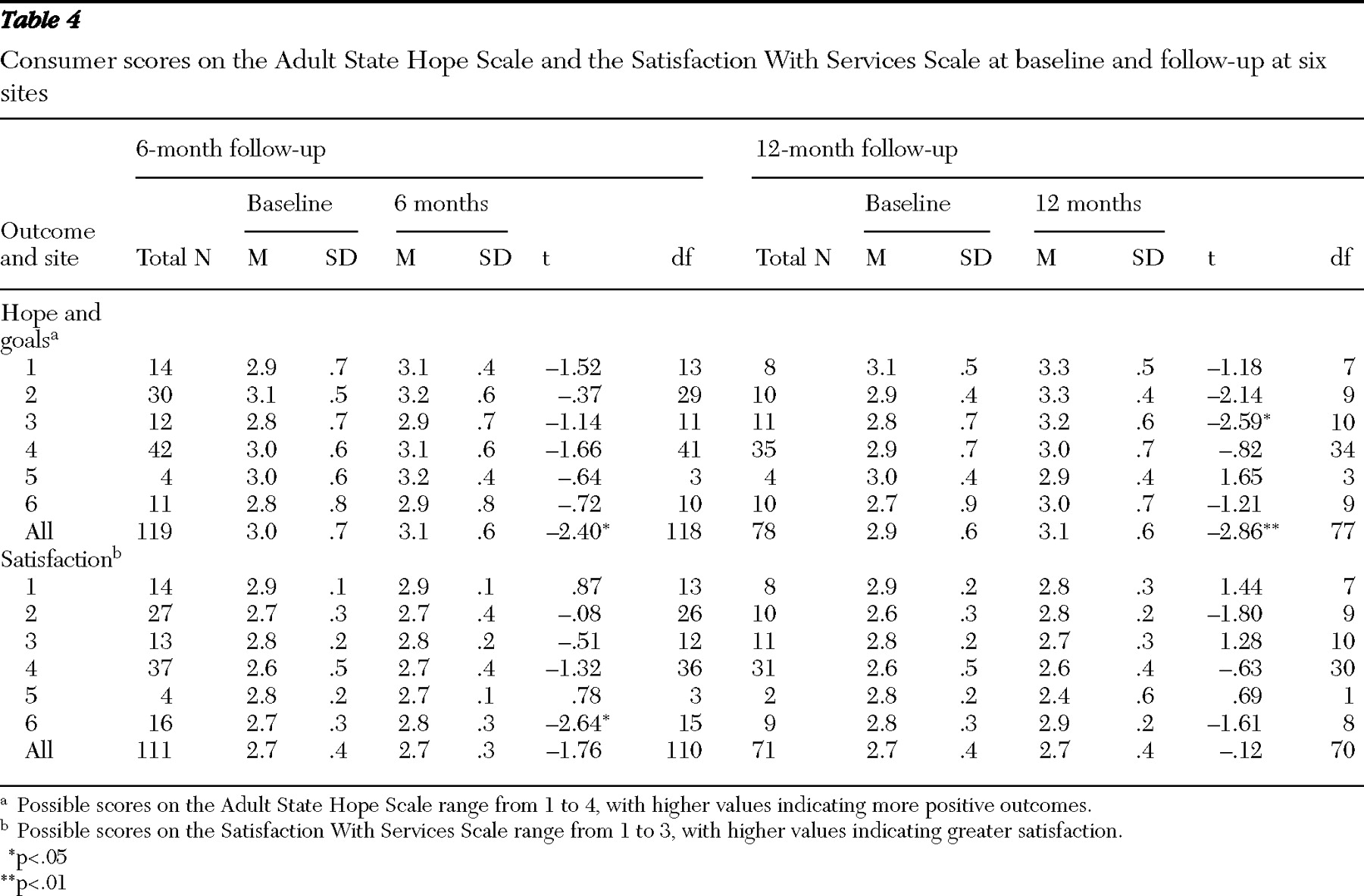

Questionnaire responses showed significant increases in illness management skills (self- and clinician-rated) and hope but no change in satisfaction with services at the six- and 12-month follow-ups (Tables 3 and 4). The effect sizes for change in clinician-rated illness management skills were large at both six and 12 months (.90 and .84, respectively), and consumer-rated illness management skills indicated moderate to large effects over the same period (.49 at six months and .84 at 12 months). Although the improvement in hope was significant for the overall sample at both six and 12 months, the effect sizes were small to moderate (.20 and .35, respectively).
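The article does not state which standardized effect size formula produced these values. As a hedged illustration only, the Python sketch below computes two common choices for pre-post designs: the mean change divided by the standard deviation of the change scores (often written d_z), and the mean change divided by the baseline standard deviation. The two can differ noticeably for the same data, so neither should be assumed to reproduce the figures reported here; the function names are hypothetical.

```python
# Illustrative only: two common pre-post effect size formulas. The article
# does not specify its formula, so these need not match the reported values.
from statistics import mean, stdev
from typing import Sequence


def d_change_sd(baseline: Sequence[float], follow_up: Sequence[float]) -> float:
    """Mean change divided by the SD of the change scores (d_z)."""
    if len(baseline) != len(follow_up) or len(baseline) < 2:
        raise ValueError("Need at least two paired observations")
    diffs = [f - b for b, f in zip(baseline, follow_up)]
    return mean(diffs) / stdev(diffs)


def d_baseline_sd(baseline: Sequence[float], follow_up: Sequence[float]) -> float:
    """Mean change divided by the SD of the baseline scores."""
    if len(baseline) != len(follow_up) or len(baseline) < 2:
        raise ValueError("Need at least two paired observations")
    return (mean(follow_up) - mean(baseline)) / stdev(baseline)
```

With invented scores of 1, 2, 3, 4 at baseline and 2, 4, 4, 6 at follow-up, d_z is about 2.60 and the baseline-SD version is about 1.16, which shows why the choice of denominator matters when comparing effect sizes across studies.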

Discussion

We were able to successfully implement illness management and recovery at six of seven sites. Among the six sites where implementation was completed, five were able to achieve a high level of fidelity within 12 months, and the sixth site reached this level by the end of 24 months. The level of fidelity achieved was similar to findings in the National Implementing Evidence-Based Practices Project (19), with scores at or above 4.0. The slower progress in improvement of organizational factors related to sustaining evidence-based practices (as measured by the GOI) suggests that organizational structures may be more difficult to transform in a short period. For instance, changing management and monitoring practices at the agency level to support illness management and recovery may take more consensus and infrastructure building than changing the practice of individual programs or practitioners. Anecdotal evidence indicated that some higher-level managers did not anticipate the need to address agency practices and envisioned that we would work only with the core practitioners. However, previous research suggests that organizational contexts are instrumental in successfully sustaining implementation and are well worth the effort (12).

We found positive changes in consumer outcomes, as have others (8,9,10). The improvements in illness management reported by both consumers and clinicians represented moderate to large effect sizes and were similar to findings from a randomized controlled trial in Israel (10). Self-reported hope showed less improvement; significant but small improvements were seen in the overall sample at six and 12 months. Satisfaction with services did not change in the overall sample.

Dropout rates at sites ranged from about 10% to 50%. The program with 50% dropout likely represents an anomaly because it initially offered illness management and recovery in its subacute facility. Consumers quickly transitioned off the unit to other parts of the agency where illness management and recovery was not yet in place. The agency stopped providing the full program in this setting but continued to present some of the materials to engage consumers and refer them to illness management and recovery after they left the subacute unit. The dropout rates in the other agencies are close to the range in other published studies, 11% to 29% (8,10).

Although we consider this to be a successful implementation project, there were several challenges. One site (site 7) did not implement illness management and recovery and appeared to have structural difficulties, even though leadership voiced support. This program had three different designated program leaders in one year, all of whom had substantial responsibilities besides illness management and recovery. In addition, these leaders were "drafted" into the position and may have lacked personal commitment to the program even if external resources had been available. We tried several types of interventions, including meeting with the program director and team leader to review the implementation requirements and the agency's commitment, additional on-site and phone consultation, and a "restarting." Finally, we addressed the implementation difficulties as a stage-of-change mismatch (34), in that the agency appeared to be more in a "contemplation" stage than an "action" stage, whereas our approach was action oriented. Implementation at a second site (site 2) eventually reached a high degree of fidelity, but it took much longer than at the other five sites. Similar to site 7, site 2 initially planned to train a large group of clinicians at once, but it did not have the infrastructure for supervision, coordination, and support of these clinicians. A later restructuring with a new program leader helped facilitate implementation.

Conducting fidelity assessments with the Illness Management and Recovery Fidelity Scale as originally designed was challenging. At baseline, scoring was difficult because the scale is written specifically for the approach outlined in the toolkit, rather than for broader educational or goal-setting programs. When evaluating an agency program before the intervention was implemented, we rated the program on the closest available activities; for example, a program that offers educational groups with handouts is closer to the illness management and recovery program than a program that does not offer structured education. A second fidelity-related issue was the method of rating. Because the illness management and recovery program emphasizes clinically sophisticated techniques rather than outward model structures, some items in the scale rate the quality and extent of certain therapeutic techniques. These types of ratings would best be obtained through observation of multiple sessions, as in psychotherapy research; however, this method is impractical for implementation monitoring on a wider scale. Thus we relied on chart documentation and interviews with clinicians and consumers, supplemented by some observation, for fidelity assessment.

Early in the project we worked with scale developers to revise the fidelity scale to be more useful in actual practice, and a revised version is now available (upon request from the ACT Center of Indiana) and was used in the latter part of our study. For nonresearch projects, we no longer measure fidelity before program implementation. Instead we wait at least three months to make the first fidelity assessment. This way the program has started and has some structures in place for a more meaningful rating. As a developmental tool, fidelity assessment at a later point seems a better use of resources and provides helpful feedback. Measurement of fidelity before implementation is an exercise in confirming the obvious—that the program has not been implemented.

Another challenge was providing illness management and recovery in a group format. A group approach can be cost-effective and has the added benefit of peer support. However, several sites initially reported difficulty ensuring that all consumers' goals were addressed while also covering enough of the material to make steady progress through the toolkit. Smaller groups (for example, three or four individuals) may work better than the eight participants specified in the fidelity scale. An alternative solution is to address personal goals for half the group in one meeting and for the other half in the following meeting. Gingerich and Mueser have since revised the original manual to include information specifically for group format (17).

Some clinicians reported difficulty implementing illness management and recovery in a case management setting. At one site (site 3), case managers reported being distracted from the materials because of the time needed to "put out fires." We discussed potential solutions, such as assigning a "specialist" role on the team to one of the case managers while allowing the others to focus on case management needs. This approach worked well in an earlier pilot program with an assertive community treatment team (9). Another possibility is to connect consumers' immediate needs with their long-term goals to help them see the relevance of illness management and recovery to their lives and current situation. Site 3 chose to identify a specialist who worked in the clubhouse. Although she was able to focus on illness management and recovery, the level of penetration at that site was low, with only one person running one illness management and recovery group at a time. Thus agencies need to identify penetration goals and adjust implementation plans accordingly.

Although consumer outcomes were positive, this was an uncontrolled study with self- and clinician-reported measures. We cannot rule out other factors that may have contributed to changes, such as selection, maturation, other interventions, and positive response bias. There were not enough sites, or enough variability in fidelity across sites, to examine the best method of implementation. However, sites with the least difficulty started small, with one pilot program that could later be spread. In contrast, agencies that sought immediate widespread implementation struggled with practicalities of supervising large numbers of clinicians and ensuring accountability for provision of the service. We recommend that agencies start with a pilot program, with training, close supervision, and monitoring of fidelity and program outcomes. In addition, the agencies that have continued to implement illness management and recovery beyond this demonstration project (the practice stage in Simpson's transfer model [15]) have internalized both the training and the monitoring functions by developing internal experts to serve as trainers and fidelity assessors. One agency hosts quarterly half-day retreats for staff to review outcomes, hear from consumers, and problem solve and plan for continued implementation. These types of efforts can help maintain support for the program over time.

Conclusions

This study of a statewide implementation demonstrates that illness management and recovery can be implemented with a high degree of fidelity in multiple sites in a relatively short period. Although the number of programs and the sample selected for outcome monitoring were limited, we learned a great deal from the process of working with sites to implement this package of evidence-based interventions focused on recovery.

Acknowledgments and disclosures

This study was funded by grant SM56140-01 from the Substance Abuse and Mental Health Services Administration and a contract with the Division of Mental Health and Addiction, Indiana Family and Social Services Administration. The authors appreciate the involvement of the following sites in the implementation project: Adult and Child Mental Health Center, Inc.; Bowen Center; Gallahue Mental Health Center; Madison Center; Park Center, Inc.; Quinco Behavioral Health Systems; and Wabash Valley Hospital, Inc.

Dr. Salyers, Mr. Gearhart, and Dr. Rollins are co-owners of Target Solutions, LLC, which provides consultation and training in illness management and recovery. The other authors report no competing interests.