Evaluations by consumers are widely recognized as important indicators of the quality of behavioral health services (1,2,3). Such information can be used both for internal quality improvement and to meet requirements of third-party payers and regulatory agencies.

However, health plans and service delivery sites frequently use unstandardized instruments that have not been well validated. Important limitations of existing instruments and methods include the absence of information on the psychometric properties of the survey instruments, the instruments' lack of sensitivity for detecting dissatisfaction, low response rates, and a consequent lack of representativeness of responders (4).

Data have been reported on the reliability and validity of the Client Satisfaction Questionnaire (CSQ) and the Service Satisfaction Scale (SSS-30) (5). More recently, the Mental Health Statistics Improvement Program (MHSIP) of the federal Center for Mental Health Services has developed a consumer-oriented mental health report card to assess the care of persons with serious mental illness (6,7). The CSQ, the SSS-30, and the MHSIP consumer survey focus primarily on services received within particular treatment facilities.

Recent efforts to develop and implement nationally standardized performance and satisfaction tools that would allow comparisons between different service settings and health care delivery systems have brought together the perspectives and expertise of many stakeholders, including purchasers, providers, administrators, researchers, and consumers. The goals of these efforts are to develop meaningful indicators of the quality of mental health care, to achieve consensus among stakeholders on the indicators selected, to implement the survey tools, and, ultimately, to report on the comparative quality of the organizations assessed.

The American Managed Behavioral Healthcare Association (AMBHA) developed Performance-Based Measures for Managed Behavioral Healthcare Programs (PERMS), a set of 16 indicators that include aspects of access to care, consumer ratings of satisfaction, and quality of care (8). The National Committee for Quality Assurance (NCQA) has developed the Health Plan Employer Data and Information Set (HEDIS), the most widely used report card system to compare quality of care across health care plans (9). The Foundation for Accountability (FACCT), a not-for-profit organization, has also supported the development of quality measures and dissemination of performance information to consumers (10).

A prominent effort in consumer evaluation of services is the Consumer Assessment of Health Plans Study (CAHPS) (11). Funded by the Agency for Health Care Policy and Research (AHCPR), CAHPS is a collaborative effort of Harvard University, Rand Corporation, and the Research Triangle Institute, working with the health care industry and consumers. The goal of CAHPS is to develop a set of standardized survey questionnaires and report formats that can be used to collect and report meaningful and reliable information from health plan enrollees about their experiences (12).

The CAHPS consortium worked closely with other groups to achieve consensus on a survey strategy, and the CAHPS survey will be the new HEDIS consumer survey starting in 1999. In 1998 AHCPR, NCQA, and the CAHPS consortium completed a convergence of the CAHPS survey and the NCQA Member Satisfaction Survey. This new instrument, CAHPS 2.0H, is part of health plan reporting requirements for NCQA accreditation in 1999. In addition, the Health Care Financing Administration is using the CAHPS survey to assess care in managed care plans providing care to Medicare beneficiaries. In 2000 it plans to use the CAHPS survey with Medicare beneficiaries receiving fee-for-service care.

This paper describes the goals, design principles, rationale, and development of a behavioral health version of the CAHPS survey, the Consumer Assessment of Behavioral Health Services instrument (CABHS).

Development of the behavioral health instrument

Goals of the project

The CABHS development team was assembled in January 1997 by the Harvard University CAHPS team to include experts in mental health and substance abuse quality and outcome assessment. The CABHS was developed over the first nine months of 1997.

The goal of the CABHS project is to develop and test a survey that can be used by consumers, clinicians, health care plans, purchasers, and state and federal agencies to collect information about the quality of mental health and substance abuse care in a variety of treatment settings. The survey data could be used to guide quality improvement and to facilitate more informed choices by consumers and purchasers.

Design principles and conceptual domains

The following general principles guided the development of both the CAHPS and the CABHS surveys.

• Survey instruments should allow valid comparisons across a wide range of potential users, including privately insured individuals and those in publicly funded programs, and for all types of health care delivery systems.

• Questions should focus on health care experiences for which consumers are the best or only source of information.

• Questions should cover areas that consumers and purchasers want to know about when they are choosing health insurance plans.

• Data from the survey should be as accurate and reliable as possible. Efforts should be made to maximize response rates, a specific and reasonable time frame for recall should be specified, and questions should be consistently understood by respondents.

• The survey should be as easy as possible to use by either mail or telephone and should provide comparable results when administered in different ways.

An extensive literature review and input from focus groups suggested domains of quality that are important in evaluating all health services and insurance plans.

• Access to care, including timeliness of appointments and availability of telephone services

• Quality of personal interactions and communication with providers, information given by providers to consumers and by consumers to providers, continuity and coordination of care, and global evaluation of care

• Health plan enrollment and payment for services

• Quality of the health plan, including access to information and services provided by the plan, administrative burden, and global evaluation of the plan.

Special issues

To identify quality issues that are particularly important to consumers of mental health and substance abuse services, we reviewed 16 published and unpublished consumer satisfaction surveys that have been used in mental health facilities and systems. (The surveys and citations of their sources are available on request.) We also examined performance indicators that have been identified by NCQA, AMBHA, and MHSIP and practice guidelines for psychiatric evaluation and treatment of major depressive disorders, bipolar disorders, substance use disorders, and eating disorders published by the American Psychiatric Association.

From these sources we identified three quality domains of particular significance to mental health and substance abuse service recipients: respect for consumer-patient rights, consumer-patient participation in the treatment process, and information about the benefits and risks of psychotropic medications.

Structure of the instrument

Many features of the CAHPS survey transferred to the behavioral health survey without alteration. For example, following the CAHPS format, we included screening questions about the use of particular services and the occurrence of events that might not apply to everyone. If a respondent answers "no" to a screening question, he or she continues on to the next question. Affirmative answers to screening questions are followed up with additional questions. Only "yes" or "no" answers are permitted for the screening questions, although the follow-up questions include an "inapplicable" response option. This approach was used because previous research indicated that the number of respondents who were specifically asked the screening question and who indicated that the question did not apply was much larger than the number who used the corresponding "inapplicable" response option for the follow-up questions (13).
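The skip pattern described above can be illustrated with a minimal sketch. The class name, function name, and item wording below are hypothetical and are not taken from the CABHS instrument; the sketch only shows the routing rule that a "no" on a screening question bypasses its follow-up questions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ScreenedBlock:
    """A yes/no screening question plus the follow-up questions it gates."""
    screener: str
    follow_ups: List[str] = field(default_factory=list)


def follow_ups_to_ask(block: ScreenedBlock, screener_answer: str) -> List[str]:
    """Return the follow-up questions a respondent should be shown."""
    # Screening questions accept only "yes" or "no".
    if screener_answer not in ("yes", "no"):
        raise ValueError("screening questions accept only 'yes' or 'no'")
    # "no" skips the follow-ups; "yes" routes the respondent into them.
    return list(block.follow_ups) if screener_answer == "yes" else []


# Hypothetical item wording, for illustration only.
crisis_block = ScreenedBlock(
    screener="In the last 6 months, did you have a crisis or emergency?",
    follow_ups=["How often did you get help as soon as you needed it?"],
)
```

In this sketch, respondents who answer "no" never see the follow-up items, so they are excluded from those items' denominators rather than being asked to choose an "inapplicable" option themselves.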

We also used the CAHPS response options for rating aspects of care and of the behavioral health plan. Thus most questions that used a rating scale were phrased in terms of how often particular events occurred during treatment, with the response options of never, sometimes, usually, and always. Global evaluations of care were rated on an 11-point scale from 0 to 10, with 0 indicating the worst possible care and 10 the best possible care.
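As a rough illustration of these two response formats, the sketch below scores a frequency item and validates a global rating. The numeric coding of never through always as 1 through 4 is an assumption, chosen to be consistent with a 4-point scale; the function names are hypothetical, and official scoring rules for the instrument are not described here.

```python
from statistics import mean
from typing import List, Optional

# Assumed numeric coding for the four frequency options (1 = least favorable).
FREQUENCY_SCORES = {"never": 1, "sometimes": 2, "usually": 3, "always": 4}


def score_frequency_item(responses: List[Optional[str]]) -> Optional[float]:
    """Mean score over answered responses; None (skipped or missing) is ignored."""
    scored = [FREQUENCY_SCORES[r] for r in responses if r is not None]
    return mean(scored) if scored else None


def validate_global_rating(rating: int) -> int:
    """Accept only whole-number global ratings from 0 (worst) to 10 (best)."""
    if isinstance(rating, bool) or not isinstance(rating, int):
        raise ValueError("global rating must be a whole number")
    if not 0 <= rating <= 10:
        raise ValueError("global rating must be between 0 and 10")
    return rating
```

Skipped or inapplicable responses are passed as `None` so that an item's mean reflects only the respondents who actually answered it.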

Following the CAHPS model, we focused the behavioral health survey on outpatient services. Although inpatient care is an important and costly component of mental health treatment, utilization rates for inpatient service are low—10 percent, based on the pilot study. Consequently, we felt that detailed questions about the quality of inpatient care would be better asked at the facility level and targeted specifically to users of inpatient services.

Consumer focus groups

An initial instrument was reviewed by experts throughout the country. Based on their comments, we developed a revised version that was tested in consumer focus groups. Three focus groups were conducted, one each composed of consumers receiving treatment for depression or anxiety, substance abuse, and schizophrenia. Focus group members included males and females over age 18. Racial and ethnic minority groups were represented, as were consumers insured by private (commercial) health plans and public (Medicare disability and Medicaid) health plans. The focus groups completed the instrument and discussed the items and their responses. Participants' understanding of the wording of items and the response choices, the content of their responses, and their ideas about important issues guided refinement of the instrument.

Feedback from the focus groups helped identify several content areas that did not transfer well from the CAHPS survey, as well as more general conceptual issues. For example, the groups voiced great concern about adequacy of insurance coverage. Consequently, we added a question to assess how much of the services received were paid for by the plan.

Cognitive interviews

Based on the focus group results, a revised instrument was prepared and tested in cognitive interviews with ten patients who were being treated for a range of disorders, including depression, anxiety, substance abuse, and psychotic disorders, and who had different types of insurance coverage, including Medicare disability, Medicaid, and commercial insurance.

Research on consumers' comprehension of quality care indicators suggests that many survey items are not well understood (14). Cognitive testing provides a qualitative process for evaluating questionnaire items to ensure that respondents are able to answer the questions, that the questions are consistently understood, and that respondents interpret the content of the questions as intended by the instrument's developers (15,16).

The cognitive interviews were divided into several brief sets of survey questions. After asking each set of questions, interviewers asked a series of probe questions to assess respondents' understanding. Respondents were then asked to elaborate on their answers so that their understanding of the substance of each question could be evaluated. Interviewers took detailed notes. All interviews were audiotaped, with respondents' written informed consent.

After the interviews, the interviewers met to discuss components of the survey that were not clear to respondents, including instructions, issues of what services to include in the evaluation of their care, and specific items that generated questions in the minds of respondents. Using information obtained from the cognitive interviews, we revised the instrument for pilot testing.

The resulting CABHS mental health and substance abuse module included 27 questions about the quality of outpatient services received over the last six months, 15 questions about services received from the health insurance plan for behavioral health, seven screening questions to determine the use of particular services, and nine demographic and health status questions to permit adequate description of sample characteristics.

The pilot test

Procedure

The survey was pilot tested from November 1997 through January 1998 with two groups of mental health consumers: 200 commercially insured individuals and 300 Medicaid enrollees. Both consumer groups were members of a health maintenance organization (HMO) for which the behavioral health component was managed by an external managed behavioral health care organization. The survey was conducted by the Center for Survey Research at the University of Massachusetts at Boston, an organization independent of the HMO and the managed care organization.

Consumers were randomly assigned to one of two modes of survey administration: mail and telephone. A questionnaire, including a cover letter, was mailed to the first group, followed by a reminder postcard, followed by a second complete mailing of the questionnaire and cover letter to nonrespondents. The survey was administered by telephone to the second group of consumers; before the telephone survey was conducted, potential respondents received an advance letter informing them about the survey.
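Random assignment to survey mode could be carried out along the following lines. This is a sketch only: the equal split between modes, the fixed seed, and the function name are assumptions, and the allocation procedure actually used in the pilot is not specified beyond random assignment.

```python
import random
from typing import Dict, Iterable, List


def assign_modes(consumer_ids: Iterable[str], seed: int = 0) -> Dict[str, List[str]]:
    """Shuffle the sample and split it between mail and telephone administration."""
    rng = random.Random(seed)  # fixed seed keeps the allocation reproducible
    shuffled = list(consumer_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2  # assumed equal allocation to the two modes
    return {"mail": shuffled[:half], "telephone": shuffled[half:]}


# Hypothetical identifiers, for illustration only.
sample = [f"consumer-{i:03d}" for i in range(10)]
assignment = assign_modes(sample)
```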

Results

Response rates.

For commercially insured consumers, survey administration by telephone yielded a substantially higher response rate than the mailed survey (52 percent versus 33 percent). For consumers insured by Medicaid, the telephone response rate was lower than the response rate for the mailed survey (25 percent versus 30 percent) because of the large number of unusable telephone numbers among the Medicaid beneficiaries. Among the Medicaid consumers who were assigned to be interviewed by telephone, 55 percent were not reachable at the phone number given, or the phone had been disconnected. Among individuals with usable phone numbers, telephone response rates were 63 percent for commercially insured consumers and 57 percent for Medicaid consumers.
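The relationship between the overall telephone response rate and the rate among consumers with usable phone numbers can be sketched as follows. The function name is hypothetical, and the calculation assumes that the adjusted rate simply removes unreachable consumers from the denominator and that no unreachable consumer responded.

```python
def adjusted_response_rate(raw_rate: float, unusable_fraction: float) -> float:
    """Response rate among sampled consumers whose phone numbers were usable."""
    if not 0 <= unusable_fraction < 1:
        raise ValueError("unusable_fraction must be in [0, 1)")
    # Dropping unreachable consumers shrinks the denominator only.
    return raw_rate / (1 - unusable_fraction)


# Medicaid telephone group: 25 percent responded overall, 55 percent unreachable.
medicaid_phone = adjusted_response_rate(0.25, 0.55)
print(round(medicaid_phone, 3))  # 0.556
```

Under these assumptions the Medicaid telephone group comes out at about 56 percent, close to the reported 57 percent; the small gap is plausibly due to rounding in the published percentages.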

Respondent characteristics.

A total of 160 persons responded to the mailed or telephone version of the survey. Respondents did not differ significantly from nonrespondents in age or sex.

The majority of the respondents were female (126 respondents, or 80 percent), were between the ages of 25 and 44 (97 respondents, or 61 percent), and were high school graduates (129 respondents, or 82 percent). Seventy-eight respondents (49 percent) were commercially insured, and 82 (51 percent) were Medicaid beneficiaries. Almost two-thirds (100 respondents, or 64 percent) were Caucasian, 30 (19 percent) were black or African American, and 25 (17 percent) were members of other racial groups. Overall health was reported to be fair or poor by 43 respondents (27 percent). Mental health was reported to be fair or poor by 54 respondents (34 percent). (Percentages were calculated based on the number of respondents to whom the question applied and the number who answered the question.)

Missing data.

Analysis of the CABHS survey data revealed that each item was completed by almost all respondents. The number of respondents who did not answer a particular question ranged from 0 to 5 percent. However, responses to the screening questions indicated that many of the events assessed in the survey were not applicable to all respondents. For example, 96 respondents (60 percent) reported that they did not have a crisis or emergency situation.

Self-reported service utilization.

A total of 136 respondents (86 percent) reported receiving treatment for mental health, and 96 (76 percent) reported taking prescription medications as part of their mental health treatment. Fourteen respondents (9 percent) reported receiving services for alcohol abuse, and 11 (7 percent) received services for drug abuse. Thirteen respondents (10 percent) reported having received inpatient care.

Evaluation of care received.

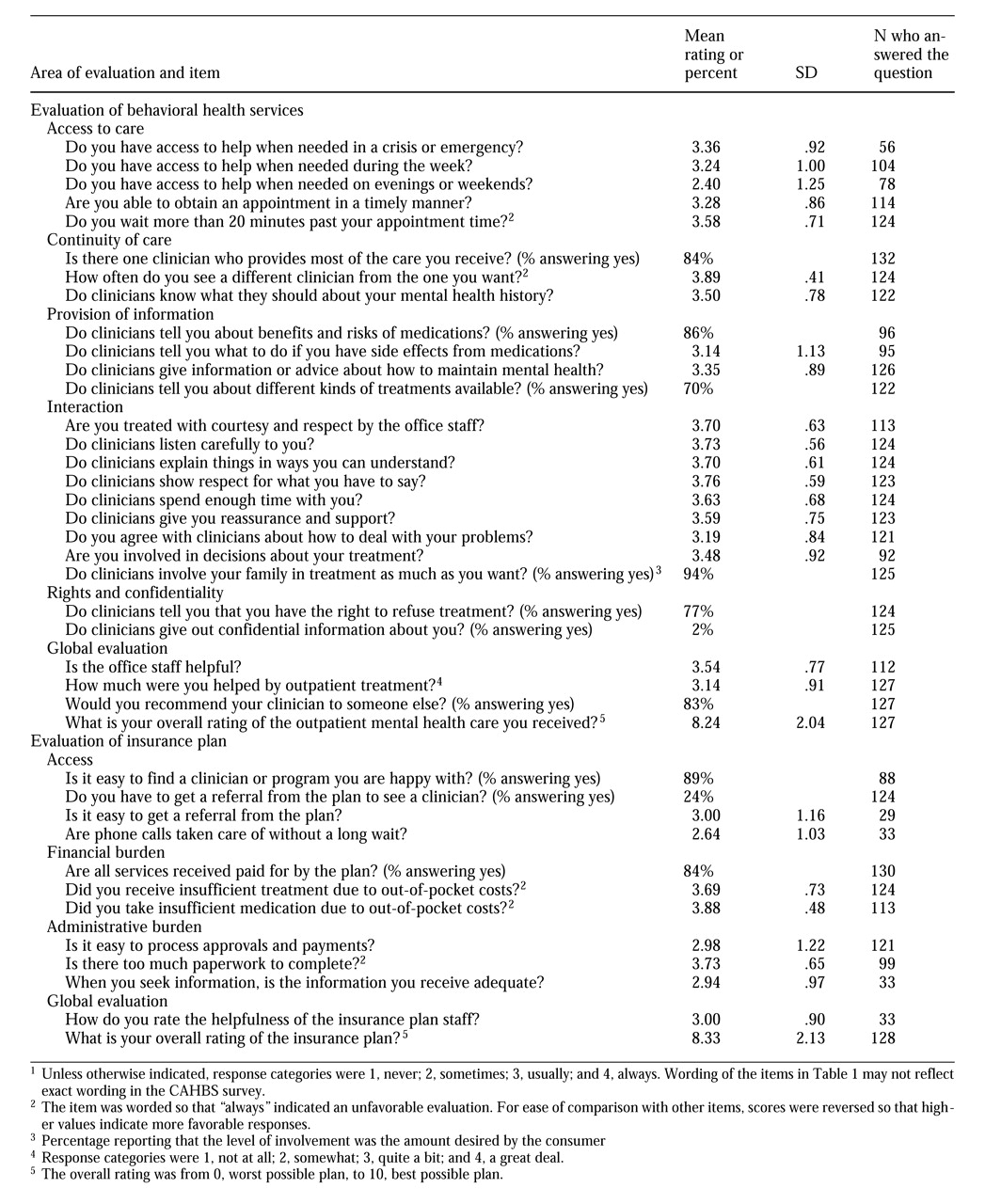

Responses to survey questions evaluating the care received suggested relatively positive experiences. As Table 1 shows, the mean ratings ranged between 3 and 4 on the 4-point rating scale. Generally, clinician-consumer interaction was rated more favorably than access to care. The highest rated aspects of care were respect for the consumer from the clinician and the office staff, the frequency with which consumers felt listened to by clinicians, and clinicians' explanation of things in ways that consumers could understand. Least favorable ratings were given to accessibility of help in the evenings and on weekends. (The wording of items in Table 1 does not reflect the exact wording in the CABHS survey. A copy of the survey is available from the corresponding author.)

Evaluation of the behavioral health plan.

In response to questions about administrative burden and global evaluation of the insurance plan for mental health and substance abuse, respondents gave the most favorable responses to the question about paperwork, an expected result because all participants in this pilot study were HMO members for whom no paperwork was required. Respondents gave the least favorable responses to a question about handling of phone calls to the plan without a long wait. Global evaluation of the plan (a mean±SD rating of 8.33±2.13 on an 11-point scale) was almost identical to the global evaluation of outpatient services (a mean of 8.24±2.04).

Differences between commercial and Medicaid consumers.

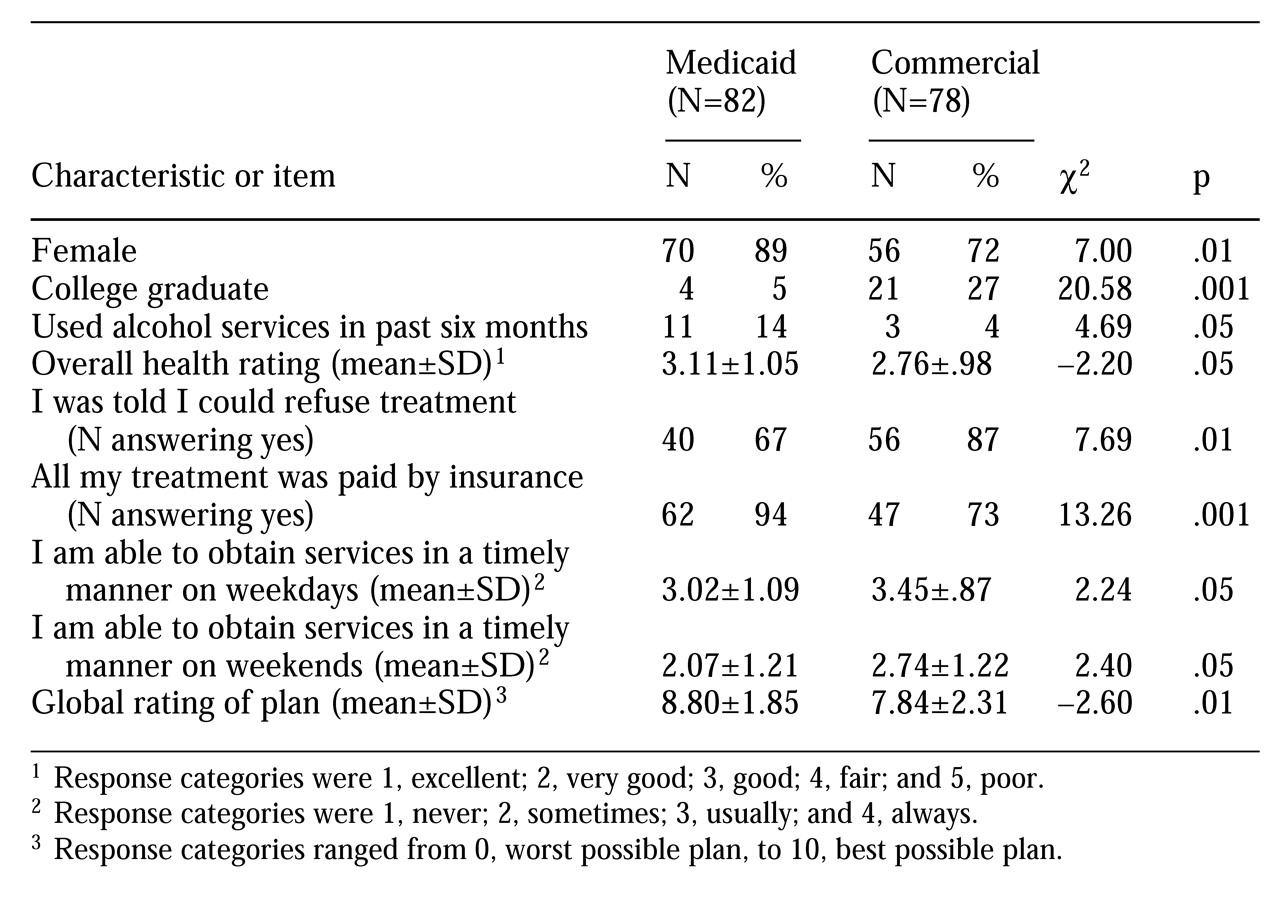

One of the goals of the CABHS survey is to discriminate among health plans. We assessed differences between respondents within the commercial plan and those within the publicly funded plan (Medicaid) using chi square tests for categorical variables and t tests for continuous variables. Because of the relatively small sample size and the large number of statistical tests, these differences should be viewed as suggestive only. However, they do indicate the capacity of the survey instrument to demonstrate differences among insurance plans.
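For a yes/no item compared across two plans, the chi square test reduces to a 2x2 table. The sketch below computes the Pearson statistic without a continuity correction and the p value for one degree of freedom; whether a continuity correction was applied in the pilot analyses is not stated, and the counts shown are hypothetical rather than the study's data.

```python
import math
from typing import Tuple


def chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi square (no continuity correction) for the table [[a, b], [c, d]].

    Rows are the two plans; columns are "yes" and "no" responses.
    Returns (statistic, p_value) with one degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column must have a nonzero total")
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 df, the chi square variable is a squared standard normal,
    # so the upper-tail probability is erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Hypothetical counts: plan A 60 yes / 10 no, plan B 50 yes / 25 no.
stat, p_value = chi_square_2x2(60, 10, 50, 25)
```

With many items tested this way on a small sample, some differences will reach nominal significance by chance, which is why such results are best read as suggestive.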

As shown in Table 2, nine survey items demonstrated statistically significant differences between Medicaid and commercially insured consumers. Two items indicated differences in demographic characteristics, one item indicated differential service use, and one item indicated differences in health status. Five items showed differential evaluation of services. Compared with consumers in commercial plans, a larger proportion of Medicaid consumers were female, were less well educated, and had received alcohol abuse services in the past six months. In addition, a larger proportion of Medicaid consumers gave lower ratings to their overall health status.

Despite differences in evaluation of services and health plans, more favorable ratings were not consistently reported by either group across domains. Consumers in commercial plans rated timeliness of help on weekdays, evenings, and weekends more highly than did Medicaid consumers. A larger proportion of commercially insured consumers reported that they were told they could refuse treatment they did not want (87 percent) compared with Medicaid consumers (67 percent).

On the other hand, Medicaid consumers rated their health plan more highly overall than did commercial consumers. In addition, a greater percentage of Medicaid consumers reported that all their treatment was paid for by their insurance plan, an expected difference because the benefit package differed for the two groups. Commercial enrollees were subject to a copayment and were limited to a maximum of 20 visits. Medicaid enrollees had no copayment or limits on the number of visits. The two groups did not differ significantly in their responses to any other survey items.

To determine whether the differences in ratings between the Medicaid and commercial plans were due to differences in demographic characteristics or health status, we conducted multiple regression analyses using gender, education, and overall health rating as independent variables and timeliness of help and global rating of the plan as dependent variables. Comparable logistic regression analyses were done for the two categorical variables "I was told I could refuse treatment" and "All my treatment was paid by insurance." Results indicated that when the analyses controlled for gender, education, and overall health status, differences between commercial and Medicaid enrollees remained statistically significant.

Conclusions and future directions

Several national organizations are involved in efforts to identify appropriate quality indicators for behavioral health, to implement consumer surveys, and to disseminate information about the quality of health plans to consumers and purchasers. As noted, members of the CAHPS team, NCQA, and others recently completed a lengthy process in which the NCQA Member Satisfaction Survey and the core CAHPS were systematically tested and a single instrument was developed to replace each of the existing surveys.

The Consumer Assessment of Behavioral Health Services (CABHS) incorporates all the appropriate changes and improvements made in the 2.0 version of CAHPS.

In the behavioral health arena, members of the CABHS and MHSIP teams are engaged in a similar process to refine a behavioral health survey instrument that combines the best features of both surveys in an effort to coordinate and integrate national efforts to measure the quality of behavioral health services. These two groups are in regular communication with members of the AMBHA performance measurement committee, as well as consumers and government purchasers of behavioral health services. These efforts are designed to guide development of a national report card for behavioral health that will help purchasers and consumers in choosing insurance plans, as well as provide information needed to improve the quality of behavioral health care.

The current CABHS incorporates the design principles and methodological innovations developed by the CAHPS team. In addition, it incorporates questions of particular salience to users of behavioral health services. Cognitive interviews, focus groups, and pilot test data suggest that the questions in the CABHS survey capture the most important aspects of the quality of behavioral health services that can be reported by consumers. Thus a consumer-based measure of the quality of behavioral health plans that is comparable in content and design to CAHPS now exists.

Future efforts will focus on further field testing of the survey, comparing the CABHS and MHSIP consumer surveys, and developing scoring and reporting formats for CABHS that will be useful to consumers and purchasers in choosing behavioral health services and plans.

Acknowledgments

Research reported in this paper was supported by a grant from the MacArthur Foundation and funds from the Agency for Health Care Policy and Research cooperative agreement HS-0925 supporting the Consumer Assessment of Health Plans Study.