Rapid and sweeping changes in the U.S. health care system have fueled a growing interest among providers, purchasers, and consumers in understanding and measuring quality of health care (1,2). A general consensus exists that the quality of health care can be assessed on the basis of structure (characteristics of providers and hospitals), process (components of the encounter between provider and patient), and outcomes. However, a precise definition remains elusive. Donabedian (3), the most widely cited author on the topic of health care quality, concedes that it is not clear whether "quality is a single attribute, a class of functionally related attributes, or a heterogeneous assortment."

Data for assessing health care quality can be obtained from a variety of sources, including clinical charts, administrative records, direct observation of the patient-provider interaction, outcome questionnaires, and patient surveys (4). Most performance-monitoring systems rely primarily on administrative measures for quality assessment because of the availability of data and low cost of data collection (5,6). However, these data are increasingly being supplemented by information derived directly from consumers, usually in the form of patient satisfaction surveys. Although measuring satisfaction requires primary data collection and is thus more costly and time consuming than obtaining administrative measures, purchasers increasingly regard satisfaction questionnaires as an essential complement to administrative measures of health care quality (7,8).

Each of these data sources can describe both the process and the outcomes of care. For instance, measures of satisfaction can provide information on treatments as well as a consumer perspective on the success of those treatments. Administrative data can provide information about number of visits, which is a process measure, or about readmission, which is a common outcome measure for inpatient care.

However, each type of measure is prone to certain shortcomings. Patient satisfaction surveys may be subject to nonresponse bias; that is, consumers who respond to health surveys may differ from those who do not. Recall bias, which arises when consumers do not accurately recall information about their care, is also a potential problem. Administrative measures, although less prone to these forms of bias, may be a less sensitive measure of health care process than consumer-derived indicators (9).

Despite the inclusion of both administrative and consumer-derived indicators in performance-monitoring systems, little is known about the relationship between these two types of measures (10). For mental health populations, even less information explicating the role of patient-based measures in assessing quality of care is available (11). Many satisfaction subscales directly parallel administrative measures of plan quality. For instance, most surveys ask consumers about access to care, while monitoring systems simultaneously measure access by examining use of outpatient services. However, it is not known whether the constructs assessed by these two types of measures are identical, partly related, or wholly distinct. A better understanding of the relationship between administrative data and satisfaction data may help guide the selection of measures to be included in performance-monitoring systems.

A second issue that arises when assessing quality of care is that although data are collected at the level of the individual patient, comparisons of quality are generally conducted at the level of the provider or hospital. A literature has emerged to relate satisfaction to individual consumer experiences and behavior (12,13) as well as outcomes of care (14), but few studies have examined the use of satisfaction measures to compare quality across different hospitals or providers (10). Report cards rate plans or providers on the basis of mean satisfaction scores across groups of patients rather than on the ratings of individual patients (15).

The study reported here used data compiled for a national mental health program monitoring system recently implemented within the VA health care system to examine the association between two types of measures of health care quality: consumer satisfaction and administrative measures. The administrative measures were chosen to use existing VA electronic sources of data while maximizing comprehensiveness, validity, and reliability (16). A previous study identified a number of individual patient characteristics that were significantly associated with satisfaction with mental health care (17).

The purpose of this study was to examine three questions: What is the association between patient satisfaction measures and administrative measures of plan quality for individual patients? Are there differences in the relationship between the two types of measures when examined on the individual versus the hospital level? Is it necessary to include both types of data when evaluating the performance of health care providers?

Methods

The study used a cross-sectional design to assess the association between data about satisfaction with inpatient psychiatric hospitalization, which were obtained by a questionnaire, and administrative data about the index hospitalization and care during the six months after discharge. Rather than fitting satisfaction and administrative measures into an independent-dependent categorization, the study treated both as correlative indicators of the underlying construct of quality of care. Patients completed the satisfaction questionnaire after the index hospitalization. Data on subsequent readmissions and outpatient care were gathered after the questionnaire was completed.

Sample

The sample was drawn from respondents to a nationwide VA satisfaction survey that was sent to a random sample of inpatients discharged to the community from VA medical centers between June 1 and August 31, 1995 (17). Patients discharged to nursing homes were excluded because follow-up is generally provided in those settings rather than the VA. The subsample chosen for this study included veterans with psychiatric diagnoses (ICD-9 codes 295.00 to 302.99).

Thirty-seven percent of individuals who were sent questionnaires mailed back responses, with a range of 24 percent to 69 percent across participating hospitals. In this sample of individuals with psychiatric diagnoses, respondents were somewhat more likely than nonrespondents to be older, female, married, and white, and less likely than nonrespondents to have psychotic or substance use disorders (17).

Questionnaire

Data on satisfaction were collected using a method based on a four-step procedure designed to maximize response rates (18). A total of 73 questions addressed ten domains of general quality of service delivery and four domains of alliance with inpatient staff. The ten general-quality domains were coordination of care, sharing of information, timeliness and accessibility, courtesy of staff, emotional support, attention to patient preferences, family involvement, physical comfort, transition to outpatient status, and overall quality of care. The alliance domains were sense of energy or engagement on the unit, practical problem orientation of the staff, alliance with clinician, and overall satisfaction with mental health services.

All of the subscales had Cronbach's alpha values of .6 and above, indicating adequate internal reliability (17). The questionnaire was developed from other well-established instruments (19,20,21). The concordance between the content of the subscales and those used in other studies suggests appropriate content validity; that is, the subscales reflect widely accepted domains of consumer satisfaction.
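As a rough illustration of the reliability statistic reported above, Cronbach's alpha for a subscale can be computed from item-level responses. The sketch below is not the study's code; the function name `cronbach_alpha` and the response matrix are hypothetical and serve only to show the standard formula.

```python
from statistics import pvariance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(item_scores[0])
    # Variance of each item across respondents
    item_vars = [pvariance([row[i] for row in item_scores]) for i in range(k)]
    # Variance of each respondent's summed score
    total_var = pvariance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical data: 5 respondents answering 3 items on a 1-5 scale
responses = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [3, 3, 3],
    [1, 2, 2],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

Values closer to 1 indicate that the items within a subscale vary together, which is the sense in which the text describes .6 as adequate.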

Administrative measures

Demographic data, diagnostic information, and other administrative measures were derived from two national VA files—the patient treatment file, a comprehensive discharge abstract of all inpatient episodes of VA care, and the outpatient file, a national electronic file documenting all VA outpatient service delivery. Each questionnaire contained a code that could be linked to a unique patient identifier (an encrypted Social Security number), which in turn was used to merge satisfaction data with the inpatient and outpatient data.

Several administrative measures constructed for a national VA mental health performance-monitoring system were used for comparison with the satisfaction measure. Inpatient measures included length of stay; readmission within 14, 30, or 180 days; and time until readmission. Outpatient measures included follow-up within 30 days or 180 days after discharge, days until first outpatient mental health follow-up (among those with ambulatory follow-up), and number of two-month periods after discharge with at least two mental health or substance abuse outpatient visits. These indicators have been shown to identify a substantial range of variation across hospitals with relatively little redundancy (16).

Potential confounders

Demographic and diagnostic data were obtained to control for case mix in the multivariate analyses. These variables included age, race, gender, income, marital status, severity of medical illness using total number of medical diagnoses as a proxy (22), and psychiatric diagnosis, which was reported as one of five dichotomous variables.

Summary components

To better understand the underlying relationship between the large number of satisfaction and administrative measures, principal components were derived from each set of variables using the SAS factor procedure, with varimax rotation. Components with eigenvalues of one or greater were retained; scree plots confirmed this cutoff as appropriate.
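The retention rule described above (keep components with eigenvalues of one or greater) can be sketched as follows. This is only an illustration of the cutoff and of how the share of variance explained is obtained; the eigenvalues are hypothetical, and the helper `retain_components` is not part of the SAS procedure the study used.

```python
def retain_components(eigenvalues, cutoff=1.0):
    """Apply the eigenvalue >= cutoff rule and report the variance explained.

    For a correlation matrix, the eigenvalues sum to the number of variables,
    so each eigenvalue divided by that sum is a share of total variance.
    """
    total = sum(eigenvalues)
    kept = [ev for ev in eigenvalues if ev >= cutoff]
    return kept, sum(kept) / total


# Hypothetical eigenvalues for five standardized variables (they sum to 5)
eigenvalues = [2.6, 1.2, 0.6, 0.4, 0.2]
kept, share = retain_components(eigenvalues)
print(len(kept), round(share, 2))
```

In practice a scree plot of the same eigenvalues is inspected for an elbow, as a check on whether the cutoff is sensible.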

The principal-components analysis of satisfaction variables revealed two components that explained a total of 61.8 percent of the variance of the satisfaction subscales. The first, termed general service delivery, included nine of the ten subscales derived from the general survey. The second component, termed alliance with inpatient staff, included all four alliance subscales. These components parallel the technical aspects of care (delivery of services) and the interpersonal aspects that have been found to underlie a number of satisfaction measures (23). Cronbach's alpha values for these components were .94 for the first and .82 for the second, indicating strong internal coherence of these constructs.

The principal-components analysis of administrative variables revealed five separate components—three inpatient and two outpatient—which explained a total of 84.9 percent of the total variance. The first inpatient component, readmission intensity, included measures of readmission within 180 days of discharge and total days readmitted within 180 days. The second inpatient measure, early readmission, included readmission within 14 or 30 days. The third measure was length of stay, composed of that single measure.

The first outpatient component, promptness-continuity of outpatient follow-up, comprised three measures: days until first outpatient visit, follow-up within 30 days of discharge, and number of two-month periods in the time after discharge with at least two visits. The second component, any outpatient follow-up, included the single variable connoting any visit within 180 days of discharge. Cronbach's alpha scores for these composite administrative variables ranged from .80 to .94, again reflecting strong internal coherence of these components.

Statistical methods

Random regression, also known as hierarchical linear modeling, was used for all multivariate models (24,25). The technique is designed for models in which individual measurements are clustered into larger groups sharing common characteristics, such as hospitals. This type of analysis was required because of the lack of independence among observations; that is, individuals within the same hospital cannot be considered as independent observations drawn from the target population. Random regression allows comparisons to be made at two distinct levels, patient and hospital, without a loss of statistical power, because all models use the same sample size (26). Missing values were replaced with the mean value of the hospital where the patient was treated so that each analysis was based on 5,542 subjects. The SAS MIXED procedure was used for all random regression analyses.
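The hospital-mean replacement of missing values can be sketched as below. This is a minimal illustration, not the study's implementation; the record layout, the field names `hospital` and `satisfaction`, and the helper `impute_hospital_mean` are assumptions made for the example.

```python
from collections import defaultdict


def impute_hospital_mean(records, field):
    """Replace missing (None) values of `field` with the mean for that hospital.

    Assumes every hospital has at least one observed value for `field`.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for rec in records:
        if rec[field] is not None:
            sums[rec["hospital"]] += rec[field]
            counts[rec["hospital"]] += 1

    filled = []
    for rec in records:
        value = rec[field]
        if value is None:
            value = sums[rec["hospital"]] / counts[rec["hospital"]]
        # Copy the record so the input list is left unchanged
        filled.append({**rec, field: value})
    return filled


# Hypothetical records: two hospitals, one missing score in each
records = [
    {"hospital": "A", "satisfaction": 10.0},
    {"hospital": "A", "satisfaction": None},
    {"hospital": "A", "satisfaction": 20.0},
    {"hospital": "B", "satisfaction": 4.0},
    {"hospital": "B", "satisfaction": None},
]
filled = impute_hospital_mean(records, "satisfaction")
print([rec["satisfaction"] for rec in filled])
```

Mean replacement keeps every respondent in every model, which is what allows all analyses to share the same sample size, although it understates variability in the imputed variable.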

Two levels of analyses were conducted. The first, which measured the associations between satisfaction and other performance measures at the level of the individual patient, included a random intercept term to account for potentially correlated errors attributable to similarities among patients treated at the same hospital. The second level of analyses measured the associations between mean satisfaction and other performance measures at the hospital level, with each model using a random intercept. Thus the former set of analyses examined whether for a given veteran improved satisfaction would be associated with higher ratings on administrative measures, such as an increased likelihood of follow-up after discharge. The latter set of analyses examined whether hospitals with higher satisfaction ratings also performed better on administrative measures of quality.
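One common way to write the two sets of models is shown below. The notation is an assumption introduced for illustration and is not taken from the study: $y_{ij}$ is an administrative measure for patient $i$ in hospital $j$, $s_{ij}$ is that patient's satisfaction score, $\bar{s}_j$ is the hospital's mean satisfaction, $\mathbf{z}_{ij}$ and $\bar{\mathbf{z}}_j$ are case-mix covariates at the patient and hospital level, and $u_j$ is the random intercept for the hospital.

$$
\begin{aligned}
\text{Patient level:}\quad & y_{ij} = \beta_0 + \beta_1 s_{ij} + \boldsymbol{\gamma}^{\top}\mathbf{z}_{ij} + u_j + \varepsilon_{ij} \\
\text{Hospital level:}\quad & y_{ij} = \beta_0 + \beta_1 \bar{s}_j + \boldsymbol{\gamma}^{\top}\bar{\mathbf{z}}_j + u_j + \varepsilon_{ij} \\
& u_j \sim N(0, \tau^2), \qquad \varepsilon_{ij} \sim N(0, \sigma^2)
\end{aligned}
$$

Under this formulation both models are fit to the same patient-level observations, which is consistent with the statement that the sample size is the same at both levels.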

Each model adjusted for heterogeneity of patient caseloads by entering terms into the model pertaining to demographic and diagnostic characteristics of patients or hospital caseloads. Each association controlled for age, race, gender, marital status, income, number of medical diagnoses, and psychiatric diagnosis. The magnitude of each association was calculated as a standardized regression coefficient that represents the number of standard deviations of change in the outcome of interest per standard deviation of change in the explanatory variable. The standardized regression coefficient, which allows comparisons of magnitude across differing variables, represents an approximation of an r value.
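To make the standardized coefficient concrete, the sketch below rescales an ordinary least-squares slope by the ratio of standard deviations. With a single predictor and no covariates the result equals Pearson's r exactly; in the adjusted models described above it is only an approximation of r. The data and function names are hypothetical.

```python
from statistics import mean, stdev


def slope(x, y):
    """Ordinary least-squares slope of y on x (single predictor)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def standardized_coefficient(x, y):
    """SDs of change in y per one SD of change in x."""
    return slope(x, y) * stdev(x) / stdev(y)


def pearson_r(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical satisfaction scores and an administrative outcome
satisfaction = [1, 2, 3, 4, 5]
outcome = [2, 1, 4, 3, 5]
beta_std = standardized_coefficient(satisfaction, outcome)
r = pearson_r(satisfaction, outcome)
print(round(beta_std, 3), round(r, 3))
```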

All dependent variables were checked for normality of distribution, and all variables found not to be normally distributed were appropriately transformed. Because length of stay remained highly skewed after log transformation, it was converted into a five-level integer variable: less than eight days, eight to 14 days, 15 to 28 days, 29 to 60 days, and greater than 60 days. Because of multiple comparisons, the Bonferroni method was used to adjust the critical p value for statistical significance to .05 divided by 30, or .0017.
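The length-of-stay recoding and the adjusted significance threshold can be sketched as follows. The cut points and the figure of 30 comparisons come from the text; the integer codes 1 through 5 and the function names are assumptions made for the example.

```python
def los_category(days):
    """Map length of stay in days to a five-level integer variable."""
    if days < 8:
        return 1   # less than eight days
    if days <= 14:
        return 2   # eight to 14 days
    if days <= 28:
        return 3   # 15 to 28 days
    if days <= 60:
        return 4   # 29 to 60 days
    return 5       # greater than 60 days


def bonferroni_threshold(alpha, n_comparisons):
    """Critical p value after Bonferroni adjustment."""
    return alpha / n_comparisons


threshold = bonferroni_threshold(0.05, 30)
print([los_category(d) for d in (7, 8, 14, 15, 28, 29, 60, 61)])
print(round(threshold, 4))
```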

Results

Characteristics of the sample

A total of 5,542 veterans from 121 hospitals responded to the survey. Reflecting the veteran population from which the sample was drawn, the population was largely male (5,278 veterans, or 94.6 percent), white (3,895 veterans, or 70.3 percent), and poor, with a mean±SD annual income of $9,583±$4,499. The mean±SD length of inpatient stay was 13.2±52.22 days. The three most common psychiatric diagnoses were schizophrenia (1,237 veterans, or 25.3 percent), affective disorder (836 veterans, or 17.1 percent), and posttraumatic stress disorder (776 veterans, or 14 percent).

Associations between satisfaction and administrative variables

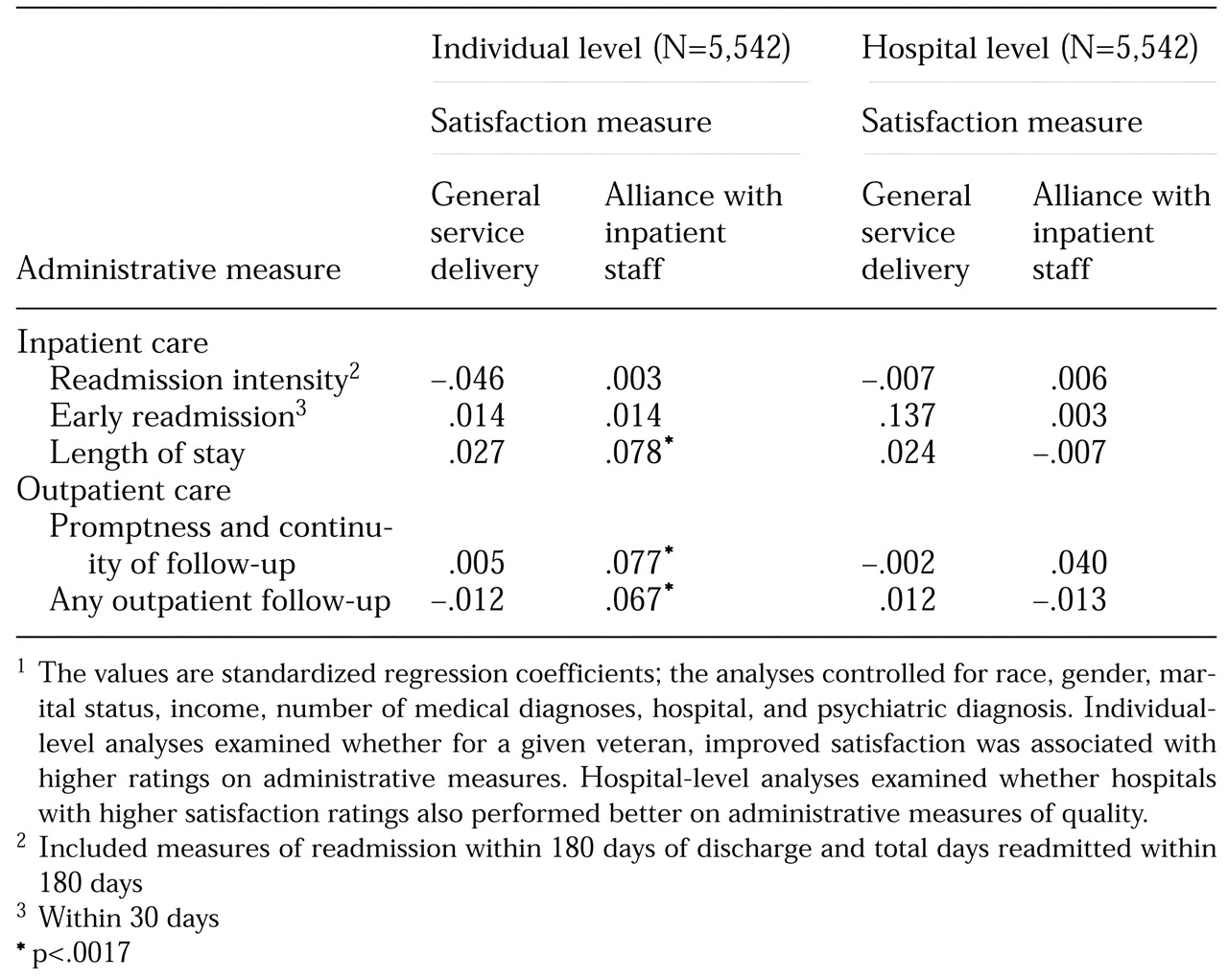

Table 1 presents the associations between the summary satisfaction and administrative variables. Each cell contains a standardized regression coefficient; analyses adjusted for race, gender, marital status, income, number of medical diagnoses, hospital, and psychiatric diagnosis.

At the level of the individual, satisfaction with general quality of service delivery was associated with decreased intensity of readmission—a measure derived from both the likelihood of readmission and the number of days readmitted after discharge. Better alliance with inpatient staff was significantly associated with a greater likelihood of outpatient follow-up, promptness of follow-up, and continuity of follow-up, as well as longer length of stay for the index admission.

Hospitals where patients expressed greater satisfaction with their alliance with inpatient staff also had higher scores for promptness and continuity of follow-up. No other associations between satisfaction and administrative measures were significant.

Discussion and conclusions

This study is the first that we are aware of to examine the association between the two types of indicators most commonly used in mental health performance-monitoring systems, administrative measures and patient satisfaction. At the level of the individual patient, a number of measures of satisfaction with inpatient care were significantly associated with increased likelihood of outpatient follow-up, promptness of follow-up, and continuity of outpatient care, as well as reduced likelihood of readmission. However, these relationships became highly attenuated when hospitals rather than individuals were the unit of comparison, despite the use of an analytic method that preserved sample size and statistical power and adjusted for case mix.

Limitations

Several limitations exist in each source of data. First, the study of patient satisfaction in any system of care raises questions about how well the findings can be generalized to other populations or financing systems. The population with mental disorders seen in VA facilities is similar to that seen in other public-sector settings, although VA has a lower proportion of women and of the very poor.

Second, despite the use of an established mail out-mail back method, the survey response rate for this sample was only 37 percent, a rate typical for mail-in questionnaires distributed to seriously mentally ill subjects (12,27). Past research has demonstrated that telephone and in-home surveys may help improve these response rates, although only to some extent and at considerably increased cost (28). Developing better methods of maximizing response rates among seriously mentally ill people is essential both to ensure accurate measurement of satisfaction and to better understand its relationship to other indicators of quality.

Third, it is never possible to entirely adjust for differences in case mix when comparing quality across institutions. In this study, for instance, it is possible that unmeasured differences in severity of illness across institutions mediated differences both in satisfaction ratings and in quality measures across institutions. Patients who have more serious mental illness might simultaneously have lower levels of satisfaction with care (29) and worse continuity of care (30). Developing better methods of risk adjustment for case mix is one of the most important challenges facing performance-monitoring systems today (31).

Finally, causal statements about the relationship between satisfaction and other quality measures must always be made with caution. Satisfaction can be both a cause and an outcome of health utilization, and distinguishing between the two can be difficult (32). In this study we used the temporal sequence of events (index hospitalization, followed by satisfaction survey completion, followed by readmission and other outpatient indicators) to guide our hypotheses about causality.

What is the association between patient satisfaction measures and administrative measures of plan quality for individual patients?

At the level of the individual, better reported alliance with staff was a significant predictor of higher rates of follow-up and promptness and continuity of outpatient mental health care. The link between alliance with inpatient staff and successful outpatient follow-up suggests that a positive patient-provider relationship in inpatient psychiatric settings may be associated with improved outcomes.

Satisfaction with general service delivery predicted reduced likelihood of readmission and fewer days readmitted. This latter relationship may ultimately be mediated by treatment outcomes. More satisfied consumers may have better outcomes after discharge, reducing the likelihood of rehospitalization. Whatever the mechanism, only the combination of satisfaction with the general and interpersonal aspects of care delivery was associated with higher quality as measured by effective use of inpatient and outpatient services.

Unexpectedly, longer stay was the strongest positive predictor of satisfaction with care for this sample. The finding suggests that this measure may identify a point of divergence between the consumer's and the health care institution's perspective on quality of care. Rapidly declining length of stay has been one of the hallmarks of psychiatric inpatient care over the past decade in both the public and the private sectors (33,34). Shorter stays, while valued by administrators and health care institutions for fiscal reasons, may lead to dissatisfaction for mental health consumers.

Are there differences in the relationship between the two types of measures when examined on the individual level and on the hospital level?

A number of associations existed between patient satisfaction and administrative measures for a given individual. However, these associations largely disappeared when mean satisfaction ratings and scores on administrative measures across hospitals were compared. With the exception of the link between alliance with inpatient staff and promptness-continuity of mental health follow-up, no significant associations were found between the satisfaction components and the administrative components at the hospital level. Even though the use of random regression for analyses at the hospital level preserved the same sample size and statistical power as on the individual level, higher scores on administrative measures were no more likely for hospitals with more satisfied patients than for those with less satisfied patients. This finding is of particular interest because for report card systems rating quality of health care, the hospital (or health plan), rather than the individual, is the relevant unit of comparison (6,8).

How can we explain the relatively weak associations between consumer-based and administrative measures of quality when comparing hospitals?

The literature has documented substantial variability in quality of care not only across but also within institutions (35). These differences are not captured when data are compared between hospitals. If within a given institution, some respondents are satisfied and give the hospital high performance ratings and others are dissatisfied and give the hospital low performance ratings, then the association between satisfaction and administrative performance will wash out when hospitals are compared.
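The wash-out effect described above can be illustrated with a small constructed example. The numbers below are hypothetical and chosen so that satisfaction and the administrative score move together within each hospital while the hospital means are unrelated; they are not drawn from the study data.

```python
from statistics import mean


def pearson_r(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical (satisfaction, administrative score) pairs per hospital
hospitals = {
    "A": [(2, 3), (3, 4), (4, 5)],
    "B": [(3, 2), (4, 3), (5, 4)],
    "C": [(4, 3), (5, 4), (6, 5)],
}

# Within-hospital association: center each patient on the hospital mean
within_sat, within_adm = [], []
sat_means, adm_means = [], []
for patients in hospitals.values():
    m_sat = mean([s for s, _ in patients])
    m_adm = mean([a for _, a in patients])
    sat_means.append(m_sat)
    adm_means.append(m_adm)
    within_sat.extend(s - m_sat for s, _ in patients)
    within_adm.extend(a - m_adm for _, a in patients)

r_within = pearson_r(within_sat, within_adm)   # association among patients
r_between = pearson_r(sat_means, adm_means)    # association among hospital means
print(round(r_within, 3), round(r_between, 3))
```

Here the patient-level association is carried entirely by variation within hospitals, so it vanishes once each hospital is reduced to its mean.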

Is it necessary to include both types of data when evaluating the performance of health care providers?

Although the relationship between satisfaction and other performance measures may be attenuated when comparisons are made at a hospital level, there is evidence that satisfaction is nonetheless a valid construct to assess as a measure of quality across institutions. Pilot data from this survey (17,36) and other similar multidimensional satisfaction scales (37) have demonstrated strong psychometric properties for these measures. Other studies have also found that these subscales can consistently identify differences in satisfaction across hospitals (38).

The association between the two types of measures at an individual level suggests that consumer satisfaction and administrative measures of quality go hand in hand and supports the notion that the two are measuring related underlying constructs. The attenuation of the relationships at a hospital level points to the potential difficulties in using either source of data as a sole indicator of quality across institutions.

Acknowledgments

This work was partly sponsored by grants from the National Alliance for Research on Schizophrenia and Depression and the Donaghue Medical Foundation.