Potential Objections

A critic might object to these findings on a number of grounds. One objection is that the SWAP-200 descriptions were provided by clinicians, whose biases might make them unreliable. Several considerations limit the impact of this criticism. First, all observers have biases. Ideally, one would want to rely on as many credible data sources as possible, and future research assessing the validity of the SWAP-200 should draw upon a combination of clinician observation, self-report, informant report, and research interviews. However, we believe the judgments of experts with an average of over 18 years’ practice experience, who have known the patient over an extended period of time (in this case, for more than 33 sessions on average), are likely to be at least as informative as either self-reports or judgments made in 30 to 90 minutes by research assistants using structured interviews. This is particularly true given the potential confounds of state and trait that make assessment of personality disorders especially difficult (15). One would expect that knowing a patient over an extended time would limit diagnostic “noise” reflecting the vagaries of current axis I state conditions. Such states can bias judgments when diagnosis rests exclusively on a brief cross-sectional snapshot of a patient at a single time.

Second, statistical analyses demonstrate that clinicians can, in fact, use the SWAP-200 to provide reliable descriptions of a disorder. High values for coefficient alpha show that there is good agreement from clinician to clinician, with relatively little measurement error or noise in the composite descriptions (i.e., in the diagnostic prototypes or composite descriptions of actual patients). This does not mean that an individual clinician’s description of a single patient is necessarily reliable; it means that if one averages across the descriptions provided by a group of clinicians, the resulting composite is highly reliable. The high alpha coefficients we obtained—above 0.90 for 14 of 15 diagnoses, and 0.85 for the other—demonstrate that we used large enough groups to accomplish this. These high alpha coefficients are particularly meaningful given that the clinicians differed substantially in their theoretical orientations, training, practice settings, and so on.
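The distinction between the reliability of a single description and that of a composite can be illustrated with a minimal sketch. It assumes that coefficient alpha is computed with clinicians treated as the “items” and the 200 SWAP-200 statements as the cases. The simulated data, the noise level, and the function names are hypothetical and are not drawn from the study.

```python
import random
from statistics import pvariance

def cronbach_alpha(ratings):
    """Coefficient alpha with raters as the 'items'.

    ratings[i][j] is the score rater j assigned to statement i.
    """
    k = len(ratings[0])  # number of raters
    rater_variances = [pvariance([row[j] for row in ratings]) for j in range(k)]
    variance_of_sums = pvariance([sum(row) for row in ratings])
    return (k / (k - 1)) * (1 - sum(rater_variances) / variance_of_sums)

def simulate_ratings(n_raters, n_statements=200, noise_sd=2.0, seed=0):
    """Hypothetical data: one shared 'true' profile plus independent rater noise."""
    rng = random.Random(seed)
    true_profile = [rng.gauss(0, 1) for _ in range(n_statements)]
    return [[t + rng.gauss(0, noise_sd) for _ in range(n_raters)]
            for t in true_profile]

alpha_small_group = cronbach_alpha(simulate_ratings(n_raters=5))
alpha_large_group = cronbach_alpha(simulate_ratings(n_raters=40))
print(f"alpha with 5 raters:  {alpha_small_group:.2f}")
print(f"alpha with 40 raters: {alpha_large_group:.2f}")
```

In this sketch each simulated rater is individually noisy, yet the composite from the larger group should yield a markedly higher alpha than the composite from the smaller group.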

Using large samples of clinicians to refine axis II categories and criteria makes sound psychometric sense. The reliability of 797 experienced clinicians is going to be much higher than the reliability of a small number of experts sitting at a committee table, regardless of how knowledgeable the experts are. The reason is strictly mathematical (49): The more clinicians, the more errors (i.e., idiosyncrasies of individual clinicians) will cancel themselves out.
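One standard way to express this relationship is the Spearman-Brown formula, which gives the reliability of a composite of k raters as k·r / (1 + (k − 1)·r), where r is the reliability of a single rater. We assume here that this is the kind of mathematical argument intended; the single-rater value of 0.20 below is purely illustrative and is not an estimate from our data.

```python
def spearman_brown(single_rater_reliability, n_raters):
    """Predicted reliability of a composite averaged over n_raters raters."""
    r = single_rater_reliability
    return n_raters * r / (1 + (n_raters - 1) * r)

# Hypothetical single-rater reliability, chosen only for illustration.
ILLUSTRATIVE_R = 0.20

for k in (1, 5, 20, 797):
    print(f"{k:>3} raters -> composite reliability {spearman_brown(ILLUSTRATIVE_R, k):.3f}")
```

Under these assumptions, the composite reliability rises steadily with the number of raters and approaches 1.0 for very large groups, even when each individual rater is only modestly reliable.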

Third, the gulf between clinical and research approaches to assessing personality disorders is wide (10). If DSM is to guide clinical diagnosis, it should have clinical relevance, and we know of no better way to guarantee its fidelity to clinical reality than to harness clinical observation to refine it.

Another potential objection is that the high validity coefficients we report are artifactual, because the SWAP-200 descriptions of actual patients may have been based on the clinicians’ implicit prototypes or theories about their patients’ personality disorders, not on the actual characteristics of their patients. If so, then the SWAP-200 descriptions of actual patients would necessarily resemble the descriptions of hypothetical, prototypical patients with the same personality disorder.

This is unlikely for several reasons. First, the SWAP-200 includes 200 items, whereas any given axis II diagnosis includes only seven to 10 criteria. Thus, clinician-respondents in this study were on their own for the other 190, which our prior item analyses had demonstrated are nonredundant and are thus not simply amplifications of the criteria in axis II.

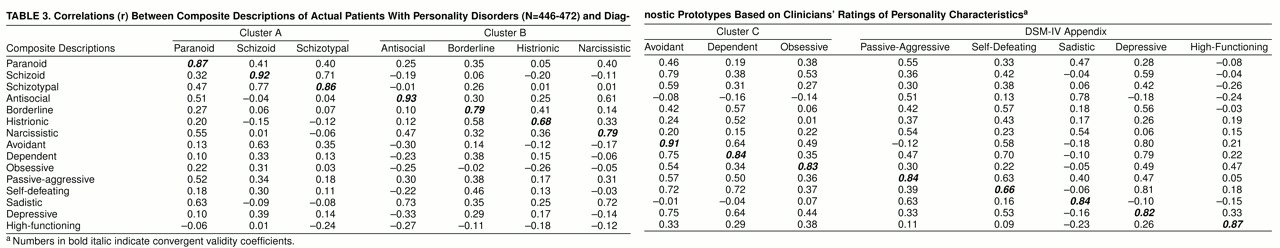

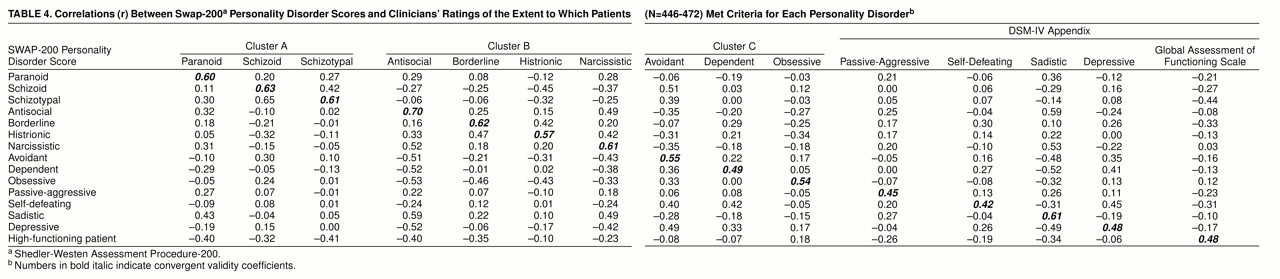

Second, our validity analysis based on clinicians’ ratings (of the extent to which patients met criteria for each personality disorder) is not vulnerable to this potential criticism. The results of this analysis do not depend on the clinicians’ primary diagnosis of their patients. Instead, we found that ratings of 15 different personality disorders correlated in expected ways with SWAP-200 personality disorder scores (which reflect the ranking of 200 different SWAP-200 items). To imagine that instead of describing an actual patient, clinicians were juggling 15 prototypes in their minds while trying to sort 200 items is implausible. If anything, the findings from this second validity analysis would have been biased downward if clinicians who described actual patients were really describing their implicit prototypes or theories about diagnoses, since clinicians would have skewed their understanding of the patient toward the primary diagnosis and away from any secondary diagnoses, leading to poor convergent and discriminant validity estimates on the other 14 dimensional ratings.

Third, as

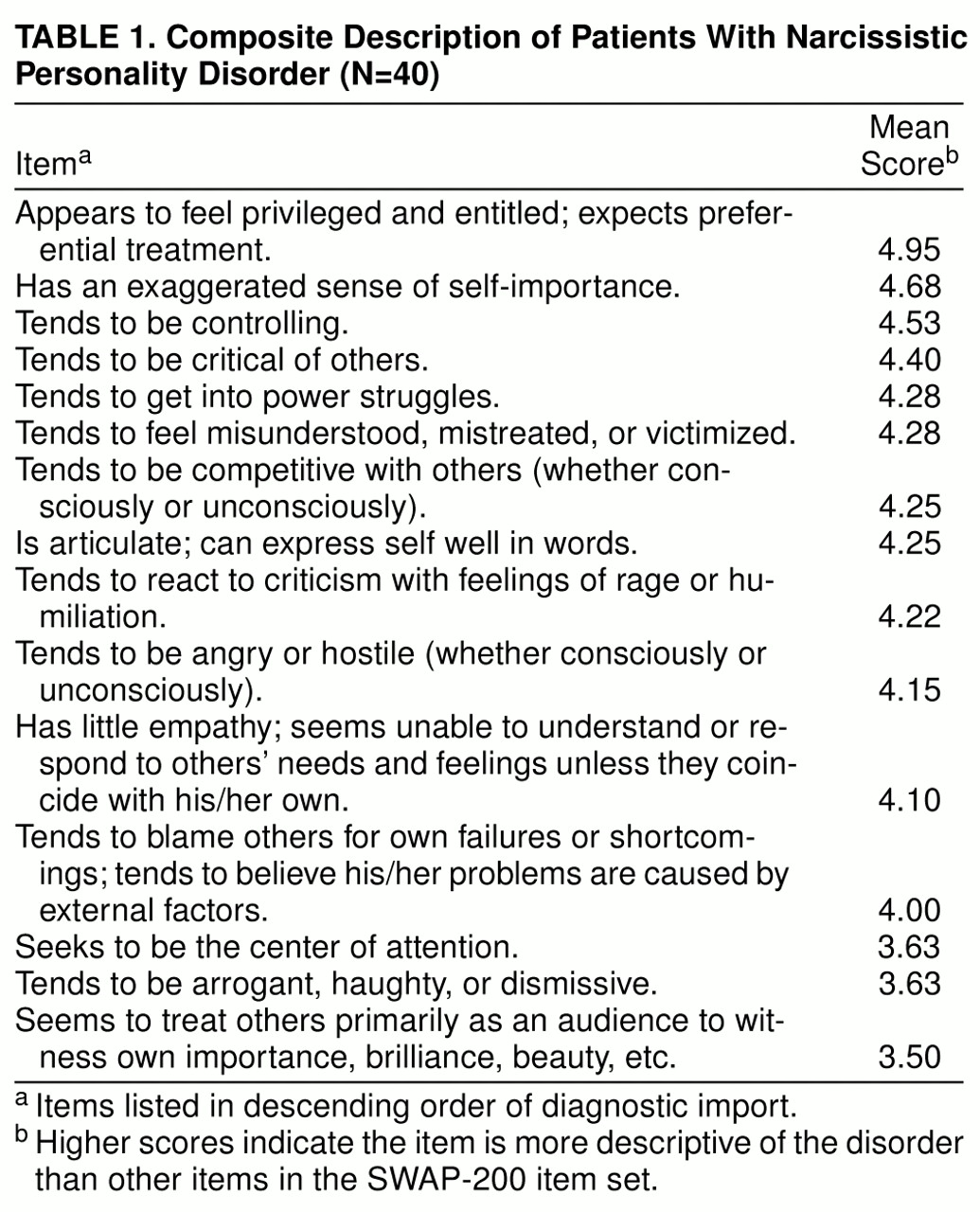

table1 and

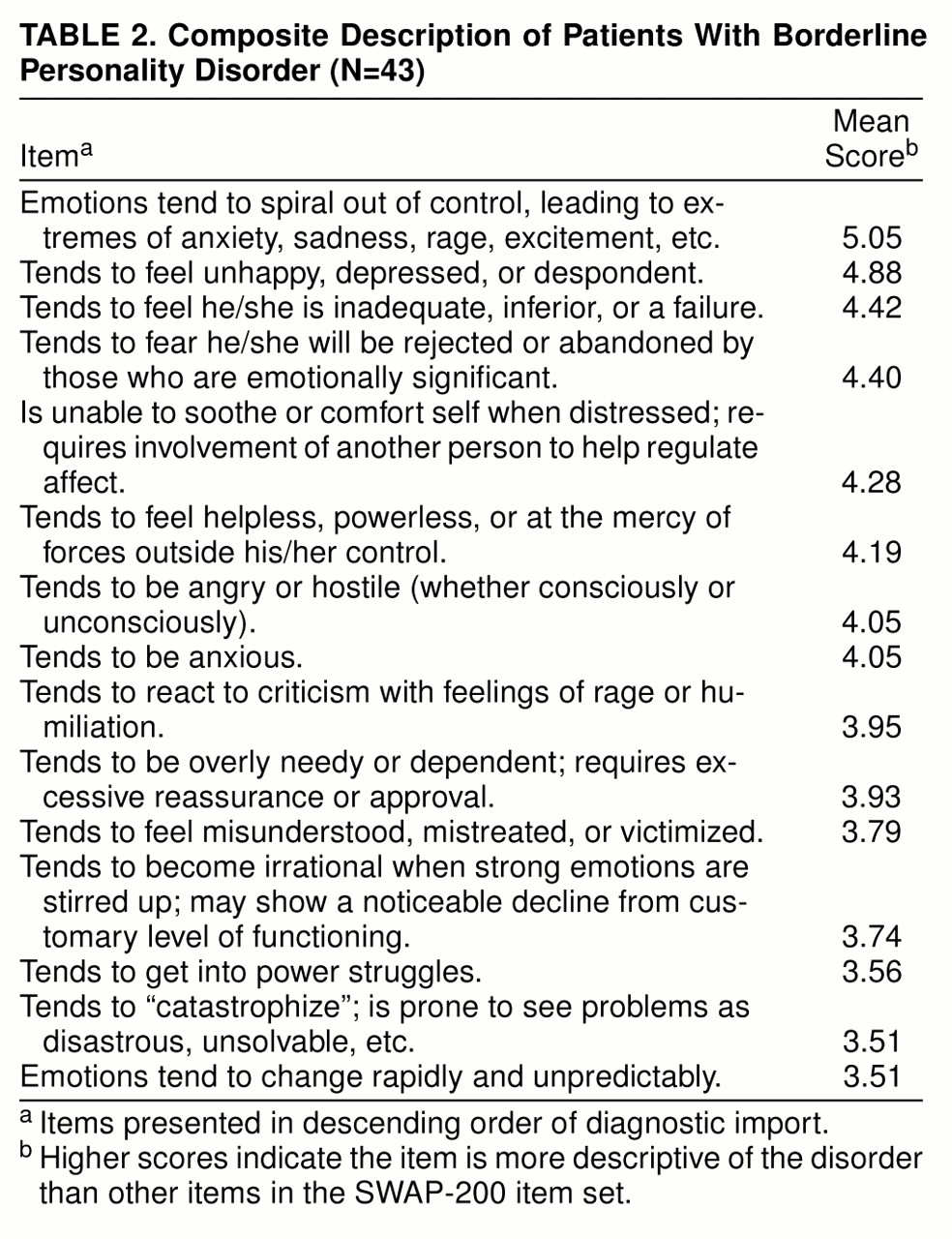

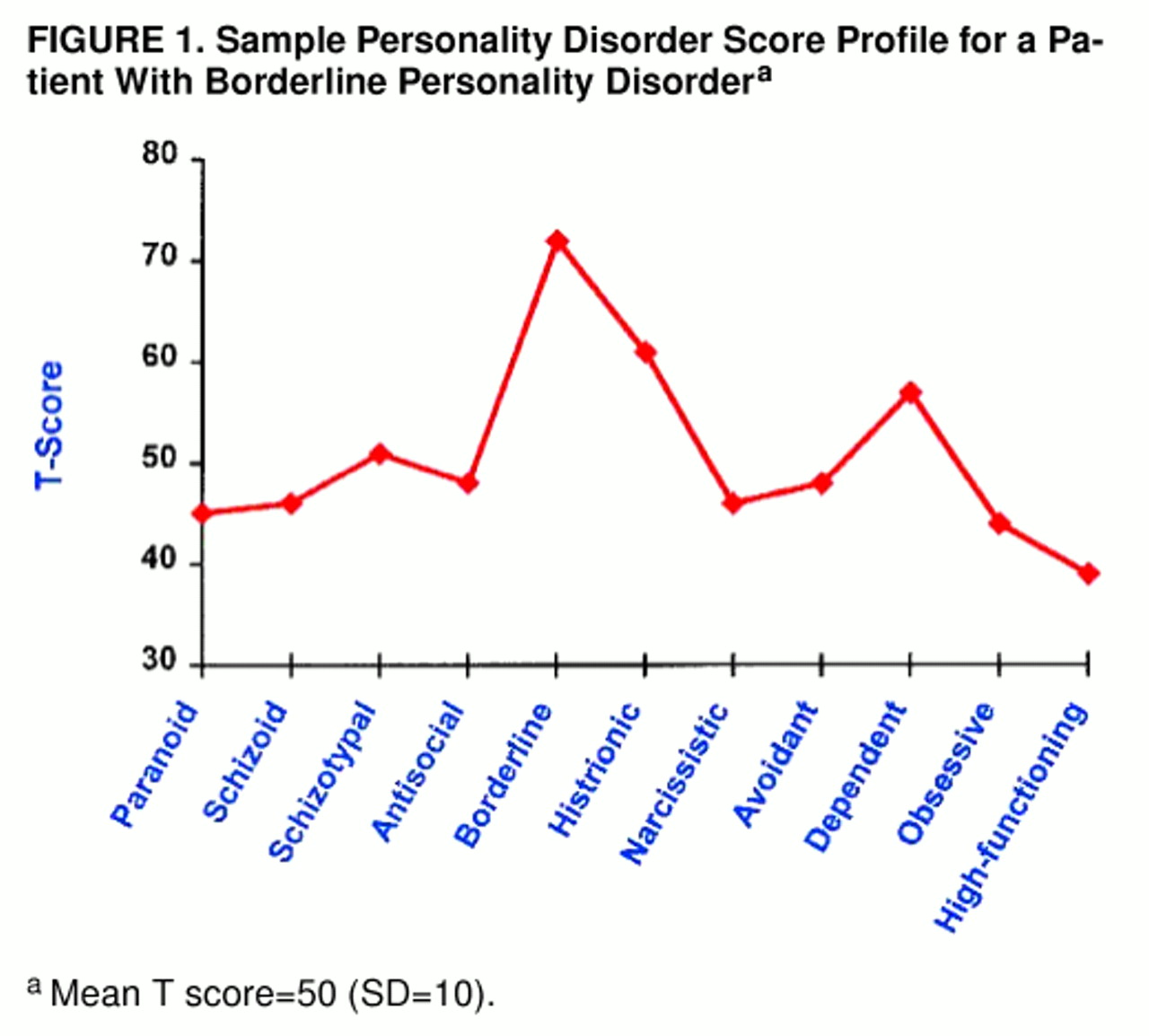

table2 clearly illustrate, clinicians did not simply reproduce the criteria from DSM-IV when describing actual patients. This also tells us that they were describing their patients and not idealized prototypes. For example, the composite description of borderline patients (

table2) bears only a family resemblance to the DSM-IV description. Only three of the nine criteria in axis II for borderline personality disorder were reproduced among the top nine items that empirically described these patients through use of the SWAP-200.

Fourth, although composite descriptions of actual patients correlated highly with diagnostic prototypes of the same disorder, the correlations between any two patients within a given category ranged from slightly negative to as high as 0.70. This tremendous variation also suggests that clinicians who described actual patients were not simply describing their prototypes and ignoring the attributes of the patient in front of them.

Fifth, even when we ignored the diagnoses and simply examined the relation between patients’ SWAP-200 mental health scores and Global Assessment of Functioning Scale ratings, we found a substantial relationship (r=0.48). A similar finding emerged in a pilot study (37) in which patients were described by both their therapists and independent interviewers through use of the SWAP-200. In that study, clinicians were not asked to choose a patient with any particular diagnosis, and the interviewers were blind with respect to which (if any) axis II diagnosis or diagnoses the therapist believed the patient to have. Nevertheless, the SWAP-200 descriptions by the treating clinician and the independent interviewers correlated on average r>0.50.

Another potential criticism focuses on the content of the SWAP-200. Many experts have contributed to axis II, whereas one could argue that the SWAP-200 was devised by a task force of two. Can we show that we have included all the necessary items and have not included items that are unnecessary? No. Nor can the developers of any other measure. What we can say is the following. First, we used methods for scale construction devised and refined by personality psychologists and psychometricians over the past 50 years, e.g., making successive approximations, trying them out, adding new items where gaps existed, eliminating redundant items based on correlation matrices, eliminating items with minimal variance, and so on. Second, we included items from a broad range of sources, including, but not limited to, DSM-III-R and DSM-IV. The item set is far more inclusive than the items that make up the diagnostic criteria for axis II because it includes some version of all of the axis II criteria plus roughly 130 additional items. Third, over 950 clinician-consultants have used the instrument at this point and provided feedback about its comprehensiveness (e.g., we asked the clinicians if there was anything important they wished to express about a patient that was not covered in the SWAP item set).

Fourth, we asked clinicians in the present study to rate how well the SWAP-200 item set allowed them to describe their patients’ personalities through use of a 4-point rating scale (1=I was able to express most of the things I consider important about this patient, 2=I was able to express some of the things I consider important about this patient, 3=I was able to express relatively few of the things I consider important about this patient, 4=I was not able to express the things I consider important about this patient). Most clinicians found the SWAP-200 to be quite comprehensive (as one would hope, since by that time we had already had feedback from almost 200 clinicians on prior versions of the item set): 72.7% gave the SWAP-200 a rating of 1, and 26.7% gave it a rating of 2. Only 0.6% gave it a rating of 3, and none gave it a rating of 4. We do not have comparable ratings for the current DSM criteria, but we plan to collect those data shortly; we doubt they will fare as well despite their far greater familiarity.

Finally, in assessing the utility of a new method, the question one must ask is how it compares to existing methods, not how it compares to a perfect, nonexistent method. Given that we designed this measure to avoid the pitfalls of current instruments, and given that the data for convergent and discriminant validity are strong, we believe it represents a potential advance. As both Livesley (1, pp. v–x) and Tyrer (50) have argued, one of the greatest hindrances to further advances in the field of personality disorders is that research attempting to refine axis II has focused almost exclusively on the taxonomy established by convention in the DSMs, without addressing some of the fundamental conceptual and psychometric problems built into it or attempting to explore alternative methods of classification.

A nosology of personality pathology should provide a language for describing personality that is clinically useful and experience-near. Axis II has come a long way in that direction, but we believe alternative methods may allow us to move at least a small step closer toward a clinically useful, empirically sound method for describing personality and personality disorders.