Shared decision making is a collaborative process between a provider and a consumer of health services that entails sharing information and perspectives and coming to an agreement on a treatment plan (1, 2). This collaborative process is viewed as central to high-quality, patient-centered health care and has been identified as one of the top ten elements to guide the redesign of health care (3). Shared decision making is also a growing area of interest in psychiatry, particularly in the treatment of severe mental illnesses (4–7). However, to study shared decision making and ensure its widespread use, tools are needed to assess whether core elements are present.

We have adapted for use in mental health settings a widely used tool for evaluating shared decision making. The tool is based on the work of Braddock and colleagues (8–13). This coding scheme is comprehensive and takes into account both providers' and consumers' input. Although we considered using the OPTION scale, another reliable approach to coding observed medical visits (14, 15), its scoring is based solely on the provider's behavior. Given the important role consumers should play in the decision-making process (2, 16), an ideal scoring system should also account for active consumer involvement.

The purpose of this study was to assess the applicability to psychiatric visits of the coding system developed by Braddock and colleagues. We tested interrater reliability and documented the frequency with which elements of shared decision making were observed. Given concerns about time constraints as potential barriers for shared decision making in psychiatry (7) and the general medical field (17), we also explored whether visits with high levels of shared decision making would take more time.

Methods

Study design and sample

Our sample included audio-recorded psychiatric visits from three prior studies that took place between September 2007 and April 2009. Participants were individuals with prescribing privileges (psychiatrists and nurse practitioners) and adult consumers in community mental health centers in Indiana or Kansas. Study 1 was an observational study of 40 psychiatric visits (four providers and ten consumers each) that examined how consumers with severe mental illness may be active in treatment sessions (16). Study 2 was a randomized intervention study that examined the impact of the Decision Support Center (18) to improve shared decision making in medication consultations. We used audio-recordings made at baseline, before the intervention (three providers and 98 consumers). Study 3 was an observational study of psychiatric visits with one provider and 48 consumers. The combined sample included eight providers (five psychiatrists and three nurse practitioners) and 186 consumers. Because of recording difficulties, audio-recordings were usable for 170 consumers. All audio-recordings were transcribed, deidentified, and checked for accuracy.

The consumer sample was predominantly Caucasian (N=92, 54%), with a large percentage of African Americans (N=67, 39%) and a small percentage reporting another race (N=11, 7%). Approximately half were male (N=89, 52%), and the mean±SD age was 43.6±11.2 years. Diagnoses included schizophrenia spectrum disorders (N=93, 55%), bipolar disorder (N=37, 22%), major depression (N=25, 15%), and other disorders (N=15, 9%).

Measures

Background characteristics.

In study 1, participants reported demographic characteristics on a survey form before the recorded visit, and providers reported psychiatric diagnoses. In studies 2 and 3, demographic characteristics and diagnoses were obtained from a statewide automated information database.

Shared decision making.

We adapted the Elements of Informed Decision Making Scale (10). This scale is used with recordings or transcripts from medical visits. Trained raters identify whether a clinical decision is present (that is, a verbal commitment to a course of action addressing a clinical issue) and classify the type of decision as basic, intermediate, or complex. Basic decisions are expected to have minimal impact on the consumer, high consensus in the medical community, and clear probable outcomes and to pose little risk (for example, deciding what time to take a medication). Intermediate decisions have moderate impact on the consumer, wide medical consensus, and moderate uncertainty and may pose some risk to the consumer (for example, prescribing an antidepressant). Complex decisions may have extensive impact on the consumer and uncertain outcomes or controversy in the medical literature (or both) and may pose a risk to consumers (for example, prescribing clozapine). For each decision, raters determine the presence of nine elements: consumer's role in decision making, consumer's context (how the problem or decision may affect the consumer's life), the clinical nature of the decision, alternatives, pros and cons, uncertainties or the likelihood of success, assessment of the consumer's understanding, consumer's desire to involve others in the decision, and assessment of the consumer's preferences. Each element is rated as 0 (absent), 1 (partial; brief mention of the topic), or 2 (complete; reciprocal discussion, with both parties commenting). Items are summed for an overall score ranging from 0 to 18. Here we use the term SDM-18 for the sum of items.
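The SDM-18 summation described above can be sketched in a few lines of Python. This is an illustration only, not the study's scoring software: the element identifiers are shorthand invented here for the nine elements, and the example ratings are hypothetical.

```python
# Shorthand names for the nine elements, in the order listed in the text.
# These identifiers are illustrative and do not come from the codebook.
ELEMENTS = (
    "role", "context", "clinical_nature", "alternatives", "pros_cons",
    "uncertainties", "understanding", "involve_others", "preferences",
)


def sdm18(ratings):
    """Sum the nine element ratings (each 0, 1, or 2) into a 0-18 score."""
    missing = [name for name in ELEMENTS if name not in ratings]
    if missing:
        raise ValueError(f"missing element ratings: {missing}")
    for name in ELEMENTS:
        if ratings[name] not in (0, 1, 2):
            raise ValueError(f"{name} must be rated 0, 1, or 2")
    return sum(ratings[name] for name in ELEMENTS)


# Hypothetical ratings for a single decision.
example = {
    "role": 0, "context": 2, "clinical_nature": 2, "alternatives": 2,
    "pros_cons": 1, "uncertainties": 1, "understanding": 0,
    "involve_others": 0, "preferences": 2,
}
print(sdm18(example))  # 10
```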

Braddock and colleagues (8) also described a minimum level of decision making based on the presence of specific elements according to the complexity of a decision. For basic decisions, minimum criteria for shared decision making include the clinical nature of the decision (element 3) and either the consumer's desired role in decision making (element 1) or the consumer's preference (element 9). For intermediate decisions, minimum criteria for shared decision making additionally require presentation of alternatives (element 4), discussion of pros and cons of the alternatives (element 5), and assessment of the consumer's understanding (element 7). Complex decisions require all nine elements. Here we use the term SDM-Min for the minimum criteria for shared decision making. The coding system developed by Braddock and colleagues has been used with high reliability in several studies of decision making with primary care physicians and surgeons (8–10, 12).
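The SDM-Min rules can be written as a small decision function. The sketch below is a hedged illustration rather than the published scoring procedure. In particular, the text does not state whether a partial rating (1) is enough for an element to count as present, so the `present_at` threshold is an assumption that can be changed, and the element identifiers are shorthand invented here.

```python
# Illustrative shorthand for elements 1 through 9, in the order given in the text.
ELEMENTS = (
    "role", "context", "clinical_nature", "alternatives", "pros_cons",
    "uncertainties", "understanding", "involve_others", "preferences",
)


def meets_sdm_min(ratings, complexity, present_at=1):
    """Return True if a decision meets the minimum criteria for its complexity.

    ratings: dict mapping element name to a 0/1/2 rating.
    complexity: "basic", "intermediate", or "complex".
    present_at: lowest rating counted as "present" (an assumption here).
    """
    def has(name):
        return ratings.get(name, 0) >= present_at

    # Basic: clinical nature (element 3) plus role (1) or preference (9).
    basic_ok = has("clinical_nature") and (has("role") or has("preferences"))
    if complexity == "basic":
        return basic_ok
    if complexity == "intermediate":
        # Additionally requires alternatives (4), pros and cons (5),
        # and assessment of understanding (7).
        return (basic_ok and has("alternatives") and has("pros_cons")
                and has("understanding"))
    if complexity == "complex":
        # All nine elements are required.
        return all(has(name) for name in ELEMENTS)
    raise ValueError(f"unknown complexity: {complexity!r}")


# Hypothetical decision: understanding was never assessed.
decision = {
    "role": 0, "context": 2, "clinical_nature": 2, "alternatives": 2,
    "pros_cons": 1, "uncertainties": 1, "understanding": 0,
    "involve_others": 0, "preferences": 2,
}
print(meets_sdm_min(decision, "basic"))         # True
print(meets_sdm_min(decision, "intermediate"))  # False
```

The same hypothetical decision passes as a basic decision but fails as an intermediate one, which mirrors how the minimum bar rises with complexity.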

Adapting the measure for psychiatry.

Initially we developed a codebook from published descriptions (8–10) and later reviewed the codebook developed by Braddock and colleagues for further clarification. An initial team (a psychologist, psychiatrist, health communication expert, and two research assistants) read transcripts of individual sessions, applied codes, and met to develop examples from the transcripts. This was an iterative process of coding by individuals followed by consensus discussions between them. We kept all nine elements, but we added a code to alternatives (element 4) to classify whether nonmedication alternatives were discussed. We believed this to be important because medications can interfere with other activities that consumers undertake to maintain wellness (18). We also added ratings to assess who initiated each element (to better identify consumer activity) and an overall rating to classify the level of agreement about the decision between the provider and consumer (for example, full agreement of both parties, passive or reluctant agreement by the consumer or provider, and disagreement by the consumer or provider). These aspects are not part of the overall shared decision-making score, but they provide descriptive information. Once we achieved reliable agreement, we trained two additional raters, who used the codebook with the initial transcripts. Throughout this process, we modified the manual, adding clarifications and additional examples as appropriate.

Coding procedures

To ensure that raters would be blind to the setting, all transcripts were given a random identification number, and identifying information was removed. Each rater coded the transcripts on his or her own. To maintain consistent coding, we distributed a transcript every two weeks, to be coded by all raters, which was followed by a consensus discussion.

Data analyses

We evaluated interrater agreement among three coders (a psychologist and two research assistants) for 20 randomly selected transcripts. Agreement was assessed by both percentage agreement and Gwet's agreement coefficient (AC1) (19). Although the kappa statistic is often used, its correction for chance agreement is sensitive to skewed category prevalence, and it is difficult to use with multiple raters (19). Gwet's AC1 allows for the extension to multiple raters and multiple category responses and adjusts for chance agreement and misclassification errors. On the basis of other work with AC1 (20), coefficients above .8 can be considered to indicate strong agreement; coefficients in the range of .6 to .8 indicate moderate agreement, and those in the range of .3 to .5 indicate fair agreement. We also present the percentage agreement, because not all possible response categories were necessarily observed in the 20 recordings, which can leave corresponding cell frequencies empty. Thus interpretations of interrater agreement involve both percentage agreement and AC1.
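For readers unfamiliar with AC1, the following sketch computes pairwise percentage agreement and Gwet's AC1 for several raters, using the commonly published multiple-rater form of the coefficient. It is not the software used in this study. The percentage agreement here is the average proportion of agreeing rater pairs per item, which is an assumption about how such a figure might be computed, and the example ratings are hypothetical.

```python
from collections import Counter


def gwet_ac1(items, categories):
    """Return (pairwise percent agreement, AC1) for nominal ratings.

    items: one list of ratings per coded unit, one rating per rater.
    categories: every category the raters could have used, including
        categories that were never observed.
    """
    q = len(categories)
    if q < 2:
        raise ValueError("need at least two categories")
    rated = [item for item in items if len(item) > 0]
    if not rated:
        raise ValueError("no ratings supplied")

    prevalence = {k: 0.0 for k in categories}
    pair_agreement = []
    for item in rated:
        if any(rating not in prevalence for rating in item):
            raise ValueError("rating outside the declared categories")
        r = len(item)
        counts = Counter(item)
        # Average share of raters choosing each category, over all items.
        for k in categories:
            prevalence[k] += counts[k] / r / len(rated)
        # Proportion of agreeing rater pairs, for items with 2+ raters.
        if r >= 2:
            agreeing = sum(c * (c - 1) for c in counts.values())
            pair_agreement.append(agreeing / (r * (r - 1)))
    if not pair_agreement:
        raise ValueError("need at least one item rated by two or more raters")

    p_a = sum(pair_agreement) / len(pair_agreement)
    # Chance agreement under Gwet's model.
    p_e = sum(p * (1 - p) for p in prevalence.values()) / (q - 1)
    return p_a, (p_a - p_e) / (1 - p_e)


# Hypothetical ratings of one element by three raters on four transcripts.
ratings = [[2, 2, 2], [2, 2, 2], [2, 2, 1], [0, 0, 0]]
p_a, ac1 = gwet_ac1(ratings, categories=[0, 1, 2])
print(round(p_a, 3), round(ac1, 3))  # 0.833 0.78
```

Passing the full category list matters: a category that no rater used still enters the chance-agreement term through the number of categories, which is one reason AC1 remains computable when some response categories are unobserved.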

We present descriptive data on SDM-18, SDM-Min, who initiated each element, and the overall agreement between the provider and consumer in the decision. We also examined the relationship between shared decision-making scores and session length, controlling for the level of decision complexity. These analyses involved partial correlation for SDM-18 scores and analysis of covariance for SDM-Min.
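The logic of the partial correlation can be illustrated with the standard first-order formula, which removes the linear association of a third variable from a bivariate correlation. The sketch below is a simplified illustration and not the analysis code for this study: it assumes decision complexity is entered as a single numeric code, the variable names and data are hypothetical, and the analysis of covariance is not shown.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length (2 or more)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_corr(x, y, z):
    """First-order partial correlation of x and y, controlling for z."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical data: visit length in minutes, SDM-18 score, and a
# complexity code (1 = basic, 2 = intermediate, 3 = complex).
minutes = [8, 10, 12, 15, 18, 20, 25, 30]
sdm18_scores = [6, 9, 7, 11, 10, 12, 11, 14]
complexity = [1, 1, 1, 2, 2, 2, 2, 3]

print(pearson(minutes, sdm18_scores))
print(partial_corr(minutes, sdm18_scores, complexity))
```

Comparing the two printed values shows how much of the bivariate association remains once the covariate is held constant.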

All procedures for this study were approved by the institutional review board at Indiana University-Purdue University Indianapolis.

Results

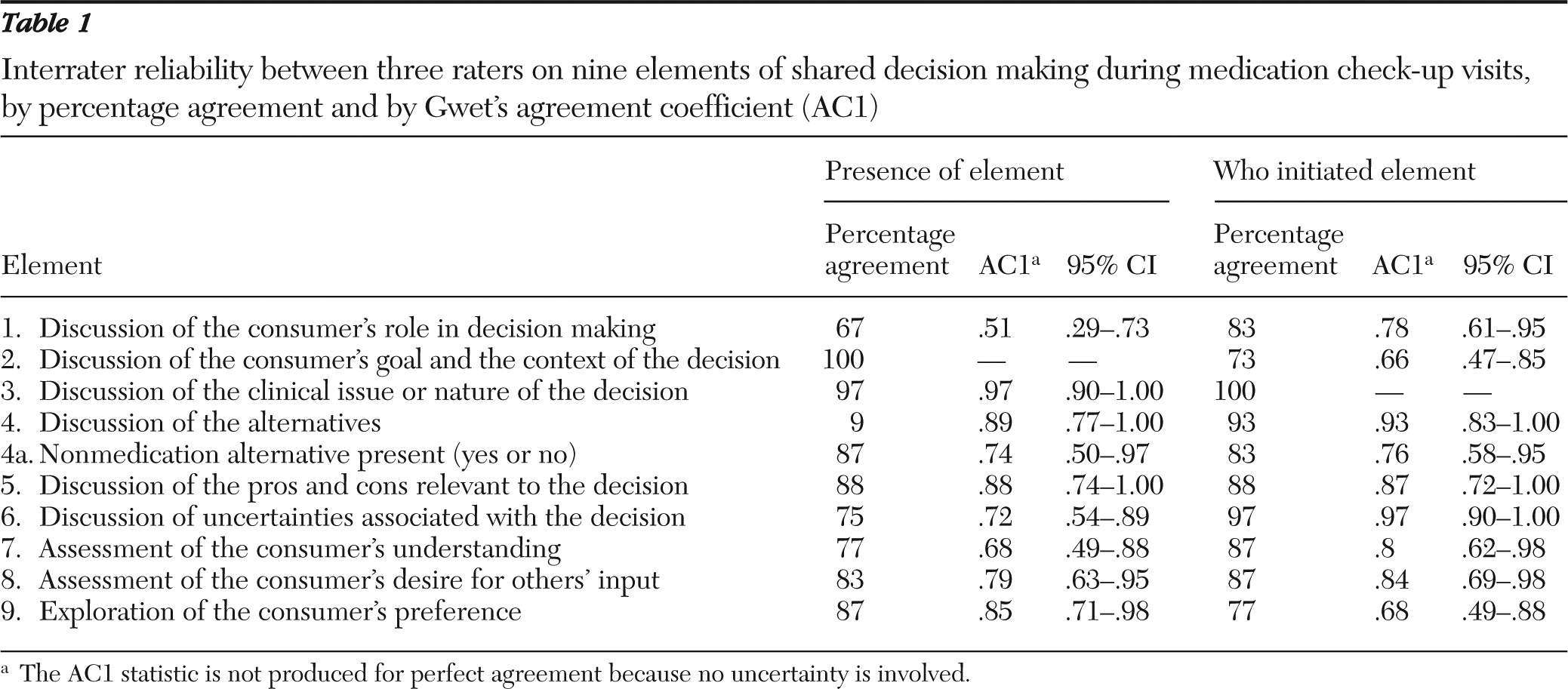

Interrater reliability across the elements of shared decision making was strong (Table 1). Percentage agreement ranged from 67% (discussion of the consumer's role in decision making) to 100% (discussion of the consumer's goal and context). The AC1 statistic was moderate to strong for all elements (AC1=.68–.97) except the consumer's role in decision making (AC1=.51). Agreement on who initiated each element ranged from 73% (consumer's context) to 100% (discussion of the clinical nature of the decision). AC1 statistics were moderate to strong, ranging from .66 to .97. There was 100% agreement among raters on the level of agreement between consumer and provider regarding the decision (that is, full agreement of both, passive or reluctant agreement by either, and disagreement by either).

Overall, 128 of the 170 sessions (75%) contained a clinical decision. Only one visit contained two clearly separate clinical issues that resulted in decisions; the decisions were scored separately, and the first decision was included in these analyses. Types of decisions included stopping a medication (ten sessions, 8%), adding a medication (23 sessions, 18%), changing the time or administration of a previously prescribed medication (23 sessions, 18%), changing the dosage of a medication (35 sessions, 27%), deciding not to change a medication when an alternative was offered (51 sessions, 40%), or deciding on a nonmedication alternative (63 sessions, 49%). More than one of these decisions may have been present in the same discussion. However, we coded the elements of shared decision making on the basis of the overall discussion because of the highly related nature of the decisions (for example, decreasing one medication and adding a new medication to address a symptom). Overall, in terms of the nature of the decisions, 59 were basic (46%), 67 were intermediate (52%), and only two were rated as complex (2%).

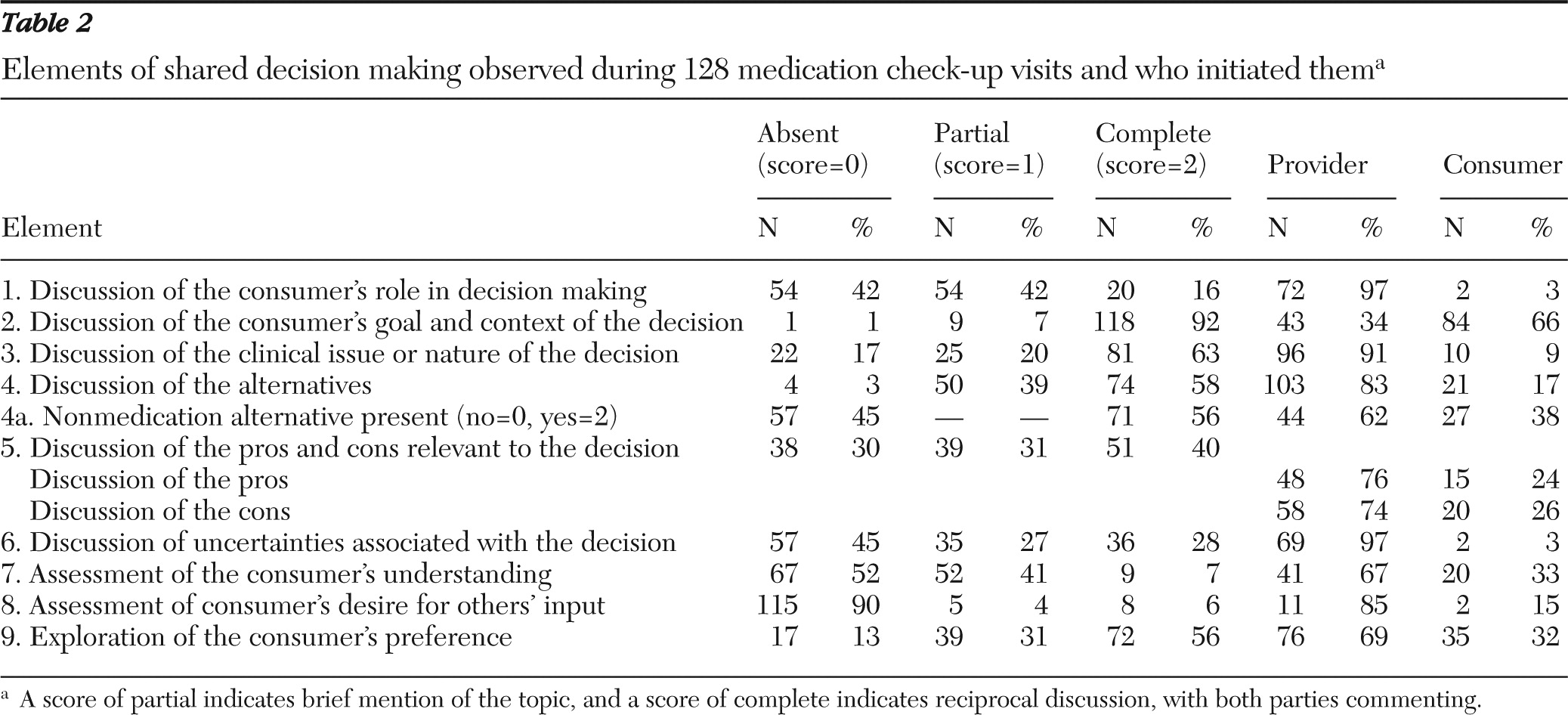

The frequencies of observed elements of shared decision making and who initiated them are shown in Table 2. The element most often scored as having a complete discussion (score of 2) was the consumer's goal and context of the decision, that is, how his or her life was affected by the clinical concern (92%). A score of complete was also frequently given to discussions of the clinical nature of the decision (63%), alternatives to address the concern (58%; notably over half of these also included nonmedication alternatives), and the consumer's preference (56%). Elements that were least often scored as complete were assessment of the consumer's desire for others' input (6%) and assessment of the consumer's understanding (7%). In terms of who initiated the discussion of the elements, the provider was the primary initiator of all elements but one. Consumers most often initiated a discussion about the context of the clinical concern (in 66% of such discussions).

Agreement between provider and consumer was high. A total of 101 decisions (79%) were rated as being made with the provider and consumer in full agreement. The consumer was rated as passively or reluctantly agreeing in 19 decisions (15%), and the provider was rated as passively or reluctantly agreeing in eight decisions (6%). No decisions were observed in which the consumer or provider disagreed with the final decision.

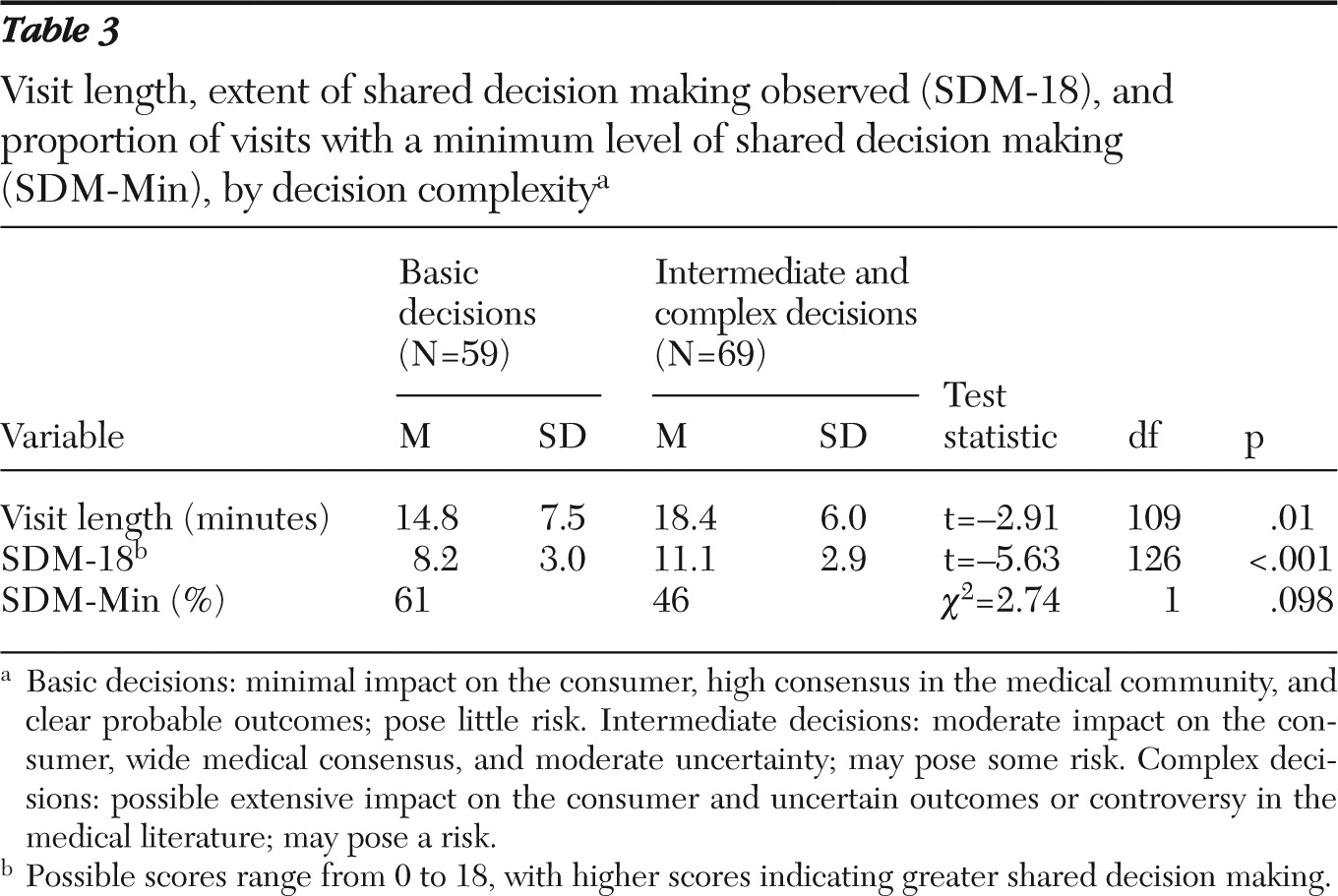

Finally, we examined the length of the visit in relation to shared decision making (Table 3). The mean length of visits was 16.8±7.0 minutes (range three to 36 minutes) and the mean SDM-18 was 9.7±3.3 (range two to 17). The bivariate Pearson correlation between visit length and SDM-18 was low (r=.25, df=124, p<.01). However, after the analysis controlled for the complexity of the decision, the partial correlation between visit length and SDM-18 was no longer significant. Minimum shared decision making was present in 61% of basic decisions and in 46% of intermediate or complex decisions. Analysis of covariance revealed no significant effect of SDM-Min on visit length, when the analysis controlled for the level of complexity of the decision.

Discussion

The rating scale for shared decision making appears to be a reliable tool for assessing the level of shared decision making in psychiatric visits. Our raters achieved moderate to strong levels of agreement on individual elements of shared decision making, who initiated them, and the overall agreement between provider and consumer. In addition, the codebook created from this work, with examples from actual psychiatric visits, could be a useful tool for others seeking to measure shared decision making in psychiatric practice.

In terms of individual items, the element with the lowest reliability—rated fair—was the consumer's role in decision making. This may be a function of our scoring procedures. While scoring, we were aware that either this element or the consumer's preference could count toward minimum levels of decision making for basic or intermediate decisions. In our consensus meetings we often discussed whether particular quotes from a transcript should be included as evidence for a role in decision making or for a preference about the decision. Because either would count toward minimum decision making, we were less concerned about lack of agreement on the item pertaining to the consumer's role. To increase ease of use of the scale and to enhance reliability, we recommend integrating these items—for example, by counting a discussion of role itself as partial credit for an overall item on exploration of the consumer's preference; a “complete” score would also require some discussion of preference in addition to role.

We found that consumers and providers were in full agreement in 79% of the decisions, at least as judged by raters on the basis of statements in the transcripts. We have no way to determine how consumers or providers perceived the decisions. In addition, although overall agreement on the course of treatment appeared strong, just over half of the decisions (53%) met minimum criteria for shared decision making. When examined by decision complexity, 61% of the basic decisions met minimum criteria, compared with only 46% of the intermediate or complex decisions. These rates were similar to those in a sample of orthopedic surgery patients (10) and higher than those in a mixed sample of patients in primary care and surgery settings (8), although they are certainly less than ideal.

Notably, our sample of decisions, as well as the prior samples (8, 10), rarely contained an assessment of the consumer's understanding or a discussion of the consumer's desired role in decision making or the desired role of others in helping with the decision. These appear to be important areas for growth in order to ensure a truly shared and fully informed decision-making process. Other elements that were frequently absent (absent in 30% or more of the decisions) were discussion of uncertainties regarding the decision and discussion of the pros and cons of the decision. The absence of these elements is particularly concerning in the context of intermediate and complex decisions. Tools such as written or electronic decision aids can enhance consumer involvement in and knowledge about treatment decisions (21). In psychiatric settings, electronic decision tools appear particularly promising (22, 23).

We found that the consumer's context was discussed in nearly all decisions, and in 92% of the decisions the discussion was rated as complete, with reciprocal sharing of information. Mental health care providers generally address psychosocial aspects of clinical problems and of treatment. On one hand, the high rate of discussion of the consumer's context is a positive finding because, at least in our sample, one element of shared decision making was being incorporated almost all the time. On the other hand, given the high level of scoring, this item may not meaningfully distinguish among decisions. If this item focused more specifically on consumer goals (rather than on the broader life context), it might be more sensitive to important variations in practice. A shift to explicit attention to consumer goals would be consistent with recovery-oriented principles of care (18, 24). In addition, it may be useful to code for both the broader context and specific consumer goals (for example, the consumer wants to work and is concerned about sedating side effects).

In our adaptation of the coding system for shared decision making, we added a rating to describe who initiated each element as a potential way to identify how active consumers were in the decision-making process. In this sample, consumers appeared to be the primary initiators of discussions regarding life context, whereas providers were the primary initiators of all other elements. The elements in which consumers appeared somewhat active (initiating more than 30% of the discussions) included discussion of nonmedication alternatives, checking the consumer's understanding, and stating preferences. Had we rated the clinician's behavior alone, such as with the OPTION scale (14), we might have missed some of these aspects of shared decision making. In addition, these elements may be fruitful areas on which to focus efforts to increase consumers' partnership with providers, for example, by developing interventions to coach people with mental illness to ask more questions (25).

In the bivariate analysis, shared decision making was correlated with longer visit time. Goss and colleagues (26), who used the OPTION scale, also found that high shared decision making was correlated with longer visits. However, they did not control for the complexity of the decision. In our sample, intermediate and complex decisions took more time than basic decisions. After the analysis accounted for complexity, shared decision making was not related to visit length, a finding consistent with findings of Braddock and colleagues (8, 10). Physicians frequently cite the time needed for shared decision making as an obstacle to adopting this approach (17), and the finding that it was not related to visit length provides further support for the feasibility of implementing shared decision making in psychiatric settings.

We adapted Braddock and colleagues' scale to assess shared decision making in psychiatric visits by using a convenience sample of audio-recorded and transcribed visits in community mental health settings. The sample was small and included providers who were willing to be audio-recorded, some of whom had recently entered a study to enhance shared decision making. Thus the rates of shared decision making observed in this sample may not generalize to other consumers and providers. It is notable, however, that even in this willing sample, rates of shared decision making were still modest. Further work is needed in more diverse samples to fully establish generalizability of the rating system. In addition, although reliability was strong and the scale appears applicable to psychiatric settings, we were not able to test criterion-related validity of the scale—for example, the extent to which scores predicted consumer satisfaction, treatment concordance, or other possible outcomes of a more shared process of decision making. Future studies could also examine predictors and possible moderators of shared decision making in longitudinal designs. For example, how long the consumer has been seeing the same provider and how long the particular problem has been addressed could alter the frequency of the shared decision-making elements observed.

Acknowledgments and disclosures

Funding for this study was provided by grant R24 MH074670 from the National Institute of Mental Health, by the U.S. Department of Veterans Affairs Health Services Research and Development Service (CDA 10-034 and HSP 04-148), and by the Kansas Department of Social and Rehabilitation Services. The funders had no further role in study design; in the collection, analysis, and interpretation of data; in writing the report; and in the decision to submit the manuscript for publication. The authors thank the providers and consumers from the Adult and Child Center, Indianapolis, Indiana; Wyandot Center, Kansas City, Kansas; and Bert Nash Community Mental Health Center, Lawrence, Kansas. They also thank Candice Hudson, B.S., and Sylwia Oles, B.S., for assistance in data preparation, and Clarence Braddock, M.D., for sharing his codebook.

The authors report no competing interests.