The treatment of psychiatric disorders is among the five most costly medical conditions among children, accounting for $8.9 billion, or 9%, of total annual medical spending for children (1). Research has identified a range of evidence-based practices for psychiatric conditions among youths (2–4). An ongoing challenge is the effective implementation of evidence-based practices in real-world settings; by one estimate, it takes 17 years for original research to change practice (5).

Over the past decade, states have led the way in promoting evidence-based practices to improve quality of care and reduce costs. More than 20 states are actively implementing evidence-based psychosocial therapies (6) or medication practices (7). Evidence-based practice implementation can be economically sound; one study reported a 56% rate of return on investment (8). The demand for states to implement evidence-based practices is matched by a demand for practical strategies and feasible measurement systems to track the impact of implementation (9,10).

New York State has been a leader in implementing evidence-based practices within the child public mental health system. Propelled by the September 11, 2001, attacks and the expectation of trauma-related sequelae, New York State established the Child and Adolescent Trauma Treatments and Services Consortium with federal funds (11). The consortium’s success in conducting large-scale training in evidence-based trauma practices led to the establishment of the Evidence Based Treatment Dissemination Center (EBTDC) (12,13). The EBTDC provides in-depth technical assistance to clinicians on specific evidence-based treatments. However, data from the New York State Office of Mental Health (NYS OMH) indicate that providers at only 52% of eligible clinics statewide have participated in any EBTDC initiative, suggesting uneven adoption (14).

To expand training beyond traditional evidence-based treatments, New York State established the Clinic Technical Assistance Center (CTAC) in 2011. CTAC’s goal is to strengthen clinics’ capacity to meet the financial and regulatory challenges resulting from state and federal health care reforms. CTAC therefore focuses on two core content areas: business practices and evidence-informed clinical practices. CTAC offers flexible training and consultation models that range from low-intensity, one-hour lunchtime Webinars to intensive learning collaboratives lasting six to 18 months.

Although substantial resources are invested in technical assistance, clinics’ responses to such training have not been systematically examined. Nationally, strategies for scaling up evidence-based practices have not been guided by empirical knowledge about best practices for implementation (15), and the anticipated benefits of evidence-based practices have not been consistently realized (16). This article expands on an earlier article that described the training adoption behavior of clinics licensed by the NYS OMH to serve youths (17). In the first two years after CTAC was established, 77% of clinics adopted trainings, with a median of five trainings. Business-practice and clinical trainings were accessed equally, and lunchtime Webinars were the most popular modality. To date, no study has examined what factors, if any, are associated with clinics’ adoption of training. Understanding these factors has significant implications for state strategies to promote clinic training participation and to reduce costs associated with training dropout.

Following a theoretical framework of adoption (18), we examined whether multilevel factors, ranging from extraorganizational to client-level factors, predicted clinics’ responses to training. Because predictors of clinic behavior may differ by type of training, predictors of participation in business-practice and clinical trainings were examined separately. Using available administrative data and CTAC training-participation data, this study examined characteristics associated with any participation in business-practice and clinical trainings and with the intensity of participation among clinics that chose to enroll.

Methods

Population

The study population included the 346 clinics licensed by the NYS OMH to treat youths in New York State. However, key fiscal and structural data of interest were not available for clinics affiliated with state-run facilities (N=17), which are structured differently, so these clinics were removed from our analyses. At these facilities, a higher proportion of clients were youth clients (<18 years old) (68.7%±45.2% versus 39.6%±33.4% at other clinics; t=−3.13, df=325, p<.01) and a higher proportion of youth clients had a serious emotional disturbance (76.3%±21.9% versus 35.0%±27.1% at other clinics; t=–4.77, df=306, p<.001). Thus our sample included the 329 outpatient clinics licensed by New York State to treat youths but not operated by the state.

Notably, clinics in New York State can be structured hierarchically, such that one or more clinics are licensed as part of a parent agency. The effect of clinic “nestedness” on each outcome was tested with chi square tests. For every outcome except one (intensity of business-practice adoption), nestedness was considered ignorable, and agency-level variables were assumed to represent all clinics associated with an agency equally. For the remaining outcome, intensity of business-practice adoption, no models were built because, under the model-building criteria described below, none of the variables examined was associated with intensity of business-practice training participation.

Data Sources

Clinic predictor variables.

Variables describing clinic characteristics that could be predictive of training adoption were extracted from four data sources. The U.S. Department of Health and Human Services Area Health Resources Files (AHRF) (ahrf.hrsa.gov) provided county demographic data. The NYS OMH directory of licensed clinics (bi.omh.ny.gov/bridges/index) provided clinic demographic data. The 2011 NYS OMH Consolidated Fiscal Report (CFR) system (www.omh.ny.gov/omhweb/finance/main.htm) provided annual operational capacity and provider-level profiles. The 2011 NYS OMH Patient Characteristics Survey (PCS) (bi.omh.ny.gov/pcs/index) provided “snapshot” data, collected during a one-week period in October 2011, on the client populations served by the 329 clinics. Depending on the variable, 3.64% to 9.42% of clinics were missing CFR data and 3.34% to 9.42% were missing PCS data. Observations with missing data were dropped from the statistical analyses.

Outcome variables.

Attendance data were prospectively tracked by training event (17). Because adoption of an evidence-based practice, defined as the decision to proceed with partial or complete implementation, is a distinct process that precedes implementation (18–20), sending clinicians to be trained in an innovation represents an early adoption behavior by clinics. A clinic was counted as a training adopter if any provider affiliated with the clinic participated in any CTAC training. Among participating clinics, an average of 6.87±5.89 providers per clinic participated in business-practice trainings, and 7.09±5.35 clinicians per clinic participated in clinical trainings. For each outcome variable (business-practice or clinical training), clinics were categorized by any training adoption (yes or no) and, among clinics that participated in at least one training, by intensity of participation (low or high). Low adopters were defined by exclusive participation in hour-long Webinars; high adopters were defined by participation in trainings that required in-person attendance, by attendance at multiple events associated with a training, or both.
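The adopter and intensity definitions above can be written as a small classification rule. The sketch below is illustrative only: the `Attendance` record layout, its field names, and the treatment of every non-in-person event as a one-hour Webinar are assumptions, not a description of how CTAC stored or coded its attendance data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Attendance:
    """One provider's attendance at one training event (hypothetical schema)."""
    clinic_id: str
    training_type: str  # "business" or "clinical"
    training_id: str    # all events belonging to one training share this id
    event_id: str       # a single session, e.g., one Webinar or one in-person day
    in_person: bool     # assumption: False means a one-hour Webinar


def classify_clinic(records, clinic_id, training_type):
    """Return (adopter, intensity) for one clinic and one training type.

    adopter: True if any provider affiliated with the clinic attended any
    training of this type. intensity: None for nonadopters; "high" if the
    clinic attended any in-person event or more than one event of the same
    training; otherwise "low" (exclusive participation in single Webinars).
    """
    rows = [r for r in records
            if r.clinic_id == clinic_id and r.training_type == training_type]
    if not rows:
        return False, None
    # Distinct events attended per training; several providers at the same
    # event are counted once.
    events_per_training = defaultdict(set)
    for r in rows:
        events_per_training[r.training_id].add(r.event_id)
    multi_event = any(len(events) > 1 for events in events_per_training.values())
    any_in_person = any(r.in_person for r in rows)
    return True, ("high" if any_in_person or multi_event else "low")


# Hypothetical attendance records for two clinics.
records = [
    Attendance("A", "clinical", "W1", "W1-1", False),
    Attendance("A", "business", "W2", "W2-1", False),
    Attendance("B", "clinical", "LC1", "LC1-1", True),
    Attendance("B", "clinical", "LC1", "LC1-2", True),
]
print(classify_clinic(records, "A", "clinical"))  # (True, 'low')
print(classify_clinic(records, "B", "clinical"))  # (True, 'high')
print(classify_clinic(records, "B", "business"))  # (False, None)
```

Grouping by event rather than by provider reflects the clinic-level unit of analysis: five providers at one Webinar still make a low adopter.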

Measures of Clinic Variables

A theoretical model of innovation adoption developed by Wisdom and colleagues (18) provided a framework for selecting clinic variables. Selection of the measures described below was guided by a review of constructs associated with four levels of predictors of innovation adoption (19).

Extraorganizational variables.

Region-urbanicity served as a proxy for extraorganizational social networks (regions are administratively linked by OMH) and for training access (distance to the urban locations where trainings are typically held). Clinics were initially categorized by region as downstate (New York City and Long Island) or upstate (Central, Hudson, and Western regions) and as urban or rural on the basis of AHRF county rural-urban continuum codes. Because all downstate counties are urban, these two variables were combined into a single three-category region-urbanicity variable: downstate-urban, upstate-urban, and upstate-rural.
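A minimal sketch of the recoding follows. It assumes the U.S. Department of Agriculture convention in which rural-urban continuum codes (RUCC) 1 through 3 denote metropolitan counties and 4 through 9 nonmetropolitan counties; the article does not state which cutoff was applied, and the function name is hypothetical.

```python
# Region groupings as described in the text.
DOWNSTATE = {"New York City", "Long Island"}
UPSTATE = {"Central", "Hudson", "Western"}


def region_urbanicity(region, rucc):
    """Combine OMH region and a county rural-urban continuum code (RUCC).

    Assumption: RUCC 1-3 (metropolitan counties) are treated as urban and
    RUCC 4-9 as rural.
    """
    if not 1 <= rucc <= 9:
        raise ValueError(f"RUCC must be 1-9, got {rucc}")
    urban = rucc <= 3
    if region in DOWNSTATE:
        # Every downstate county is urban, so no downstate-rural level exists.
        if not urban:
            raise ValueError("unexpected rural downstate county")
        return "downstate-urban"
    if region in UPSTATE:
        return "upstate-urban" if urban else "upstate-rural"
    raise ValueError(f"unknown region: {region!r}")


print(region_urbanicity("Long Island", 1))  # downstate-urban
print(region_urbanicity("Western", 2))      # upstate-urban
print(region_urbanicity("Central", 6))      # upstate-rural
```

Raising an error for a rural downstate county, rather than silently creating a fourth level, makes the "downstate is only urban" premise an explicit check.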

Agency-level variables.

Each NYS OMH–licensed clinic is associated with a parent agency. Relevant agency-level attributes included affiliation, operational size, fiscal efficiency, and operational structure. Affiliation refers to whether an agency is classified as a community-based provider or a hospital; it served as a proxy for interorganizational social networks and operational structures. The agency’s annual clinic-related total expenses, used by NYS OMH as a measure of operational size, served as a proxy for a variety of theory-based variables, such as absorptive capacity and resource availability (19,20). The fiscal efficiency of clinics affiliated with an agency is a derived variable defined by NYS OMH as the annual average dollar gain or loss per unit of standardized service provided; this measure takes into account the type and length of each service encounter. Operational structure, defined as the annual proportion of clinical workforce (providers who bill for direct services) to total workforce, was used as a proxy for staffing structure.
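To make the two derived agency variables concrete, the sketch below computes a gain or loss per standardized unit and a clinical workforce share. The NYS OMH standardization scheme is not described in the article, so the service-type weights, the 30-minute base unit, and the use of FTEs for the workforce ratio are all hypothetical assumptions chosen only for illustration.

```python
# Hypothetical relative weights by service type; the actual NYS OMH
# standardization scheme is not described in the article.
SERVICE_WEIGHTS = {"psychotherapy": 1.0, "assessment": 1.5, "crisis": 2.0}
BASE_MINUTES = 30.0  # hypothetical: minutes of a weight-1.0 service = one unit


def standardized_units(encounters):
    """Sum standardized service units over (service_type, minutes) encounters."""
    return sum(SERVICE_WEIGHTS[kind] * minutes / BASE_MINUTES
               for kind, minutes in encounters)


def gain_per_unit(revenue, expenses, encounters):
    """Average dollar gain (positive) or loss (negative) per standardized unit."""
    units = standardized_units(encounters)
    if units <= 0:
        raise ValueError("no standardized service units")
    return (revenue - expenses) / units


def clinical_share(clinical_fte, total_fte):
    """Operational structure: clinical (billing) workforce as a share of total."""
    return clinical_fte / total_fte


encounters = [("psychotherapy", 45), ("assessment", 60), ("crisis", 30)]
print(standardized_units(encounters))            # 6.5
print(gain_per_unit(900.0, 1200.0, encounters))  # about -46.15 (a loss)
print(clinical_share(34.05, 50.0))               # about 0.681
```

Weighting by both type and duration is what lets agencies with different service mixes be compared on one per-unit scale.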

Clinic-provider profile variables.

The CFR data provided two measures of clinic operational structure: clinical capacity, measured by the annual total clinical full-time equivalents (FTEs), and outsourced services, measured by the proportion of all clinical staff whose services were contracted. Clinical capacity and degree of outsourcing are hypothesized to influence clinic investment in workforce training (strategic fit) (21).

Clinic-client profile variables.

The PCS provided information that served as proxies for innovation values fit (22). These variables included the proportion of youth clients; the proportion of Medicaid or Medicaid managed care (MMC) visits, estimated by the proportion of youth visits billed to Medicaid or MMC; and the proportion of youth clients with a serious emotional disturbance, a measure of clinical severity among youths served.

Statistical Analyses

Analyses were performed by using Stata, version 11.2 (23). Descriptive statistics were used to characterize the clinic population according to the two outcome variables of interest: participation in business-practice trainings and in clinical trainings. For each outcome variable, we examined clinic characteristics according to training adoption status (yes or no) and, among adopters, intensity of participation (low or high). Chi square and t tests were used to compare categorical and continuous variables, respectively. Distributions of four continuous predictor variables (total expenses, gain or loss per unit of standardized service, total clinical FTEs, and proportion of clinical staff contracted out) were notably skewed; natural log-transformed versions of these variables were therefore also tested for bivariate associations with the outcomes.
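The bivariate comparisons above can be sketched in plain Python. This is not the Stata procedure used in the study; it shows Student's pooled-variance t statistic (consistent with reported df values equal to n−2) and the Pearson chi square statistic for an r×c table. The article does not say how zero or negative values (for example, losses per unit or clinics with no contracted staff) were handled before log transformation, so the `shift` parameter is an assumption.

```python
import math
from statistics import mean, variance


def pooled_t(x, y):
    """Student's two-sample t statistic (pooled variance); returns (t, df).

    The sign follows mean(x) - mean(y), so passing nonparticipants as x gives
    a negative t when participants score higher.
    """
    nx, ny = len(x), len(y)
    df = nx + ny - 2
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / df
    t = (mean(x) - mean(y)) / math.sqrt(pooled_var * (1 / nx + 1 / ny))
    return t, df


def pearson_chi2(table):
    """Pearson chi square statistic and df for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, r_tot in enumerate(row_totals):
        for j, c_tot in enumerate(col_totals):
            expected = r_tot * c_tot / n
            stat += (table[i][j] - expected) ** 2 / expected
    return stat, (len(row_totals) - 1) * (len(col_totals) - 1)


def log_transform(values, shift=0.0):
    """Natural log of (value + shift); the shift is an assumed fix for zeros."""
    if any(v + shift <= 0 for v in values):
        raise ValueError("values + shift must be positive")
    return [math.log(v + shift) for v in values]


print(pooled_t([1, 2, 3], [4, 5, 6]))      # (-3.674..., 4)
print(pearson_chi2([[10, 20], [20, 10]]))  # (6.666..., 1)
print(log_transform([0.0, 0.1, 0.5], shift=0.01))
```

For the three-category region-urbanicity variable, a 3×2 table of region by adoption status gives df=2, matching the df reported for that comparison.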

Multiple logistic regression models, with results reported as adjusted odds ratios (AORs), were used to assess the independent effects of predictor variables on clinic training participation. The same model-building procedure was used for four logistic regression models predicting any participation (yes or no) and intensity of participation (low or high) in each type of training (business-practice and clinical).
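Because all numeric predictors were standardized before modeling, each AOR refers to a one-standard-deviation change. The sketch below shows the z-scoring and why a coefficient estimated on the raw scale converts to the per-SD odds ratio by multiplying by the SD: in a logistic model, replacing x with (x − mean)/sd is a linear reparameterization, with the intercept absorbing the mean shift. The raw coefficient and SD in the usage line are hypothetical numbers, not values from the study.

```python
import math
from statistics import mean, stdev


def standardize(values):
    """z-scores using the sample mean and sample standard deviation."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def aor_per_sd(beta_raw, sd):
    """Odds ratio for a 1-SD increase, given a log-odds coefficient per raw unit.

    Entering z = (x - mean) / sd instead of x rescales the coefficient to
    beta_raw * sd, so exp(beta_raw * sd) is the per-SD adjusted odds ratio,
    holding the other covariates fixed.
    """
    return math.exp(beta_raw * sd)


print(standardize([2.0, 4.0, 6.0]))  # [-1.0, 0.0, 1.0]
print(aor_per_sd(-0.1, 4.3))         # about 0.65 (hypothetical inputs)
```

Standardizing puts predictors measured in dollars, FTEs, and proportions on a common scale, which is what allows the AORs to be compared across variables.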

The independent variables were entered into the models in the following ordered blocks: extraorganizational variables, agency-level variables, clinic-provider profile variables, and clinic-client profile variables. Akaike information criterion (AIC) values were compared upon entry of each block to determine whether the information gained compensated for the increased model complexity and potential overfitting. Once the main effects for each model were determined, we explored interactions between the following categorical and continuous variables, provided that both variables in a pair were included among the main effects: region-urbanicity by total expenses, gain or loss per service unit, total clinical FTEs, and proportion of contracted clinical staff; and clinic affiliation by total expenses, gain or loss per service unit, and clinical capacity. Because none of the hospital-affiliated clinics outsourced any clinical services, interactions between clinic affiliation and proportion of services outsourced could not be examined. Once main effects and significant interaction terms were determined, reduced models, created by removing statistically nonsignificant variables, were tested to determine whether they yielded more parsimonious models with improved fit. For ease of interpretation and comparison across variables, all numeric variables were standardized before entry into the models. Accordingly, each AOR is interpreted as the multiplicative change in the odds of training adoption associated with a one-standard-deviation increase in the respective predictor. All hypothesis tests were two-sided, with alpha set at .05. The fit of each final, reduced model was assessed with the Hosmer-Lemeshow goodness-of-fit test using eight groups of predicted values. Specification errors were tested by checking for correlations between the independent variables and the model error term.
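The two model-selection and fit checks described above, blockwise AIC comparison and the Hosmer-Lemeshow test with eight groups, can be sketched as follows. This is an illustration, not the Stata implementation: groups are formed by splitting the probability-sorted observations into near-equal chunks without special handling of ties, so results may differ slightly from packages that group on quantile cut points. The p-value uses the closed-form chi square tail that holds only for even degrees of freedom (eight groups give df=6). The toy data in the usage lines are invented.

```python
import math


def aic(log_likelihood, n_params):
    """Akaike information criterion: 2k - 2 ln L (lower is better)."""
    return 2 * n_params - 2 * log_likelihood


def keep_block(ll_without, k_without, ll_with, k_with):
    """Keep a newly entered block of predictors only if it lowers the AIC."""
    return aic(ll_with, k_with) < aic(ll_without, k_without)


def chi2_sf_even_df(x, df):
    """Upper-tail chi square probability; this closed form holds for even df only."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half, term, total = x / 2.0, 1.0, 1.0
    for k in range(1, df // 2):
        term *= half / k  # accumulates (x/2)^k / k!
        total += term
    return math.exp(-half) * total


def hosmer_lemeshow(y, p, groups=8):
    """Return (statistic, df, p-value) for 0/1 outcomes y and predicted p."""
    if len(y) != len(p) or len(y) < groups:
        raise ValueError("need equal-length y and p with at least `groups` rows")
    pairs = sorted(zip(p, y))
    n = len(pairs)
    stat = 0.0
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        size = len(chunk)
        expected = sum(pi for pi, _ in chunk)  # expected adopters
        observed = sum(yi for _, yi in chunk)  # observed adopters
        stat += (observed - expected) ** 2 / expected
        # Nonadopter cell: its deviation equals the adopter deviation with sign flipped.
        stat += (observed - expected) ** 2 / (size - expected)
    df = groups - 2
    return stat, df, chi2_sf_even_df(stat, df)


print(aic(-100.0, 3))                     # 206.0
print(keep_block(-120.0, 2, -115.0, 4))   # True: AIC falls from 244.0 to 238.0
y = [0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1]
p = [0.10 + 0.05 * i for i in range(16)]  # invented predicted probabilities
print(hosmer_lemeshow(y, p))
```

A nonsignificant Hosmer-Lemeshow p-value is read as no evidence of poor calibration; it does not by itself show that the model fits well.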

Results

A majority of clinics were located in downstate, urban areas (59%) and were affiliated with community-based agencies (82%) (Table 1). The agencies with which the clinics were affiliated had average clinic-related total expenses of $6.04 million. Clinics operated at an average loss of $48.60 per unit of service provided, with a clinical workforce that represented 68.1% of the clinic’s overall workforce. Clinic-provider profiles indicated an average of 12.8 clinical FTEs on staff, and on average 8.3% of clinical services were provided by staff through outside contracts. Clinic-client profiles indicated that, on average, youths constituted 39.7% of clients; of these youth clients, 49.8% were covered by Medicaid or MMC and 35.0% were classified as having a serious emotional disturbance.

Table 2 reports clinic population characteristics by uptake of business-practice trainings. Compared with nonparticipants, clinics that participated in any business-practice trainings were disproportionately located upstate (χ²=16.65, df=2, p<.001), were affiliated with agencies that had lower total clinic-related expenses (t=2.21, df=315, p<.05), had more total clinical staff FTEs (t=−2.78, df=296, p<.01), had a smaller proportion of contracted clinical staff (t=3.09, df=296, p<.01), and served a larger proportion of youth clients (t=−2.31, df=311, p<.05) and of youth clients with a serious emotional disturbance (t=−2.36, df=296, p<.05). Among adopters of business-practice trainings, none of the variables examined were associated with intensity of training participation.

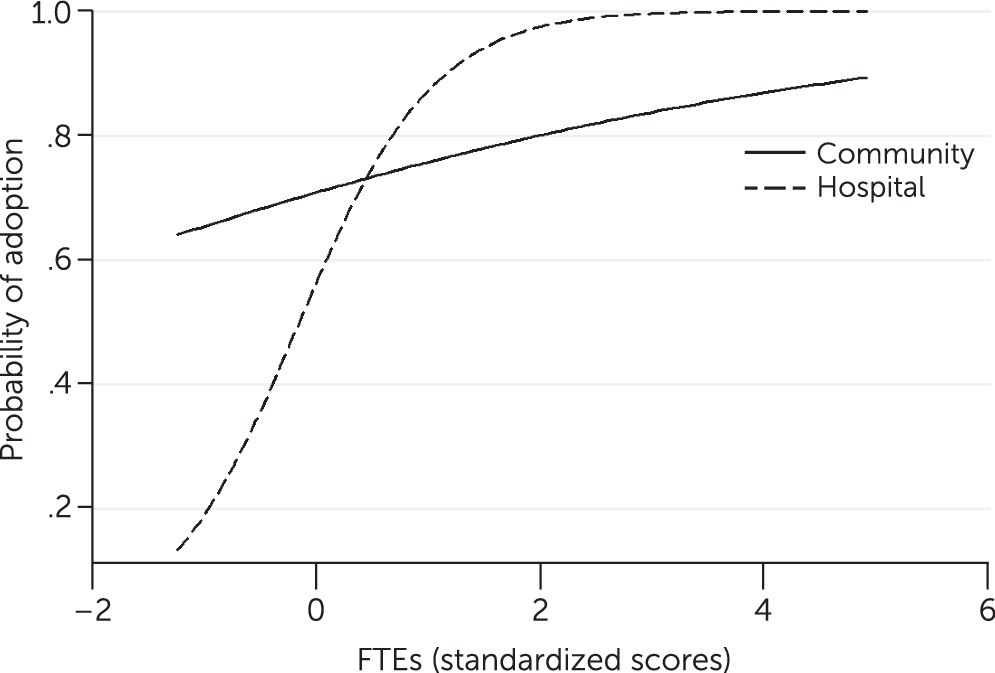

Table 2 also displays results from reduced logistic regression models of any business-practice training adoption, in which the log odds of adoption are modeled as a linear function of the clinic characteristics. Clinics associated with agencies that had larger total expenses (AOR=.65, 95% confidence interval [CI]=.50–.84) or greater gains per unit of service provided (AOR=.62, CI=.41–.94), and clinics that outsourced more clinical care (AOR=.60, CI=.46–.80), had lower odds of participating in any business-practice trainings. A statistically significant interaction between clinic affiliation and total clinical FTEs was observed for uptake of any business-practice trainings (AOR=4.89, CI=1.31–18.28). Among clinics with fewer total clinical FTEs, hospital-affiliated clinics were less likely than community-based clinics to participate in any business-practice trainings; among clinics with more total clinical FTEs, hospital-affiliated clinics were more likely to participate (Figure 1).
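One way to read the interaction is to write out the log-odds model. The equations below are a sketch that assumes the interaction was entered as the product of a hospital-affiliation indicator $H$ (1 for hospital affiliated, 0 for community based) and standardized clinical FTEs $F$, with the remaining covariates collected in $\mathbf{x}$; this coding is an assumption, and because the main-effect coefficient $\beta_H$ is not reported in the text, the crossover point cannot be computed here.

$$
\operatorname{logit}\Pr(\text{adopt}=1) = \beta_0 + \beta_H H + \beta_F F + \beta_{HF}\,H F + \boldsymbol{\gamma}^{\top}\mathbf{x}
$$

$$
\mathrm{OR}_{\text{hospital vs. community}}(F=f) = \exp\left(\beta_H + \beta_{HF}\,f\right), \qquad e^{\beta_{HF}} = 4.89
$$

Under this coding, each 1-SD increase in clinical FTEs multiplies the hospital-versus-community odds ratio by about 4.89, and the ratio equals 1 at $f^{*} = -\beta_H/\beta_{HF}$. Because $\beta_{HF} = \ln 4.89 > 0$, hospital-affiliated clinics have lower odds below $f^{*}$ and higher odds above it, which is the pattern described for Figure 1.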

Table 3 reports clinic population characteristics by clinical-training participation. Compared with nonparticipants, clinics that participated in any clinical trainings were more likely to be affiliated with agencies that had a larger proportion of clinical workforce (t=−2.08, df=316, p<.05). These clinics also had more total clinical staff FTEs (t=−2.84, df=296, p<.01) and served more youth clients (t=−4.93, df=311, p<.001) and more youth clients who were covered by Medicaid or MMC (t=−3.62, df=316, p<.001). Among participating clinics, only one variable was found to be associated with intensity of clinical-training uptake; on average, the percentage of youth clients was higher at clinics with high versus low participation (54.6%±3.7% versus 39.9%±3.0%, t=−3.14, df=203, p<.01).

Reduced logistic regression models described the log odds of any clinical-training participation as a linear function of the clinic characteristics (Table 3). Clinics with more clinical FTEs (AOR=1.52, CI=1.11–2.08) and a larger proportion of youth clients (AOR=1.90, CI=1.42–2.55) had higher odds of attending any clinical trainings. In the model predicting adoption intensity, clinics with a larger proportion of youth clients (OR=1.54, CI=1.17–2.05) had greater odds of high uptake (data not shown).
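Because the models are linear in the log odds, a per-SD AOR translates directly into a percentage change in the odds, and the odds ratio for k SDs is the AOR raised to the power k. The arithmetic below applies this to AORs reported in the text for main-effect terms only (an AOR for a variable involved in an interaction cannot be read this way). The percentages describe changes in odds, not in probabilities of participation.

```python
def pct_change_in_odds(aor, sd_increase=1.0):
    """Percentage change in the odds for an increase of `sd_increase` SDs.

    With a model linear in the log odds, the odds ratio for k SDs is aor ** k.
    """
    return (aor ** sd_increase - 1.0) * 100.0


# Per-SD AORs reported in the text (main-effect terms only).
reported = {
    "agency total expenses (business-practice model)": 0.65,
    "proportion of clinical staff contracted (business-practice model)": 0.60,
    "total clinical FTEs (clinical model)": 1.52,
    "proportion of youth clients (clinical model)": 1.90,
}
for name, aor in reported.items():
    print(f"{name}: {pct_change_in_odds(aor):+.0f}% odds per SD")
# -35%, -40%, +52%, +90%
print(f"youth clients, +2 SD: {pct_change_in_odds(1.90, 2):+.0f}% odds")  # +261%
```

The two-SD line shows why effects compound multiplicatively: 1.90 × 1.90 = 3.61, not 1 + 2 × 0.90.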

Discussion

This study is, to our knowledge, the first to examine training participation data collected prospectively from a population of state outpatient mental health clinics serving youths. It is also, to our knowledge, the first attempt to test a multivariate model of adoption behavior involving the multilevel characteristics proposed in current theoretical implementation models (18,20,24,25). Our results indicate that available administrative data can be valuable to states in predicting which clinics will participate in training. However, these data were much less useful for predicting the level of clinic participation. Administrative data are primarily structural and lack more nuanced information (for example, provider attitudes) and local information (for example, details about organizational culture or climate) that may influence clinic participation levels.

Various levels of clinic characteristics were found to be associated with adoption of different types of training. Business-practice training adoption was associated with agency (affiliation, size, and efficiency) and clinic-provider profile (outsourced clinical services) characteristics. By contrast, adoption of clinical trainings was associated with clinic-provider profile (clinical capacity) and clinic-client profile (proportion of youth clients) characteristics. These findings suggest that clinics make decisions on the basis of relevant agency, provider (strategic fit [21]), and client (innovation values fit [22]) factors.

We speculate that business-practice trainings were less attractive to clinics affiliated with larger agencies, which likely have in-house capacity for fiscal management or need different business-practice supports than clinics affiliated with smaller agencies. Clinics associated with more efficiently run agencies and clinics that outsource more clinical services were also less likely to participate in business-practice trainings. Such agencies and clinics likely have less financial exposure and thus may have less need for training in business practices. Our data suggest that the influence of clinical capacity on participation may depend on agency affiliation. Among clinics with larger clinical capacity, hospital-affiliated clinics were more likely to participate in business-practice trainings; by contrast, among clinics with smaller clinical capacity, community-based clinics were more likely to do so. These findings suggest that operational structures or financial incentives may differ across types of settings according to clinical capacity, thus influencing business-practice adoption behavior.

Clinical trainings were more likely to be adopted by clinics with larger clinical capacity, which likely reflects clinics’ capacity to release clinical staff for training. Given that the trainings focused primarily on youth-related issues, it seems likely that clinics with a higher proportion of youth clients would consider the trainings more relevant and would be more likely to participate. In-depth interviews with a stratified random sample of these clinics are underway to test our hypotheses.

Implications for State Systems

Our findings suggest that state efforts to provide incentives or target training should pay attention to specific clinic characteristics available through administrative data. Policy makers should understand the factors that influence the types and amount of training that clinics are willing or able to adopt. To help clinics move beyond training adoption to successful implementation, targeting training to address both clinic needs and clinics’ readiness for change is critical (21). Health care reform and new accountability standards make it imperative for states to understand and leverage factors that influence clinic decisions to embrace, implement, and sustain quality improvement efforts.

Limitations

When used as the only data source, administrative data have limited value in predicting adoption of training. It may be helpful to collect and examine data on other theoretical factors hypothesized to drive adoption behavior before large-scale rollouts of training initiatives. Information about providers and organizations, such as provider attitudes, organizational context, and baseline competencies in business practices and clinical knowledge, must be integrated with administrative data to help policy makers determine appropriate levels of technical support for successful quality improvement efforts.

In addition, clinics may adopt training not associated with CTAC; we were unable to account for the relationship between adoption of CTAC trainings and adoption of other trainings. Differences across states in the relationships among clinics, counties, and state agencies may limit the generalizability of our findings beyond New York State. Nonetheless, our findings provide support for existing theoretical adoption and implementation models that explicate multilevel influences.

Conclusions

This study represents a first effort to understand multilevel characteristics that influence training adoption within a state outpatient child mental health system. Although this study focused on baseline adoption behaviors (training participation), how adoption translates into actual implementation of effective practices to improve care quality and youth outcomes is a critical question. The answer is likely to inform development of optimal models for rollout of state training in service practices.