In response to the World Trade Center attacks on September 11, 2001, the New York Office of Mental Health (NYOMH) received funds from the Federal Emergency Management Agency (FEMA) to implement Project Liberty, a crisis counseling program aimed at alleviating psychological distress and helping people regain predisaster functioning. Project Liberty offered short-term outreach, crisis counseling, and public education services to affected individuals and groups at no cost to them. Project Liberty counselors also made referrals to longer-term mental health services when necessary (1). The project provided face-to-face disaster-related services through outreach in community settings, such as homes, businesses, and schools. The assumptions underlying this broad-based response strategy are that most people's stress reactions, although personally disturbing, are normal responses to a traumatic event and will be short-term in duration (2).

Crisis counseling services sufficiently aided a majority of individuals in recovering from distress related to September 11, 2001 (3). Nevertheless, a sizeable group experienced persistent and disabling trauma symptoms for a prolonged period. Encounter data indicated that about 40 percent of all service recipients showed evidence of reactions suggestive of posttraumatic stress disorder, depression, or both and that the proportion of persons who entered services with such reactions was quite consistent over the 27 months of program operation (4). This finding is consistent with previous reports indicating that a substantial proportion of persons may exhibit posttraumatic symptoms that persist after they experience a disaster (2).

Using this information, NYOMH initiated additional counseling services for individuals more severely affected by September 11, 2001. The enhanced services program included a screening methodology, use of evidence-informed counseling interventions, and training and technical assistance for a select group of clinicians. Approved by FEMA and the Center for Mental Health Services, the enhanced services program represented the first time that crisis counseling services under a FEMA grant had been expanded to include clinical services. Separate interventions were developed for adults and children who exhibited severe or persistent trauma symptoms (1).

To ensure the use of evidence-informed interventions for traumatic (5), depressive (6), and grief (7) symptomatology, NYOMH contracted with the National Center for Post-Traumatic Stress Disorder (NCPTSD) and the Pittsburgh Bereavement and Grief Program to develop two interventions lasting 10 to 12 sessions and to provide training, training manuals, and ongoing technical assistance to the clinicians selected to provide the interventions. Additionally, NCPTSD and NYOMH collaborated on the creation of a tool to make referrals to enhanced services (8). Providing training in an intervention, however, did not guarantee its systematic implementation; therefore, it was important to evaluate the degree to which interventions were delivered with fidelity to the manual.

Although the gold standard for evaluating fidelity of treatment intervention relies upon multiple sources of assessment (9), there is increasing emphasis on users' reports (10). Essock and colleagues (11) have suggested that individuals' reports about the presence of core components of empirically supported treatments may provide cost-effective evaluations of treatment fidelity. This study examined whether participants who received services at sites where all clinicians received training in one of the enhanced services interventions (the Brief Intervention for Continuing Postdisaster Distress, which used cognitive-behavioral techniques) were more likely to report receiving core components of that intervention than participants at sites where only some clinicians received the training. This site-level comparison was used because the data set does not permit direct linkage between the recipient and the clinician. However, data indicating the site where service was received were available for each recipient. Therefore, recipients from sites where all clinicians were trained in the intervention were contrasted with those from sites where only some staff were trained. Although training and the manual for the grief intervention developed by Shear and colleagues were also provided as part of enhanced services implementation, too few recipients whose clinicians received the training were included in the evaluation survey to assess fidelity to this intervention.

Methods

Following a protocol approved by Mount Sinai School of Medicine's and NYOMH's institutional review boards, Project Liberty clinicians invited English- and Spanish-speaking recipients of enhanced services aged 18 or older to participate in an evaluation of Project Liberty services via telephone interview. Because clinicians who worked with the Fire Department of New York City (FDNY) did not offer the intervention that was developed by NCPTSD as part of its enhanced service program, interviews with FDNY service recipients were not included in the analyses reported here.

Beginning in October 2003, all enhanced services recipients (excluding those served by the FDNY) were invited to participate in a telephone interview. Clinicians providing enhanced services were instructed to distribute a permission-to-contact form to all adults using services. Recruitment continued until mid-December 2003, when enrollment in enhanced services ended. Approximately 225 individuals were served during this period. Of the 102 recipients who signed the permission-to-contact forms, 74 (73 percent) completed the first telephone interview and 60 (59 percent) completed follow-up interviews an average of seven weeks later. Because we were interested in how service recipients characterized the intervention after some exposure to treatment, we focused our analyses on responses during the second interview. The analyses examined the specific items developed in collaboration with the NCPTSD to capture core features of the intervention.
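The participation figures above can be checked with simple arithmetic. The sketch below uses only the counts reported in this paragraph; the variable and function names are illustrative, and because the number served is stated only as approximately 225, the two rates computed against it are approximate as well.

```python
# Counts taken from the recruitment paragraph above.
served = 225            # approximate number served during recruitment
signed = 102            # signed the permission-to-contact form
first_interview = 74    # completed the first telephone interview
second_interview = 60   # completed the follow-up interview


def pct(part, whole):
    """Percentage of `whole` represented by `part`, rounded to a whole number."""
    return round(100 * part / whole)


rates = {
    "first interview, of those who signed": pct(first_interview, signed),
    "second interview, of those who signed": pct(second_interview, signed),
    "signed, of approx. number served": pct(signed, served),
    "second interview, of approx. number served": pct(second_interview, served),
}

for label, value in rates.items():
    print(f"{label}: {value} percent")
```

The first two rates reproduce the percentages given in the text; the last two express the same counts relative to the approximate number of people served.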

The 60 participants who completed second interviews had a mean±SD age of 46±10.5 years. Nineteen (32 percent) were male, 44 (73 percent) were Caucasian, four (7 percent) were African American, one (2 percent) was Asian American, nine (15 percent) were from another racial or ethnic group or more than one race, and two (3 percent) declined to provide racial identity. Comparison of demographic characteristics as well as site and event exposures showed no significant differences between those who completed both interviews and those who were lost to follow-up.

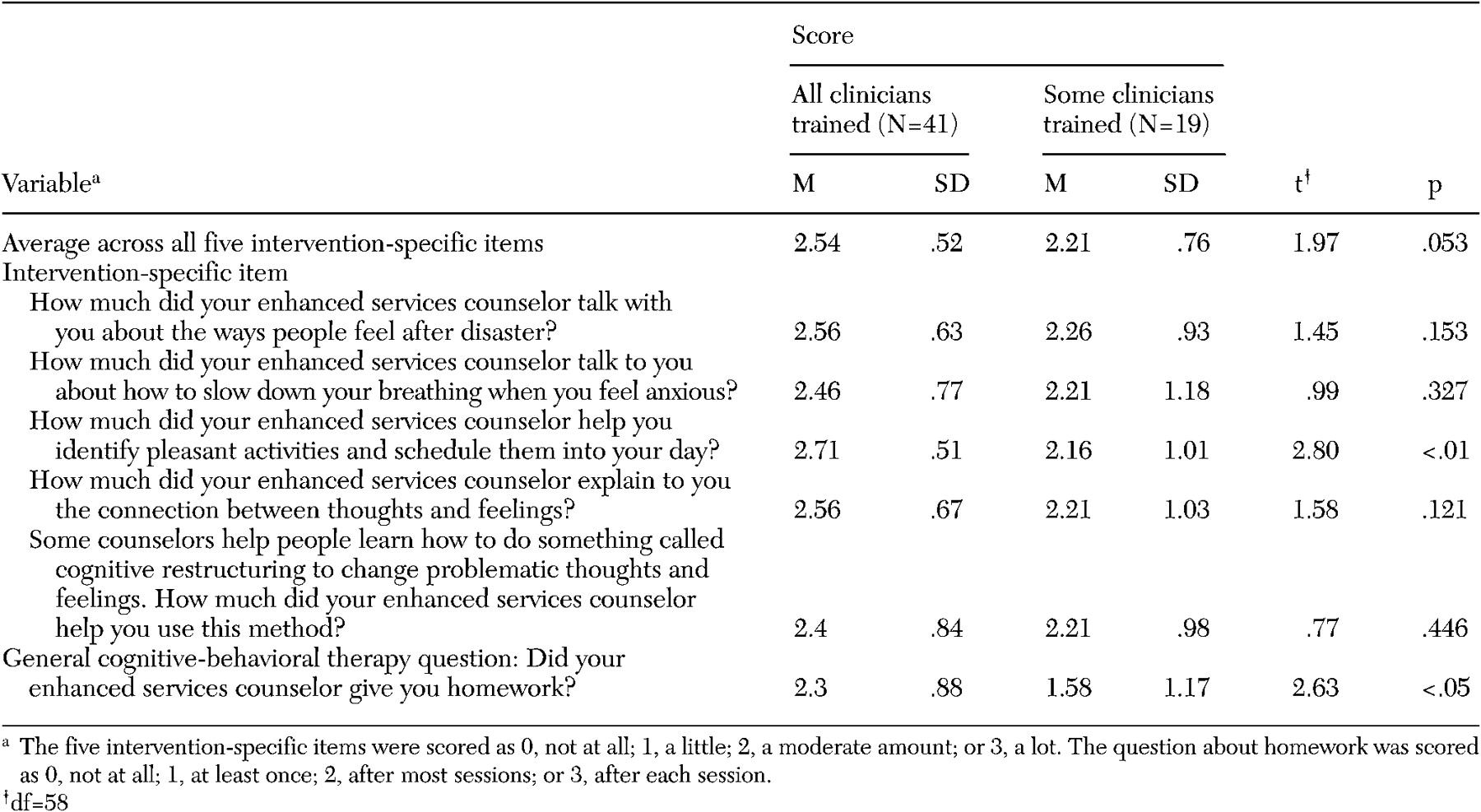

Participants were grouped according to whether they received services at one of the nine sites where all clinicians completed training (41 participants) or at one of the six sites where only some clinicians (25 to 50 percent of clinicians) completed such training (19 participants). Respondents rated how often their clinicians provided each of five components of the intervention using Likert scales (0, not at all; 1, a little; 2, a moderate amount; or 3, a lot). A sixth question asked whether their clinicians assigned homework; this question was scored as 0, not at all; 1, at least once; 2, after most sessions; or 3, after each session. We created summary scores by computing means across the five intervention-specific items. We applied independent-samples t tests to examine group differences on outcome measures.
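The scoring and comparison described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the function names are hypothetical, the ratings are invented for demonstration and are not study data, and the pooled-variance (Student) form of the t test is an assumption, since the text does not state which variant was applied.

```python
from math import sqrt
from statistics import mean, variance


def summary_score(item_ratings):
    """Mean of one respondent's five intervention-specific ratings (each 0 to 3)."""
    if len(item_ratings) != 5:
        raise ValueError("expected five item ratings")
    if any(r not in (0, 1, 2, 3) for r in item_ratings):
        raise ValueError("ratings must be 0, 1, 2, or 3")
    return sum(item_ratings) / len(item_ratings)


def independent_t(group_a, group_b):
    """Independent-samples t statistic (pooled variance) and degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / std_err, df


# Invented ratings for illustration only; NOT data from the study.
all_trained = [[3, 2, 3, 3, 2], [2, 2, 3, 1, 2], [3, 3, 3, 2, 3], [2, 3, 2, 2, 2]]
some_trained = [[1, 2, 1, 0, 2], [2, 1, 2, 1, 1], [1, 1, 2, 2, 0]]

scores_all = [summary_score(r) for r in all_trained]
scores_some = [summary_score(r) for r in some_trained]

t_stat, df = independent_t(scores_all, scores_some)
print(f"t({df}) = {t_stat:.2f}")
```

The sketch stops at the t statistic and its degrees of freedom. Obtaining a p value requires the t distribution, for example through `scipy.stats.ttest_ind`, which is left out here to keep the example free of third-party dependencies.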

Results

Across all five intervention-specific items, individuals who received services from sites where all clinicians were trained reported that their clinicians, on average, applied techniques specific to the intervention more often than those who received services from sites where only some clinicians had been trained (Table 1). Compared with respondents who received services where only some clinicians had received training, those who received services where all clinicians had received training were significantly more likely to report that clinicians identified pleasant activities that they could do every day (p<.01) and were more likely to report that clinicians provided all five key components of the intervention, although these results did not reach statistical significance (p=.053). Additionally, individuals who received services from sites where all clinicians were trained reported that their clinicians gave them homework significantly more often than those who received services from sites where only some clinicians were trained (p<.05) (Table 1).

Discussion

We have presented a novel evaluation strategy to determine clinician adherence to a planned short-term intervention. Our results are informative with regard to the intervention we studied and as a possible new methodological approach to quality assurance monitoring. However, there are many limitations to this study, and results should be considered preliminary. For example, only a small percentage of service recipients agreed to participate in the interviews, so our results may not be generalizable. The sample was small, so failure to detect differences between the two groups may reflect a lack of power rather than a lack of between-group differences. The second interviews were conducted at different points during the intervention, which could have influenced ratings. A person who had attended only a few sessions may have rated items differently than one who had completed the intervention. Also, training may have been confounded with clinician skill and with attendance at the training sessions. Perhaps administrators from the sites where all clinicians were trained were more supportive of using the manualized interventions and thus had developed a more skilled provider group. Thus the differences we observed should be considered suggestions of training effectiveness, not confirmation. Nevertheless, as the first (to our knowledge) systematic effort to evaluate treatment fidelity in disaster mental health services, we developed a method that was able to provide reliable distinctions between sites.

There is considerable interest in developing ways to optimize community response to disasters. In the aftermath of an unprecedented terrorist disaster, FEMA and NYOMH marshaled resources to provide an enhanced disaster mental health services program using a multimodal intervention strategy and evaluated this response.

The subset of findings from the evaluation study presented here, although preliminary, provides useful information related to intervention quality. The data suggest that clinicians generally implemented core features of the program. The generally high ratings on fidelity items may reflect the selection process for enhanced services providers. To compete for a contract, agencies were required to provide a description of the brief, manualized intervention they would implement if selected. Most planned to provide cognitive-behavioral interventions that were similar to the intervention developed for Project Liberty. Nevertheless, our simple evaluation strategy identified significant training effects. This suggests that such a strategy has good sensitivity.

This study also suggests that evaluations of treatment fidelity can be conducted in a cost-efficient manner. Using individuals' responses to questions as simple as, "Did your enhanced services counselor give you homework?" may help administrators determine whether training produces desired changes in practice, determine the extent of treatment fidelity across sites, identify compliance outliers, develop remedial procedures, and make other programmatic adjustments.

Conclusions

Assessing clinicians' adherence to planned intervention elements is an important component of evaluating therapeutic outcomes. The evaluation method used here is a cost-effective approach to rating such intervention fidelity. At the very least, service recipients' assessments can be used as a first step in identifying sites to be the focus of more time-intensive training, supervision, and monitoring.

Acknowledgments

This evaluation was funded by grant FEMA-1391-DR-NY (titled "Project Liberty: Crisis Counseling Assistance and Training Program") to New York State from the Federal Emergency Management Agency. The Center for Mental Health Services of the Substance Abuse and Mental Health Services Administration administered the grant. This study was also funded by grants MH-60783, MH-30915, and MH-52247 from the National Institute of Mental Health to the University of Pittsburgh. The authors thank the Department of Veterans Affairs National Center for Post-Traumatic Stress Disorder and Schulman, Ronca, and Bucuvalas, Inc.