There is some evidence that when service users interview research participants, the information they acquire may be different from that acquired by persons perceived as professional researchers. In particular, two studies that used a qualitative design found that research participants who were service users were more critical of services when they were speaking to someone they knew was a peer (1, 2). For example, Clark and colleagues (1) found no difference between professional researchers and peer researchers when participants were asked to complete a closed-question satisfaction schedule; however, when participants were given the opportunity to make open-ended comments, they made more negative comments when interviewed by a peer researcher. The authors speculated that participants felt less anxious about criticizing services when speaking with a peer researcher. Participants may hesitate to criticize services when speaking to a researcher who is not a peer because they may fear that the service will be withdrawn or that there will be repercussions.

However, there is also evidence that when structured scales are devised by researchers who are service users, participants report less satisfaction with treatments than when they respond to a scale designed by clinical researchers (3). Thus a question arises about whether the involvement of researchers who are service users affects not only qualitative data but also quantitative data. If it does, some prior research may have underestimated participants' negative views of services and treatments.

In the United Kingdom, the most common term for people who use psychiatric services and who also conduct research is “service user researcher.” Thus we have used that term throughout this report. In other countries the term “consumer researcher” is often used to refer to the same group of people.

This study compared data collected by service user researchers and non-service user researchers to determine whether the results differed. The specific focus was on perceived coercion among patients compulsorily admitted to a psychiatric hospital. We hypothesized that the inpatients would express a higher degree of perceived coercion to researchers who were service users.

Methods

The study was approved by the National Health Service Multi-Centre Research Ethics Committee in England. As part of a study of involuntary hospital admission, three sites contributed data to a study of researchers' disclosure of their service user status (4, 5). Three categories of researcher status were used: user researcher disclosed status, user researcher did not disclose status, and researcher not a service user. User researchers disclosed their status for 20 consecutive interviews and then did not disclose their status for the next 20, and so forth. The dependent variables were two measures of perceived coercion that were collected within one week of the index compulsory admission to a psychiatric inpatient bed. The key comparison was between the levels of coercion assessed by the three different categories of researcher.
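The alternating disclosure protocol described above can be sketched in a few lines. This is only an illustration, not the study's own procedure: the function name `disclosure_schedule` and its parameters are hypothetical, and it assumes each user researcher starts with a disclosing block, as the description implies.

```python
def disclosure_schedule(n_interviews, block_size=20):
    """Return one boolean per interview: True if the user researcher
    discloses service user status, False otherwise.

    Assumption: the first block of `block_size` interviews is a
    disclosing block, and blocks alternate after that.
    """
    return [(i // block_size) % 2 == 0 for i in range(n_interviews)]


# Hypothetical example: the first 60 interviews of one user researcher.
schedule = disclosure_schedule(60)
disclosed_count = sum(schedule)  # interviews 1-20 and 41-60 are disclosing
```

Under strict adherence, roughly half of each user researcher's interviews would be disclosing interviews.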

The MacArthur Perceived Coercion Scale (MPCS) is a short self-report questionnaire consisting of five yes-no items (6). The items assess perceived control and freedom in the admission process. Possible scores on the MPCS range from 1 to 5, with higher scores indicating greater perceived coercion. The coercion ladder (CL) is an adaptation of a scale originally designed as a global quality-of-life measure (7). The CL is a visual-analogue scale with ten “rungs,” from 1, minimum coercion, to 10, maximum. The respondent marks the rung that best represents his or her experience. A standard script is read to each participant by the researcher to explain the instrument and how to use it. This form of interview allows participants to consider issues in a more global fashion.

The research sites were regional providers of mental health services in England. The sites differed in the services that they provided, and site was thus used as a proxy for several variables that may affect levels of reported coercion other than researcher variables or that may interact with them. Patients who used certain services, particularly psychiatric intensive care units (PICUs), may have perceived a greater level of coercion than patients who used acute wards. PICUs are locked wards that are used exclusively for patients when they are most disturbed. The research sites differed in the number of participants who were admitted to PICUs, which may have affected the results.

Clinical and sociodemographic characteristics included age, diagnosis, and race or ethnicity. Clinical status was measured by the Brief Psychiatric Rating Scale (BPRS) (8).

Administrators responsible for detained patients in each service identified all compulsory admissions between July 2003 and July 2005. All detained patients were approached. Staff handed information sheets to potential participants to explain the study. If the potential participants agreed to take part, they were put in touch with a researcher who acquired their informed consent and then carried out the full interview. For this study, 548 participants were interviewed at baseline, which represented 50% of all compulsorily admitted patients during the study period.

Each of the three sites had two researchers, a service user researcher and a non-service user researcher. During the period examined in this report, the same six individuals remained at the three sites. All were recruited on the basis of the same job description, except for the requirement of personal use of mental health services, which included having had a hospital admission for psychiatric reasons. The requirement for a past hospitalization ensured shared experience between participants and service user researchers. We hypothesized that such shared experience would by itself result in a different style of interview, which would be augmented by specific disclosure. When the service user researchers disclosed their status, they told participants that they also had experienced psychiatric hospitalization.

All researchers underwent joint intensive training in the administration of all interviews. Reliability checks were carried out for the BPRS, which produced group agreement of 95%.

The numbers in the three subgroups—user disclosed status (93 interviews), user did not disclose status (149 interviews), and nonuser (306 interviews)—were sufficient to detect moderate standardized effect sizes between any pair of groups with at least 90% power (α=.05).
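The power statement can be checked approximately with a short calculation. The sketch below is not the authors' computation: it assumes that a "moderate" standardized effect means Cohen's d=0.5, and it uses a normal approximation to a two-sided two-group comparison rather than the analysis of variance used in the study. The function name `two_group_power` is hypothetical.

```python
from itertools import combinations
from math import sqrt
from statistics import NormalDist


def two_group_power(n1, n2, d=0.5, alpha=0.05):
    """Approximate power of a two-sided, two-sample z test.

    Assumptions: equal variances, standardized effect size d
    (d=0.5 is taken here as "moderate"), normal approximation.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    noncentrality = d * sqrt(n1 * n2 / (n1 + n2))
    # Probability of rejecting in either tail when the true effect is d.
    return z.cdf(noncentrality - z_crit) + z.cdf(-noncentrality - z_crit)


# Subgroup sizes as reported in the text.
group_sizes = {
    "user disclosed": 93,
    "user did not disclose": 149,
    "nonuser": 306,
}

powers = {
    (a, b): two_group_power(group_sizes[a], group_sizes[b])
    for a, b in combinations(group_sizes, 2)
}
```

Under these assumptions every pairwise comparison exceeds 90% power, with the smallest pair (93 versus 149 interviews) being the limiting case.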

All analyses were carried out with SPSS, version 15, and Stata, version 10. In the first step, two-way analyses of variance were conducted in which the dependent measure was one of the two reported coercion measures. For the MPCS we calculated the mean number of responses on this five-item scale for each participant. Potential explanatory factors were disclosure levels (user disclosed or did not disclose) and site (to indicate possible differences in patients or services). Interactions between site and disclosure or user-nonuser status were initially tested in these models. If disclosure significantly affected the assessment of coercion, then further analysis of researcher status used the three categories (user disclosed, user did not disclose, and nonuser). If disclosure was not significant, then the data were combined for the service user researchers. These subsequent analyses used the same dependent and independent measures.

The data were also further analyzed to detect confounding or masking by patient characteristics. These analyses used regression models that included the relevant dependent coercion measure, demographic characteristics, clinical symptoms, and receipt of services on a PICU.

Results

The total sample from the three sites included 548 patients, although not all participants provided complete data. Sites differed in recruitment of patients; site 3 recruited more participants largely for administrative reasons and because of its service configuration.

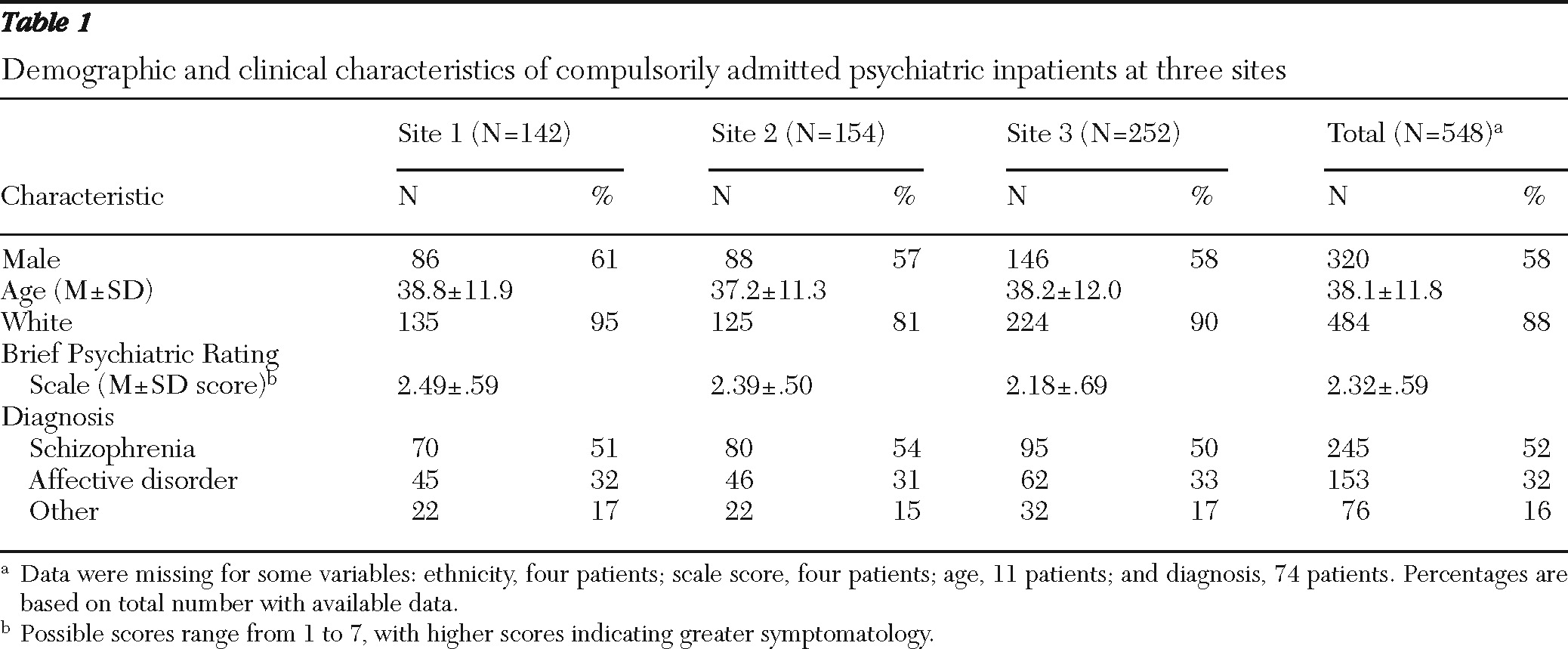

Characteristics of the sample are shown in Table 1. No statistically significant differences were found between sites in any of the clinical or demographic variables examined.

The service user researchers interviewed 242 patients (44%), and the nonuser researchers interviewed 306 patients (56%). The service users disclosed their status to 93 of the 242 patients (38%). No significant interactions were found between disclosure and site; in a model without the interaction term, no significant difference for disclosed user status or site emerged. In the analysis of researcher status, no significant interaction was found, nor was a main effect of researcher status or site detected. However, the range of observed MPCS scores was very narrow (4.2–4.6), which would have reduced discriminatory power. This was to be expected because the data were collected at the time of involuntary admission, when perceptions of coercion were likely to be high.

Results for the CL were similar to those for the MPCS. No evidence was found of an interaction between site and disclosure, nor were main effects for disclosure or site found. For the 93 interviews in which the service user researcher disclosed his or her status, the mean±SD CL score was 6.46±3.43. For the 149 interviews in which the user researcher did not disclose, the score was 6.57±3.22.

Disclosure was then collapsed across categories for user researchers, and the two coercion measures were compared for service users and nonusers. In these analyses a significant interaction of researcher status and site was found for the CL (F=18.8, df=2 and 490, p<.001). Post hoc Bonferroni tests showed that there was no difference between sites 1 and 2 but that these sites were both significantly different from site 3 (mean difference between site 1 and site 3=1.31, 95% confidence interval [CI]=.62–2.02; mean difference between site 2 and site 3=1.21, CI=.52–1.90).

The CL data were further investigated in relation to user and nonuser status in a regression analysis in which all putative moderating variables were entered, including the status-site interaction term. The variables included participant characteristics (age, gender, and BPRS score) and a service characteristic (recruitment from a PICU). The interaction term remained significant (F=8.38, df=2 and 375, p=.001) even when the other terms had been entered. Only gender and symptoms were significantly related to coercion in the model; women reported more perceived coercion on average than men (difference=.75, CI=.06–1.40), and participants with more symptoms and more severe symptoms reported more coercion (a 1-point increase in symptoms increased reported coercion on average by .76 points, CI=.19–1.33).

However, at two sites service user researchers and non-service user researchers worked together in the same hospitals, but at the third site they did not. This was a rural site with three different hospitals, and thus it was more efficient for them to work in separate services. The differences in reported coercion by user status were observed at this site, where the user researcher and the nonuser researcher rarely worked together. Compared with researchers at the other two sites, the nonuser researcher at the third site, who mostly worked alone, elicited the lowest CL scores (6.53±3.30 for all 242 interviews by user researchers, 7.27±3.27 for the 158 interviews by nonuser researchers who worked with user researchers, and 4.14±3.15 for the 96 interviews by the nonuser researcher who worked alone).
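The reported CL figures can be cross-checked with simple arithmetic. The sketch below is a reader's consistency check, not part of the original analysis. It assumes that the 242, 158, and 96 interviews are those with CL data and that the interaction model was a full two-by-three factorial (researcher status by site). The helper `pooled_mean` is a hypothetical name.

```python
def pooled_mean(groups):
    """Sample-size-weighted mean of (n, mean) pairs."""
    total_n = sum(n for n, _ in groups)
    return sum(n * mean for n, mean in groups) / total_n


# (n, mean CL score) for user researchers, as reported in the text.
user_groups = [(93, 6.46), (149, 6.57)]  # disclosed, did not disclose
user_mean = pooled_mean(user_groups)

# Interviews with CL data: user researchers, nonuser researchers who
# worked with user researchers, and the nonuser researcher who worked alone.
cl_interviews = 242 + 158 + 96

# Assumption: full factorial model with 2 researcher statuses x 3 sites,
# so the residual degrees of freedom are N minus the number of cells.
cells = 2 * 3
residual_df = cl_interviews - cells
```

Under these assumptions the pooled user researcher mean rounds to the reported 6.53, and the residual degrees of freedom come to 490, which agrees with the reported F test (df=2 and 490).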

Discussion

This study provided little evidence that researchers who were service users and who disclosed their status to participants elicited reports of higher levels of perceived coercion than user researchers who did not disclose. The single significant finding was the interaction between researcher status and site on CL scores, with the non-service user researcher at site 3 eliciting the lowest scores of all the researchers (that is, the least perceived coercion). This finding is open to two interpretations. First, the nonuser researcher at this site could be seen as an outlier, and the finding may reflect her personal interviewing style. Alternatively, the fact that the user and nonuser researchers at site 3 encountered each other infrequently may explain the finding. Researchers at the other sites had the opportunity to discuss their work regularly and modify it in the light of these discussions, a factor that could have led them to reach consensus on interview administration. At site 3, the two researchers generally met only for fortnightly supervision sessions.

Two other factors may have had a bearing on the results. First, researchers received intensive training throughout the study, and one of the trainers was a service user researcher. This may have mitigated any effects of researcher status. Second, although the number of patients was adequate, the number of researchers was small, especially when subgroups are considered. A particular individual could therefore have had a large influence. A stronger design, with a greater number of researchers, is needed to disentangle these effects.

Finally, it should be pointed out that disclosure was not an easy process for the service user researchers involved in the study. In the main study (4), one research site was excluded because the service user researchers felt that they could not disclose their status, and the user researcher at another site did not follow the protocol and only disclosed on half the occasions required.

Conclusions

This study provided little support for previous findings of differences in information elicited by peer researchers compared with professional researchers. However, the results reported here cannot be generalized in terms of measurement of other outcomes or use of other scales or to studies with qualitative designs. Further work is necessary to determine the conditions under which these differences pertain and when they do not.

Acknowledgments and disclosures

This study was funded by grant 0230072 from the Department of Health, United Kingdom, and supported by Mental Health Network England. Dr. Rose and Dr. Wykes received support from the National Institute for Health Research Specialist Biomedical Centre in Mental Health at the South London and Maudsley Trust and Institute of Psychiatry, London. The funding agencies had no role in the study design; in the collection, analysis, and interpretation of data; in the writing of this report; or in the decision to submit the manuscript for publication.

The authors report no competing interests.