Capacity to Consent to Clinical Research

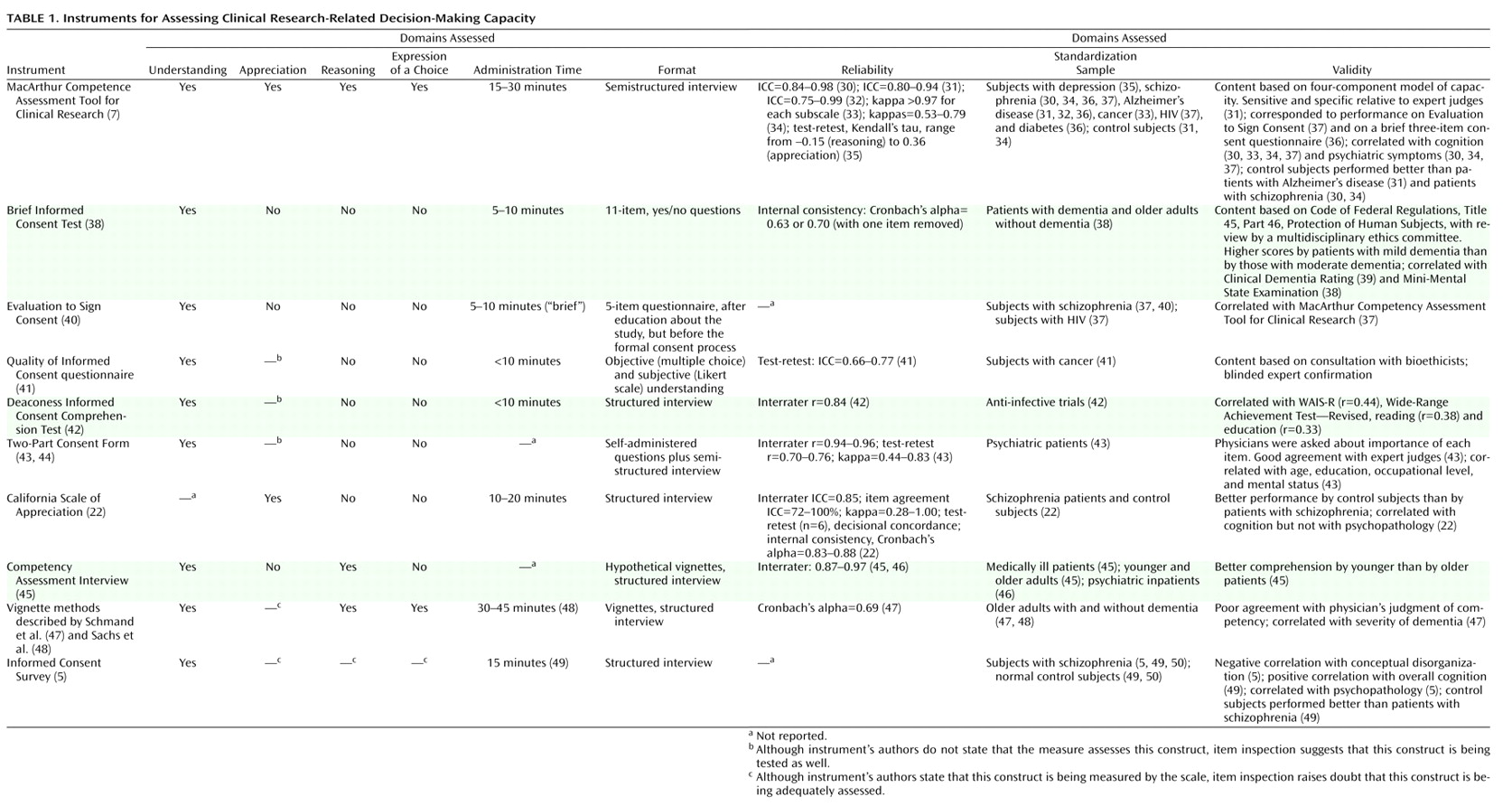

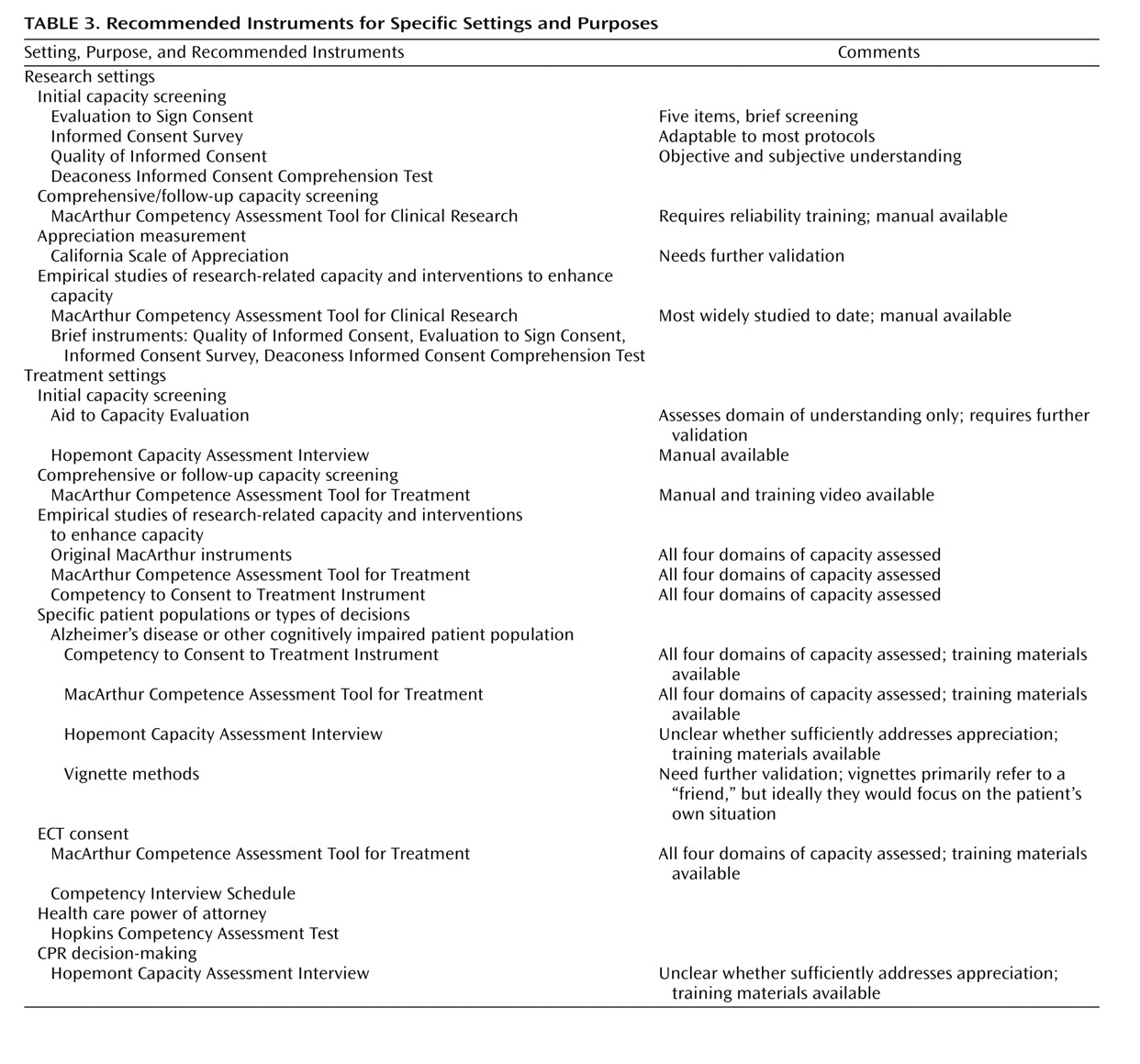

Two of the 10 instruments that focus on capacity to consent to a clinical research protocol, the MacArthur Competence Assessment Tool for Clinical Research and the Informed Consent Survey, are intended to measure all four capacity domains, although whether the latter instrument adequately assesses appreciation and reasoning is debatable. Measures of understanding are included in nine instruments (5, 7, 38, 40–45, 47), five of which assess only understanding (39, 40–44). The California Scale of Appreciation focuses solely on appreciation (although understanding is likely also tapped), and the Competency Assessment Interview focuses only on understanding and reasoning. The vignette method (47, 48) appears to cover understanding, reasoning, and choice, although appreciation may be tapped as well.

An important variation among the instruments is whether the disclosed information and query content are established by the instrument itself or must be tailored for the specific protocol. For instance, participants may receive standard disclosures and questions, and acceptable responses to the questions may be predetermined. The California Scale of Appreciation and the Competency Assessment Interview use hypothetical study protocols and standard questions (although the California scale could be tailored). Another approach is for the instrument to specify the text of the probes (e.g., “What is the purpose of this study?”) while allowing the disclosures and acceptable responses to be tailored; this approach is used in the Evaluation to Sign Consent, the Quality of Informed Consent questionnaire, the Deaconess Informed Consent Comprehension Test, the Informed Consent Survey, the MacArthur Competence Assessment Tool for Clinical Research, the Two-Part Consent Form, and the vignette method.

The instruments vary in the degree of skill and training required of interviewers for valid administration. The Quality of Informed Consent questionnaire and the Two-Part Consent Form are self-administered; a drawback of this format is that it offers no built-in opportunity to ask follow-up questions. The Evaluation to Sign Consent, the Brief Informed Consent Test, the Deaconess Informed Consent Comprehension Test, the Informed Consent Survey, and the vignette method use interviews, although all appear to require only minimal to moderate training of interviewers or scorers. The MacArthur Competence Assessment Tool for Clinical Research, the only instrument for which a published manual provides scoring guidelines (7), does require training, because the items must be scored during the interview so that appropriate follow-up questions can be asked or clarification requested. The California Scale of Appreciation and the Competency Assessment Interview also require moderate training. Most of the instruments take less than 10 minutes to administer, although the more comprehensive ones take longer.

Psychometricians generally suggest that instruments used for clinical decision making have reliability values of at least 0.80 (21). By this standard, most of the instruments we examined had acceptable interrater reliability, although no interrater reliability information was provided for the Brief Informed Consent Test, the Evaluation to Sign Consent, the Informed Consent Survey, or the vignette method. Test-retest reliability has been reported for four of the scales (the MacArthur Competence Assessment Tool for Clinical Research, the Quality of Informed Consent questionnaire, the Two-Part Consent Form, and the California Scale of Appreciation), with coefficients ranging from –0.15 to 0.77, so none reached the 0.80 benchmark. The one negative correlation was for a specific subscale of the MacArthur Competence Assessment Tool for Clinical Research in a single application study of women with depression (35).
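To make the 0.80 benchmark concrete, the sketch below computes two common reliability statistics on invented data: Cohen’s kappa for agreement between two raters’ categorical item scores, and a Pearson correlation between test and retest totals. The reviewed studies did not all use these particular statistics (interrater reliability is also often reported as an intraclass correlation), so the choice of kappa and Pearson r, the function names, and all data are illustrative assumptions, not the methods of any instrument discussed above.

```python
from collections import Counter
from math import sqrt

# Benchmark cited in the text for instruments used in clinical decision making.
RELIABILITY_THRESHOLD = 0.80


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters' categorical codes."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    # Proportion of responses on which the two raters assigned the same code.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal code frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical code")
    return (observed - expected) / (1 - expected)


def pearson_r(x, y):
    """Pearson correlation, used here for scores at test and retest."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two score lists of equal length (at least 2)")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def meets_threshold(value, threshold=RELIABILITY_THRESHOLD):
    return value >= threshold


# Hypothetical item scores (0, 1, or 2) given by two raters to the same 10 responses.
rater_a = [2, 2, 1, 0, 2, 1, 1, 0, 2, 2]
rater_b = [2, 2, 1, 0, 2, 1, 0, 0, 2, 2]
kappa = cohens_kappa(rater_a, rater_b)

# Hypothetical total scores for 5 participants tested twice.
test = [18, 20, 15, 12, 19]
retest = [17, 20, 14, 13, 18]
r = pearson_r(test, retest)

print(f"kappa = {kappa:.2f}, meets 0.80: {meets_threshold(kappa)}")
print(f"test-retest r = {r:.2f}, meets 0.80: {meets_threshold(r)}")
```

With these invented ratings, kappa is about 0.84 and the test-retest correlation about 0.97, so both clear the benchmark; the test-retest values actually reported for the research-consent scales above top out at 0.77.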

When item content varies with specific use, another potential source of variance may be introduced by the disclosures and acceptable responses that are specified for the different uses. The manual for the MacArthur Competence Assessment Tool for Clinical Research gives fairly detailed instructions on preparation of the items. However, no data are available on how consistent this and other modifiable instruments are, even with trained users—that is, on how “reliable” the item content preparation phase is, or the “inter-item-writer reliability.” In the absence of such data, it is not clear whether, or under what conditions, results from these instruments can be generalized across specific uses, even when referring to similar protocols. The reliability and validity data of one version may not generalize to other versions prepared by other users.

Information about concurrent, criterion, or predictive validity has been published for the MacArthur Competence Assessment Tool for Clinical Research (31, 37), the Brief Informed Consent Test (38), the Evaluation to Sign Consent (37), the Deaconess Informed Consent Comprehension Test (42), the Two-Part Consent Form (43), and the vignette method as described by Schmand et al. (47). The external criterion was generally capacity judgments made by physicians. However, lack of agreement between such “expert” judgment and subjects’ performance on the instruments is difficult to interpret, because the expert judgment is itself an imperfect criterion. Convergence with opinions from other potential experts or stakeholders (e.g., patients, family members, and legal or regulatory authorities) was rarely examined, although judgments of some nonphysician experts have been included in studies of the Quality of Informed Consent questionnaire (41) and the Two-Part Consent Form (43). Several reports attempted to establish concurrent validity by showing associations with general functional or cognitive measures (38, 42, 47), but because decisional capacity is context- and decision-specific, such correlations are not fully germane. Finally, Cronbach’s alpha, a measure of internal consistency, was 0.69 (fair) for the Schmand et al. vignette method (47) and ranged from 0.83 to 0.88 for the California Scale of Appreciation (22).
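Cronbach’s alpha summarizes internal consistency by comparing the sum of the individual item variances with the variance of respondents’ total scores: for k items, alpha = k/(k − 1) × (1 − Σ item variances / total-score variance). The Python sketch below applies that standard formula to a small, invented score matrix; the function name and data are hypothetical and are not taken from the Schmand et al. or California Scale of Appreciation studies.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need a rectangular matrix with at least two items")
    items = list(zip(*responses))              # one tuple of scores per item
    totals = [sum(row) for row in responses]   # each respondent's total score
    item_var_sum = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / pvariance(totals))


# Hypothetical scores: 4 respondents (rows) x 3 items (columns), each item scored 0-2.
responses = [
    [2, 2, 1],
    [1, 1, 1],
    [2, 1, 2],
    [0, 0, 0],
]
alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.2f}")
```

The sketch uses population variance throughout; because the same denominator appears in both parts of the ratio, sample variance would give the same alpha. Verbal labels such as “fair” for 0.69 come from interpretive conventions, not from the calculation itself.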

Capacity to Consent to Treatment

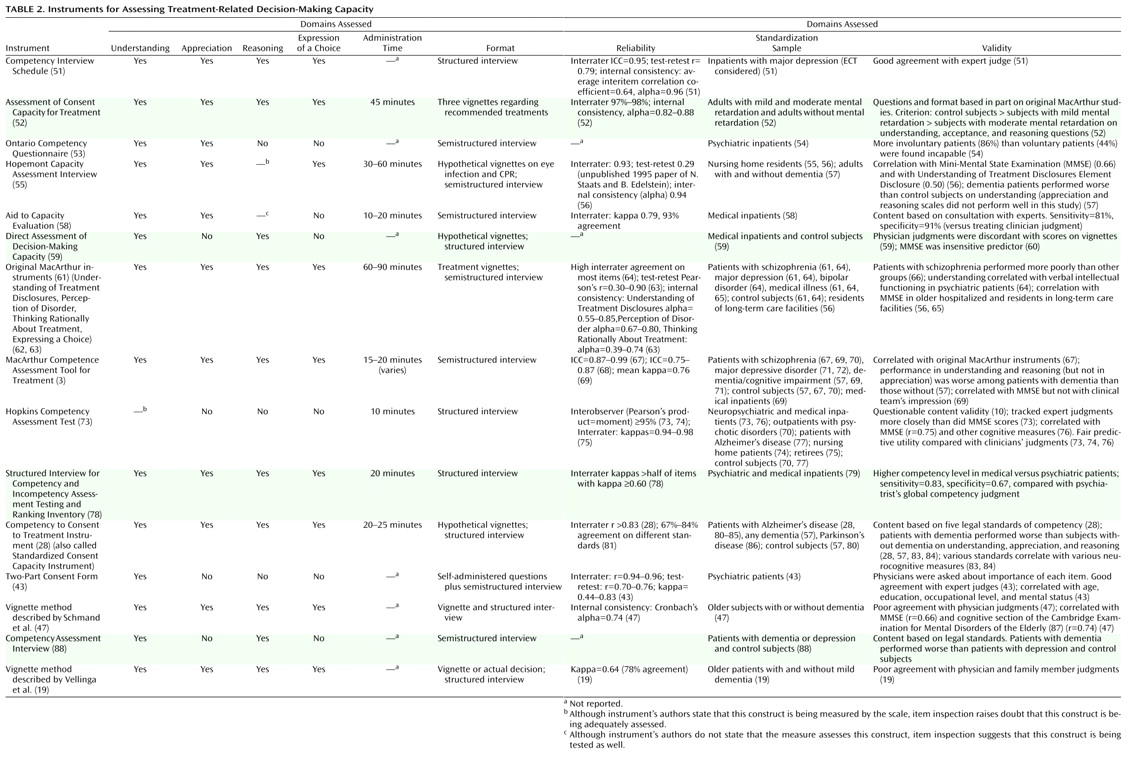

All 15 of the instruments that focus on capacity to consent to treatment (Table 2) measure understanding, but only nine of them appear to assess all four capacity dimensions. Of the remaining six instruments, two assess only understanding, two assess understanding and appreciation, and two assess understanding and reasoning.

Preset vignettes or content are used as stimuli in eight of the 15 instruments: the Assessment of Consent Capacity for Treatment, the Hopemont Capacity Assessment Interview, Fitten et al.’s direct assessment of decision-making capacity (60), the original MacArthur instruments (63), the Hopkins Competency Assessment Test, the Competency to Consent to Treatment Instrument, and the two vignette methods (although Vellinga et al. [19] presented the actual treatment decision to a subset of patients). In contrast, the patient’s actual treatment decision is used in the MacArthur Competence Assessment Tool for Treatment, the Competency Interview Schedule, the Ontario Competency Questionnaire, the Aid to Capacity Evaluation, the Two-Part Consent Form, the Structured Interview for Competency and Incompetency Assessment Testing and Ranking Inventory, and the Competency Assessment Interview, and it can also form the basis for the Vellinga et al. vignette method.

All 15 instruments employ structured or semistructured interviews, although the Two-Part Consent Form uses a self-administered questionnaire that is followed by additional questions when the questionnaire is returned (43). The degree of training needed to administer these instruments ranges from minimal, as for the Hopkins Competency Assessment Test, to more substantial, as for the Competency Interview Schedule, the Assessment of Consent Capacity for Treatment, the Ontario Competency Questionnaire, the Hopemont Capacity Assessment Interview, the Aid to Capacity Evaluation, Fitten et al.’s direct assessment of decision-making capacity (59, 60), the original MacArthur instruments (3, 63), the MacArthur Competence Assessment Tool for Treatment, the Structured Interview for Competency and Incompetency Assessment Testing and Ranking Inventory, the Two-Part Consent Form, the Competency to Consent to Treatment Instrument, the Competency Assessment Interview, and the two vignette methods. Detailed manuals to guide administration, scoring, and interpretation are available only for the Hopemont Capacity Assessment Interview, the original MacArthur Competence Study instruments (Understanding of Treatment Disclosures, Perception of Disorder, Thinking Rationally About Treatment), and the MacArthur Competence Assessment Tool for Treatment; a training video is also available for the MacArthur Competence Assessment Tool for Treatment. Administration time was not widely reported for these instruments, but it varies with the comprehensiveness of the evaluation.

Information on reliability was reported for 12 of the instruments. Adequate interrater reliability (≥0.80) has been reported for the Competency Interview Schedule (51), the Assessment of Consent Capacity for Treatment (52), the Aid to Capacity Evaluation (58), the Hopemont Capacity Assessment Interview (unpublished 1995 paper of N. Staats and B. Edelstein), the Understanding of Treatment Disclosures, Perception of Disorder, and Thinking Rationally About Treatment scales (63), the MacArthur Competence Assessment Tool for Treatment (67, 69), the Hopkins Competency Assessment Test (73), the Structured Interview for Competency and Incompetency Assessment Testing and Ranking Inventory (78), the Competency to Consent to Treatment Instrument (28), and the Two-Part Consent Form (43). Data on internal consistency have been reported for the Competency Interview Schedule (51), the Hopemont Capacity Assessment Interview (56), and the original MacArthur instruments (59, 63, 88); for the MacArthur instruments, internal consistency seemed to vary with the study population, being higher for hospitalized psychiatric patients than for cardiac patients and healthy community samples. The authors of the Competency Interview Schedule (51) and Schmand et al. (47) used interitem correlations to evaluate internal consistency. Test-retest reliability has been reported for only four of the scales: the Competency Interview Schedule (51), the Hopemont Capacity Assessment Interview (unpublished 1995 paper of N. Staats and B. Edelstein), the original MacArthur instruments (63), and the Two-Part Consent Form (43). For the seven instruments with variable item content, no data have been published on the reliability of item preparation or on associations between versions prepared by different users.

Data related to concurrent, criterion, or predictive validity have been published for all of the treatment-consent capacity instruments except the Competency Assessment Interview. In most cases, the external criterion was physicians’ judgments of decisional capacity. Data on the instruments’ ability to discriminate between patients judged by experts as competent and those judged incompetent were reported for the Competency Interview Schedule (51, 89), the Aid to Capacity Evaluation (58), the Hopkins Competency Assessment Test (73, 76), Fitten et al.’s direct assessment of decision-making capacity (59), the MacArthur Competence Assessment Tool for Treatment (69, 70), the Structured Interview for Competency and Incompetency Assessment Testing and Ranking Inventory (78), and the two vignette methods (19, 47). For Fitten et al.’s assessment instrument, the MacArthur Competence Assessment Tool for Treatment, and both vignette methods, performance on the instrument did not correspond to physicians’ judgments of older patients’ global competency; this lack of correspondence was interpreted as indicating that clinicians were relatively insensitive to the decisional impairment of these study subjects. Performance on cognitive tests was correlated with decisional capacity scores in some studies (47, 56, 64, 69, 76) but not in others (59, 60, 73, 74, 76). Such findings are consistent with the notion that decisional capacity is a construct distinct from cognitive functioning, although cognitive factors contribute to the measured abilities. For Vellinga et al.’s vignette method (19), convergence between instrument results and family members’ opinions was also evaluated; family members’ judgments of subjects’ competency did not correspond well to results on the instrument.
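Discrimination studies of this kind cross-tabulate instrument results against an external judgment. A minimal sketch of that cross-tabulation follows, assuming a single hypothetical score cutoff below which the instrument is taken to flag impaired capacity; the cutoff, function name, and data are invented, and the studies cited above may have used other statistics (for example, group mean comparisons). Because, as noted above, physician judgment is itself fallible, “sensitivity” and “specificity” here describe agreement with that reference, not accuracy against true capacity.

```python
def agreement_with_reference(scores, judged_incompetent, cutoff):
    """Compare instrument classifications with an external reference judgment.

    A score below `cutoff` is treated as the instrument flagging impaired capacity.
    `judged_incompetent` holds the reference (e.g., physician) judgment per subject.
    """
    if len(scores) != len(judged_incompetent) or not scores:
        raise ValueError("need equal-length, non-empty inputs")
    tp = fp = tn = fn = 0
    for score, incompetent in zip(scores, judged_incompetent):
        flagged = score < cutoff
        if flagged and incompetent:
            tp += 1  # instrument and reference both indicate impairment
        elif flagged:
            fp += 1  # instrument flags impairment, reference does not
        elif incompetent:
            fn += 1  # reference indicates impairment, instrument does not
        else:
            tn += 1  # both judge capacity intact
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
        "overall_agreement": (tp + tn) / len(scores),
    }


# Hypothetical total scores and reference judgments for 8 subjects.
scores = [20, 18, 9, 12, 7, 19, 15, 6]
judged_incompetent = [False, False, True, False, True, False, True, True]
result = agreement_with_reference(scores, judged_incompetent, cutoff=13)
print(result)
```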