Individuals with autism are notably deficient in both the recognition of emotional prosody (1) and the perception of facial emotion (2). Seemingly to compensate for these deficits, individuals with autism use effortful cognitive strategies based on learned associations and prototypical references to label emotional expressions (3). They also preferentially categorize facial stimuli by some nonsocial dimension rather than by emotional content (2). Thus, emotional faces appear to carry less social relevance and communicative value for individuals with autism than for individuals without autism.

Investigations of facial emotion processing in autism with functional imaging techniques have revealed that, in addition to lower activation of the fusiform gyrus, a brain area associated with face processing, individuals with autism show absent or reduced activation in limbic and paralimbic regions (4, 5). These limbic and paralimbic regions not only attach emotional significance to sensory experiences but also receive emotionally arousing sensory input through extensive reciprocal connections with sensory association cortices (6). Thus, the lessened fusiform activation identified during emotion processing (4) may reflect a failure of emotional facial stimuli to acquire motivational or emotional significance. The present study therefore examined whether attenuated neural activation to facial stimuli persists in subjects with autism when the salience of emotional cues is increased by providing additional prosodic information.

Method

Eight men with autism (ages 20–33 years) and eight male comparison subjects of similar ages gave informed written consent to participate in this study, as approved by the ethics committee of McMaster University. Participants with autism had a DSM-IV diagnosis of autism (N=6) or Asperger’s syndrome (N=2), and neither they nor their parents or guardians reported any comorbid neurological or psychiatric disorders, drug or alcohol abuse, or history of head injury or seizures. The comparison subjects did not endorse drug or alcohol abuse or report neurological or psychiatric disorders, a history of head injury, or a familial history of autism. All subjects were assessed as right-handed (7); nonverbal IQs (8) were similar for participants with autism (mean=105, SD=18, range=80–130) and comparison subjects (mean=109, SD=16, range=90–135) (t=0.46, df=14, p>0.70).
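As a check on the IQ comparison, the t statistic can be approximately recomputed from the summary values. The sketch below assumes a pooled-variance (Student) two-sample t test, which is consistent with the reported df=14 for two groups of eight; the function name is illustrative. Because the means and SDs in the text are rounded, the recomputed value (about 0.47) may differ slightly from the reported t=0.46.

```python
import math


def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Two-sample Student t statistic with pooled variance, from summary statistics."""
    df = n1 + n2 - 2
    # Pooled variance: each group's variance weighted by its degrees of freedom
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (m2 - m1) / standard_error
    return t, df


# Nonverbal IQ (rounded values from the text): autism group vs comparison group
t, df = pooled_t_from_summary(105, 18, 8, 109, 16, 8)
print(f"t={t:.2f}, df={df}")  # t=0.47, df=14
```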

Regional cerebral blood flow (rCBF) was measured during two task conditions, an emotion-recognition task and a gender-recognition baseline task. Each condition was repeated four times in alternation (ABABABAB or BABABABA), and one-half of the subjects received each order. In both conditions, the subjects completed a series of 36 trials in which a recorded voice was presented concurrently with a pair of faces. Each face pair was displayed for 3.4 seconds and preceded by a 0.2-second fixation point. In the emotion-recognition condition, the subjects matched the emotional quality of the voice to the corresponding facial emotion by pressing the appropriate left- or right-hand response button. The experimenter never labeled the emotions for the subjects. In the gender-recognition condition, the subjects matched the gender of a voice with neutral prosody to the face of the same gender. A computer presented the visual and auditory stimuli automatically and recorded response choices and latencies. Between scans, the subjects viewed 7–10 minutes of a videotaped program from a preselected menu of public television documentaries; none of the available films dealt with subject matter of strong interest to the participants with autism. The computer screen remained blank for 1 minute before each test condition began. Before the study, the subjects received up to 12 practice trials, and all demonstrated six successive correct responses on each task.
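The counterbalanced block design and per-scan stimulus timing can be sketched as follows. This is a minimal illustration, not the study's presentation software: the alternating assignment rule in `block_order` is a hypothetical way of giving half the subjects each order, and `stimulus_time_per_scan` assumes no inter-trial interval beyond the 0.2-second fixation and 3.4-second face display, which the text does not state.

```python
FIXATION_S = 0.2      # fixation point before each face pair (from the text)
FACE_S = 3.4          # face-pair display time (from the text)
TRIALS_PER_SCAN = 36  # trials per condition block (from the text)
REPEATS = 4           # each condition repeated four times (from the text)


def block_order(subject_index):
    """Hypothetical rule: even-indexed subjects get ABABABAB, odd-indexed get BABABABA.

    A = emotion recognition, B = gender recognition.
    """
    pair = "AB" if subject_index % 2 == 0 else "BA"
    return pair * REPEATS


def stimulus_time_per_scan():
    """Total stimulus time per scan, assuming no gap beyond fixation + face display."""
    return TRIALS_PER_SCAN * (FIXATION_S + FACE_S)


orders = [block_order(i) for i in range(16)]
print(orders[0], orders[1])                    # ABABABAB BABABABA
print(sum(o.startswith("A") for o in orders))  # 8
print(round(stimulus_time_per_scan(), 1))      # 129.6
```

Applying the same alternation within each group of eight would also give four subjects per order in each group; the text does not say whether order was balanced within groups.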

Facial stimuli were constructed from standardized pictures conveying the emotions happy, sad, surprised, or angry (9, 10). Each image was bounded by a frame and masked by an oval so that only the face was visible. Pairs of facial stimuli were generated from a set of 44 distinct male and female faces and presented on a video monitor at a viewing distance of 40–50 cm. As in the study by Anderson and Phelps (11), auditory stimuli were constructed from recordings made by professional actors (three of each sex), who repeated a series of proper names in voices that conveyed the emotions happy, sad, surprised, and angry or that were neutral in tone. Forty-eight distinct neutral and 48 distinct prosodic voice recordings were edited for consistent quality and equal volume.
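The matching logic of the two tasks can be made concrete with a small trial generator. Everything about pairing here is hypothetical: the text does not describe how faces were combined into pairs, how voices were assigned to trials, or how response sides were balanced, so this sketch represents each face only by its emotion or sex, draws the distractor emotion uniformly from the other three, and picks the correct side at random. All function names are illustrative.

```python
import random

EMOTIONS = ["happy", "sad", "surprised", "angry"]
SIDES = ["left", "right"]


def _place(rng, target, distractor):
    """Put the target on a random side and the distractor on the other."""
    correct = rng.choice(SIDES)
    other = "right" if correct == "left" else "left"
    return {correct: target, other: distractor}, correct


def make_emotion_trial(rng):
    # Prosodic voice; one face matches its emotion, the other shows a different emotion
    voice = rng.choice(EMOTIONS)
    distractor = rng.choice([e for e in EMOTIONS if e != voice])
    faces, correct = _place(rng, voice, distractor)
    return {"condition": "emotion", "voice": voice, "faces": faces, "correct": correct}


def make_gender_trial(rng):
    # Neutral voice of one sex; one male and one female face
    voice = rng.choice(["male", "female"])
    distractor = "female" if voice == "male" else "male"
    faces, correct = _place(rng, voice, distractor)
    return {"condition": "gender", "voice": voice, "faces": faces, "correct": correct}


def make_scan(condition, n_trials=36, seed=0):
    """Generate one block of trials for 'emotion' or 'gender'."""
    rng = random.Random(seed)
    maker = make_emotion_trial if condition == "emotion" else make_gender_trial
    return [maker(rng) for _ in range(n_trials)]


scan = make_scan("emotion", seed=1)
first = scan[0]
print(first["voice"], first["faces"], first["correct"])
```

In both conditions the correct response is simply the side whose face shares the relevant attribute (emotion or sex) with the voice.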

rCBF was measured by using an ECAT 953/31 tomograph (CTI PET Systems, Knoxville, Tenn.). Before each scan, 466 MBq of H₂[¹⁵O] was injected into an intravenous line and flushed with normal saline solution. Scan frames 3 to 5 were summed and reconstructed with filtered back-projection (Hann filter: cutoff frequency=0.3) to yield one image per scan, corrected for attenuation and analyzed in SPM99 (12). To identify brain regions activated by each group during emotion processing, a multisubject repeated-measures design was used in which rCBF during emotion recognition was contrasted with rCBF during gender recognition (threshold=p<0.001, uncorrected). To identify brain regions that distinguished participants with autism from comparison subjects during emotion processing, a between-group random-effects analysis (threshold=p<0.001, uncorrected) was performed, as described by Woods (13).
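A between-group random-effects comparison can be illustrated at a single voxel with a simplified summary-statistics approach: each subject contributes one contrast value (mean rCBF across emotion scans minus mean rCBF across gender scans), and the two groups of eight are compared with a two-sample t test on 14 df. This is a sketch under those assumptions, not SPM99's implementation, which fits a general linear model at every voxel. The threshold constant is the standard one-tailed table value for p<0.001 with df=14; SPM t contrasts are directional, so each direction of the group difference is tested separately. The input values are placeholders, not study data.

```python
import math
from statistics import mean, variance

# One-tailed critical t for p<0.001 with df=14 (standard t-table value)
T_CRIT_DF14_P001 = 3.787


def subject_contrast(emotion_scans, gender_scans):
    """Per-subject contrast at one voxel: mean rCBF (emotion) minus mean rCBF (gender)."""
    return mean(emotion_scans) - mean(gender_scans)


def two_sample_t(a, b):
    """Pooled-variance t statistic for the group difference mean(a) - mean(b)."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


# Placeholder values (not study data): one subject's four emotion and four gender scans
print(subject_contrast([52, 53, 51, 52], [50, 50, 49, 51]))

# Placeholder contrast values, one per subject in each group of eight
group_a = [1, 2, 3, 4, 5, 6, 7, 8]
group_b = [0, 1, 2, 3, 4, 5, 6, 7]
t, df = two_sample_t(group_a, group_b)
print(round(t, 3), df, t > T_CRIT_DF14_P001)  # 0.816 14 False
```

Summarizing each subject by a single contrast is what makes the comparison "random effects": between-subject variability, rather than scan-to-scan variability, forms the error term.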

Results

Response latency and error measures showed that the participants with autism performed as well as the comparison subjects on the gender-recognition task. During the emotion-recognition task, they responded as quickly as the comparison subjects but made significantly more errors (data not shown).
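The latency and error measures can be summarized per condition from the computer's response log. This sketch assumes a hypothetical record format (condition, correct side, response side, latency in seconds) and averages latency over all trials in a condition, correct or not; the text does not specify how latencies were aggregated. The trial records are placeholders, not study data.

```python
from statistics import mean


def score(trials):
    """Per-condition error count and mean latency.

    Each trial is a dict with keys 'condition', 'correct', 'response', 'latency_s'
    (hypothetical record format). Latency is averaged over all trials in a condition.
    """
    summary = {}
    for condition in sorted({t["condition"] for t in trials}):
        subset = [t for t in trials if t["condition"] == condition]
        errors = sum(t["response"] != t["correct"] for t in subset)
        summary[condition] = {
            "errors": errors,
            "mean_latency_s": mean(t["latency_s"] for t in subset),
        }
    return summary


# Placeholder records (not study data)
trials = [
    {"condition": "emotion", "correct": "left", "response": "left", "latency_s": 1.0},
    {"condition": "emotion", "correct": "right", "response": "left", "latency_s": 1.5},
    {"condition": "gender", "correct": "right", "response": "right", "latency_s": 0.75},
]
print(score(trials))
```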

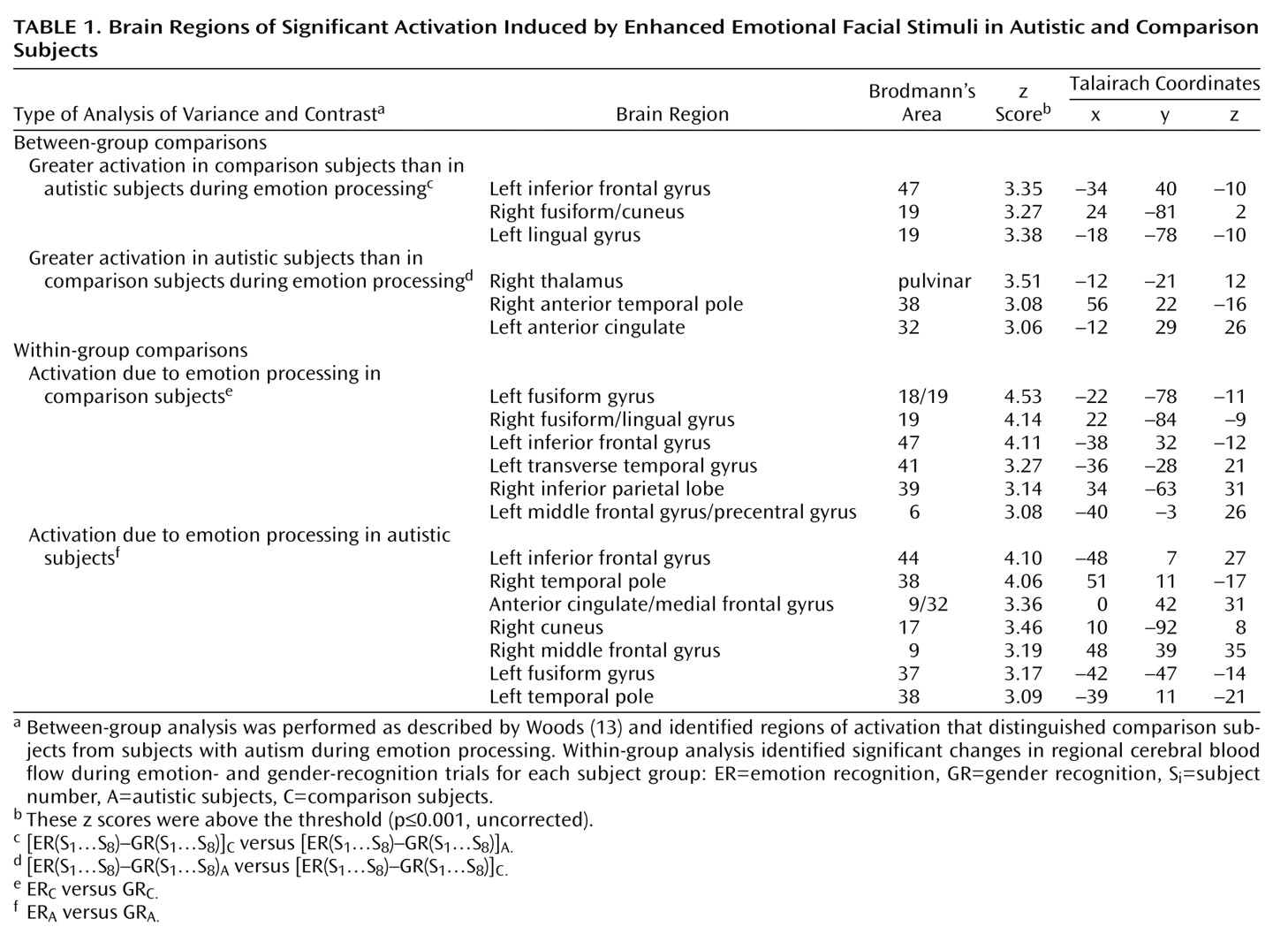

Between-group comparisons (Table 1) revealed that, during emotion recognition, the participants with autism showed significantly greater activation than the comparison subjects in the right anterior temporal pole, the left anterior cingulate, and the right thalamus. Conversely, the comparison subjects showed significantly greater activation than the participants with autism in the right fusiform gyrus, the left lingual gyrus, and the left inferior frontal cortex.

The within-group results (Table 1) show the regions activated by emotion recognition relative to the gender-recognition baseline. They include regions not identified by the between-group analysis, such as bilateral anterior temporal pole activation in the participants with autism.

Discussion

The present paradigm is relatively novel in its use of a cross-modal (visual and auditory) task to amplify the cortical response to facial emotional stimuli (14). We found that when the emotional salience of facial stimuli was enhanced by the availability of prosodic information, adults with autism showed not only diminished activity in the right fusiform region, as observed previously (4, 15), but also reduced inferior frontal activation. These results suggest that when recognizing emotion, high-functioning adults with autism place less processing emphasis than subjects without autism on extracting facial information and on assembling and evaluating an integrated emotional experience.

Instead, during emotion processing, our adults with autism showed greater activation than the comparison subjects in the thalamus, the anterior cingulate gyrus, and the right anterior temporal pole. Greater thalamic activation appears consistent with the suggestion that individuals with autism process facial stimuli through a selective analysis of features rather than holistically (16). Conceivably, within the present context, processing faces along multiple select channels requires greater sensory modulation by the thalamus. The greater anterior cingulate activation observed in our participants with autism was localized to a region functionally associated both with allocating attention to features of the sensory environment (17) and with directing attention to a single modality when cross-modal stimuli compete (18). Thus, in addition to placing greater attentional demands on our participants with autism than on our comparison subjects, the cross-modal emotional stimuli may have been processed as competing rather than complementary sensory experiences. Finally, in light of functional imaging research on categorization (19), the greater activation of the right anterior temporal pole in the adults with autism than in the comparison subjects may suggest that they accessed categorical perceptual knowledge to guide their decisions about emotional stimuli. Thus, the difficulties that individuals with autism experience in recognizing and understanding emotions may in part be due to their reliance on prototypical representations of emotions and on categorical knowledge to solve novel emotional problems.

Although preliminary, these results suggest that emotion processing in autism fails to engage the limbic emotion system and instead is achieved in a feature-selective manner that places large demands on attention processes and draws on categorical knowledge to interpret emotional signals.