Alcohol use disorder has a lifetime prevalence of nearly one in three individuals in the United States (1). An important goal is to identify at-risk individuals prior to the development of this disorder so that they can be targeted for early intervention. One way to determine early phenotypic differences in those at risk is to examine behavior at the level of an individual drinking session. For example, the rate of drinking and total alcohol exposure may differ between those at high and low risk. These parameters, however, are difficult to quantify in the field because of the lack of instruments that can continuously and accurately monitor blood alcohol concentration. Furthermore, asking individuals to report details about their rate of consumption does not account for variability in absorption and metabolism (2) and would likely be inaccurate because intoxication impairs recall (3). Despite these measurement difficulties, there is evidence that the rapid consumption of large quantities of alcohol leading to a blood alcohol concentration of 80 mg%, defined as binge drinking (4), affects psychological and physical well-being. Binge drinking is associated with greater risk of negative health consequences (e.g., myocardial infarction) and legal trouble (5, 6). Binge drinking may signify an innate preference for higher brain alcohol exposure and may begin before an individual meets criteria for an alcohol use disorder, but this hypothesis has never been empirically tested.

One method to assess alcohol consumption that overcomes many of these measurement difficulties is intravenous alcohol self-administration (7). This method has shown good test-retest reliability and external validity (8, 9) and has been employed in pharmacological (10) and genetic studies (11). Intravenous alcohol self-administration has several advantages over oral self-administration. Whereas oral administration at fixed doses can result in up to threefold variability in alcohol exposure between individuals as a result of pharmacokinetic differences (12, 13), intravenous administration standardizes alcohol exposure by bypassing gastrointestinal absorption and first-pass metabolism. Interindividual differences in alcohol distribution and elimination are accounted for by an infusion algorithm that adjusts for age, sex, height, and weight (2). Accordingly, each infusion increases alcohol levels by a fixed quantity, allowing the infusion software to provide continuous estimates of blood alcohol levels that closely track brain alcohol exposure (14) and breathalyzer readings (15). These estimates can then be used to measure an individual’s total alcohol exposure, as well as how quickly the individual reaches a binge level of exposure. This paradigm also eliminates cues specific to oral alcoholic beverages, including taste, smell, and appearance. As a result, intravenous self-administration should be driven primarily by alcohol’s pharmacodynamic effects, such as dopamine release in the nucleus accumbens (16). This method is therefore ideal for determining whether a preference for higher alcohol exposure is evident prior to the development of alcohol use disorder among individuals with biological risk factors.

The DSM-5 lists the following genetic and physiological risk factors for alcohol use disorder (17): family history of alcoholism (18), male sex (1), impulsivity (19), absence of acute alcohol-related skin flush (20), pre-existing schizophrenia or bipolar disorder (21), and low level of response to alcohol (22). Although these factors markedly increase the risk of developing alcohol use disorder, it remains unclear how they affect the likelihood of risky drinking patterns prior to disorder onset. In the present study, we examined the largest community sample to date of young adult social drinkers using intravenous alcohol self-administration. We investigated whether the genetic and physiological risk factors listed in DSM-5 (except for skin flush and comorbid psychiatric disorders, which were exclusion criteria) were associated with the rate of binge-level exposure during an individual drinking session. We hypothesized that individuals at higher risk for developing an alcohol use disorder would exhibit a preference for higher brain alcohol exposure, as demonstrated by higher rates of binging throughout the session and higher levels of total alcohol exposure.

Method

Participant Characteristics

A total of 162 social drinkers between the ages of 21 and 45 were recruited through newspaper advertisements and the National Institutes of Health (NIH) Normal Volunteer Office (for detailed demographic information, see Table 1 and Tables S1–S3 in the data supplement accompanying the online version of this article). To be included, participants had to have consumed at least five drinks on a single occasion at some point in their lives. Participants completed a telephone screen and subsequently an in-person assessment at the NIH Clinical Center in Bethesda, Md. The study protocol was approved by the NIH Addictions Institutional Review Board, and participants were enrolled after providing written informed consent.

Participants were excluded if they met any of the following 10 exclusion criteria: 1) nondrinker; 2) lifetime history of mood, anxiety, or psychotic disorder; 3) current or lifetime history of substance dependence (including alcohol and nicotine); 4) recent illicit use of psychoactive substances; 5) history of acute alcohol-related skin flush; 6) regular tobacco use (>20 uses/week); 7) history of clinically significant alcohol withdrawal; 8) lifetime history of suicide attempts; 9) current or chronic medical conditions, including cardiovascular conditions, requiring inpatient treatment or frequent medical visits; or 10) use of medications that may interact with alcohol within 2 weeks prior to the study. Females were excluded if they were breastfeeding or pregnant or if they intended to become pregnant.

All participants were assessed for psychiatric diagnoses, history of acute alcohol-related skin flush, drinking history, and other risk factors for alcohol use disorder. Diagnoses were assessed with the Structured Clinical Interview for DSM-IV Axis I Disorders (23). History of acute alcohol-related skin flush was assessed using the Alcohol Flushing Questionnaire (24). Drinking history was assessed using the Alcohol Use Disorders Identification Test (25). Two participants were excluded from this analysis because they were heavy drinkers based on the Timeline Followback Interview (>20 drinks/week for males, >15 drinks/week for females). One participant was excluded because a software failure terminated the alcohol self-administration session prior to minute 20, resulting in a final sample size of 159 participants.

Alcohol Use Disorder Risk Factor Measures

Family history.

Participants completed the Family Tree Questionnaire (26) to identify first- and second-degree relatives who may have had alcohol-related problems. They subsequently completed the family history assessment plus individual assessment modules of the Semi-Structured Assessment for the Genetics of Alcoholism for all identified relatives (27). This assessment is widely used in family history-based studies, including large genetic studies such as the Collaborative Study on the Genetics of Alcoholism (28). If no information was available about a relative, that relative was scored as 0. Relatives with a known history of alcohol-related problems were scored as 1. A family history density score was calculated by dividing the number of relatives with alcohol problems by the total number of first- and second-degree relatives. One participant did not complete this measure, and his value was imputed with the sample median of 0 because family history density was not normally distributed (Shapiro-Wilk test: p<0.001). We ran all models with and without this participant and found that his exclusion did not alter our findings; we therefore report the results with this participant included.

Behavioral impulsivity.

Participants completed a delay discounting task (29), which is a well-validated measure of behavioral impulsivity with a robust association with alcohol use disorder (30, 31). During this task, participants chose between smaller immediate rewards and $100 received after a delay (e.g., $90 now or $100 in 7 days). Immediate rewards ranged in value from $0 to $100, and delays ranged from 7 to 30 days. The degree of discounting of delayed rewards, k, can be calculated using the equation developed by Mazur et al. (32). Because k values were not normally distributed, they were normalized using a logarithmic transformation and reported as ln(k). Lower values of ln(k) indicate less impulsivity and less discounting. A portion of the sample did not complete this task (N=25), and missing values of ln(k) were imputed with the sample mean.
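The text cites Mazur’s equation for k but does not specify how k was estimated from the choices. The sketch below assumes the hyperbolic form V = A/(1 + kD), where A is the delayed amount ($100) and D is the delay in days, and fits ln(k) to indifference points by a least-squares grid search. The grid range, the function names, and the use of indifference points rather than raw choices are illustrative assumptions. The last function shows the mean imputation applied to missing ln(k) values.

```python
import math


def hyperbolic_value(amount, k, delay_days):
    """Present value of a delayed amount under hyperbolic discounting."""
    return amount / (1.0 + k * delay_days)


def k_from_indifference(immediate, delayed=100.0, delay_days=7):
    """Solve immediate = delayed / (1 + k * D) for k at one indifference point."""
    if immediate <= 0:
        raise ValueError("an indifference point of $0 implies unbounded k")
    return (delayed / immediate - 1.0) / delay_days


def fit_ln_k(indifference_points, delayed=100.0):
    """Least-squares fit of ln(k) on a grid from -8.00 to 2.00 (step 0.01).

    indifference_points: list of (delay_days, immediate_amount) pairs.
    """
    best_ln_k, best_sse = None, math.inf
    for i in range(-800, 201):
        ln_k = i / 100
        k = math.exp(ln_k)
        sse = sum((hyperbolic_value(delayed, k, d) - v) ** 2
                  for d, v in indifference_points)
        if sse < best_sse:
            best_ln_k, best_sse = ln_k, sse
    return best_ln_k


def impute_mean(values):
    """Replace missing (None) ln(k) values with the mean of observed values."""
    observed = [v for v in values if v is not None]
    fill = sum(observed) / len(observed)
    return [fill if v is None else v for v in values]


# Indifference at $90 now vs. $100 in 7 days: k = (100/90 - 1) / 7, about 0.0159.
print(round(k_from_indifference(90, 100, 7), 4))
```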

Level of response to alcohol.

Participants also completed the Self-Rating of the Effects of Alcohol form (33). This instrument assesses response to alcohol during the first five drinking occasions of a person’s life, their heaviest drinking period, and their most recent drinking period. For each period, it asks how many drinks it took for the respondent to feel different, to feel dizzy, to begin stumbling, and to pass out. The final score is the mean number of drinks needed to achieve each outcome, with a higher number of drinks indicating a lower level of response to alcohol. We focused on the first five drinking occasions in the present analyses to reduce the potentially confounding impact of tolerance.
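The scoring rule above, a mean of drinks needed across outcomes, can be sketched in Python for the first-five-occasions period. How the form treats outcomes a respondent never experienced is not stated in the text; this sketch assumes they are simply left out of the mean, and the outcome keys and function name are hypothetical.

```python
from statistics import mean

# The four outcomes the form asks about for each drinking period.
SRE_OUTCOMES = ("feel_different", "dizzy", "stumbling", "pass_out")


def sre_first_five_score(drinks_needed):
    """Mean number of drinks needed across outcomes experienced in the first
    five drinking occasions; higher = lower level of response to alcohol.

    drinks_needed: dict mapping outcome -> drinks, or None if never experienced.
    Skipping never-experienced outcomes is an assumption of this sketch.
    """
    reported = [drinks_needed[o] for o in SRE_OUTCOMES
                if drinks_needed.get(o) is not None]
    return mean(reported) if reported else None


# Felt different after 2 drinks and dizzy after 4; never stumbled or passed out.
print(sre_first_five_score({"feel_different": 2, "dizzy": 4,
                            "stumbling": None, "pass_out": None}))  # 3
```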

Intravenous Alcohol Self-Administration

Participants were instructed not to drink alcohol in the 48 hours prior to study procedures. Upon arrival, they provided a breathalyzer reading to confirm abstinence. Participants also provided a urine sample that was tested for illicit drugs and, for females, pregnancy; both had to be negative to proceed with the study session. After the participant ate a standardized (350 kcal) meal, an intravenous catheter was inserted into a vein in the forearm. Self-administration was conducted using the computer-assisted alcohol infusion system software, which controlled the rate of infusion of 6.0% v/v alcohol in saline for each individual using a physiologically based pharmacokinetic model for alcohol distribution and metabolism that accounts for sex, age, height, and weight (2).

The alcohol self-administration session consisted of a 25-minute priming phase and a 125-minute free-access phase. During the first 10 minutes of the priming phase, participants were required to push a button four times at 2.5-minute intervals. Each button press resulted in an alcohol infusion that raised blood alcohol concentration by 7.5 mg% over 2.5 minutes, such that participants achieved a peak concentration of approximately 30 mg% at minute 10. During the next 15 minutes, the button remained inactive while participants experienced the effects of the alcohol. At minute 25, the free-access phase began, and participants were instructed to “try to recreate a typical drinking session out with friends.” Participants could self-administer ad libitum, but they had to wait until one infusion was completed before initiating another. Blood alcohol concentration was estimated continuously by the software based on infusion rate and model-estimated metabolism, and a readout was provided at 30-second intervals. Breath alcohol concentration was also measured by breathalyzer at 15-minute intervals to confirm the software-calculated estimates; these readings were entered into the software to provide the model with feedback, and the infusion rate was automatically adjusted accordingly (2). Software estimates of blood alcohol concentration were used to determine whether a participant reached binge-level exposure, defined as an estimated blood alcohol concentration greater than 80 mg% (4). A limit was imposed such that estimated blood alcohol concentration could not exceed 100 mg%, to prevent adverse events due to intoxication.
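The session rules above (7.5 mg% increments over 2.5 minutes, one infusion at a time, 30-second readouts, an 80 mg% binge threshold, and a 100 mg% ceiling) can be illustrated with a toy discrete-time simulation in Python. This is not the physiologically based pharmacokinetic model used by the infusion software: infusions are treated as exact linear rises, the between-infusion decline of 0.25 mg%/min is an assumed placeholder, and rejecting a press that could cross the ceiling is a hypothetical simplification.

```python
# Toy sketch of the self-administration rules; NOT the infusion software's model.
INCREMENT = 7.5          # mg% rise per infusion (from the text)
STEPS_PER_INFUSION = 5   # 2.5-min infusion at 30-s readouts (from the text)
CEILING = 100.0          # safety limit on estimated BAC, mg% (from the text)
BINGE = 80.0             # binge threshold, mg% (from the text)
ELIM_PER_STEP = 0.125    # ASSUMED net decline of 0.25 mg%/min between infusions


def simulate_session(press_steps, n_steps):
    """Return the estimated BAC (mg%) at the end of each 30-s step.

    press_steps: set of step indices at which the button is pressed.
    Presses during an ongoing infusion, or that could push the estimate
    past the ceiling, are ignored.
    """
    bac, remaining, trace = 0.0, 0, []
    for step in range(n_steps):
        if step in press_steps and remaining == 0 and bac + INCREMENT <= CEILING:
            remaining = STEPS_PER_INFUSION
        if remaining > 0:
            bac += INCREMENT / STEPS_PER_INFUSION  # linear rise during infusion
            remaining -= 1
        else:
            bac = max(0.0, bac - ELIM_PER_STEP)    # assumed decline otherwise
        trace.append(bac)
    return trace


def first_binge_minute(trace, step_min=0.5):
    """Minute at which the estimate first exceeds the binge threshold, or None."""
    for i, bac in enumerate(trace):
        if bac > BINGE:
            return (i + 1) * step_min
    return None


# Priming phase: four mandatory presses at 0, 2.5, 5, and 7.5 min.
priming = simulate_session({0, 5, 10, 15}, n_steps=50)
print(priming[19])  # 30.0 at minute 10
```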

Statistical Analysis

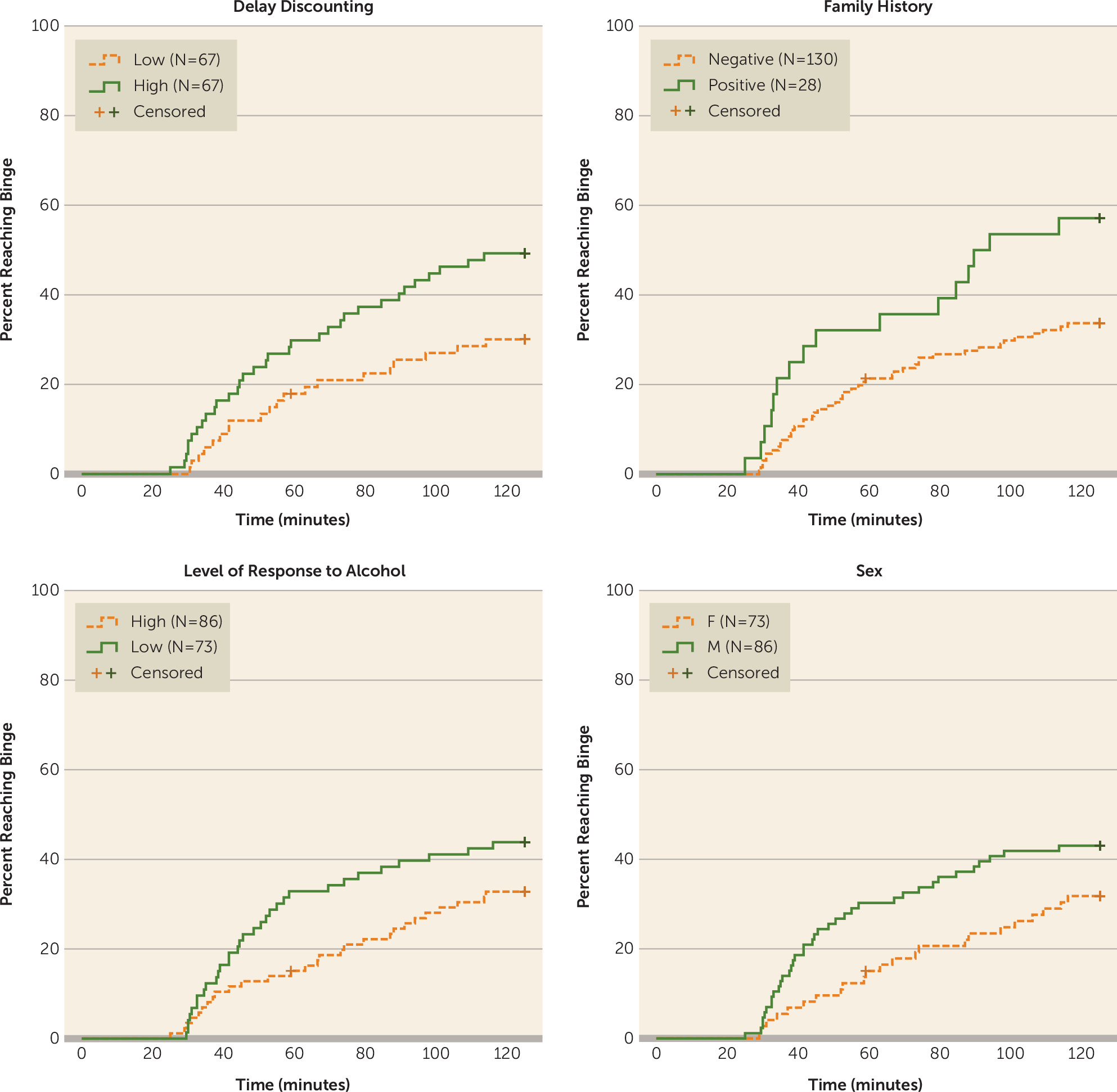

To examine whether risk factors for alcohol use disorder predicted the rate of binging throughout the free-access phase of the intravenous alcohol self-administration session, we plotted Kaplan-Meier survival curves and conducted Cox proportional hazards models. We generated the following four Kaplan-Meier survival curves using binary variables (Figure 1): 1) male compared with female; 2) family-history positive compared with negative; 3) high compared with low impulsivity (median split); and 4) high compared with low level of response to alcohol (median split). For the Cox proportional hazards analyses, the outcome variable was time to binge (estimated blood alcohol concentration of 80 mg%); reaching a binge was the event, and participants were censored if they did not reach a binge by the end of the session or if they ended the session early (one participant). For the initial Cox proportional hazards model, five independent variables were included: sex was coded as a binary variable (0 for females, 1 for males), and delay discounting, family history density, level of response to alcohol, and age were entered as continuous variables.
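The Kaplan-Meier curves described above rest on the product-limit estimator, in which reaching a binge is the event and non-bingers are censored. A minimal, dependency-free Python sketch follows; the function name and example times are hypothetical, and fitting the Cox models themselves would in practice be done with a dedicated survival-analysis package.

```python
def kaplan_meier(times, binged):
    """Product-limit estimate of the proportion not yet at binge level.

    times: minute of binge (if binged) or of censoring (if not).
    binged: True if the participant reached binge level at that time,
            False if censored (no binge by session end, or early exit).
    Returns [(time, survival)] at each time with at least one binge.
    """
    data = sorted(zip(times, binged))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        events = censored = 0
        # Group tied times; binges at t use the risk set before censored cases leave.
        while i < len(data) and data[i][0] == t:
            if data[i][1]:
                events += 1
            else:
                censored += 1
            i += 1
        if events:
            survival *= 1.0 - events / at_risk
            curve.append((t, survival))
        at_risk -= events + censored
    return curve


# Hypothetical free-access times (minutes); 125 = reached session end without binging.
print(kaplan_meier([10, 20, 20, 30, 125], [True, True, False, True, False]))
```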

To determine whether a faster rate of consumption translated into greater overall exposure to alcohol, we calculated the area under the estimated blood alcohol concentration versus time curve during the free-access phase of the session. Three individuals ended the session early because of software malfunction or adverse events (at minutes 59, 88.5, and 99.5); to generate the area under the curve for these participants, we imputed values for the remainder of the session by carrying their last observed alcohol concentration forward. To confirm the validity of this approach, we applied the same imputation procedure to 20 randomly selected participants starting at minute 59 and found that the imputed values correlated highly with the actual values (Spearman’s rho >0.9). We conducted Mann-Whitney tests to compare area under the curve distributions for each risk factor, as area under the curve values were not normally distributed (Shapiro-Wilk test: p<0.05). For these analyses, we used the binary categorical risk factors described above.
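The area under the curve and the last-observation-carried-forward imputation described above can be sketched in Python. The trapezoidal rule and a 251-sample grid (125 minutes of 30-second readouts, counting both endpoints) are assumptions; the text does not state which numerical integration method was used, and the function names are hypothetical.

```python
def carry_forward(readouts, n_expected):
    """Pad a truncated series by repeating its last observed value (LOCF)."""
    if not readouts:
        raise ValueError("at least one observed readout is required")
    missing = max(0, n_expected - len(readouts))
    return list(readouts) + [readouts[-1]] * missing


def auc_trapezoid(readouts, step_min=0.5):
    """Area under a concentration-time curve (mg%*min) by the trapezoidal rule."""
    return sum((a + b) / 2.0 * step_min for a, b in zip(readouts, readouts[1:]))


# 125-min free-access phase at 30-s readouts -> 251 samples including both ends.
N_SAMPLES = 251
flat = [40.0] * N_SAMPLES
print(auc_trapezoid(flat))  # 5000.0
```

For a participant who left early, `auc_trapezoid(carry_forward(observed, N_SAMPLES))` would give the imputed exposure under these assumptions.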

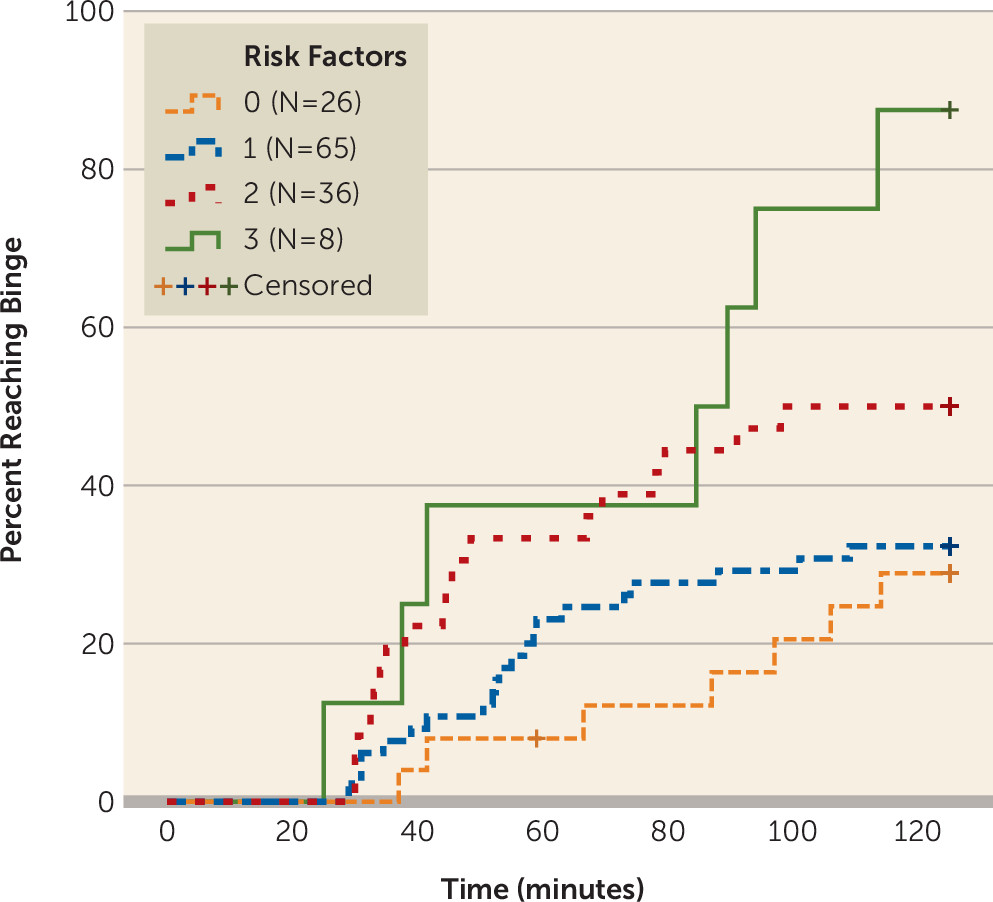

To assess the additive effects of significant variables from the aforementioned analyses, we coded individuals according to their number of risk factors for alcohol use disorder. For this analysis, we used only the binary risk factors described above, excluding level of response to alcohol, which did not contribute to the aforementioned models. We thus created four groups: zero-, one-, two-, and three-risk factor groups. The zero-risk factor group served as the reference group. We plotted Kaplan-Meier survival curves to examine differences between groups and fit a Cox proportional hazards model additionally adjusted for age. We also tested whether there was evidence of additive effects of risk factors on overall alcohol exposure during the session by comparing the area under the curve values for different risk groups using a Jonckheere-Terpstra test (34, 35).
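The risk-factor coding and the Jonckheere-Terpstra statistic can be sketched in Python. The J statistic is computed from its definition as a sum of pairwise Mann-Whitney counts across ordered groups (for two groups it reduces to the Mann-Whitney U count). The normal approximation below omits the tie correction, the text does not state whether exact or approximate p values were used, and all function names are hypothetical.

```python
import math
from itertools import combinations


def risk_factor_count(fh_positive, male, high_discounting):
    """Number of binary risk factors present (0-3)."""
    return int(fh_positive) + int(male) + int(high_discounting)


def jonckheere_terpstra(groups):
    """J statistic for groups listed in the hypothesized increasing order.

    Counts, over every pair of groups (lower, higher), how many
    observations in the higher group exceed those in the lower group;
    ties count one-half.
    """
    stat = 0.0
    for lower, higher in combinations(groups, 2):
        for x in lower:
            for y in higher:
                stat += 1.0 if x < y else 0.5 if x == y else 0.0
    return stat


def jt_normal_approx(stat, sizes):
    """z score and one-sided p value under H0, ignoring tie corrections."""
    n = sum(sizes)
    mean = (n ** 2 - sum(s ** 2 for s in sizes)) / 4
    var = (n ** 2 * (2 * n + 3) - sum(s ** 2 * (2 * s + 3) for s in sizes)) / 72
    z = (stat - mean) / math.sqrt(var)
    return z, 0.5 * math.erfc(z / math.sqrt(2))
```

Under this no-ties approximation, the group sizes and statistic reported in the Results (N=26, 65, 36, and 8; T_JT=3746.0) correspond to z of about 3.10 and a one-sided p of about 0.001.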

Results

Effect of Risk Factors on Rate of Binging

Overall, 60 participants achieved binge-level exposure, and 99 participants had estimated blood alcohol concentrations below 80 mg% throughout the entire session. A higher percentage of bingers was found among family-history positive compared with negative individuals (57.1% and 33.1%, respectively), males compared with females (43.0% and 31.5%, respectively), high compared with low delay-discounting individuals (49.3% and 29.9%, respectively), and those with a low compared with high level of response to alcohol (43.8% and 32.6%, respectively) (Figure 1).

We tested whether risk factors for alcohol use disorder predicted the rate of binging throughout the session using a Cox proportional hazards model with all four risk factors and age as independent variables (model 1). Family history density was a significant predictor (hazard ratio=1.04, 95% confidence interval [CI]=1.02–1.07, p=0.001), whereas male sex (hazard ratio=1.71, 95% CI=1.00–2.94, p=0.052) and delay discounting (hazard ratio=1.17, 95% CI=1.00–1.37, p=0.056) were marginally significant. Level of response to alcohol was not a significant predictor of the rate of binging throughout the session (hazard ratio=1.01, 95% CI=0.89–1.15, p=0.840) (Table 2). Because level of response was not contributing to the model and was significantly correlated with sex (Spearman’s rho=0.29; see Table S4 in the online data supplement), we dropped it from the model. In this second analysis (model 2), male sex (hazard ratio=1.74, 95% CI=1.03–2.93, p=0.038), delay discounting (hazard ratio=1.17, 95% CI=1.00–1.37, p=0.048), and family history density (hazard ratio=1.04, 95% CI=1.02–1.07, p=0.002) all significantly predicted binge rate throughout the session. The effects of these risk factors remained consistent when controlling for the Alcohol Use Disorders Identification Test score (model 3). As would be expected, participants with a higher Alcohol Use Disorders Identification Test score were more likely to binge (hazard ratio=1.14, 95% CI=1.04–1.24, p=0.004).

Effects of Individual Risk Factors on Total Alcohol Exposure

We also tested whether each individual risk factor was associated with total alcohol exposure as measured by the area under the estimated blood alcohol concentration versus time curve during the free-access phase. Median alcohol exposure was higher in family-history positive individuals, males, and participants with delay-discounting scores above the median (see Figure S1 in the online data supplement), with significantly different distributions across sex and delay-discounting groups and marginal significance across family history groups (family history: U[28, 130]=2247, p=0.052; sex: U[86, 73]=3763, p=0.031; delay discounting: U[67, 67]=2839, p=0.008). There was no significant difference between those with high and low levels of alcohol response (U[73, 86]=2619, p=0.072).

Additive Effects of Risk Factors on Rate of Binging

To investigate whether the significant risk factors from the prior analysis had additive effects, we divided participants into four groups based on their number of risk factors: zero risk factors (N=26), one risk factor (N=65), two risk factors (N=36), and three risk factors (N=8), where zero risk factors indicates a family-history negative female with a delay-discounting score below the median (Figure 2) (see Table S5 in the online data supplement for characteristics of the sample by risk factor group). Cox proportional hazards regression controlling for age demonstrated that, compared with the zero-risk factors group, individuals in the two-risk factors group (hazard ratio=2.54, 95% CI=1.05–6.12, p=0.038) and the three-risk factors group (hazard ratio=5.27, 95% CI=1.81–15.30, p=0.002) binged at higher rates throughout the session. The zero-risk factors group and the one-risk factor group did not differ (hazard ratio=1.29, 95% CI=0.55–3.04, p=0.562). These effects remained significant when controlling for the level of alcohol response as a continuous variable and the Alcohol Use Disorders Identification Test score (see Table S6 in the online data supplement).

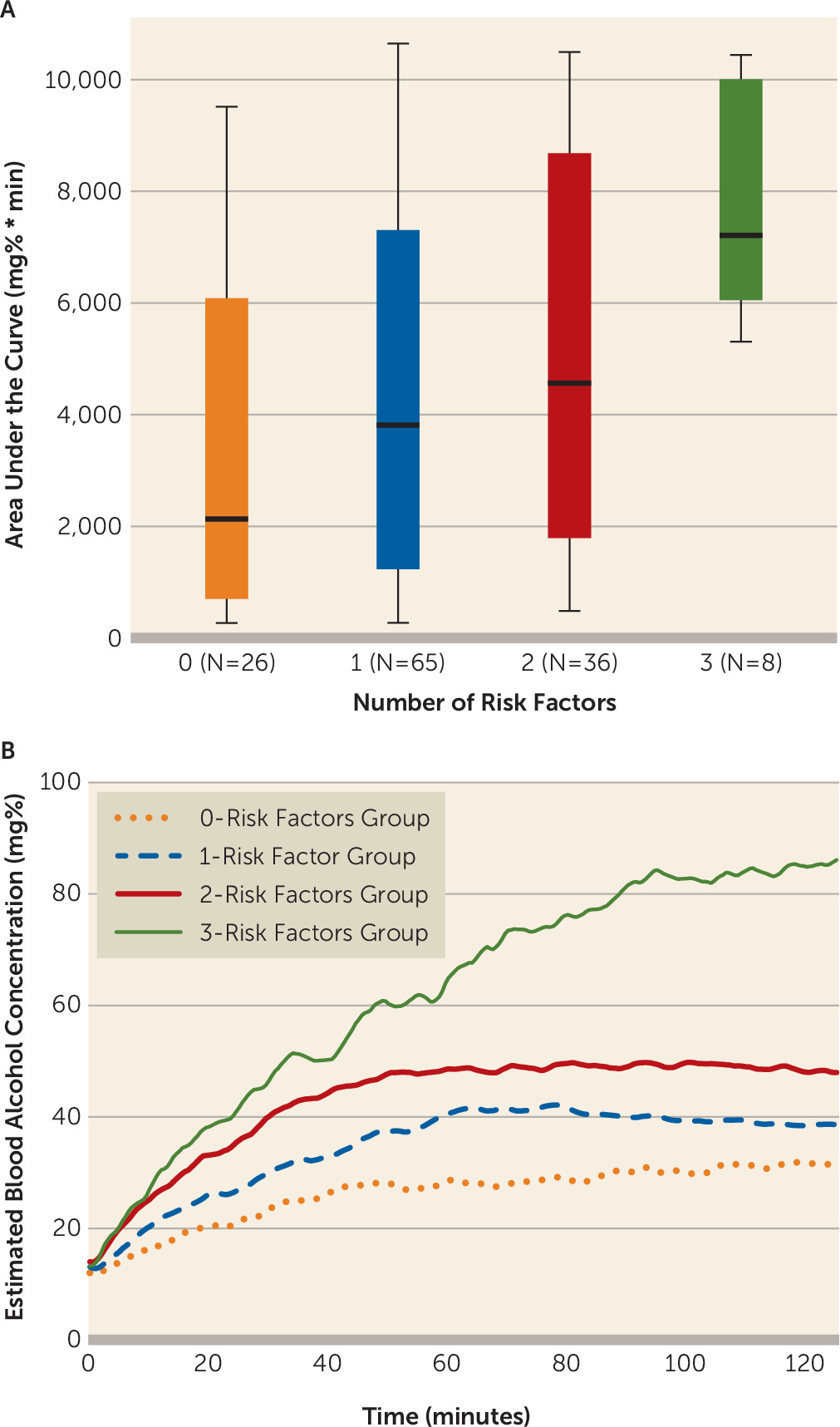

Additive Effects of Risk Factors on Total Alcohol Exposure

Individuals with a greater number of risk factors achieved higher levels of alcohol exposure, with median area under the curve values of 2132.5 mg%*min, 3814.8 mg%*min, 4565.7 mg%*min, and 7208.5 mg%*min for individuals with the lowest to highest number of risk factors, respectively. The results of a Jonckheere-Terpstra test for ordered alternatives indicated a significant effect of number of risk factors on the distribution of area under the curve values, with a small-to-medium effect size (T_JT=3746.0, p=0.001, Kendall’s τ=0.22) (Figure 3). Bonferroni-corrected pairwise comparisons indicated that the area under the curve distributions of the two- and three-risk factors groups differed significantly from that of the zero-risk factors group, and that the distribution of the three-risk factors group also differed from that of the one-risk factor group (all p values <0.05).

Discussion

Young social drinkers at risk for an alcohol use disorder had consumption patterns that were markedly different from those of low-risk drinkers during a free-access intravenous alcohol self-administration session. Vulnerable drinkers had higher rates of binging throughout the session and greater overall exposure to alcohol, and the effects of these risk factors were additive. This finding is especially remarkable given the similarity of Alcohol Use Disorders Identification Test scores between the higher- and lower-risk groups and given that these effects remained largely unchanged when controlling for test scores. To our knowledge, this is the first large pharmacokinetically controlled study to show that the presence of risk factors for alcohol use disorder is associated with different patterns of drinking at the level of an individual drinking session in young social drinkers who have not yet developed the disorder. These findings suggest an innate neurobiological preference for higher alcohol exposure that may contribute to alcohol use disorder risk.

Of the factors we examined, family history of alcoholism was most strongly associated with the rate of binging during the session, with a small-to-medium effect size. This finding is in accordance with epidemiologic studies showing that up to one-half of the risk of alcoholism is genetic and corroborates the results of a small intravenous alcohol self-administration study demonstrating that family-history positive individuals achieved higher alcohol exposures (36). Our study extends these intravenous alcohol self-administration results by showing that participants with a greater percentage of biological relatives with alcohol problems were at greater risk. Our study also found higher rates of alcohol consumption in males compared with females, which is consistent with a recent study of intravenous alcohol self-administration in adolescents (9). Delay discounting has previously been observed as a predictor of laboratory alcohol consumption (8), and we confirmed this association here. The level of response to alcohol was not related to the rate of binging or total alcohol exposure in our study. This may be partially due to the surprising finding that participants with a low level of response to alcohol in our study actually had lower family history densities for alcoholism than participants with a high level of response (see Table S3 in the online data supplement), which is the opposite of what has been found in most studies (37), although controlling for family history density did not change our results. Level of response to alcohol may have been influenced by recall bias and may have shown more predictive power if it had been assessed experimentally, as in the original studies by Schuckit (22). Despite some evidence that level of response may vary as a function of the rate of change in blood alcohol concentration and drinking history (37, 38), we chose to use a simpler static measure of level of response here. More complex assessments of level of response may yield different results.

There were several limitations to this study, most notably the cross-sectional design. Longitudinal studies will be needed to confirm that differing patterns of consumption early on are predictive of the development of an alcohol use disorder. Intravenous alcohol self-administration also differs in many ways from real-world alcohol consumption. However, recent results suggest that intravenous self-administration reflects external consumption patterns when comparing drinkers of varying severity (9, 39). A few individuals in our sample were in their forties, and an even younger sample would have been ideal to assess the effects of these risk factors, although the vast majority of the individuals in our sample (86.1%) were 30 years of age or younger. When we controlled for age in our analyses, the effects we observed remained significant. The additive risk factor analysis requires replication, especially given the small number of individuals in the three-risk factors group. Finally, we could not assess how acute alcohol-related skin flush, smoking, and preexisting psychiatric disorders contributed to the rate of binging in this sample because these were exclusion criteria for our study. This limits the generalizability of our findings, especially because smoking and psychopathology are highly comorbid with alcoholism. Future studies should determine whether these factors affect rates of alcohol consumption in young adults.

Prior to the development of an alcohol use disorder, those at higher risk demonstrated differing patterns of alcohol consumption, including higher rates of binging and greater total alcohol exposure. Although most screening tools for alcoholism focus on quantity of consumption across many sessions, focusing on binging and total alcohol exposure during individual drinking sessions may be clinically relevant and may allow for earlier detection of high-risk individuals. Assessing binging and total alcohol exposure in the laboratory, and eventually in the field when appropriate technology is available, may be a helpful way of selecting individuals who require early intervention. Clinical questions regarding the time course of typical drinking sessions, in addition to standard questions about quantity of alcohol consumed, may help better characterize total alcohol exposure and stratify risk. There are likely neurobiological factors that contribute to the way each person drinks, and these may predispose some individuals to reach blood alcohol concentrations that endanger them.

Acknowledgments

The authors thank the late Dr. Daniel Hommer for his mentorship and clinical oversight, Dr. Mary Lee, Dr. David T. George, and Nurse Practitioner LaToya Sewell for medical support and monitoring safety of the participants, as well as Markus Heilig, Reza Momenan, and Melanie Schwandt for their programmatic and operational support. The authors also thank Tasha Cornish and Monique Ernst for comments on earlier drafts of this study; Joel Stoddard and Ruth Pfeiffer for analytic suggestions; the staff of the 5-SW day hospital and 1-HALC alcohol clinic at the NIH Clinical Center; and, for their help with data collection, Julnar Issa, Megan Cooke, Marion Coe, Molly Zametkin, Kristin Corey, Jonathan Westman, Lauren Blau, and Courtney Vaughan.