However, neuropathology may clearly be present among nondemented persons.⁴ In the case of β-amyloid, which can be imaged in vivo, neuropathology in nondemented persons appears to increase the risk of near-term conversion to clinical dementia.⁵,⁶ If δ truly represents a dementing process, then its variance among nondemented persons may reflect the preclinical stages of such processes. We examine this possibility in the Freedom House Study (FHS), a longitudinal study of “successful” aging.⁷ Although the FHS cohort was nondemented and noninstitutionalized at its inception, we have previously shown that it subsequently experienced significant longitudinal declines in global cognition, memory, executive function, and functional status.⁸ The rate of change in executive function was strongly associated with the decline in functional status, suggesting an emergent dementing process.⁷ Although we cannot determine the cause of these trends in the FHS data-space, we predict that they will be specifically associated with δ, measured at baseline in this nondemented sample.

RESULTS

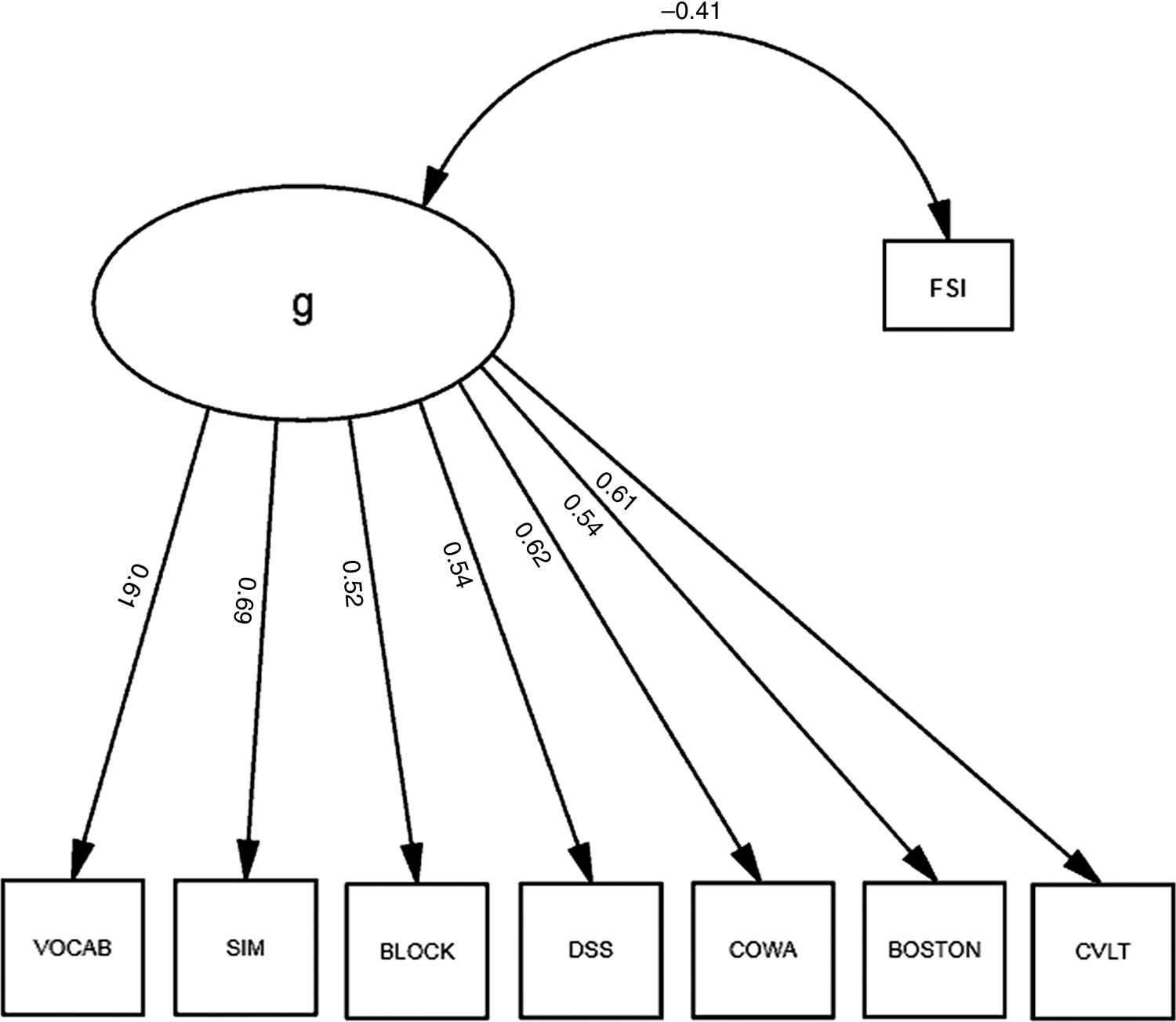

We first built a factor model of a latent variable, “g,” representing the variance shared across the cognitive measures in our battery. “g” was most strongly loaded by WAIS–R SIM (r=0.67), COWA (r=0.62), and VOCAB (r=0.62), and least strongly loaded by WAIS–R BLOCK and DSS (both r=0.50). All loadings were significant (p<0.001).

Next, we correlated our FSI with “g” (

Figure 1). “Functional Status” was significantly associated with g (r = –0.41), which explained 16.8% of its variance. Thus, functional status shared a small but significant fraction of the variance in cognitive performance (i.e., “g”); “g” explained 52.3% of the cognitive battery's variance, but the model exhibited marginally-acceptable fit (χ

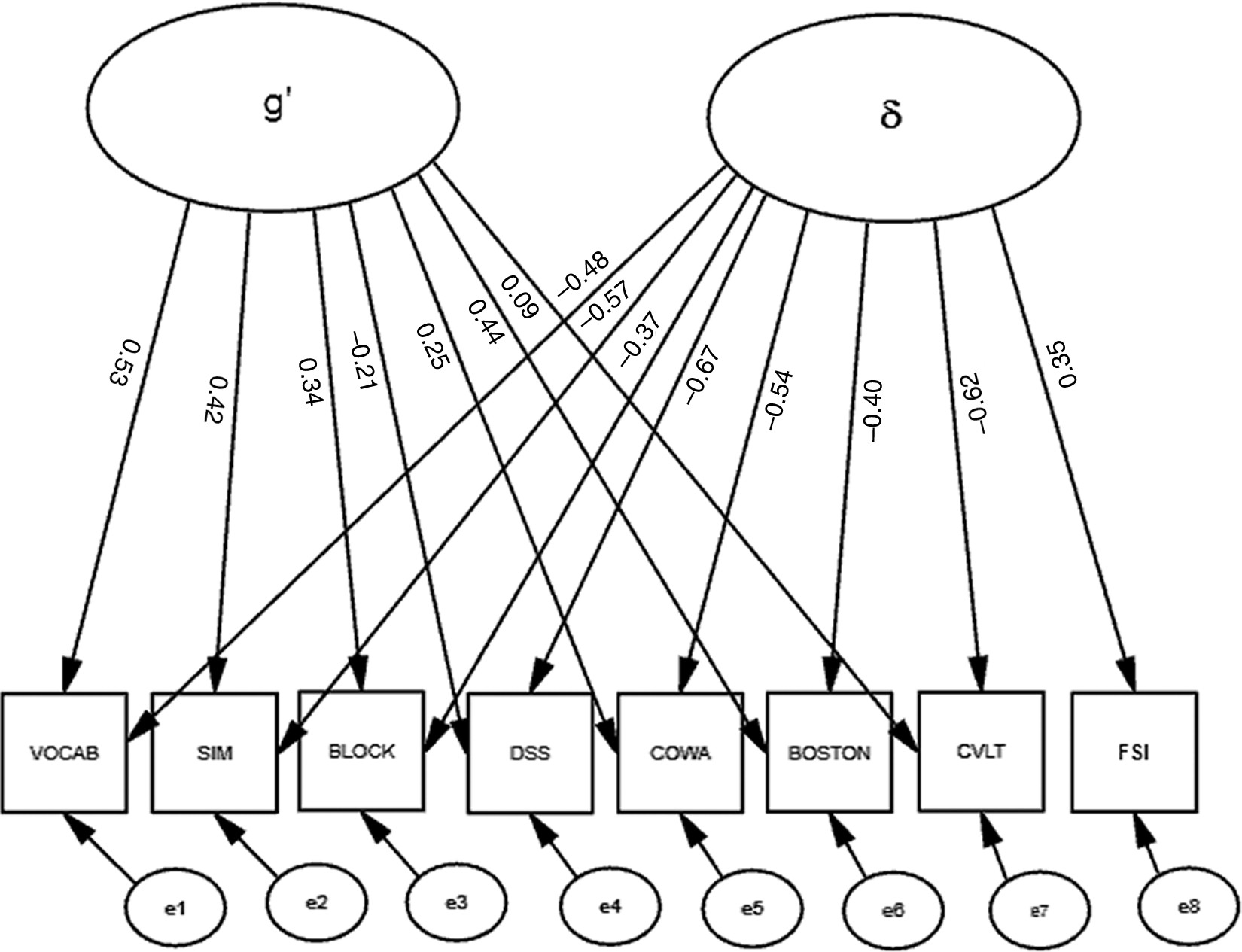

2: F=67.5; df: 18; p<0.001; RMSEA=0.070; BCC=163.38). Moreover, significant correlations among the residuals (data not shown) suggested that a multifactorial model might better fit these data. Therefore, we constructed a second factor, “δ,” representing the shared variance between our FSI and cognitive performance (

Figure 2). Unlike the model in

Figure 1, this model uses Functional Status as an indicator of a latent variable, rather than its correlate. This effectively parses the shared variance across the cognitive measures (i.e., “g”) into a larger fraction that is not related to functional status (i.e., g′), and a smaller fraction; (i.e., δ;

Figure 3). This two-factor model provides better fit to the data than the one-factor model represented in

Figure 1 (χ

2: F=32.5; df: 17; p=0.01; RMSEA=0.040; BCC=155.08). δ is significantly related to “Functional Status” (r=0.35) and negatively related to cognitive performance. All loadings on δ are significant. In contrast to g and g′, δ is most strongly loaded by DSS (r = –0.67). WAIS–R BLOCK's association with g′ was attenuated, and the loadings of the CVLT and WAIS–R DSS on g′ are no longer significant after the creation of “δ” (

Figure 2).
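The variance shares quoted above follow from squaring a standardized correlation or loading. The short sketch below reproduces that arithmetic for three coefficients reported in this section. The helper name `variance_explained` is hypothetical, and treating each coefficient as a standardized loading in a linear model is an assumption made here for illustration, not a description of the authors' software.

```python
# Illustrative helper (not from the source article): the proportion of variance
# shared by two standardized variables in a linear model is the squared
# correlation (or squared standardized loading).
def variance_explained(r: float) -> float:
    """Return r squared for a standardized correlation or loading r."""
    return r * r


# Coefficients reported in the Results text; the labels are ours.
reported = {
    "Functional Status with g (Figure 1)": -0.41,
    "Functional Status on delta (Figure 2)": 0.35,
    "DSS on delta (Figure 2)": -0.67,
}

for label, r in reported.items():
    share = variance_explained(r)
    print(f"{label}: r = {r:+.2f}, r^2 = {share:.4f}")
```

For r = –0.41 the squared value rounds to 0.168, which matches the 16.8% stated in the text. Under the same assumption, the loading of 0.35 implies that most of the variance in Functional Status lies outside δ, that is, in its residual.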

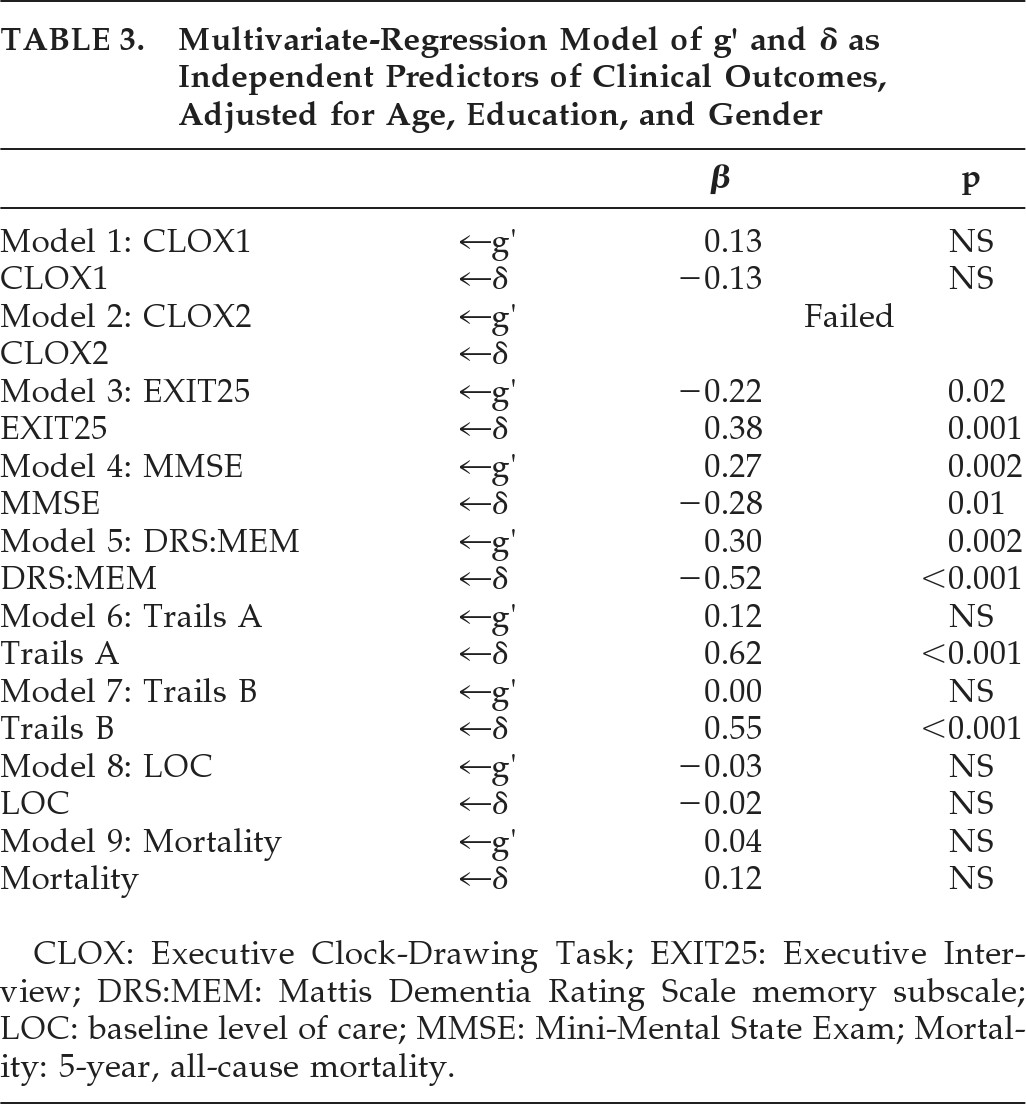

Next, we examined the clinical significance of δ versus g′ in multivariate-regression models of a variety of clinical outcomes. After adjusting for age, education, and gender, g′ and δ were independently, significantly, and moderately associated with DRS:MEM, MMSE, and EXIT25 scores; δ alone was moderately associated with baseline Trails B scores, and strongly associated with Trails A (Table 3). Neither construct was significantly associated with baseline level of care (restricted variability), nor with 5-year, prospective all-cause mortality.

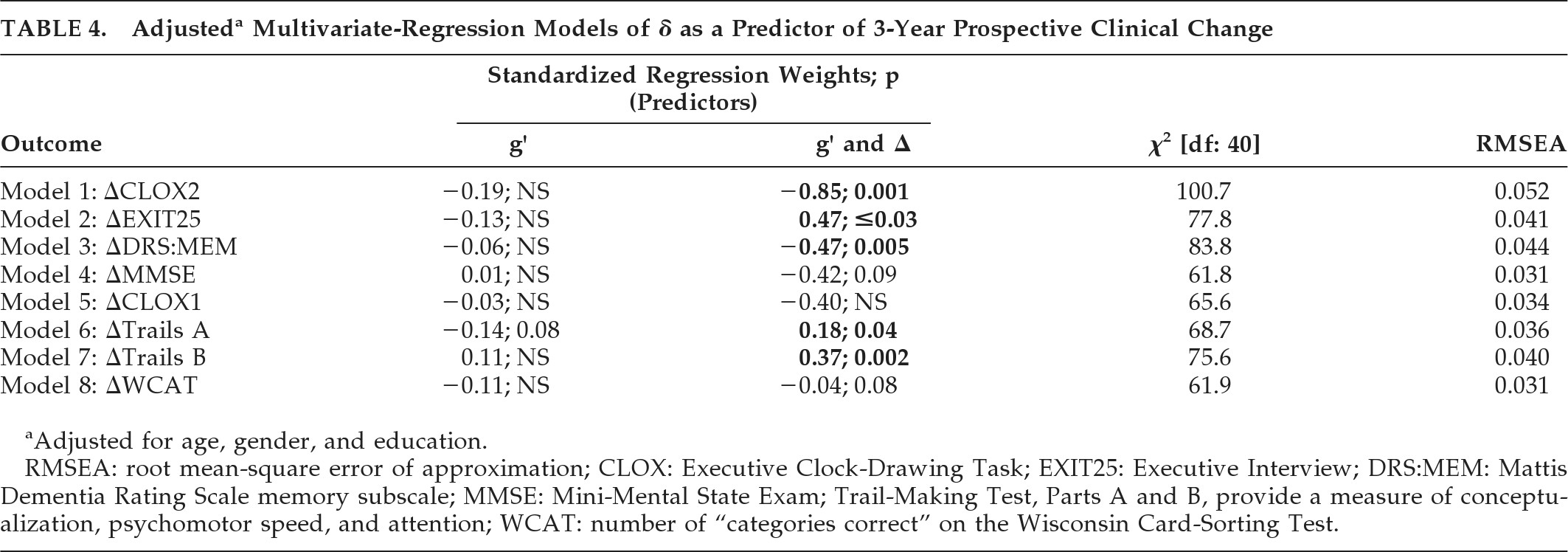

Finally, we examined g′ and δ as independent predictors of 3-year prospective change in cognitive performance, in multivariate-regression models of linear longitudinal change derived from LGC models, adjusted for age, education, and gender (Table 4). All models showed excellent fit (i.e., RMSEA <0.05) except ΔCLOX2, which was acceptable (RMSEA=0.052).
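For readers less familiar with the fit index used above, the usual point estimate of RMSEA can be written as follows. This is the standard textbook definition rather than a formula taken from this article; N denotes the sample size, which is not restated in this section, and some software uses N in place of N − 1.

```latex
\mathrm{RMSEA} \;=\; \sqrt{\max\!\left(\frac{\chi^{2} - df}{df\,(N - 1)},\; 0\right)}
```

Because the numerator is χ² − df, a model whose χ² is close to its degrees of freedom yields a value near zero, which is why smaller values indicate better fit.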

Figure 4 presents the ΔEXIT25 model. Once again, δ was most strongly associated with nonverbal measures (DSS; r = –0.75) and g′ was most strongly associated with verbal measures (VOCAB: r=0.62).

δ was significantly correlated with ΔCLOX2, ΔEXIT25, ΔDRS:MEM, ΔTrails A, and ΔTrails B (Table 4). ΔMMSE showed a trend. g′ was not significantly associated with prospective change in any clinical outcome independently of δ.

DISCUSSION

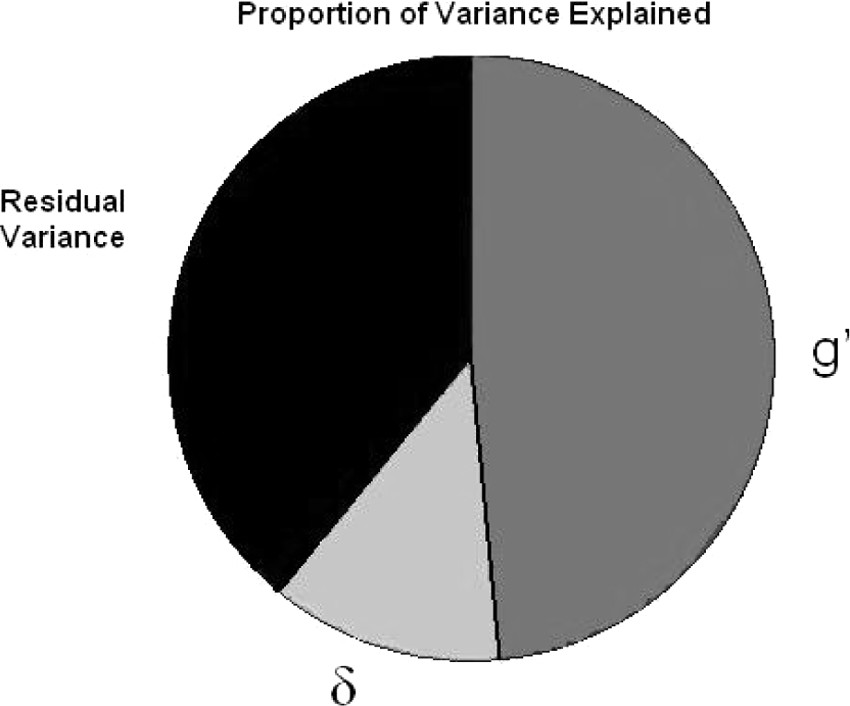

We recently reconceptualized “dementia” as a disorder affecting the cognitive correlates of functional status. In this article, we provide a formal model of that construct, which parses the variance in measured cognitive performance into three compartments: the shared variance that is relevant to dementia case-finding (i.e., δ); the shared variance that is irrelevant to dementia case-finding because it is not associated with functional outcomes (i.e., g′), and the residual variance that is unique to each specific measure in the battery. Despite the fact that this sample does not have dementia at baseline, previous analyses have demonstrated that it has suffered a longitudinally-evolving cognitive decline that is associated with declines in functional status (i.e., a “dementing process”). These trends are demonstrably associated with δ at baseline. In contrast, g′ has no significant association with longitudinal changes in cognition independently of δ.
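The three-compartment partition described above can be written compactly. The following is a sketch under stated assumptions, not the authors' fitted specification: the loading symbols λ and the index k for the number of cognitive measures are notation introduced here, all observed variables are taken to be standardized, and δ and g′ are assumed to be uncorrelated. The term e8 is the functional-status residual labeled in Figure 2.

```latex
\begin{aligned}
y_i &= \lambda_i^{\delta}\,\delta + \lambda_i^{g'}\,g' + e_i, \qquad i = 1,\dots,k \\
\mathrm{FS} &= \lambda_{\mathrm{FS}}^{\delta}\,\delta + e_8 \\
\operatorname{Var}(y_i) &= \bigl(\lambda_i^{\delta}\bigr)^{2} + \bigl(\lambda_i^{g'}\bigr)^{2} + \operatorname{Var}(e_i) = 1
\end{aligned}
```

Read this way, the first squared term is the dementia-relevant share of each measure's variance, the second is the share unrelated to functional status, and the third is the measure-specific residual. Functional status (FS) loads on δ only, which is what separates δ from g′.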

This analysis also reveals how little of the total variance in cognitive performance may actually be relevant to dementia case-finding; δ can be estimated to represent only a small fraction of this sample's total cognitive variance (Figure 3). Nonetheless, δ's variance appears to have more clinical utility than that of g′, and, by definition, g′ has no association with functional status. That so little of the battery's variance is associated with δ may explain why unfiltered cognitive task performance is such a relatively weak predictor of functional outcomes.¹

δ is most strongly related to nonverbal measures, whereas g′ is most strongly related to verbal measures. Although it may be tempting to interpret these as reflecting “fluid” (δ) versus “crystallized” (g′) intelligence, respectively, it is important to note that δ differs from these and similar constructs derived from factor analyses of cognitive performance data because functional status is used as an indicator of δ and not its correlate. Thus, δ may correlate with “fluid intelligence,” but it is not its homologue. Since factor analyses suggest a systematic psychometric bias toward measures of memory and verbal abilities, many cognitive batteries may be insensitive to δ, and hence also to functional outcomes and dementia. Thus, for example, Loewenstein et al.³¹ factor-analyzed an extensive battery of psychometric and functional status measures among 166 patients with AD. Six factors were extracted; however, none of the psychometric measures in Loewenstein et al.'s battery loaded on the factors associated with functional outcomes. Thus, their battery may have failed to detect the essential psychometric correlates of AD dementia, despite the detection of AD-related cognitive changes. We have suggested instead that nonverbal cognitive decline may be most relevant to dementia case-finding.¹ This impression was based in part on studies that report specific associations between nonverbal cognitive functioning and functional outcomes.³²,³³ Nonverbal performance is strongly associated with δ.

The WAIS–R DSS was most strongly associated with δ and may be relatively useful in dementia assessment. We previously factor-analyzed the FHS psychometric battery, and found that DSS, the EXIT25, and COWA load significantly on a factor indicated also by both ADL and IADL.³⁴ Similarly, Barberger-Gateau et al.³⁵ factor-analyzed a battery composed of both cognitive measures and IADL items. Four factors were extracted. The dominant factor, explaining 30.3% of variance in the data-space, received significant loadings from both cognitive and functional measures. Again, the DSS had the strongest loading (r=0.80). Only this factor was associated with the 4-year cumulative risk of incident dementia in nondemented participants. This and our DSS-dominant factor appear homologous to δ.

A potential limitation of this study is that our functional status assessment is relatively primitive and based on subjective ratings. The model might be improved by the use of performance-based functional measures. Pereira et al.³⁶ report an exceptionally strong correlation between the Direct Assessment of Functional Status (DAFS), a performance-based functional assessment, and EXIT25 scores, which are strongly associated here with δ, but not g′. Alternatively, a latent functional-status construct could be derived from the shared variance across several functional-status measures. Such a construct might further reduce the measurement error associated with δ and improve models of its related biomarkers. Also, we note that the residual variance in Figure 3 is substantial and may contain domain-specific cognitive factors. If so, then it is possible that one or more additional cognitive factors would incrementally explain additional variance in functional status, above and beyond that related to δ (i.e., through a significant association with e8 in Figure 2). However, this appears unlikely. In our 2007 review,¹ “global cognition” (represented here by g′ and δ) appeared to be more robustly associated with functional outcomes than any domain-specific cognitive capacity, including executive function. Additional analyses should clarify the relative strengths of global versus domain-specific cognitive functioning as predictors of functional outcomes. However, if domain-specific cognitive skills fail to add variance to functional outcomes independently of δ, it will suggest a fundamental limitation in the ability of cognitive measures to describe dementing processes.

Instead, our analysis suggests that the assessment of functional status should assume a larger role in dementia case-finding.³⁷ Such an approach is feasible. Barberger-Gateau et al.³⁸ have demonstrated that, where cognitive assessment is infeasible or unavailable, impairment on any of four IADL items can accurately diagnose dementia in community-dwelling older adults (sensitivity: 0.94; specificity: 0.71).
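To make the reported sensitivity and specificity easier to interpret, they can be converted into likelihood ratios. The sketch below applies the standard definitions to the two figures quoted above; the function name `likelihood_ratios` is hypothetical, and the conversion is an illustration added here, not a result reported by Barberger-Gateau et al.

```python
# Illustrative conversion (not from the source article) of sensitivity and
# specificity into positive and negative likelihood ratios.
def likelihood_ratios(sensitivity: float, specificity: float) -> tuple[float, float]:
    """Return (LR+, LR-) for a binary screening test."""
    lr_pos = sensitivity / (1.0 - specificity)   # true-positive rate / false-positive rate
    lr_neg = (1.0 - sensitivity) / specificity   # false-negative rate / true-negative rate
    return lr_pos, lr_neg


# Values quoted in the text for impairment on any of four IADL items.
lr_pos, lr_neg = likelihood_ratios(sensitivity=0.94, specificity=0.71)
print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.2f}")
```

With these inputs the negative likelihood ratio is small, so an unimpaired IADL screen lowers the odds of dementia substantially, whereas the positive likelihood ratio is more modest because of the 0.71 specificity.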

Unfortunately, neither the dementia literature nor the Mild Cognitive Impairment (MCI) literature has provided cogent guidance regarding the specific functional capacities or functional status measures that should be used to determine the presence of “dementia.” Our model is interesting because it explicitly parses the variance in functional status into a dementia-relevant fraction and a dementia-irrelevant fraction (i.e., e8 in Figure 2), the latter of which may represent physical handicap.

δ was independently associated with prospective changes in this initially-nondemented cohort. This suggests the possibility of using δ factor scores to identify the subset of nondemented persons most at risk of near-term conversion to a demented state. This may or may not be synonymous with those recognized as having “MCI” on the basis of baseline cognitive performance, given that 1) the cognitive measures used to make that diagnosis are not necessarily strongly associated with δ; 2) only a fraction of the variance in those relevant measures loads on δ; and 3) IADL, which empirically loads strongly on δ (Royall et al.; unpublished), is seldom used specifically to inform dementia status.

Finally, although we have shown δ to be specifically related to 3-year prospective changes in cognition, it remains a cross-sectional construct. However, there is no reason why we could not reiterate this model over serial observations in order to construct LGC or Growth Mixture Models (GMM) of the temporal change in g′ (Δg′) and δ (Δδ). These may be useful in understanding the natural history of dementing processes or in identifying the biomarkers associated with dementia progression or MCI conversion risk.³⁹ GMM would allow the identification of subgroups within a cohort with differentially-evolving Δδ.

In summary, we have explicitly distinguished dementia-relevant variance in cognitive task performance (i.e., δ) from the variance that is unrelated to a dementing process (i.e., g′). δ represents only a small fraction of the total variance in cognitive task performance, yet it is independently associated with baseline cognition and uniquely associated with longitudinal cognitive change, in contrast to g′. δ is most strongly associated with nonverbal measures, whereas g′ is most strongly associated with verbal tasks. These findings are consistent with previous studies suggesting that nonverbal tasks are more strongly associated with functional outcomes. Our ability to distinguish δ from g′ may improve our ability to model dementing processes among nondemented persons. These results have implications for the clinical assessment of dementia and suggest that functional status should assume a more important role.