Mental health systems have shifted away from a medical model and toward a recovery model of care over the past 40 years, and recovery-oriented care has emerged as the prominent paradigm for helping those with mental health conditions (1–5). This is demonstrated by the integration of recovery concepts and language into vision statements, mission statements, and service philosophies (6) as well as into policies that govern service delivery and practice (7) in many large mental health systems.

Few psychometrically tested and validated instruments are available to measure the extent to which programs encompass the necessary organizational precursors to drive recovery-oriented service delivery and, ultimately, outcomes. This is a persistent limitation in evaluating whether a mental health program has successfully transitioned to a recovery model. Some available instruments are helpful for measuring personal recovery (8–16), whereas others are useful for measuring the recovery orientation of services or providers (17–20). There are two main drawbacks in the existing menu of recovery instruments, however. First, existing instruments largely lack an evidence-based conceptualization of the multiple dimensions of recovery, such as those set forth by the Substance Abuse and Mental Health Services Administration (SAMHSA) (21). Second, existing instruments for assessing recovery orientation, to our knowledge, are not rooted in organizational theory, even though a positive culture of healing and organizational commitment to supporting recovery (i.e., organizational climate and culture) are promoted as critical aspects of a recovery-oriented mental health system (22–24). Therefore, they are not helpful for understanding and measuring whether organizational climate and culture promote recovery-oriented service delivery.

According to organizational theory, climate and culture form the foundation for staff actions and the way services are provided. As key elements of the social psychological work context, they directly influence staff behaviors in the workplace (25). Organizational climate is defined as shared meanings among people in the organization about events, policies, procedures, and rewarded behaviors (25). These shared meanings are distinct from the actual policies, practices, and procedures themselves. Organizational culture is more enduring than climate. It connotes the pattern of assumptions that are shared within an organization and taught as the right way to think and perceive (25). Several existing recovery instruments (for example, the Recovery Culture Progress Report [26] and the Recovery-Enhancing Environment Scale [27]) do, in fact, include items pertaining to organizational climate and culture. However, these touch only superficially on the constructs, are not explicitly connected to organizational theories, and to date have not been psychometrically assessed. In this study, we addressed this gap by developing a validated instrument to assess recovery-promoting climate and culture within mental health programs.

Methods

Conceptual Framework

Organizational theory on climate and culture.

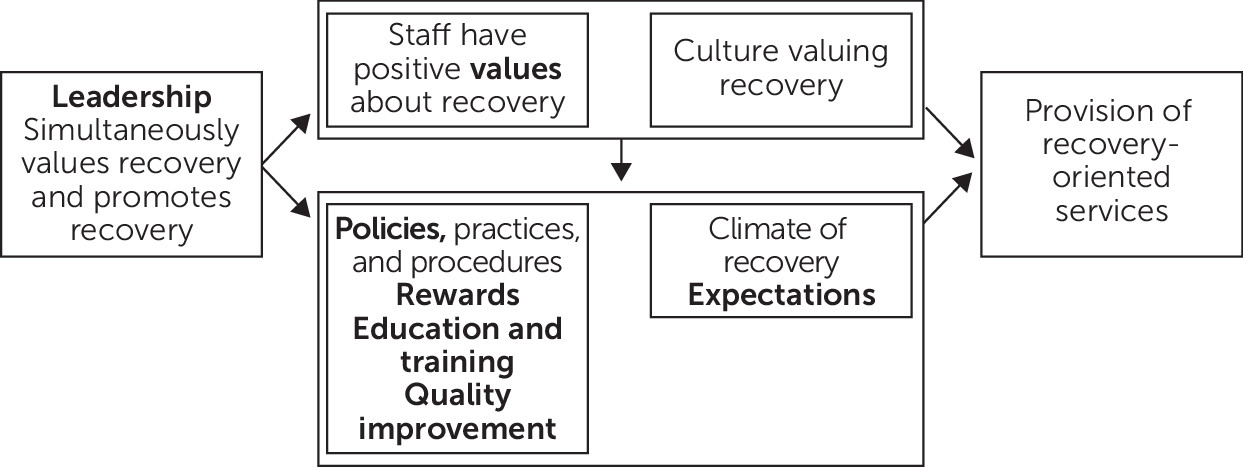

The model of organizational climate and culture by Ehrhart et al. (25) guided the selection of organizational domains for instrument development (Figure 1). It proposes that leadership affects both organizational culture (shared values) and climate (shared meanings and expectations held by staff). Organizational policies, practices, and procedures, in addition to leadership and staff expectations, are posited to affect organizational climate. We operationalized practices and procedures as staff rewards, staff education and training, and quality improvement processes. Therefore, the organizational domains used in instrument development were leadership, values, expectations, policies, rewards, education and training, and quality improvement.

Our approach was similar to Moos and Houts’s (28) work in developing social climate scales in mental health settings. As is the usual practice in assessing climate and culture (29–32), we developed an instrument for staff to complete, and items elicited their perceptions of expectations, leadership, rewards, etc., in their organization. Climate and culture were measured as the aggregate (mean) values of staff perceptions within each organization.

Evidence-based dimensions of recovery.

A systematic review of recovery dimensions identified in the scientific literature (33) further guided instrument development. The recovery dimensions used were individualized/person-centered, empowerment, hope, self-direction, relational, nonlinear/many pathways, strengths based, respect, responsibility, peer support, holistic, culturally sensitive, and trauma informed.

Item Development

Identification, adaptation, and development of items.

Using two compendiums of recovery instruments (34, 35), we identified 15 existing instruments as potentially relevant to our study (see list in the online supplement) and reviewed each one. We then mapped relevant items to organizational domains and recovery dimensions in our conceptual framework and edited them for clarity. We drafted additional items as needed to ensure that each organizational domain and recovery dimension was represented in the item bank. That is, at least one item captured every recovery dimension within each organizational domain. Some items touched on more than one recovery dimension or organizational domain. (Details of the mapping exercise are available in the online supplement.)

Item review by recovery experts.

Three external recovery experts reviewed and independently rated each of the 51 items in the item bank. Experts had decades of recovery experience in mental health service provision, advocacy, and research. Each item was rated on 4-point scales for relevance to recovery (1, not relevant; 2, somewhat relevant; 3, quite relevant; and 4, highly relevant) and clarity (1, not clear; 2, somewhat clear; 3, quite clear; and 4, extremely clear). Any item with an average score below 3 on relevance or clarity was eliminated, and the remaining items were further refined on the basis of expert feedback.
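The expert screening rule described above can be sketched in a few lines of Python. This is an illustration only: the function name, the data layout, and the sample ratings are hypothetical and are not drawn from the study data.

```python
from statistics import mean

def screen_items(ratings, threshold=3.0):
    """Return the ids of items whose mean relevance AND mean clarity
    ratings are both at or above the threshold (default 3 on a 4-point scale).

    ratings: {item_id: {"relevance": [...], "clarity": [...]}}
    (hypothetical layout, one rating per expert in each list)
    """
    kept = []
    for item_id, scores in ratings.items():
        # An item is eliminated if either average falls below the threshold.
        if mean(scores["relevance"]) >= threshold and mean(scores["clarity"]) >= threshold:
            kept.append(item_id)
    return kept

# Made-up ratings from three experts for three items.
example_ratings = {
    "item_a": {"relevance": [4, 3, 4], "clarity": [3, 3, 4]},  # both means >= 3, kept
    "item_b": {"relevance": [2, 3, 3], "clarity": [4, 4, 4]},  # mean relevance < 3, dropped
    "item_c": {"relevance": [4, 4, 4], "clarity": [3, 2, 3]},  # mean clarity < 3, dropped
}

kept_items = screen_items(example_ratings)
```

In this sketch an item must pass on both scales to be kept, which mirrors the "relevance or clarity" elimination rule stated above.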

Survey development and cognitive testing.

The resulting 35 items were then organized into a survey. Response options for 31 items were provided on a 5-point Likert scale, with 1 indicating that the element was not at all present and 5 indicating that the element was extremely present in the program, and the response options for the remaining four items were yes, no, and don’t know. We added several questions that captured respondent and program characteristics (e.g., tenure, role, and discipline). Next, we pilot-tested the survey with six mental health staff—a peer support specialist, a psychologist, a social worker, a nurse, and two program managers—using semistructured interviews to elicit feedback on survey length, flow, organization, and item and response comprehension. Items were refined once more before survey administration.

Study Setting, Recruitment, and Survey Administration

We administered the survey in U.S. Department of Veterans Affairs (VA) Psychosocial Rehabilitation and Recovery Centers (PRRCs). In 2008, the VA adopted SAMHSA’s definition of recovery as part of the transformation to a recovery-oriented system of mental health care (36). All existing mental health day treatment and hospital programs were redesigned as PRRCs, in accordance with changes in VA policy and procedures. VA PRRC national policy enumerated the mission, vision, and values expected in these programs and prescribed program structure, services, and characteristics to ensure alignment with recovery principles (37). PRRCs were the ideal setting to develop and test our recovery climate and culture instrument within a national mental health system because of the centers’ recovery-informed policies and expectations. There were 104 PRRCs at the time this study was conducted, each with between two and 14 multidisciplinary staff.

The survey was programmed into a Web-based platform (Enterprise Feedback Management, Verint Systems, Inc., Melville, New York), and approvals were obtained from the VA Boston Healthcare System and Edith Nourse Rogers Memorial Veterans Hospital Institutional Review Board, unions, and survey compliance offices. The elements of informed consent were explained on the first screen of the electronic survey, and respondents were required to indicate their consent to participate.

PRRC staff were invited to complete the survey by e-mail. We obtained the e-mail addresses of PRRC staff from the VA Office of Mental Health and Suicide Prevention, which was a collaborator on the study. We used a three-step recruitment approach comprising a series of e-mail invitations and reminders (38). Responses were collected over a 3-week period in May 2017. (Details about the recruitment process are available in the online supplement.)

Data Analyses

We examined descriptive statistics for each item and then conducted exploratory factor analysis (EFA) to examine the factor structure of the data. We calculated the eigenvalues of the sample’s polychoric correlation matrix and conducted the EFA followed by geomin rotation. We determined the number of factors by identifying the number of eigenvalues greater than 1 and assessed factor loading patterns for each item. To ensure a clear factor loading structure, we kept items with a factor loading of greater than 0.40 on one of the factors (which indicates that 16% of the item variance could be explained by this factor and is considered a strong effect between the item and factor) and a factor loading of less than 0.3 on other factors. In addition to examining factor loading patterns, we considered the meaning of the factors, number of items, and each factor’s content coverage to determine the final factors. (Details on the factor loadings are available in the online supplement.)
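The two decision rules in this paragraph (counting eigenvalues greater than 1 and screening items by their loading pattern) can be illustrated with a minimal Python sketch. It is not the authors' analysis code: the function names and sample values are hypothetical, the eigenvalues and rotated loadings are assumed to have been computed elsewhere, and the use of absolute loadings is an assumption, since the text does not say how negative loadings were handled.

```python
def count_factors(eigenvalues, cutoff=1.0):
    """Number of factors suggested by the eigenvalue-greater-than-1 rule."""
    return sum(1 for ev in eigenvalues if ev > cutoff)

def retain_item(loadings, primary=0.40, cross=0.30):
    """Keep an item if its largest loading exceeds `primary` and every
    other loading is below `cross`.

    Absolute values are used here as an assumption of this sketch.
    """
    magnitudes = sorted((abs(x) for x in loadings), reverse=True)
    return magnitudes[0] > primary and all(m < cross for m in magnitudes[1:])

# Made-up eigenvalues: three of them exceed 1.
n_factors = count_factors([3.2, 1.4, 1.01, 0.9, 0.5])

# Made-up loading rows (one row per item, one value per factor).
clean_item = retain_item([0.62, 0.10, -0.05])   # one strong loading, no cross-loading
cross_loaded = retain_item([0.55, 0.35, 0.02])  # second loading is not below 0.30
weak_item = retain_item([0.38, 0.10, 0.00])     # no loading above 0.40

# A loading of 0.40 corresponds to 0.40 squared, i.e. 16% of item variance.
variance_explained = round(0.40 ** 2, 2)
```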

For each item within each factor, we calculated the corrected item-total correlation, using the threshold that greater than 0.4 is acceptable. We also calculated the Cronbach’s alpha if the item was deleted, and any value lower than the Cronbach’s alpha with all items was considered acceptable. We examined internal consistencies for each factor by calculating the Cronbach’s alpha and used the following criteria for determining acceptability: α>0.90, excellent; α=0.81‒0.90, good; α=0.71‒0.80, acceptable; α=0.61‒0.70, questionable; α=0.50‒0.60, poor; and α<0.50, unacceptable (39). We calculated the mean and standard deviation of each factor and correlations among the factors.
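For readers less familiar with these statistics, the following Python sketch shows one standard way to compute Cronbach's alpha, the alpha if an item is deleted, and the corrected item-total correlation. The function names and the toy data are hypothetical, and the handling of values that fall between the published bands (for example, between 0.80 and 0.81) is an assumption of the sketch.

```python
from statistics import mean, variance

def cronbach_alpha(rows):
    """rows: list of respondents, each a list of item scores (no missing data)."""
    k = len(rows[0])
    item_vars = [variance([r[j] for r in rows]) for j in range(k)]
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

def alpha_if_deleted(rows, j):
    """Cronbach's alpha recomputed with item j removed."""
    return cronbach_alpha([r[:j] + r[j + 1:] for r in rows])

def pearson(x, y):
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den

def corrected_item_total(rows, j):
    """Correlation of item j with the sum of the remaining items."""
    item = [r[j] for r in rows]
    rest = [sum(r) - r[j] for r in rows]
    return pearson(item, rest)

def alpha_label(alpha):
    """Map alpha to the bands listed above; boundary handling is assumed."""
    if alpha > 0.90:
        return "excellent"
    if alpha > 0.80:
        return "good"
    if alpha > 0.70:
        return "acceptable"
    if alpha > 0.60:
        return "questionable"
    if alpha >= 0.50:
        return "poor"
    return "unacceptable"

# Toy data: four respondents answering three perfectly consistent items.
toy = [[1, 1, 1], [2, 2, 2], [3, 3, 3], [4, 4, 4]]
toy_alpha = cronbach_alpha(toy)
toy_item_total = corrected_item_total(toy, 0)
```

With perfectly consistent toy items, both alpha and the corrected item-total correlation reach their maximum of 1; real survey data would fall below that.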

Additionally, we explored differences in overall score by discipline and supervisory status, accounting for site-level clustering and unbalanced groups.

Instrument Validation With Site Visits

Site selection.

We assessed the extent of recovery-promoting climate and culture for programs with four or more survey respondents. We first checked the intraclass correlation coefficient (ICC1) to ensure that aggregating staff responses to the site level was appropriate. The ICC1 was 0.35, well above the acceptable threshold for aggregation (ICC1>0.10 [40]). Next, we ranked these programs by average score across all factors (average overall score). We invited the two PRRC programs ranked highest and the two ranked lowest to participate in site visits.
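The aggregation check and site ranking described above can be sketched as follows. The text does not state which ICC1 estimator was used; this sketch assumes the common one-way ANOVA form, ICC1 = (MSB − MSW) / (MSB + (k − 1)·MSW), with an adjusted average group size k for unequal site sizes. Site labels, scores, and function names are hypothetical.

```python
from statistics import mean

def icc1(groups):
    """One-way ANOVA ICC(1). groups: {site_id: [respondent scores]}."""
    sizes = [len(v) for v in groups.values()]
    n_total = sum(sizes)
    g = len(groups)
    grand = sum(sum(v) for v in groups.values()) / n_total
    ss_between = sum(len(v) * (mean(v) - grand) ** 2 for v in groups.values())
    ss_within = sum((x - mean(v)) ** 2 for v in groups.values() for x in v)
    ms_between = ss_between / (g - 1)
    ms_within = ss_within / (n_total - g)
    # Adjusted average group size; equals the common size when sites are balanced.
    k = (n_total - sum(n * n for n in sizes) / n_total) / (g - 1)
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)

def rank_sites(groups):
    """Site ids ordered from highest to lowest mean score."""
    return sorted(groups, key=lambda s: mean(groups[s]), reverse=True)

# Made-up respondent-level overall scores for four sites.
scores = {
    "A": [4, 4, 5, 5],
    "B": [2, 2, 3, 3],
    "C": [3, 4, 3, 4],
    "D": [5, 5],  # fewer than four respondents, so excluded below
}

# Keep only sites with four or more respondents, as in the text.
eligible = {site: vals for site, vals in scores.items() if len(vals) >= 4}

site_icc = icc1(eligible)
ranking = rank_sites(eligible)
```

In this toy example the ICC1 is well above 0.10, so site-level means would be treated as meaningful, and the ranking would place site A highest and site B lowest.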

Site visits.

Two-person teams blinded to the PRRC rankings conducted 1.5-day site visits that included semistructured interviews with staff and program participants, group and meeting observations, and a facility tour. Site visitors independently rated each PRRC on the extent to which recovery principles were present in the organizational domains captured in the instrument and then discussed their ratings and reached consensus. Site visit consensus ratings were compared with average overall survey score rankings to determine whether the survey accurately discriminated between the sites rated highest and lowest by the site visitors (41).

Results

Sample Characteristics

We sent survey links to 785 potential respondents and received 280 survey responses (36% response rate). Thirty-two responses did not include data for any instrument items and were excluded; the analytical sample contained 248 responses.

Table 1 reports sample characteristics. The majority of respondents were female (65%) and nonveterans (70%); 80% were not supervisors. The most frequent disciplines were social work (33%), peer specialist (17%), and psychology (17%). The percentage of staff in each discipline differed by no more than 3 percentage points from the distribution of disciplines for all PRRC staff, as reported in the staff directory.

Distribution of Item Responses

There were 228 to 244 responses for each item, and item means ranged from 2.62 (0.57 for dichotomous items) to 4.58 (0.63 for dichotomous items) with standard deviations between 0.49 and 1.48 (see online supplement). The percentage of missing responses for each item ranged from 2% to 8%. We did not find any clear pattern of missing or skipped items.

Exploratory Factor Analysis

Eigenvalues extracted from the polychoric correlation matrix of the 35 items revealed that seven eigenvalues were greater than 1, and the seven-factor solution explained 76% of the variance. The seven factors stemming from the EFA aligned well with the seven organizational domains in our conceptual framework. (The factor loadings are available in the online supplement.) On the basis of that evidence, we adopted the seven-factor model as the final model.

Seven of the 35 items were not retained in the final instrument because they did not meet the criteria for retention. The resulting instrument contained 28 items (Table 2).

Internal Consistency

The internal consistency reliability (Cronbach’s α) of the final 28 items was 0.81 overall, and the reliability of the individual subscales ranged from 0.84 to 0.88 (Table 3). Cronbach’s alpha values for all subscales were above 0.8 and were considered good. Correlation among the subscales ranged from 0.16 to 0.61. No correlations were greater than 0.9, which indicated the unique content of each factor.

Sensitivity Analyses

We found no difference in overall score by discipline; however, we found a small, yet statistically significant, absolute difference in overall score by supervisory status. Nonsupervisors had an average overall score of 3.73±0.05 on a 5-point scale, compared with 4.03±0.10 for supervisors (p=0.012).

Site Visit Validation

Site visitors correctly identified the two sites ranked highest and the two sites ranked lowest. Further information about the site visit component of the study is reported elsewhere (41).

Discussion

In this study, we developed and validated a psychometrically sound instrument to measure recovery climate and culture in mental health programs. To our knowledge, this is the first instrument to use both a comprehensive framework guided by organizational theory on climate and culture and an empirically based conceptualization of the multiple dimensions of mental health recovery. It measures key facets of climate and culture, such as leadership and rewards, which can be targeted for intervention to achieve recovery-oriented service delivery.

The two individual items with the highest average response were items 3 (“To what extent do your coworkers in the PRRC expect each other to deliver services in a way that is sensitive to each PRRC participant’s ethnic background, race, sexual orientation, religious beliefs, and gender?” [item 6 in the original 35]) and 6 (“How important is it to you personally that the PRRC continues to work with PRRC participants even when they refuse certain other treatments?” [item 10]). This suggests that these elements have been the easiest for PRRC programs to adopt when seeking a recovery orientation. The content in these items aligns with the recovery dimensions of cultural sensitivity, empowerment, and self-direction set forth by SAMHSA (21). They also map to organizational domains of staff expectations (item 3) and staff values (item 6).

On the other hand, the two items with the lowest average response were items 20 (“To what extent are PRRC staff rewarded for promoting a holistic approach in the PRRC, including attention to health, home, purpose, and community?” [item 27]) and 21 (“To what extent are PRRC staff rewarded for promoting cultural sensitivity within the PRRC?” [item 28]). These items reside in the organizational domain of staff rewards, suggesting that rewards may be an underutilized area of development in the programs we studied. Also of note, the item about rewarding staff for promoting cultural sensitivity (item 21) was rated low while the item about staff expectations for being culturally sensitive and inclusive (item 3) was rated high. This dichotomy in the responses for these two items indicates that in the PRRCs we studied, staff expected each other to demonstrate cultural sensitivity, but reward systems for supporting and encouraging cultural sensitivity were not prominent.

The example of misalignment between staff expectations for cultural sensitivity and program reward structures that promote cultural sensitivity demonstrates how this tool, based on organizational theory of climate and culture, could be used in helping organizations develop a recovery orientation. Users of the instrument could assess program scores in each organizational domain and determine the areas in which the program performs well and areas that warrant improvement. These data could inform the program about how to target change efforts. In the example highlighted above, PRRCs could revamp staff reward and recognition practices to better align with recovery principles, such as by publicly recognizing staff for their contributions to recovery-oriented service, instituting achievement awards for promoting recovery principles, or encouraging medical center leadership to send a thank you note to staff for promoting elements of recovery in practice (42).

The impetus for developing this instrument was to advance research on the relationships between climate and culture and recovery-oriented outcomes. As the preceding discussion illustrates, the instrument also has the potential to identify domains of organizational climate and culture that leaders can use to transform their organizations into recovery-oriented care systems. For that reason, this instrument could prove useful in VA mental health settings other than PRRCs, such as mental health outpatient programs, behavioral health interdisciplinary program teams, and residential treatment programs.

This study had several limitations. First, the findings are not directly generalizable to other mental health settings because we conducted this study only in the VA. However, testing the instrument in the VA afforded access to a national sample of program staff operating under one set of policies and procedures, which would be difficult to achieve in a national sample of mental health programs. Community mental health centers, for example, are heavily influenced by state-specific mental health policies that would pose challenges to testing the instrument. Furthermore, many of the instrument’s items were adapted from instruments developed outside the VA. For these reasons, it is likely our findings will translate to non-VA settings.

Second, the study had a 36% response rate, so responses may not be representative of the entire PRRC staff population. However, such a response rate is typical of other studies (43–45). Third, we eliminated seven items to obtain unambiguous factor loadings and ensure parsimonious scales in the final instrument. Although the content in these items was determined to be important in the initial phases of instrument development, these items did not perform well in psychometric analysis. Future users of the instrument may consider capturing this content using another data collection approach, such as semistructured interviews. Fourth, we did not conduct a confirmatory factor analysis (CFA) following the EFA because we lacked sufficient respondents to split the sample. Without conducting a CFA, we cannot confirm that the factor structure identified in the EFA was correct, and we cannot determine whether each factor was unidimensional. Finally, this study focused on the content validity and internal consistency of the instrument. Future research should assess its construct validity using external criterion variables, including personal recovery outcomes.

Conclusions

In this study, we developed a psychometrically tested and validated instrument to measure recovery-promoting climate and culture in mental health programs. The instrument is a necessary first step for conducting research on the extent to which recovery climate and culture drive recovery-oriented service delivery and individual-level outcomes. Recovery climate and culture measures should be incorporated into evaluations of the recovery orientation of mental health programs, and this instrument offers a tool for doing so.

Acknowledgments

The authors thank Allie Silverman and Jacquelyn Pendergast for their research support and Laurel Radwin and Michael Shwartz for their guidance and input on survey development processes.

The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the U.S. Department of Veterans Affairs or the U.S. government.