Although the concept of the multidisciplinary team is not new to health care, recent emphasis on quality, accessibility, and efficiency in the delivery of health care services has increased interest in interdisciplinary collaboration. In hospital-based psychiatry the multidisciplinary mental health team has long provided the foundation for comprehensive care, integrating multiple specialized treatment components within a stable and therapeutic treatment milieu. The multidisciplinary mental health team has also been a core feature of partial hospital and day treatment programs and of many outpatient public mental health settings, especially in more service-intensive models that address the care of patients who have severe and persistent mental illness.

To further standardize the team self-assessment process in this program, we developed an instrument for participants to use in structured self-assessment of team functioning. We have successfully used this instrument to facilitate teams' immediate reflection on their experience of working together in the context of treatment planning. However, we are only beginning to study the instrument's formal properties and have not yet reported any findings in connection with its use in a training setting.

In this article we present some preliminary data drawn from structured team self-assessments conducted before and after team training. We used the statewide model as it was implemented at one local hospital. First, we briefly review the structure of the training program and summarize the local implementation process. We then discuss the structured self-assessment instrument and present data consistent with the view that the training program helped the teams improve their performance.

Methods

Statewide training program

The training program mentioned above (12), which was a collaborative project of the office of mental health of the Illinois Department of Human Services and the department of psychiatry of the University of Illinois at Chicago, involved the use of simulated treatment planning sessions in response to simulated videotaped patient interviews; postsession self-evaluation based on a structured rating scale designed for that purpose; and videotaping of the session for team review with consultation from senior professional staff.

The program brought together representative multidisciplinary mental health teams from ten state-operated hospitals across Illinois. The teams included representatives from the disciplines of psychiatry, nursing, psychology, social work, and occupational and activity therapy. Outpatient case managers from community mental health centers in the hospitals' networks also participated. Working from a model that emphasizes learning from experience as well as team and organizational learning, the training program provided opportunities for teams to engage in the work of treatment planning and then reflect on the sessions and conduct a self-evaluation process. Teams that participated in the program engaged in four such simulated treatment planning sessions, each followed by a structured self-assessment of the team's functioning, and plenary presentations and discussions of both the clinical material and the team members' experience of the team process during the treatment planning session.

Didactic components of the program included lectures on group dynamics, team building, aspects of psychiatric treatment planning, and regulatory and policy requirements. Additional group exercises were designed primarily to stimulate discussion of treatment planning, teamwork, and multidisciplinary team functioning. However, the central feature of the training was participation in the simulated treatment planning exercises with team members' reflection on and evaluation of the team's work. In the statewide program, the last of four simulated treatment planning sessions was videotaped. The videotapes were then reviewed by the respective teams with consultative input. A strong emphasis was placed on the leadership of the psychiatrist in the multidisciplinary team setting. As part of the statewide program, participating team members, assisted by internal, hospital-based consultants, developed plans for implementing the training at the local hospital level.

Local hospital implementation

In this article we report on an extension of the project, which was part of the leadership's implementation plan at a local suburban state hospital with about 500 beds and both civil and forensic treatment programs. In terms of patient population, the skill levels of staff, and the size and composition of units, this hospital is largely representative of programs throughout the state system. Among the state hospitals, it is probably the site with the broadest representation of the range of acute and longer-term care programs available. Thus many aspects of this setting are probably generalizable to other sites in the system.

In December 1999, a total of 102 mental health professionals from 25 multidisciplinary mental health care teams on 12 inpatient units each completed an initial baseline assessment by using the Scale for Leadership Assessment and Team Evaluation (SLATE). Outpatient case managers from community mental health centers in the hospital's network, who regularly participate in treatment planning meetings, also completed the assessment.

The baseline structured self-assessments were completed on the basis of the teams' recent experiences of working together in treatment planning meetings. This assessment activity was organized by hospital staff members who had participated in the statewide training program and who themselves represented the disciplines of psychiatry, psychology, nursing, social work, and occupational and activity therapy.

The results of the baseline structured self-assessments were tabulated, and feedback was given to the teams in January 2000. In completing the SLATE assessments, individual participants were assured anonymity, and, afterward, teams were provided with aggregate rather than individual data. In March 2000 two hospitalwide presentations were made as part of the implementation plan. The first was an in-service presentation on treatment planning and team building. The second was a grand rounds presentation that provided an overview of the statewide team training program, including information about the team self-assessment process in the context of simulated treatment planning sessions, the use of the SLATE for structured self-assessment, and videotaping of the treatment planning sessions with consultation as additional sources of information for team self-assessment.

During the six-month period from January through June 2000, all participating treatment teams were videotaped while they conducted real treatment planning sessions. They were assured that the tapes would be erased after the training exercise. In each team, the videotapes were reviewed with consultation from senior professional staff.

At the conclusion of the videotaped treatment planning sessions, the SLATE was again completed by the multidisciplinary treatment teams; 78 participants completed the posttraining assessment. The decrease in response rate may have been due partly to staff attrition or vacations or to the fact that participation was ultimately voluntary, given that completed rating scales were not identified by name. The results were tabulated and compared with the baseline team self-assessments, and the aggregate findings were shared with the participants.

Measuring team self-assessment and change

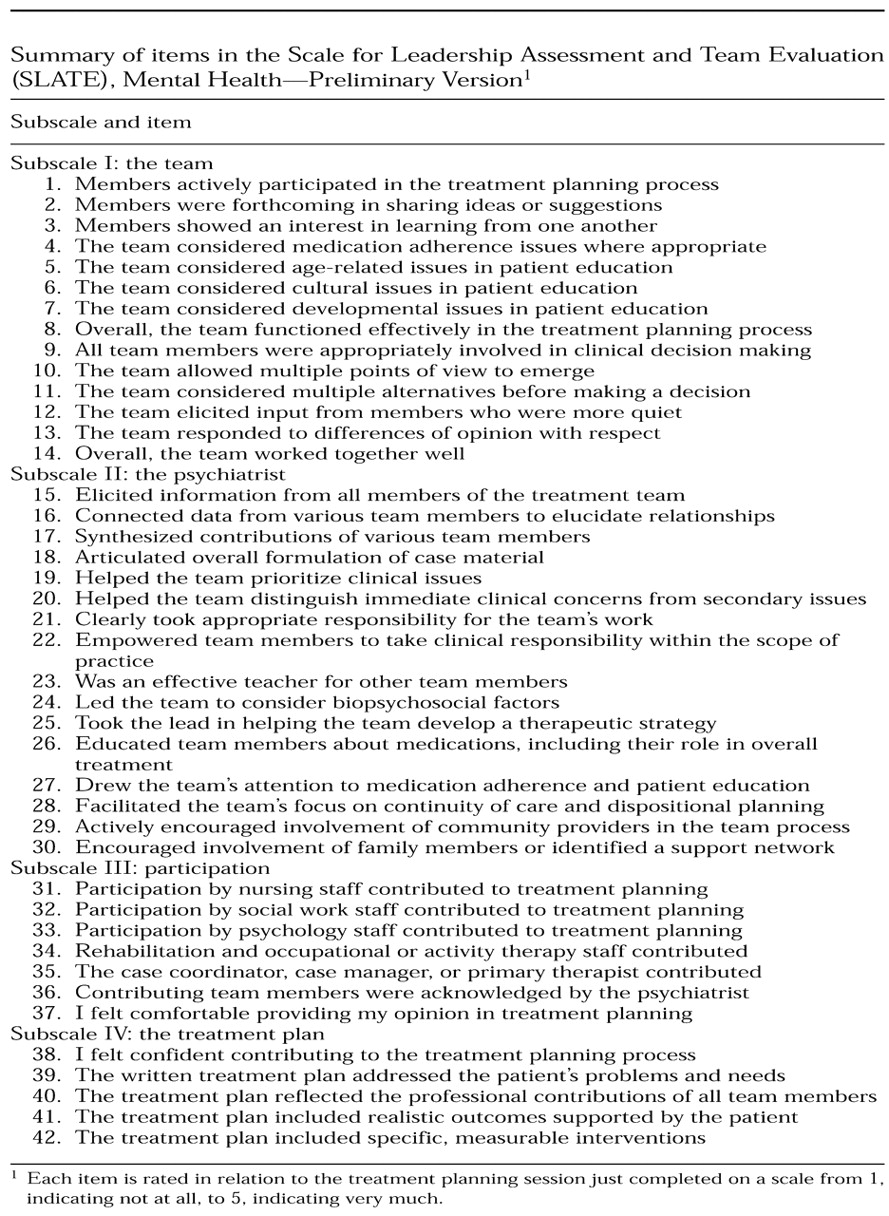

The SLATE, which is still under development, currently has 42 items. Each item assesses the extent to which the participant believes that a particular goal of team functioning in the treatment planning process has been realized, rated on a scale from 1, indicating not at all, to 5, indicating very much. The SLATE is explicitly biased toward the assessment of the psychiatrist's leadership of the team, informed by the assumption that leadership by a psychiatrist both influences and reflects the quality of the team's interdisciplinary functioning. Thus the largest of the SLATE's four subscales focuses on the psychiatrist and includes 16 items. Other subscales focus on the team as a whole, member participation, and the treatment plan. The subscales and individual items are summarized in Table 1. The full SLATE in its current preliminary version is available from the first author on request.

Working from the assumption that the psychiatrist, having overall responsibility for the multidisciplinary team's work, is necessarily in a position of leadership, we developed the SLATE items primarily as an attempt to specifically describe the competencies required by a psychiatrist to lead a team and facilitate interdisciplinary collaboration. In addition, the self-assessment program and the SLATE items reflect a constellation of assumptions about leadership and team functioning that can be summarized as two concepts. First, the team needs and benefits from leadership but also constrains leadership. Second, assessment of team leadership requires the assessment of the effects of that leadership on team functioning, including the degree to which leadership emerges and is distributed across the team.

The specific items in the SLATE reflect these assumptions and are related to both process and content. Examples of behaviors emphasized for psychiatrists include synthesizing contributions of various team members and helping the team prioritize clinical issues. Examples of behaviors of team members include actively participating in the treatment planning process, being forthcoming in the sharing of ideas or making suggestions, showing an interest in learning from one another, eliciting input from more quiet members, respectfully responding to differences of opinion, and displaying consideration of various treatment-related issues.

Data collection and analysis

Because this program was originally implemented not as a research project but as a new training exercise in team self-assessment, and in an effort to encourage frank responses by participants, individual and team SLATE scores were not tracked. In fact, after the responses had been tabulated and collective feedback had been provided to the group as a whole, the individual responses were destroyed. Hence our analyses were confined to the study of changes in scores on the SLATE items over time across the whole group, aggregated by subscale and for the scale as a whole. We analyzed changes over the course of the program in overall scores and scores on subscale items by using the Mann-Whitney U test for two independent samples, a nonparametric alternative to the t test for equality of means (13).
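For readers unfamiliar with the test, the following is a minimal sketch of a Mann-Whitney U comparison with a tie-corrected normal approximation. It is not the authors' analysis code, which the article does not describe; the function names and the eight ratings per group are hypothetical and serve only to show the mechanics on 1-to-5 ratings.

```python
from math import sqrt, erfc


def midranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U, Z, two-sided p) for two independent samples.

    U is the smaller of the two U statistics, so Z is zero or negative.
    The p value uses the normal approximation with a correction for ties.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)

    # Tie correction: sum of (t^3 - t) over each group of tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:  # every rating identical, so no evidence of a shift
        return u, 0.0, 1.0

    z = (u - n1 * n2 / 2) / sqrt(variance)
    p = erfc(abs(z) / sqrt(2))  # two-sided tail of the standard normal
    return u, z, p


# Hypothetical ratings, NOT data from the study.
baseline = [3, 4, 3, 2, 4, 3, 5, 3]
posttraining = [4, 5, 5, 4, 4, 5, 3, 5]
u, z, p = mann_whitney_u(baseline, posttraining)
print(f"U={u}, Z={z:.2f}, p={p:.3f}")
```

Because ratings on a five-point scale produce many ties, the tie correction matters here; omitting it would overstate the variance and understate the magnitude of Z.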

Results

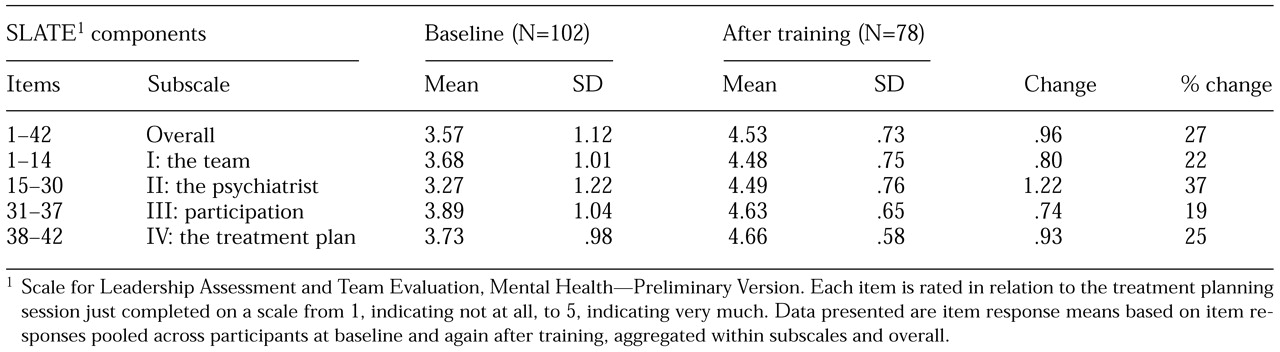

Table 2 lists the mean±SD scores on the SLATE items at baseline and at posttraining follow-up for the instrument overall and for the four subscales. The overall mean score increased from 3.57 to 4.53, a difference of .96, or 27 percent, with significantly higher ratings at the end of the training program (U=3,397,429, Z=-40.1, p<.001).
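The 27 percent figure is the difference between the two reported means expressed relative to the baseline mean. A short check of that arithmetic, using only the values given above (the helper name `percent_change` is ours):

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


baseline_mean = 3.57      # overall SLATE mean at baseline
posttraining_mean = 4.53  # overall SLATE mean after training

difference = posttraining_mean - baseline_mean
relative = percent_change(baseline_mean, posttraining_mean)
print(f"difference = {difference:.2f}, relative change = {relative:.0f} percent")
# prints: difference = 0.96, relative change = 27 percent
```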

The mean scores for all four subscale items increased over the course of the training. The mean score for the team subscale increased by 22 percent (U=418,854, Z=-20.9, p<.001); for the psychiatrist subscale by 37 percent (U=418,501, Z=-27.9, p<.001); for the participation subscale by 19 percent (U=110,574.5, Z=-14.1, p<.001); and for the treatment plan subscale by 25 percent (U=43,487, Z=-15.1, p<.001). For the individual SLATE items, which are not separated out in the analyses in Table 2, all but one increased in absolute value; one item (item 14 in Table 1) showed no change.

Consultants and study participants found that leadership themes were salient in the self-assessments, which was consistent with our observation that the increase in the mean score for subscale items appeared to be greatest for items pertaining to the psychiatrist. It was noted, for example, that the leadership qualities of psychiatrists varied widely across teams. Some psychiatrists led their teams as adept facilitators, whereas others delegated the facilitation role and adopted a more didactic stance—synthesizing, formulating, and teaching in a biopsychosocial mode.

Some psychiatrists seemed inclined not to take charge in the team setting. One team with such a psychiatrist had adapted to this style quite effectively: leadership of the group changed hands seamlessly, rotating among several members—including the psychiatrist—as the team's work was efficiently accomplished. In another team, however, a nonpsychiatrist attempted to assume the leadership role and was rejected by the other team members. In a third group, it was clear that a team member other than the psychiatrist was the acknowledged leader. The latter two groups appeared to function less efficiently, and clinical issues were often inadequately addressed.

In some cases, psychiatrists were given specific feedback beyond the replay of the videotape and the debriefing. Thus the treatment planning exercises also served a team diagnostic function, supplementing the structured self-assessment process with consultative feedback and impressions about areas of team functioning that needed improvement.

Discussion and conclusions

In this article we have sought to do several things. First, we have summarized briefly a training program for multidisciplinary mental health care teams that was developed in the context of a public multihospital system. The program aimed to provide multidisciplinary mental health teams with a procedural framework for engaging in self-assessment of team functioning by reflecting on and evaluating the team's own treatment planning process. The model and the measurement instrument—the SLATE—were both intentionally weighted toward scrutiny of the psychiatrist's leadership so that, although team functioning as a whole was clearly evaluated, a greater number of discipline-specific and competency-based items were aimed at evaluating the psychiatrist than at evaluating the other members of the team. Nevertheless, all the psychiatrist-directed items were constructed as relational inquiries that assessed leadership in the team context. A majority of the SLATE items addressed other aspects of team functioning.

Second, we have described the process by which the systemwide initiative was implemented at one local hospital. Although the basic model and method of measurement remained the same, transferring them to this hospital involved some modifications. In particular, team self-assessments were all obtained in the context of actual treatment planning meetings. In contrast, the self-assessments of teams that participated in the original training, which brought many teams together at one central location, made use of simulated treatment planning sessions based on videotaped simulated patient interviews. At the local site, the training intervention essentially consisted of exposure of the teams to self-assessment, feedback of aggregate self-assessment data to participants, two hospitalwide didactic presentations, the ongoing work of treatment planning in the context of the initiative, videotaping of a treatment planning session, and review of the videotape with consultative input.

Third, we have summarized the current version of our measurement tool, the SLATE, which has been practically useful. However, further study is required to establish its psychometric properties.

Fourth, we have presented data from the local hospital project based on teams' completion of the SLATE at baseline and after the training. The data presented here have important limitations that must be noted. The training program was not originally designed as a research project, and collection and presentation of data represented an exercise in program evaluation for the benefit of both the hospital leadership and the participants.

In exposing the participants to a new method of evaluation, we were careful to be sensitive to confidentiality matters. As a result, the initial individual SLATE ratings were destroyed after the data were tabulated. Given that there were no identifiers on the completed self-assessment forms, changes over time could not be tracked either by individual participant or by specific team. In addition, this data set could not be used to study the properties of the SLATE as a scale, because the relationships between items for individual participants were not available for study.

Despite these limitations, we were able to study changes in the magnitude of the scores for individual items, aggregated for the overall SLATE and for each of the subscales, by comparing baseline scores with those obtained at the end of the training. Such a comparison, although unsuitable for the study of the properties of the scale, allowed us to observe changes in self-assessment across the group of participants as a whole after the training. Using this method, we found significant increases in scores for the SLATE overall and for each of the four subscales.

Although these findings are compatible with our intention to induce the multidisciplinary mental health teams to examine and improve their functioning in each of these areas, conclusions beyond this general compatibility must be limited. Experiences with statewide training in clinical case simulations (12) and at the hospital level with real treatment planning sessions as reported here have led a majority of the participating consultants and trainees to believe that the model is useful.

However, whether the improvement in team self-assessment was related to actual improvements or to the teams' desire to appear more functional could not be determined definitively. Nor has a clear relationship been established between team self-assessment ratings on the SLATE and actual performance in terms of clinical outcome variables. Finally, the teams might have improved under other training models, and any such improvement could be a function of the teams' being studied or observed—the Hawthorne effect. However, these issues and issues pertaining to the reliability and validity of the SLATE can be studied further in the context of this training model. We are currently engaged in such studies.

The training program described here can be viewed in several ways, including as a practical application of experiential learning about groups; as an extension of clinical education models, usually applied on an individual and discipline- or profession-specific basis, to team practice; as a technique for competency assessment; and as an attempt to measure team performance.

As a practical application of experiential learning about groups, the model can be seen as a learning laboratory that creates a setting in which individuals can examine their own and others' behavior, make observations, discuss their observations with other participants, and attempt to draw conclusions about the work of the group. As such, the model is informed by, and has affinities with, experiential learning approaches such as the Tavistock model for the study of group relations, leadership, and authority (14).

As an extension of clinical education models, our model draws on the concepts of the case method and learning-by-doing (15,16), uses a recording device to study clinical interventions and clinical decision making, and draws on the assistance of a clinical supervisor or consultant. This model attempts not only to build and improve the clinical skills of an individual practitioner but also to transfer them to the level of the multidisciplinary team. We are currently developing a version of the SLATE for use by an evaluator outside the team, but we have not yet attempted to use the instrument in that way.

It is clear that, as a technique for competency assessment, the SLATE is biased toward evaluation of the psychiatrist. In fact, the scale was constructed from the starting point of identifying psychiatrists' competencies in the areas of team leadership and multidisciplinary collaboration. Nonetheless, the training program involves the team as a whole, and a majority of the SLATE items address team issues other than psychiatrist leadership. The model provides a framework for conceptualizing competency in the context of the multidisciplinary team as a whole (17).

At least two major areas of the SLATE require further work if the instrument is to be used to assess team performance. First, additional study of the formal properties of the SLATE as a measurement instrument is desirable. Currently, we are collecting and analyzing data that will likely allow us to address issues of reliability, validity, and the scale's internal structure—for example, whether factor analysis supports the current subscale structure. Second, in assessing actual team performance, it will be important to try to link self-assessment to clinical outcomes. We are interested in studying this issue further and would like to be able to show that improved self-assessment is reflected in better clinical outcomes for patients.

In reviewing the literature, we have found few examples of comparable approaches to the assessment of team functioning and even fewer that are specific to mental health. We are aware of at least one study of multidisciplinary mental health teams that pays attention to the modeling of processes of collaboration (11). This approach shares with ours an interest in the team process as a means of promoting individual and team learning and finding areas of potential improvement in team functioning.

With regard to measurement instruments, we have reviewed one team assessment scale that is in some ways similar to the SLATE. The Team Checkup (18), developed by a consulting group for a potentially broad range of organizational applications, is not specific to mental health or to health care generally, but it does address issues similar to those addressed in the SLATE. In addition, it is our understanding that the Team Checkup has been used in some health care settings. As with the SLATE, the Team Checkup has been found to have practical value, especially in the contexts of organizational consultation and team building, but its psychometric properties have not been studied.

It seems likely that interest in the functioning of teams—in business, medicine, and multidisciplinary mental health care—will remain high. In an atmosphere that demands accessible, consumer-friendly, high-quality, and efficient services, methods for improving team performance that can be shown to be related to clinical outcomes will become increasingly attractive. Although mental health care has a strong tradition of multidisciplinary service delivery, our review of the literature and of work in the area suggests that there is considerable room for improvement in the processes by which representatives of multiple disciplines work collaboratively. In the study we have reported here, we have drawn on a range of established methods to create a framework in which teams can examine such processes. Our preliminary findings suggest that participating teams benefit from such self-assessment, but the link to improved clinical outcomes remains to be established.