In 2004, California voters passed Proposition 63, which was enacted into law as the Mental Health Services Act (MHSA) in January 2005 (1). The law levies a 1% tax on adjusted gross incomes over $1 million; the revenue is used to provide new recovery-oriented, client-centered mental health services for mental health consumers throughout the 58 county departments of mental health in California. This tax resulted in an 18% increase in funding in fiscal year 2008–2009 over the level of funding in fiscal year 2003–2004 (2).

A core MHSA program is the full-service partnership program. This program is a modified assertive community treatment program based on an earlier recovery-oriented program in California known by the number of the assembly bill that created it, AB2034. AB2034 programs provided intensive, comprehensive public mental health services to individuals with mental illness who were homeless or in contact with the criminal justice system. Like AB2034 programs, the full-service partnership program provides flexible funding, intensive case management, and services such as housing, employment, education, peer support, co-occurring disorder treatment, and outreach (2).

Participation in the full-service partnership program is voluntary; individuals must agree to enter the program. To be accepted into the program, clients must be unserved or underserved and, depending on age, have at least one of the following characteristics: homeless or at risk of homelessness, involved or at risk of involvement with the criminal justice system, frequently hospitalized for mental health problems, or frequent user of emergency department services (3). However, because the full-service partnership program does not have the capacity to serve all individuals who meet the entry criteria (full-service partnership participants make up a small percentage of participants in the public mental health system), a significant proportion of individuals whose condition is similar to that of full-service partnership participants remain outside of the full-service partnership program (2).

The services available to all individuals in the public mental health system and the additional or enhanced services available to those in the full-service partnership program are shown in the box on this page. Services available under the state's Medicaid program often serve as the foundation for full-service partnership care plans; however, for full-service partnership enrollees they may be delivered with enhancements such as reduced provider caseloads, increased service frequency and intensity, and field-based access and delivery.

One measure of the effectiveness of a comprehensive treatment program for serious mental illness is the impact of such a program on mental health-related emergency department visits. In other words, the occurrence of emergency department visits can be seen as an indication of a failure to provide adequate and effective mental health care. Nationally, emergency department visits have been on the rise, increasing by approximately 40% from 1992 to 2001 (4). Even assertive community treatment programs have difficulty in reducing emergency department visits. Clarke and colleagues (5) analyzed a randomized controlled trial of assertive community treatment versus usual care and found no difference in the number of emergency department visits between clients in usual care and clients in assertive community treatment-type programs. The study did show, however, that clients in assertive community treatment programs staffed by mental health consumers had fewer emergency department admissions compared with clients in assertive community treatment programs with staff that did not include mental health consumers.

Only one study examining the relationship between time in a full-service partnership program and emergency department visits has been published. Gilmer and colleagues (6) used data from San Diego County and propensity score methods to match full-service partnership program participants with non-full-service partnership program participants. The study found that after one year the probability of emergency department visits among full-service partnership program participants was reduced by 32% compared with other participants.

In this study we used a retrospective nonequivalent control group design with data from seven California counties for 155,203 individuals. We tested the hypothesis that enrollment in the full-service partnership program would reduce the odds of emergency department visits relative to usual care in the public mental health system and that this reduction would increase with time spent in the full-service partnership program.

Methods

Study design

This study used a retrospective nonequivalent control group design. To understand the effectiveness of this design as implemented here, consider that there are at least two ways to control for baseline differences between a treatment group and a control group. One is to randomly assign individuals to each group. Statistically, this approach yields unbiased estimates of any treatment effect because there are no statistically significant correlations between treatment assignment and any of the unmeasured individual baseline characteristics of either group. This is the case because even though most time-invariant individual characteristics are unmeasured, they are statistically equivalent between the treatment and control groups because of the randomization procedure. Similarly, even though most time-varying individual characteristics are also unmeasured, randomization implies that any changes that occur over time will be the same (statistically equivalent) across the two groups (7).

When randomization is not available and the treatment and control groups are not equivalent because of selection issues, it is still theoretically possible to obtain the situation where there are no statistically significant correlations between treatment assignment and any of the unmeasured individual baseline characteristics of either group by exhaustively accounting for all such differences and statistically controlling for them. With panel data (repeated measures of the same individuals) it is possible to use statistical methods that exhaustively control for all time-invariant factors, such as genetics, personality, sex, race, time of birth, psychiatric history, medical history, and socioeconomic history (8, 9). In addition, these methods will also control for all individual behavioral propensities that do not change over time (8, 9). In other words, the statistical methods used in this study were designed to account for the fact that the treatment group contained more individuals whose past history included homelessness, involvement in the criminal justice system, and high use of mental health services and to thus produce results comparable with, although not identical to, what might be expected from a classically designed, randomized experiment.

Data

We used data from the Short-Doyle/Medi-Cal (SD/MC) file to obtain information about clients with respect to Medicaid-reimbursed emergency department visits and sociodemographic characteristics. Dates of client participation in the full-service partnership program were obtained from the Data Collection and Reporting (DCR) system file. Finally, we obtained information on client psychiatric diagnoses from the Consumer and Service Information (CSI) System. All data systems are maintained by the California Department of Mental Health.

Because these data were existing administrative data, informed consent was not required. This project was approved by both the Committee for the Protection of Human Subjects of the University of California and the Committee for the Protection of Human Subjects of the State of California Office of Statewide Health Planning and Development.

Using these data we created a balanced panel in which information on the characteristics and outcomes of each client was collected for each quarter from January 2007 to June 2008 (six quarters). These dates were chosen because over 90% of full-service partnership program clients entered the full-service partnership program after January 2007 and because data were available only until June 2008 at the time this study was conducted. We included only individuals in the SD/MC data set who had received a mental health service during this period and were age 18 or older.
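The shape of such a balanced panel can be sketched in a few lines of Python. This is only an illustration of the data layout, not the authors' SAS or Stata code; the function name `build_balanced_panel`, the client identifiers, and the record fields are assumptions made for the example.

```python
from itertools import product

# Six calendar quarters from January 2007 through June 2008.
QUARTERS = [(2007, 1), (2007, 2), (2007, 3), (2007, 4), (2008, 1), (2008, 2)]


def build_balanced_panel(client_ids, ed_visits):
    """Return one row per client per quarter (hypothetical layout).

    client_ids: iterable of client identifiers.
    ed_visits: set of (client_id, year, quarter) tuples marking quarters in
        which the client had at least one emergency department visit.
    """
    panel = []
    for client_id, (year, quarter) in product(sorted(client_ids), QUARTERS):
        panel.append({
            "client_id": client_id,
            "year": year,
            "quarter": quarter,
            # Binary outcome: any emergency department visit in the quarter.
            "ed_visit": int((client_id, year, quarter) in ed_visits),
        })
    return panel


# Two made-up clients, one of whom has a visit in the third quarter of 2007.
panel = build_balanced_panel({"A", "B"}, {("A", 2007, 3)})
print(len(panel))  # 12
```

Because every client contributes exactly six rows, the row count is always six times the number of clients.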

Counts of emergency department admissions were stratified by county to determine whether counties had consistent administrative policies concerning how they provided and recorded emergency services. We found that some counties switched between reporting a positive number of urgent care admissions and reporting no traditional emergency department admissions and vice versa, whereas other counties reported no traditional emergency department admissions for lengthy periods. To be sure we identified all such administrative reporting patterns, we further evaluated monthly counts of emergency department admissions by county to see which counties had consistent administrative reporting patterns for emergency department admissions for the period from July 2000 to June 2008.

Only seven of California's 58 counties were found to consistently report emergency department admissions. We excluded all other counties from the analysis to avoid inferring full-service partnership program effects from administrative changes in how emergency services were recorded. Note that we do not consider these reporting differences across counties to be data errors but merely temporal differences across counties in administrative policy regarding how to provide services for clients in crisis. The way in which emergency services are recorded will affect billing, so counties have an incentive to accurately portray how they are serving clients in crisis.

The seven counties used in this analysis were Humboldt, Los Angeles, Sacramento, San Diego, San Francisco, San Mateo, and Santa Clara. These counties represent both Northern California (Humboldt, Sacramento, San Francisco, San Mateo, and Santa Clara) and Southern California (Los Angeles and San Diego) and account for approximately half (48.5%) of the population of California. The final analytic data set included 931,218 observations representing 155,203 clients (the characteristics and behavior of each client were observed and measured six times, yielding 931,218 observations).

Statistical analysis

Our dependent variable described whether or not a client used mental health-related emergency department services during a given quarter. Because our dependent variable was binary and we had limited information on each client, we estimated an individual fixed-effects logistic model (10). Individual fixed effects control for all non-time-varying characteristics, including all historical events occurring before the beginning of the study. This approach also controlled for all baseline differences between individuals in their propensity to choose full-service partnership treatment and all baseline differences between individuals in their propensity to leave full-service partnership treatment once they had entered.

Therefore, the only variables needed in the model were the full-service partnership treatment indicator (yes-no), cumulative duration of time spent in the full-service partnership program (total time spent in the full-service partnership program, measured in quarters for up to six quarters), quarter fixed effects representing the specific periods in the study, and the interaction of quarter fixed effects and county indicators. The latter interaction terms were included to take into account all time-varying county-level factors, such as funding levels, changes in the provision of usual care, and other time-varying county-specific changes that could affect outcomes.
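Written out, the specification described above might take the following form. The notation is an assumption introduced here for illustration (the text does not give symbols): $y_{it}$ indicates any emergency department visit by client $i$ in quarter $t$, $\mathrm{FSP}_{it}$ is the participation indicator, $Q_{it}$ is cumulative quarters in the program, $\alpha_i$ is the individual fixed effect, $\gamma_t$ is the quarter fixed effect, and $\delta_{c(i),t}$ is the county-by-quarter interaction for the county $c(i)$ of client $i$.

```latex
\operatorname{logit}\Pr(y_{it} = 1)
  = \alpha_i
  + \beta_1\,\mathrm{FSP}_{it}
  + \beta_2\,Q_{it}
  + \gamma_t
  + \delta_{c(i),t}
```

Under this reading, $\beta_1$ corresponds to the effect at entry into the program and $\beta_2$ to the additional change in the log odds per quarter of participation.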

Individuals entered the full-service partnership program at different points during the study period. In addition, clients could voluntarily leave and re-enter the full-service partnership program at any time. To determine whether an individual was participating in the full-service partnership program during a given quarter, we measured participation as of the midpoint of the quarter.
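The midpoint rule can be illustrated with a short Python sketch. The function names, the representation of enrollment spells as date pairs, and the example client are all hypothetical, and defining the midpoint as the calendar halfway point of the quarter is an assumption, since the text does not say how the midpoint was computed.

```python
from datetime import date


def quarter_midpoint(year, quarter):
    """Return the calendar midpoint of a quarter (1-4) in the given year."""
    start = date(year, 3 * (quarter - 1) + 1, 1)
    # First day of the following quarter.
    if quarter == 4:
        next_start = date(year + 1, 1, 1)
    else:
        next_start = date(year, 3 * quarter + 1, 1)
    return start + (next_start - start) // 2


def enrolled_at_midpoint(spells, year, quarter):
    """True if any enrollment spell covers the quarter's midpoint.

    spells: list of (start_date, end_date) tuples; end_date is None for a
        spell that is still open.
    """
    mid = quarter_midpoint(year, quarter)
    return any(
        start <= mid and (end is None or mid <= end) for start, end in spells
    )


# Made-up client who enrolls, leaves, and later re-enters the program.
spells = [
    (date(2007, 3, 1), date(2007, 9, 30)),
    (date(2008, 2, 1), None),
]
quarters = [(2007, 1), (2007, 2), (2007, 3), (2007, 4), (2008, 1), (2008, 2)]
flags = [enrolled_at_midpoint(spells, y, q) for y, q in quarters]
print(flags)  # [False, True, True, False, True, True]
```

A client who enrolls after a quarter's midpoint is counted as a nonparticipant for that quarter, which is one source of the small measurement error in participant counts that such a rule introduces.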

Data management and analytics were performed with SAS 9.2 and Stata 10. The individual fixed-effects logistic regression included robust standard errors clustered by client. Note that although the initial analytic file was extremely large, individual fixed-effects logistic regression automatically dropped all observations that did not contribute to parameter estimation, resulting in a smaller sample. It can be shown mathematically that individuals with all positive outcomes (emergency department visits every period) or all negative outcomes (no emergency department visits in any period) do not contribute to the estimation of the parameters. This regression method produces unbiased parameter estimates in large samples such as the one used in this study (10). Numerous studies in the psychiatric literature have used fixed-effects logistic regression to control for unobserved sources of confounding (11–13).
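The reason such individuals drop out is that the fixed-effects (conditional) likelihood conditions on each person's total number of positive outcomes; when that total is zero or equals the number of periods, only one outcome sequence is possible, so its conditional probability is 1 whatever the parameter values. The resulting sample restriction can be sketched as follows. This is a minimal illustration with made-up data and a hypothetical function name, not the procedure run in SAS or Stata.

```python
from collections import defaultdict


def informative_clients(panel):
    """Keep rows for clients whose binary outcome varies across periods.

    panel: list of (client_id, outcome) pairs, one per client per period.
    """
    outcomes = defaultdict(set)
    for client_id, outcome in panel:
        outcomes[client_id].add(outcome)
    # A client is informative only if both 0 and 1 were observed.
    keep = {cid for cid, seen in outcomes.items() if len(seen) > 1}
    return [(cid, y) for cid, y in panel if cid in keep]


# Three made-up clients observed for three periods each.
panel = [
    ("A", 0), ("A", 0), ("A", 0),  # never visits: dropped
    ("B", 1), ("B", 1), ("B", 1),  # visits every period: dropped
    ("C", 0), ("C", 1), ("C", 0),  # outcome varies: kept
]
kept = informative_clients(panel)
print(kept)  # [('C', 0), ('C', 1), ('C', 0)]
```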

Results

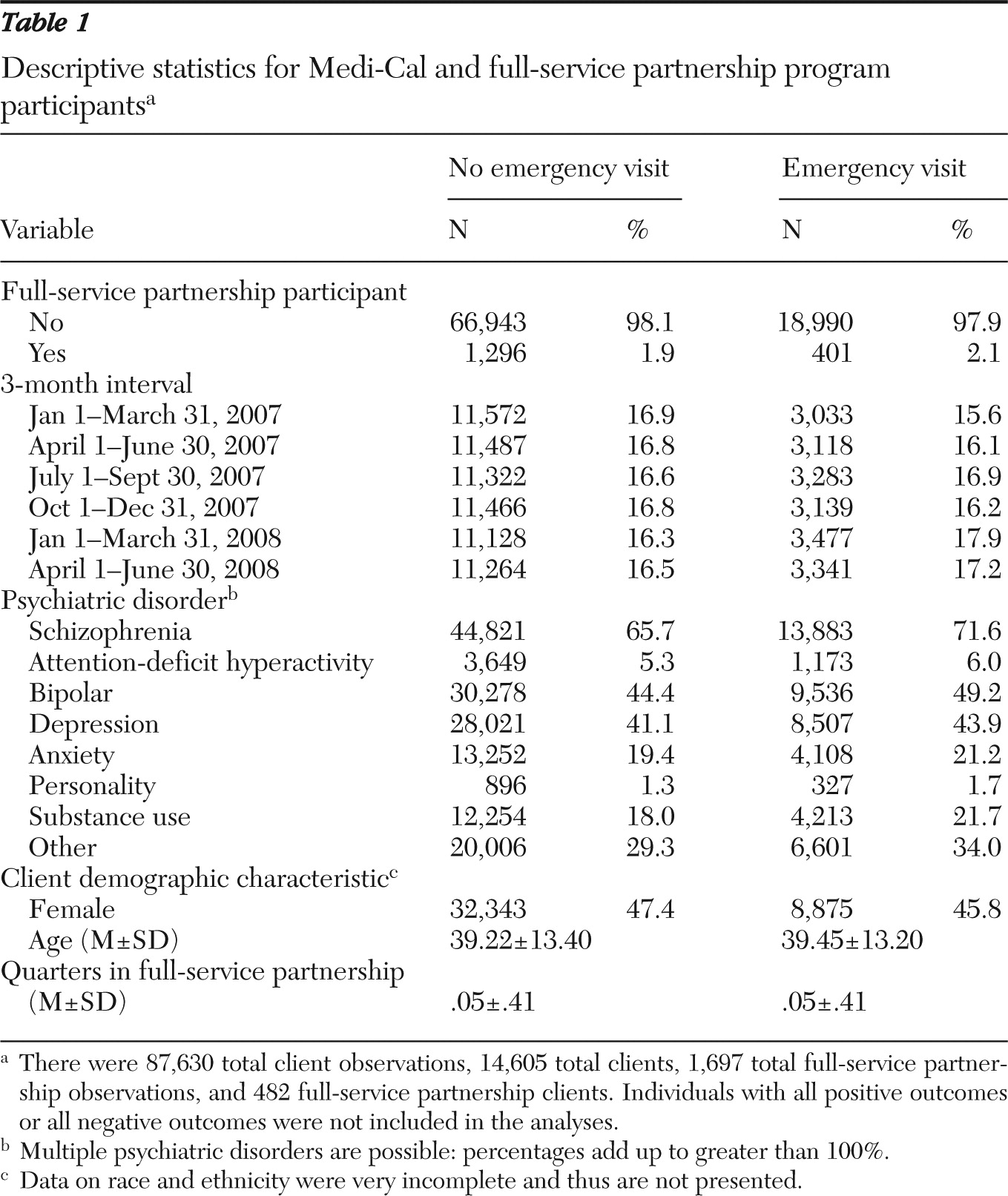

Descriptive statistics shown in Table 1 include 87,630 observations (14,605 individuals), with 1,697 observations (482 individuals) of persons participating in the full-service partnership program (individuals with all positive outcomes or all negative outcomes were dropped from the analyses). The 482 figure does not represent the exact number of full-service partnership participants, in part because of the midpoint calculation mentioned above, but it likely contains only a very small amount of measurement error. The average full-service partnership enrollee had participated in the program for almost three quarters (mean±SD=2.59±1.45 quarters). For illustrative purposes, Table 1 contains selected client characteristics that are not included in the individual fixed-effects logistic model because their inclusion would have resulted in perfect collinearity. Note that the racial-ethnic data for the subset of clients analyzed in this study were very incomplete and thus are not reported.

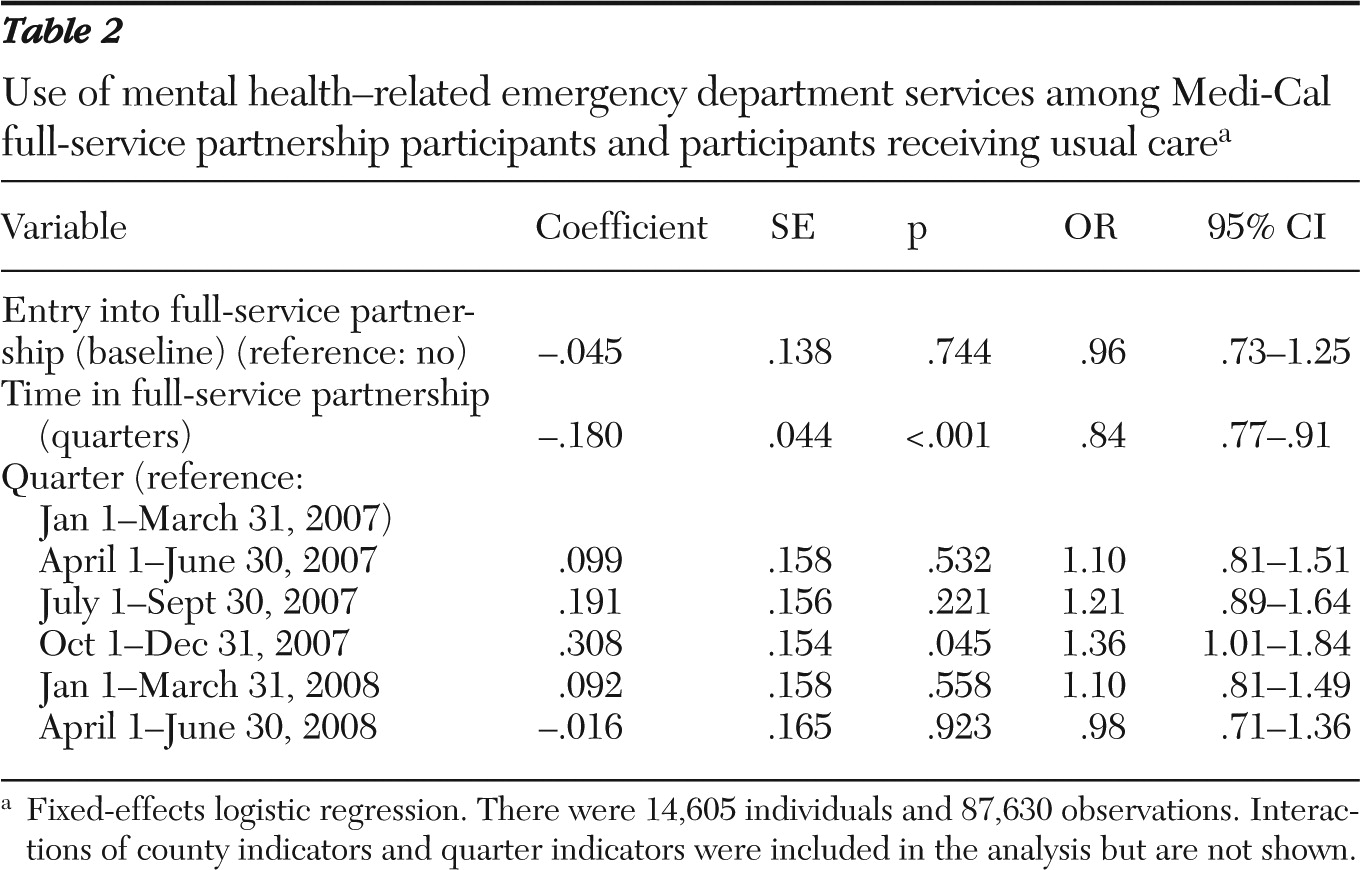

Table 2 presents the results after analyses controlled for individual fixed effects, time in the full-service partnership program, quarter fixed effects, and the interaction of quarter fixed effects and county indicators. There was no statistical difference between the treatment and control groups in the odds of having an emergency visit at baseline, showing that the model effectively removed any baseline differences between the treatment and control groups. In addition, a joint test of the statistical significance of the interaction of the quarter fixed effects and county indicators was strongly significant (χ²=134.32, df=30, p<.001), illustrating the importance of including these controls for all omitted time-varying variables at the county level.

Our main variables of interest showed that the odds of having any emergency department visits were 54% less (odds ratio [OR]=.46, p<.001) for full-service partnership participants relative to those in usual care by the end of four quarters of treatment. The OR was computed as follows: quarters in the full-service partnership program were multiplied by the coefficient on quarters in the full-service partnership program, which was then added to the baseline coefficient (the baseline coefficient is referred to as entry into full-service partnership in Table 2). This sum was then exponentiated. By the sixth quarter of treatment, the odds of having any emergency department visits were 68% less (OR=.32, p<.001) for full-service partnership participants relative to those in usual care.
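The calculation just described can be reproduced with a small Python sketch. The function name is hypothetical, and the two coefficients are not the Table 2 estimates: they are back-solved here from the two rounded ORs reported in the text (.46 at four quarters and .32 at six quarters), purely to show how the pieces fit together.

```python
import math


def odds_ratio(beta_entry, beta_quarter, quarters):
    """OR for a participant after `quarters` quarters, relative to usual care."""
    return math.exp(beta_entry + beta_quarter * quarters)


# Illustrative coefficients implied by the two rounded ORs in the text.
# These are NOT the published estimates.
beta_quarter = (math.log(0.32) - math.log(0.46)) / (6 - 4)
beta_entry = math.log(0.46) - 4 * beta_quarter

or_q4 = odds_ratio(beta_entry, beta_quarter, 4)
or_q6 = odds_ratio(beta_entry, beta_quarter, 6)
print(round(or_q4, 2))  # 0.46
print(round(or_q6, 2))  # 0.32

# Percentage reduction in the odds is (1 - OR) * 100.
print(round((1 - or_q4) * 100))  # 54
print(round((1 - or_q6) * 100))  # 68
```

The back-solved entry coefficient is close to zero (roughly -0.05 on the log-odds scale), which is at least consistent with the reported absence of a difference between groups at baseline, although only the actual Table 2 estimates can confirm this.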

Discussion

The external validity of this study can be conservatively extended to the seven counties analyzed. A more moderate view would extend external validity to the entire state because both northern and southern counties were included, as well as one rural county (Humboldt). We determined full-service partnership participation by matching the DCR and CSI records to SD/MC records through linking variables and included only counties that had consistent reporting patterns of emergency department services to avoid interpreting administrative reporting artifacts as treatment effects. The seven out of the 58 California counties used in the final analysis represent 48.5% of the California population.

Our use of individual fixed-effects logistic regression enabled us to control for all differences between clients in the treatment and control groups at the beginning of the study. Overall, the results show that the full-service partnership program was successful in reducing emergency department admissions; specifically, the odds of using emergency department services were reduced by 54% relative to usual care by the fourth quarter of participation in the full-service partnership program. The odds of using emergency department services were 68% less by the sixth quarter of participation in the full-service partnership program. This phenomenon cannot be explained by regression to the mean because regression to the mean would explain a reduction of the use of emergency service only down to the level seen among those receiving usual care.

Strengths of this study include the use of a quasi-experimental design using nonequivalent treatment and control groups. This, along with the ability to control for all history and characteristics of study participants at baseline and for all time-invariant and time-varying county-level characteristics, allowed us to largely control for the main threat to internal validity in this study: selection bias (7).

Weaknesses of this study include the use of administrative data, which may be subject to classical measurement error. Such measurement error in the independent variables can result in estimated parameters that are asymptotically biased toward zero (14, 15). However, because none of these data were self-reported, we expect such measurement error to be very low. In addition, to the extent that systematic measurement error in the dependent variable was present in terms of unknown changes in how emergency services are classified, our controls for all time-varying county-level changes would account for such error. Thus, although we believe our data contain little or no measurement error, if classical measurement error is present, our estimates may understate the true relationship between full-service partnership participation and emergency department visits.

The results of this study are similar to the findings of Gilmer and colleagues (6), who studied a single county in California, San Diego. This study used a far larger sample and a different but equally rigorous estimation strategy, confirming the value of the full-service partnership program in reducing emergency department visits. Although Gilmer and colleagues did not find an overall cost savings from the full-service partnership program, they found that the program offset 82% of its costs in a public setting. It is arguable that because of data limitations not all costs could be considered, so the true cost offset may be even higher. In addition, further program refinement may result in lower costs, bringing the offset level into the realm of cost neutrality or even cost savings.

Conclusions

California's full-service partnership program has demonstrated success by achieving a drastic decrease in mental health-related emergency department visits among people with serious mental illness. To maintain the program, rigorous cost-effectiveness research at the state level should be completed. If the results of such research are as promising as the cost-effectiveness findings from San Diego County, the full-service partnership program could be scaled up without fear of further straining California's currently fragile state budget. In addition, assuming that the full-service partnership program is cost-effective, the level to which the program should be scaled up would need to be informed by a determination of the maximum percentage of clients willing to participate in a modified assertive community treatment program.

Acknowledgments and disclosures

This project was jointly funded by contract 08-78106000 from the California Department of Mental Health and by award 04-1616 from the California Health Care Foundation.

The authors report no competing interests.