Efforts to bring evidence-based practices (EBPs) to scale in large community mental health (CMH) systems require capacity building through sustainable resources and strategies for maintaining adequate expertise in the face of high turnover rates (1), role changes, and other barriers (2–4). In CMH systems, effective approaches for building capacity require strategies that are both financially feasible and time-efficient, given limited resources and high work demands. This study examined a two-phase capacity-building model that establishes in-house expertise and then builds on that expertise through Web-based training.

Typically, EBP expertise is built through a knowledge acquisition phase followed by consultation with experts (5). Historically, knowledge acquisition has relied on costly and time-intensive in-person workshops (6); more recently, efforts have shifted toward Web-based training. Although Web-based training offers a convenient, self-paced approach to learning, concerns have been raised about retaining Web-based trainees through training. Whereas some studies report high retention (78%–100%) (7–10), others report significantly lower retention in Web-based training than in in-person training (64% versus 95%) (11). These retention rates raise questions about whether Web-based training is an adequate resource for capacity building.

Once knowledge acquisition is complete, expert-led consultation has been identified as vital for developing EBP expertise and maintaining behavior change (5,12–16). Furthermore, more hours spent in consultation are associated with greater adherence to EBPs and sustained skills (17,18). Studies combining Web-based training with expert-led consultation have found significant improvement in trainees’ skills (10,17,19,20). However, the high cost and limited availability of experts are barriers in lower-resource settings (21). Train-the-trainer models, which train a small number of staff to train others in an EBP, have also been used to maintain adequate EBP expertise (22). Although the train-the-trainer model is effective for enhancing trainees’ knowledge and skills (23,24), trainer turnover leaves programs vulnerable to loss of EBP expertise (25).

Utilizing the collective expertise of an initial trained cohort to train and support new clinicians within a program may present a better approach for sustaining expertise in an EBP, because the knowledge is distributed among many staff, making it more robust to staffing changes. This study examined the outcomes of a two-phase model used to build capacity for an EBP in a large CMH system in the context of an ongoing program evaluation project. In the first phase, cohorts of trainees participated in in-person training workshops, followed by weekly consultation led by experts (in-person, expert-led [IPEL] phase), which has been found to be effective (26). Once this phase was completed, a second phase was used to maintain and expand expertise in the EBP. The second phase included Web-based training followed by consultation led by peers trained in the IPEL model (Web-based, trained-peer [WBTP] phase). This study evaluated the effectiveness and clinician retention of this approach and examined whether WBTP was not inferior to IPEL in EBP knowledge acquisition, EBP competency, and clinician retention.

Methods

Setting and Participants

This study analyzed data from an archival, deidentified data set that was collected in the context of an ongoing program evaluation project, the Beck Community Initiative (BCI) (27,28). The University of Pennsylvania Institutional Review Board deemed the study to be exempt as authorized by 45 CFR 46.101, category 4. The BCI aims to improve the quality of care for persons in recovery by using implementation strategies to infuse cognitive-behavioral therapy (CBT) into CMH services. This data set consists of a subset of 362 clinicians from 29 programs, trained by the BCI from 2007 to 2015 using IPEL (N=214) and WBTP (N=148).

Table 1 presents background information for clinicians.

Table 2 presents information about the programs. The main outcomes of the BCI (for example, competence and retention) did not differ significantly by type of program setting (outpatient versus nonoutpatient) (29).

Procedures

IPEL phase.

IPEL began with a 22-hour in-person CBT workshop, followed by six months of weekly two-hour group consultation led by doctoral-level CBT experts. Consultation groups averaged seven clinicians (range six to eight). Consultation focused on applying CBT, including review of audio-recorded sessions. Initially, instructors led consultation meetings; as training progressed, group leadership shifted to group members. At the conclusion of the IPEL phase, the clinician group continued meeting as a peer-led consultation group (27,28).

Over the course of the IPEL phase (that is, workshop plus consultation), each BCI instructor spent an average of 84 hours per CMH program on training activities: adapting training materials (10 hours), delivering workshops (22 hours), and leading 26 weekly two-hour consultations (52 hours).

WBTP phase.

Once a program’s IPEL cohort of clinicians shifted to internal group consultation, the program transitioned to the WBTP phase, which was designed to broaden CBT capacity beyond the initial group and replace clinicians lost to turnover and to other staffing changes. The average number of WBTP clinicians per program was five (range none to 16).

The Web-based training content was based on the IPEL core training curriculum and contained the same information as the in-person workshops. Videotaped role-playing, on-screen activities, and quizzes were added to increase engagement. The Web-based training was self-paced and Internet accessible, and all content was available in English or Spanish. Clinicians were required to complete the Web-based training within six weeks, after which they joined their program’s ongoing consultation group. A BCI instructor visited internal consultation groups every six to eight weeks to provide support and to help resolve any barriers to sustained practice.

In the WBTP phase, over the course of a 7.5-month training period, the average BCI instructor time spent in training activities was 6.5 hours, which was 8% of the 84 hours required to complete training activities for the IPEL phase.
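The instructor-time figures above reduce to simple arithmetic. The sketch below, whose variable and function names are hypothetical, sums the reported IPEL activities and expresses the 6.5 WBTP hours as a share of that total. It reproduces the 84-hour figure and a share of about 7.7%, which rounds to the 8% reported.

```python
# Instructor hours per CMH program, as reported for the IPEL phase.
# Dictionary keys and function names are illustrative only.
IPEL_HOURS = {
    "adapt training materials": 10,
    "deliver workshop": 22,
    "lead weekly consultations (26 x 2 h)": 26 * 2,
}
WBTP_HOURS = 6.5  # average instructor time over the 7.5-month WBTP period


def ipel_total_hours() -> int:
    """Sum the IPEL instructor activities (10 + 22 + 52)."""
    return sum(IPEL_HOURS.values())


def wbtp_share_of_ipel() -> float:
    """WBTP instructor time as a fraction of IPEL instructor time."""
    return WBTP_HOURS / ipel_total_hours()


if __name__ == "__main__":
    total = ipel_total_hours()
    share = wbtp_share_of_ipel()
    print(f"IPEL: {total} h; WBTP: {WBTP_HOURS} h ({share:.1%} of IPEL)")
```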

BCI participation requirements for both IPEL and WBTP.

Clinicians’ audio-recorded sessions were rated for CBT competency at three time points: prior to the consultation (baseline), middle of consultation (midconsultation), and end of consultation. Clinicians who completed the workshop (in person or Web based), attended at least 85% of consultations, submitted at least 15 recordings, and demonstrated CBT competency on their end-of-consultation audio submission (that is, a total score of ≥40 on the Cognitive Therapy Rating Scale [CTRS] [29]), received a certificate of competency. Clinicians who did not demonstrate competency on their end-of-consultation audio recording were allowed to submit additional recordings for evaluation of competency (referred to as the “competency assessment point”).
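The certification rule above combines four criteria. The following Python sketch encodes them as a single check. The `Trainee` record and its field names are hypothetical, attendance is assumed to be computed as sessions attended divided by sessions offered, and later resubmissions at the "competency assessment point" are not modeled.

```python
from dataclasses import dataclass

CTRS_COMPETENCE_CUTOFF = 40  # CTRS total score required for competence
MIN_ATTENDANCE = 0.85        # at least 85% of consultations attended
MIN_RECORDINGS = 15          # at least 15 audio recordings submitted


@dataclass
class Trainee:
    """Hypothetical record of one clinician's training activity."""
    completed_workshop: bool  # in-person or Web-based workshop finished
    sessions_attended: int
    sessions_offered: int
    recordings_submitted: int
    end_ctrs_total: int       # CTRS total on the end-of-consultation recording


def earns_certificate(t: Trainee) -> bool:
    """Return True only if all four certification criteria are met."""
    attendance = t.sessions_attended / t.sessions_offered
    return (
        t.completed_workshop
        and attendance >= MIN_ATTENDANCE
        and t.recordings_submitted >= MIN_RECORDINGS
        and t.end_ctrs_total >= CTRS_COMPETENCE_CUTOFF
    )
```

With 26 weekly consultations, for example, attending 23 (about 88%) satisfies the attendance criterion, whereas attending 22 (about 85%, but just below 0.85) does not.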

Measures

Competency.

The CTRS (29) is the observer-rated measure most frequently used to evaluate CBT competency (30). Its 11 items assess general therapy skills (for example, interpersonal effectiveness) and CBT-specific skills (for example, focus on key cognitions). Each item is scored on a 7-point Likert scale (0, poor, to 6, excellent), yielding total scores from 0 to 66. A total score of 40 or higher is used in clinical trials as the cutoff for competent delivery of CBT (31). The CTRS has demonstrated adequate internal consistency and interrater reliability (32). CTRS raters in this study were doctoral-level CBT experts who demonstrated high interrater reliability for the CTRS total score (intraclass correlation coefficient=.84).
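CTRS scoring as described above can be expressed directly. This is a minimal sketch with hypothetical function names. It validates that exactly 11 items each fall between 0 and 6, sums them to the 0–66 total, and applies the cutoff of 40.

```python
from typing import Sequence

N_ITEMS = 11             # number of CTRS items
ITEM_MIN, ITEM_MAX = 0, 6
COMPETENCE_CUTOFF = 40   # total score used as the competence threshold


def ctrs_total(item_scores: Sequence[int]) -> int:
    """Sum the 11 CTRS item ratings (each 0-6) into a 0-66 total."""
    if len(item_scores) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item scores, got {len(item_scores)}")
    for score in item_scores:
        if not ITEM_MIN <= score <= ITEM_MAX:
            raise ValueError(f"item score {score} is outside {ITEM_MIN}-{ITEM_MAX}")
    return sum(item_scores)


def is_competent(item_scores: Sequence[int]) -> bool:
    """Apply the >= 40 total-score cutoff for competent CBT delivery."""
    return ctrs_total(item_scores) >= COMPETENCE_CUTOFF
```

A recording rated 4 on ten items and 0 on one item totals 40 and meets the cutoff. A recording rated 3 on every item totals 33 and does not. On average, the cutoff corresponds to item ratings of roughly 3.6 (40/11).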

CBT knowledge.

The CBT Knowledge Quiz is a 20-item multiple-choice test developed to assess knowledge of CBT principles and interventions. The quiz was administered before and after the workshop (that is, the in-person or Web-based training). Scores were calculated as the percentage of quiz items answered correctly.

Analytic Plan

Statistical analyses were conducted with SPSS, version 22 (33), and HLM, version 7 (34).

Propensity score calculation.

Propensity scores were included as covariates in all models to control for nonrandom assignment to IPEL or WBTP (35). Propensity scores were calculated from trainees’ baseline data with a probability model that estimated, for each trainee, the probability (between 0 and 1) of being assigned to WBTP rather than IPEL. The baseline variables used in propensity score calculations were baseline CTRS score, theoretical orientation, discipline (that is, social work or other), program, and years of experience (Table 1). These variables were selected for propensity score calculations in a previous publication on BCI data because they potentially influenced assignment to condition or the outcomes of interest (26).
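The propensity-score step can be sketched as follows. The text specifies only a "probability model." This sketch assumes a logistic regression, the usual choice, fitted by plain gradient ascent so that it needs no third-party packages. The covariate values are hypothetical: two numeric columns stand in for the baseline variables, and categorical predictors such as discipline or program would first need dummy coding.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X: Sequence[Sequence[float]], y: Sequence[int],
                 lr: float = 0.5, epochs: int = 5000) -> List[float]:
    """Fit logistic regression by batch gradient ascent on the mean log-likelihood.

    X holds baseline covariates (no intercept column); y is 1 for WBTP, 0 for IPEL.
    Returns the weights with the intercept first.
    """
    n, k = len(X), len(X[0])
    w = [0.0] * (k + 1)
    for _ in range(epochs):
        grad = [0.0] * (k + 1)
        for xi, yi in zip(X, y):
            p = sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi)))
            err = yi - p
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj + lr * gj / n for wj, gj in zip(w, grad)]
    return w


def propensity_scores(X: Sequence[Sequence[float]], w: Sequence[float]) -> List[float]:
    """Estimated probability of WBTP assignment for each trainee."""
    return [sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi))) for xi in X]


# Hypothetical standardized covariates (e.g., baseline CTRS, years of experience).
X_EXAMPLE = [[-1.0, 0.5], [1.0, 0.5], [0.0, -0.5], [0.3, 0.1],
             [0.0, 0.2], [-0.4, -0.3], [0.6, 0.6], [1.2, -0.2]]
Y_EXAMPLE = [0, 0, 0, 0, 1, 1, 1, 1]  # 0 = IPEL, 1 = WBTP

if __name__ == "__main__":
    weights = fit_logistic(X_EXAMPLE, Y_EXAMPLE)
    print([round(p, 3) for p in propensity_scores(X_EXAMPLE, weights)])
```

Because the model includes an intercept, the fitted propensity scores sum to the number of WBTP trainees at convergence, which offers a quick convergence check. In practice a statistics package would be used rather than this hand-rolled fit.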

Aim 1: comparing competence for IPEL and WBTP.

Tests of noninferiority were conducted to assess whether WBTP was not inferior to IPEL in increasing CTRS scores by the end of consultation and by the competency assessment point (36). Noninferiority tests are appropriate when a new approach is not expected to offer greater improvement than a previously established approach but is more affordable and efficient than the approach already established as efficacious (37).

First, two separate three-level hierarchical linear models (HLMs) were conducted to create an adjusted model used in noninferiority analyses. In both models, assessment point (level 1) was nested within trainee (level 2), which was nested within program (level 3). The three assessment points entered at level 1 included baseline, midconsultation, and end of consultation (model 1) and baseline, midconsultation, and competency assessment point (model 2). Phase (IPEL or WBTP) and propensity scores were added at level 2.
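One plausible way to write the three-level structure described above is shown below as a sketch. Several details are assumptions rather than reported specifications: the linear time term, the coding of Time so that the final assessment point equals 0, the coding of Phase as 1 for WBTP and 0 for IPEL, and the symbol names. Here t indexes assessment points, i trainees, and j programs, and PS is the propensity score. Under this coding, the Phase coefficient γ_010 is the adjusted WBTP minus IPEL difference in CTRS scores at the final assessment point, the quantity a noninferiority test would compare with the margin.

```latex
\begin{aligned}
\text{Level 1:}\quad & \text{CTRS}_{tij} = \pi_{0ij} + \pi_{1ij}\,\text{Time}_{tij} + e_{tij} \\
\text{Level 2:}\quad & \pi_{0ij} = \beta_{00j} + \beta_{01j}\,\text{Phase}_{ij} + \beta_{02j}\,\text{PS}_{ij} + r_{0ij} \\
 & \pi_{1ij} = \beta_{10j} + r_{1ij} \\
\text{Level 3:}\quad & \beta_{00j} = \gamma_{000} + u_{00j}, \quad \beta_{01j} = \gamma_{010}, \quad \beta_{02j} = \gamma_{020}, \quad \beta_{10j} = \gamma_{100} + u_{10j}
\end{aligned}
```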

Noninferiority was established if the difference in adjusted CTRS scores between the two approaches was smaller than a predetermined clinically meaningful difference (that is, delta). Delta was set at 4.5 CTRS points on the basis of a statistically significant and reliable change criterion for competence (38). WBTP would be considered noninferior to IPEL if the 95% confidence interval (CI) for the adjusted difference in CTRS scores did not include a WBTP deficit of 4.5 or more CTRS points.
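The noninferiority decision rule can be stated as a small function. This sketch assumes that the confidence interval is for the adjusted difference computed as WBTP minus IPEL, so that negative values favor IPEL. The function name is hypothetical.

```python
from typing import Tuple

DELTA = 4.5  # noninferiority margin, in CTRS points


def noninferior(ci: Tuple[float, float], delta: float = DELTA) -> bool:
    """Decide noninferiority from a 95% CI for the difference WBTP - IPEL.

    WBTP is noninferior if the interval excludes a WBTP deficit of delta
    or more, that is, if its lower limit lies above -delta.
    """
    lower, upper = ci
    if lower > upper:
        raise ValueError("CI lower limit exceeds upper limit")
    return lower > -delta
```

Under this sign convention, the function returns True for each of the three intervals reported in the Results (−1.43 to .73, −1.74 to 1.06, and −1.89 to .37). It would return False for a hypothetical interval such as −5.2 to −0.8.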

Two two-level hierarchical generalized linear models (HGLMs) were used to examine the influence of training phase on the probability that a clinician would reach competence by the end of consultation (model 1) and by the competency assessment point (model 2). Both were Bernoulli models, in which each level 1 record corresponded to a clinician with a single binary outcome (1, reached competence; 0, did not). Phase and propensity scores were added at level 1, and clinicians were nested within programs (level 2). Variance components analyses tested for differences across programs.
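The Bernoulli models estimate effects on the log-odds scale, and the odds ratios in the Results are exponentiated coefficients. The sketch below shows that conversion with a Wald 95% interval, assuming normal-theory intervals. The function names are hypothetical, and the example values in the note that follows are made up rather than taken from the study.

```python
import math
from typing import Tuple

Z_95 = 1.959964  # two-sided 95% standard normal quantile


def odds_ratio_with_ci(coef: float, se: float, z: float = Z_95) -> Tuple[float, float, float]:
    """Turn a log-odds coefficient and its SE into (OR, lower, upper).

    The Wald interval is symmetric on the log-odds scale, so it is
    asymmetric around the OR itself.
    """
    return math.exp(coef), math.exp(coef - z * se), math.exp(coef + z * se)


def probability_from_logit(logit: float) -> float:
    """Bernoulli-model link: convert a linear predictor into a probability."""
    return 1.0 / (1.0 + math.exp(-logit))
```

For instance, a coefficient of log(2), about 0.69, with a standard error of 0.3 gives an OR of 2.0 with an interval of roughly 1.11 to 3.60.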

Aim 2: comparing knowledge acquisition and retention for IPEL and WBTP.

A two-level HLM with clinicians (level 1) nested within programs (level 2) was conducted to assess the influence of training phase on postworkshop CBT Knowledge Quiz score. Preworkshop CBT Knowledge Quiz score, propensity score, and training phase were added at level 1.

A two-level HGLM was used to examine the influence of training phase (IPEL or WBTP) on the probability that a clinician would complete the full training (workshop plus six months of consultation) with the same variables at levels 1 and 2 as specified above.

Next, each stage of training (that is, workshop and consultation) was examined separately. A Bernoulli-type HGLM was performed to determine the influence of training phase on the probability that a clinician would complete each stage. For all HGLMs, variance components analyses determined differences across programs.

To compare the length of time IPEL and WBTP trainees spent in the consultation stage, a Cox regression model was fitted. Clinicians who completed all six months of consultation were right censored at that point.
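Right censoring means that clinicians who completed all six months are counted as at risk of leaving up to that point, but no dropout event is recorded for them. The sketch below shows that coding. As a simpler stand-in for the Cox model itself, it also computes a Kaplan–Meier estimate of the proportion of clinicians still in consultation over time. The 182-day length of a full consultation period and the example durations are hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical length of a full six-month consultation period, in days.
FULL_CONSULTATION_DAYS = 182


def to_survival_record(days_in_consultation: int) -> Tuple[int, int]:
    """Return (time, event) for one clinician.

    event = 1: left consultation before six months (the event of interest).
    event = 0: completed six months, so right censored at FULL_CONSULTATION_DAYS.
    """
    if days_in_consultation >= FULL_CONSULTATION_DAYS:
        return FULL_CONSULTATION_DAYS, 0
    return days_in_consultation, 1


def kaplan_meier(records: Sequence[Tuple[int, int]]) -> List[Tuple[int, float]]:
    """Kaplan-Meier estimate of the proportion still in consultation.

    Returns (time, estimate) at each time at which at least one clinician left.
    Censored clinicians count as at risk up to their censoring time.
    """
    event_times = sorted({t for t, e in records if e == 1})
    estimate, curve = 1.0, []
    for t in event_times:
        at_risk = sum(1 for ti, _ in records if ti >= t)
        events = sum(1 for ti, ei in records if ti == t and ei == 1)
        estimate *= 1.0 - events / at_risk
        curve.append((t, estimate))
    return curve


if __name__ == "__main__":
    days = [30, 60, 60, 200, 182, 90]  # hypothetical clinicians
    print(kaplan_meier([to_survival_record(d) for d in days]))
```

For these six hypothetical clinicians, the estimate steps down to about 0.83, 0.50, and 0.33 at days 30, 60, and 90. The same (time, event) pairs are the inputs a Cox model takes. For example, the lifelines package's `CoxPHFitter` is fitted with a duration column and an event column, with training phase and propensity score as covariates.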

Results

Aim 1: Comparing Competence for IPEL and WBTP

CTRS scores.

Table 3 shows means and standard deviations of CTRS scores at each time point. Tests of noninferiority showed that WBTP was not inferior to IPEL at midconsultation (CI=−1.43 to .73), the end of consultation (CI=−1.74 to 1.06), and the competency assessment point (CI=−1.89 to .37); none of the CIs extended to the 4.5-point noninferiority margin.

Competence.

At the end of consultation, 117 (61%) of the 192 IPEL clinicians and 51 (48%) of the 106 WBTP clinicians who reached this point had achieved competence. The odds of reaching competence at the end of consultation were lower for a WBTP trainee than for an IPEL trainee (odds ratio [OR]=.83, CI=.44–1.59). This OR was not statistically significant, but it varied significantly across programs (χ²=32.03, df=18, p=.02).

At the competency assessment point, 150 (78%) of the 192 IPEL clinicians and 73 (69%) of the 106 WBTP clinicians who reached this point had achieved competence. After adjustment for propensity scores, the odds of reaching competence at the competency assessment point were greater for a WBTP trainee than for an IPEL trainee (OR=1.41, CI=.81–3.00). This OR was not statistically significant and did not vary significantly across programs.

Aim 2: Comparing Knowledge Acquisition and Retention for IPEL and WBTP

Knowledge acquisition.

Training approach did not significantly influence postworkshop CBT Knowledge Quiz scores. This relation did not vary significantly across programs.

Retention.

Retention in the full training program was lower for WBTP than for IPEL: 192 (90%) of 214 IPEL trainees completed training, compared with 106 (72%) of 148 WBTP trainees. The odds of not completing the full training were 3.33 times greater for a WBTP trainee than for an IPEL trainee (CI=2.23–5.46, p<.001). Additional analyses indicated that this OR did not vary significantly across programs.
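The retention percentages, and an unadjusted version of the odds ratio, follow directly from the counts above. This sketch, whose function names are hypothetical, computes both. The crude odds ratio, about 3.46, is not expected to equal the reported 3.33, because the HGLM also adjusted for propensity scores and nested clinicians within programs.

```python
def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`."""
    return 100.0 * part / whole


def crude_odds_ratio_noncompletion(done_a: int, n_a: int, done_b: int, n_b: int) -> float:
    """Unadjusted odds of NOT completing in group a relative to group b."""
    odds_a = (n_a - done_a) / done_a
    odds_b = (n_b - done_b) / done_b
    return odds_a / odds_b


if __name__ == "__main__":
    print(f"IPEL {pct(192, 214):.0f}%, WBTP {pct(106, 148):.0f}%")
    print(f"crude OR = {crude_odds_ratio_noncompletion(106, 148, 192, 214):.2f}")
```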

In the knowledge acquisition stage, WBTP also had lower retention than IPEL: 211 (99%) of 214 IPEL trainees completed this stage, compared with 138 (93%) of 148 WBTP trainees. The odds of not completing the knowledge acquisition stage were 3.88 times greater for a WBTP trainee than for an IPEL trainee (CI=2.02–7.46). This OR was significant (p<.001) and did not vary significantly across programs.

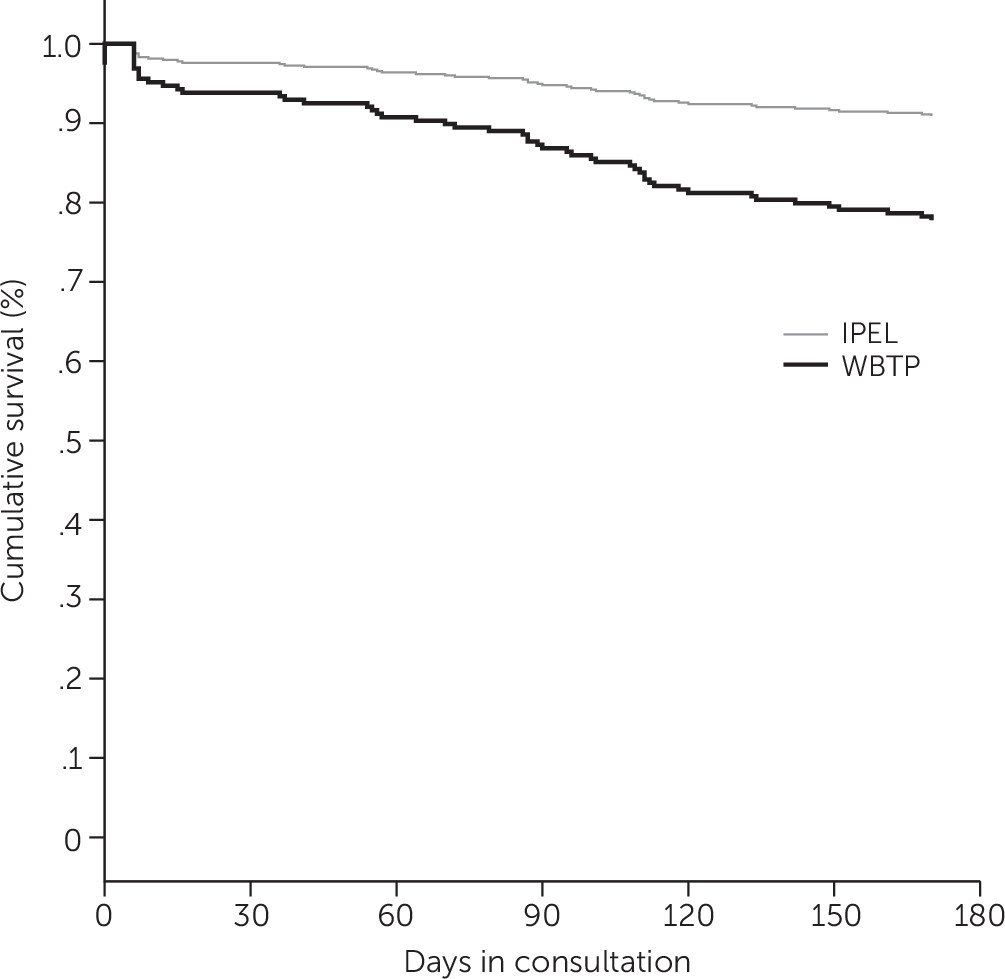

In the consultation stage, the mean±SD number of days in consultation was 91.58±53.97 for IPEL clinicians and 62.84±49.06 for WBTP clinicians. Among trainees who entered the consultation stage, 192 (91%) of the 211 IPEL trainees and 106 (77%) of the 138 WBTP trainees completed six months of consultation. The hazard of not completing the consultation stage was 2.63 times greater for WBTP clinicians than for IPEL clinicians (B=−.968, SE=.296, Wald=10.71, df=1, p<.001; hazard ratio=2.63, CI=1.00–4.70) (Figure 1).
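The hazard ratio and Wald statistic above can be recovered from B and its standard error. This sketch assumes that B is the coefficient for IPEL relative to WBTP, so the reported WBTP-versus-IPEL hazard ratio is exp(−B). The text does not state the sign convention.

```python
import math

B = -0.968  # Cox coefficient reported in the Results
SE = 0.296  # its standard error

# Assumption: B is coded for IPEL relative to WBTP, so the WBTP-versus-IPEL
# hazard ratio is exp(-B).
hazard_ratio = math.exp(-B)

# The Wald chi-square statistic (df = 1) is the squared z statistic.
wald = (B / SE) ** 2

if __name__ == "__main__":
    print(f"hazard ratio = {hazard_ratio:.2f}, Wald = {wald:.2f}")
```

This gives a hazard ratio of about 2.63 and a Wald statistic of about 10.7. Both match the reported values to within the rounding of B and SE.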

Discussion

This study demonstrated that Web-based training followed by consultation with trained peers resulted in CBT competency that was not inferior to that of clinicians trained by experts through an in-person workshop and consultation. In addition, the likelihood of reaching competence did not differ significantly between the two training phases. Consistent with previous research (7,10,11,17,39), knowledge acquisition through Web-based training did not differ from that achieved through an in-person workshop. Given the need for effective, sustainable, and efficient strategies for bringing EBPs to CMH systems, the noninferiority of the WBTP model, which required 8% of the instructor time needed for IPEL, suggests that it may offer a viable alternative to more expensive expert-dependent models and potentially fragile train-the-trainer approaches (25).

Consistent with prior research, WBTP clinicians were more likely than IPEL clinicians not to complete training. Possible reasons for the lower completion rate in WBTP include differences in comfort, buy-in, motivation, and accountability. These aspects of training may have been stronger for IPEL trainees, who completed the process with a cohort and had more frequent contact with and feedback from BCI instructors, than for WBTP trainees, who completed the Web-based training independently and then joined an already established group.

Several strategies could address these differences between training phases. WBTP trainees could begin training with a cohort of trainees within or across programs (connected via message boards or electronic mailing lists, such as Listserv). In addition, strategies that provide internal support and incentives, such as setting performance expectations that align completion of the WBTP phase with job responsibilities and providing pay increases for clinicians certified in an EBP, may help address buy-in and accountability (40). Certifying CBT supervisors could also increase their ability to support WBTP trainees by providing more frequent contact and feedback, as in the IPEL phase. Future research is needed to examine and address barriers to retention.

Of note, the WBTP retention rate observed in this study was higher than rates reported in similar studies using Web-based training followed by expert-led consultation (7,9–11,41). Because those studies typically included shorter consultation periods, expert-led consultation, and monetary incentives for participation, the WBTP retention rate observed here suggests that the current model is relatively effective at retaining clinicians. Furthermore, lower retention may be an acceptable tradeoff for the lower cost of WBTP compared with IPEL, given that WBTP required 8% of the instructor time to achieve noninferior competency outcomes.

Beyond requiring less expert time, WBTP may offer additional benefits as a sustainable resource and strategy for building expertise. Web-based training can be completed during free periods in the workday, which may be more feasible for clinicians than attending in-person workshops. The Web-based training content can also be made available in multiple languages (it was available in Spanish in this project), allowing more clinicians to engage. Finally, the IPEL-WBTP model may be more robust to turnover, because CBT expertise is distributed among the entire previously trained cohort rather than concentrated in a few individuals (as in some train-the-trainer models).

These findings support the use of WBTP to build capacity; however, several limitations should be considered when interpreting them. Randomization of clinicians and programs was not possible because the data came from the program evaluation of an ongoing initiative that uses this phased approach. Propensity scores were used to approximate randomization by balancing potentially confounding baseline variables. Nevertheless, the selected variables may not account for unmeasured between-group differences that influenced outcomes. In addition, because the knowledge quiz was developed specifically for the BCI, knowledge acquisition in this initiative is difficult to compare with that in other training programs. Other studies have also developed their own knowledge tests (7), suggesting that this may be common practice in the field; differences in the focus of each training initiative may necessitate tailored knowledge measures specific to the constructs deemed most important in a particular approach. Finally, BCI instructors were aware of the training approach when rating session recordings. High interrater reliability, however, suggests that instructors rated recordings consistently across both WBTP and IPEL.

Additional research is needed to compare this approach of IPEL followed by WBTP directly to other EBP capacity-building approaches, such as train-the-trainer models (25). Furthermore, although estimates of expended instructor time were calculated, the focus of this study was not on cost-effectiveness. Future studies should examine the cost-effectiveness of different capacity-building approaches, including costs associated with developing Web-based training as well as productivity losses related to time spent in training.

Conclusions

This study, as part of an ongoing CBT implementation effort, provides data on an effective and efficient phased approach to building capacity for an EBP in a CMH system and informs efforts to implement and increase use of EBPs within these systems. Given the need for feasible and affordable approaches to create sustainable resources and maintain EBP expertise over time, it is important to continue to identify strategies to build capacity for EBPs in large mental health systems.