Functional imaging studies are beginning to define the role of human limbic and paralimbic structures in emotion processing.1–12 Because most studies have focused on younger individuals, little is known about emotion-related neural circuitry of older persons. The first study examining emotion-evoked regional cerebral blood flow (rCBF) changes in elderly persons13 found that visually induced happiness, fear, and disgust were associated with increased rCBF in emotion-specific limbic structures. Sadness, however, was not examined. The main aim of the present study therefore was to examine regional brain activity during sad affect compared with neutral and happy affect induced by visual stimuli. The identification of the functional neuroanatomy associated with processing of sadness-inducing stimuli in healthy elderly subjects will serve as a basis to examine the systems level of the neurobiological changes occurring during aging14 that may influence phenomenology and pathophysiology of depression and depressive disorders in elderly individuals.

Because this is one of the first investigations of functional imaging of emotion processing in elderly persons, its purpose remained largely exploratory rather than hypothesis testing. We considered, however, that in younger adults the ventral medial prefrontal cortex has been consistently reported to be a region of the neural circuit associated with sad affect. Implication of the ventral medial prefrontal cortex (including the subgenual cingulate cortex) in emotion regulation spans from physiological sad emotion to clinical depression.8,15,16 The importance of the subgenual prefrontal cortex in clinical depression is confirmed by the role it plays in modulating serotonergic, noradrenergic, and dopaminergic neurotransmitter systems targeted by antidepressant drugs.17

METHODS

Subjects

Subjects were 17 healthy elderly volunteers (13 right-handed; 8 females and 9 males) recruited from the community. Average age was 65 years (SD=7.3; range 57–79), and mean education level was 14.5 years (SD=3.4; range 9–19). Subjects had no history of psychiatric or neurological disorder or alcohol/substance abuse, no current use of psychotropic medications, and no gross brain abnormalities on magnetic resonance (MR) scans. Psychiatric morbidity was ruled out by using the Structured Clinical Interview for DSM-IV (SCID)18 and the Present State Examination.19 Medical and psychiatric records were collected as well. Mean full-scale IQ was 116.7 (SD=16.0; range 93–150), verbal IQ was 110.4 (SD=12.0, range 93–130), and performance IQ was 120.7 (SD=17.6, range 83–150). Scores on the Benton Facial Recognition test long form (mean=46.5, SD=3.5, range 41–51) and on the Visual Form Discrimination test (mean=29.4, SD=2.4, range 26–32) were within the high end of the normative curve.20 All subjects gave written informed consent to protocols approved by the University of Iowa Human Subjects Institutional Review Board.

Activation Stimuli

This report is based on data collected as part of a larger project undertaken to examine the functional neuroanatomy associated with several emotional states in healthy individuals and in subjects with brain damage. This study focuses on sadness in elderly volunteers in comparison with emotionally neutral and happy conditions.

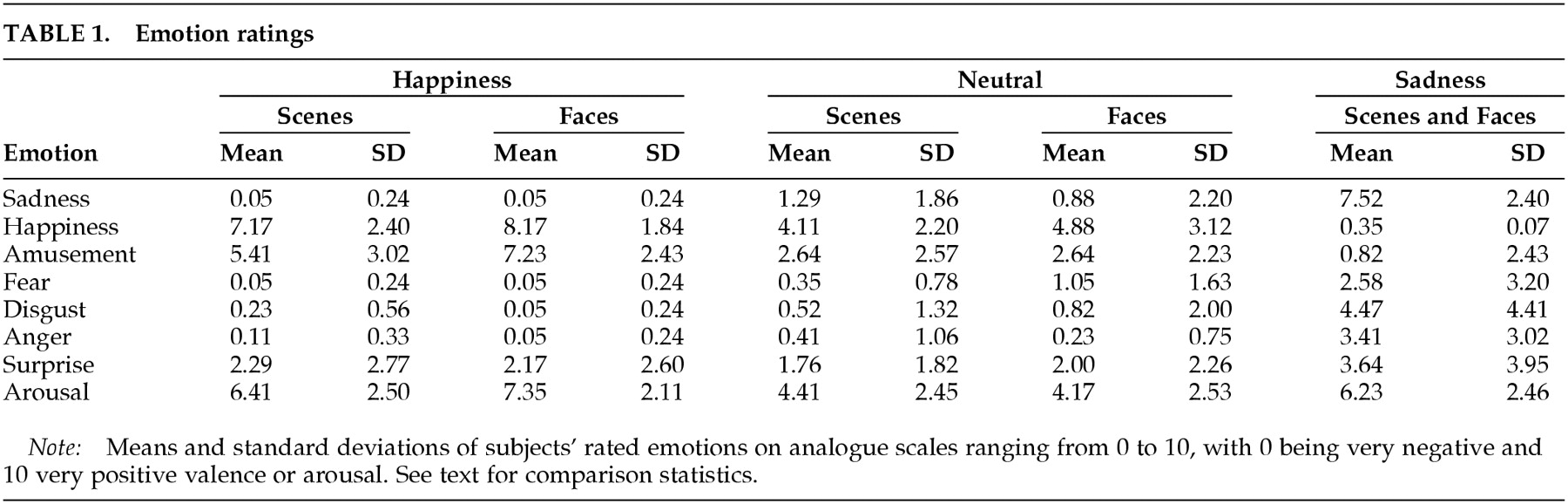

Emotion in the project was induced through visual stimuli containing either nonfamiliar human faces or objects and scenery. Subjects were told that they would be watching pictures with emotional content and that they should let the pictures influence their emotional state. Subjects were also made aware that they would be asked to rate their feelings (i.e., happiness, amusement, sadness, fear, disgust, anger, and surprise) and arousal intensity by using verbal analog scales ranging from 0 to 10 (0=absence of feeling, 10=very intense feeling).

Stimuli were chosen from a large database of standardized emotionally evocative color pictures producing highly reliable affective and psychophysiological responses.21 Full description of individual images is available from the authors on request. The emotionally neutral and happy stimuli included exclusively either human faces or objects, scenery, animals, or landscapes (“scenes”). Thus, in the neutral and happy sets of stimuli made up of “scenes,” no human faces were included. Sad affect, however, is a relatively difficult emotional state to induce in elderly persons by using stimuli including exclusively human faces (Paradiso and Robinson, unpublished data). Viewing of sad faces may induce compassion and empathy, but less so sadness. Hence we felt that images eliciting sadness should include a combination of scenes depicting filth, squalor, desperation, malnourishment, disease, and death with stimuli portraying the facial features of suffering individuals.

The sad condition was contrasted with the two happiness-laden conditions (only faces or only scenes), which showed appetitive satisfaction, beauty, and success, and with the two emotionally neutral conditions (only faces or only scenes).

Five sets of 18 complex images were selected on the basis of their cumulative normative valence score (scale: 0=very negative, 9=very positive) and of the response of a group of raters in Iowa (not included in the study). The mean valence was computed as the sum of all individual pictures' normative valence scores divided by the number of pictures in the set. The mean valences were as follows: sad sequence (faces combined with scenes), 2.57 (SD=0.66); neutral faces, 5.48 (SD=0.80); neutral scenes, 5.53 (SD=0.78); happy faces, 7.15 (SD=0.71); and happy scenes, 7.32 (SD=0.47). Mean arousal scores were computed as the sum of all individual pictures' normative arousal scores divided by the number of pictures in the set. The mean arousal scores were as follows: sad sequence, 4.98 (SD=0.90); neutral faces, 3.89 (SD=0.73); neutral scenes, 3.70 (SD=0.92); happy faces, 4.45 (SD=0.65); and happy scenes, 4.68 (SD=1.09).
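The set-level means and standard deviations reported above are simple descriptive statistics over the per-picture normative scores. A minimal sketch follows, assuming hypothetical scores (the individual picture ratings are not listed in this report) and assuming the sample SD with an n − 1 denominator, which the text does not specify. The function name `summarize_set` is illustrative only.

```python
from statistics import mean, stdev


def summarize_set(scores):
    """Return (mean, SD) of the normative scores for one picture set.

    The mean is the sum of the scores divided by the number of pictures,
    as described in the text. Using the sample SD (n - 1 denominator) is
    an assumption; the text does not say which SD was reported.
    """
    return mean(scores), stdev(scores)


# Hypothetical normative valence scores (0-9 scale) for a small set.
# These are illustrative values, not the study's picture ratings.
hypothetical_valence = [2.0, 3.0, 2.5, 3.5, 1.5, 2.5]

set_mean, set_sd = summarize_set(hypothetical_valence)
print(f"mean valence = {set_mean:.2f}, SD = {set_sd:.2f}")
```

The same function would apply unchanged to the normative arousal scores of each set.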

Subjects had not seen the images prior to the experiment day. Each set of stimuli was shown once and in random order on an 11×8-inch computer monitor positioned 14 inches from the subject. Images were displayed individually for 6 seconds (108 seconds total per image set). Images were 7.75 inches wide and 7.5 inches high and subtended visual angles of 29° vertically and 28° horizontally. Display of images began 10 seconds prior to the arrival of the oxygen-15 ([15O]) water bolus in the brain, assessed individually for each subject.22

PET Data Acquisition

We measured rCBF by using the bolus [15O] water method23 with a GE-4096 PLUS scanner. Fifteen slices (6.5 mm center to center) with an intrinsic in-plane resolution of 6.5 mm full width, half maximum, and a 10-cm axial field of view were acquired. Images were reconstructed by using a Butterworth filter (cutoff frequency=0.35 Nyquist). Cerebral blood flow was determined by using [15O] water (50 mCi per injection) and methods previously described.24 For each injection, arterial blood was sampled from time=0 (injection) to 100 seconds. Imaging was initiated at injection and consisted of 20 frames at 5 seconds per frame for a total of 100 seconds. The parametric (i.e., blood flow) image was created by using a 40-second summed image (the initial 40 seconds immediately after bolus transit) and the measured arterial input function. A preliminary injection was employed to establish stimulus timing.22 Ratings were collected while subjects were lying in the scanner 60 to 90 seconds after the end of each individual PET scan/emotion stimulation.
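The frame timing described above (20 frames of 5 seconds each, with a 40-second summed image following bolus transit) can be illustrated with a short sketch. It assumes that each frame is stored as a flat list of voxel counts and that the bolus arrival time is known in seconds after injection. The function names are hypothetical, and the conversion of the summed image to blood flow with the arterial input function is not shown.

```python
FRAME_SECONDS = 5     # from the text: 5 seconds per frame
N_FRAMES = 20         # from the text: 20 frames, 100 seconds total
WINDOW_SECONDS = 40   # from the text: 40-second summed image


def frames_in_window(arrival_s, frame_s=FRAME_SECONDS,
                     n_frames=N_FRAMES, window_s=WINDOW_SECONDS):
    """Indices of frames lying entirely inside the summation window.

    The window is [arrival_s, arrival_s + window_s]. Keeping only frames
    that fall fully inside it is an assumption of this sketch; an arrival
    time that is not a multiple of the frame length yields fewer frames.
    """
    return [i for i in range(n_frames)
            if i * frame_s >= arrival_s
            and (i + 1) * frame_s <= arrival_s + window_s]


def summed_image(frames, arrival_s):
    """Voxel-wise sum of the frames selected by frames_in_window."""
    idx = frames_in_window(arrival_s, n_frames=len(frames))
    n_vox = len(frames[0])
    return [sum(frames[i][v] for i in idx) for v in range(n_vox)]


# Hypothetical data: 20 identical frames of two voxels each.
frames = [[1, 2] for _ in range(N_FRAMES)]
selected = frames_in_window(arrival_s=10)
image = summed_image(frames, arrival_s=10)
print(selected, image)
```

With a bolus arrival at 10 seconds, the window spans 10 to 50 seconds and covers eight 5-second frames.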

MR Image Acquisition and Processing

MR images consisted of contiguous coronal slices (1.5 mm thick) acquired on a 1.5-tesla GE Signa scanner. Technical parameters of the MRI acquisition were as follows: spoiled gradient recall acquisition sequence, flip angle=40 degrees, TE=5 ms, TR=24 ms, number of excitations=2. MR images were analyzed with locally developed software (BRAINS).25 All brains were realigned parallel to the anterior commissure/posterior commissure (AC-PC) line and the interhemispheric fissure to ensure comparability of head position across subjects. Alignment also placed the images in standard Talairach space.26 Images from multiple subjects were co-registered and resliced in three orthogonal planes to produce a three-dimensional data set that was used for visualization and analysis.

PET Image Processing

Co-registration of each individual's PET and MR images utilized a two-stage process using an initial coarse fit based on surface matching of MRI and PET images and then a variance minimization program using surface fit data as input.27 Brain landmarks identified on MRI were used to place each co-registered image into standardized coordinate space. An 18-mm Hanning filter was applied to the PET images.25

Statistical Analysis

Statistical analysis of the blood flow images utilized a modification of the Montreal method.28 Within-subject subtractions were performed between the sad condition and each of the neutral conditions (faces and scenes) and each of the happy conditions (faces and scenes). The faces and scenes neutral conditions were also contrasted with each other. This was followed by across-subject averaging of the subtraction images and computation of voxel-by-voxel t-tests of blood flow differences.

Subjective ratings were analyzed by using means and standard deviations and overall and paired F-tests. In such analyses, conditions are measured relative to one another and are referred to as activations.
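The within-subject subtraction, across-subject averaging, and voxel-by-voxel t-test used for the blood flow images can be illustrated with a plain paired t statistic computed at each voxel. This is a generic sketch only: it does not reproduce the modified Montreal method used in the study, the function name is hypothetical, and the input values are invented. Each image is assumed to be a flat list of blood flow values in a common coordinate space.

```python
from math import sqrt
from statistics import mean, stdev


def voxelwise_paired_t(cond_a, cond_b):
    """Paired t value at each voxel for condition A minus condition B.

    cond_a, cond_b: one flat image (list of floats) per subject, in the
    same subject order and with the same number of voxels.
    Returns one t value per voxel (n - 1 degrees of freedom).
    """
    n = len(cond_a)
    n_vox = len(cond_a[0])
    t_map = []
    for v in range(n_vox):
        # Within-subject subtraction at this voxel.
        diffs = [cond_a[s][v] - cond_b[s][v] for s in range(n)]
        sd = stdev(diffs)
        if sd == 0:
            # t is undefined when all differences are identical.
            t_map.append(float("nan"))
        else:
            # Across-subject mean difference over its standard error.
            t_map.append(mean(diffs) / (sd / sqrt(n)))
    return t_map


# Hypothetical blood flow values: 3 subjects, 2 voxels per image.
sad = [[3.0, 1.0], [5.0, 1.0], [4.0, 2.0]]
neutral = [[1.0, 1.0], [2.0, 2.0], [1.0, 1.0]]
t_values = voxelwise_paired_t(sad, neutral)
print(t_values)
```

In this toy example the first voxel shows a consistent increase across subjects and the second shows none, so only the first yields a large t value.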

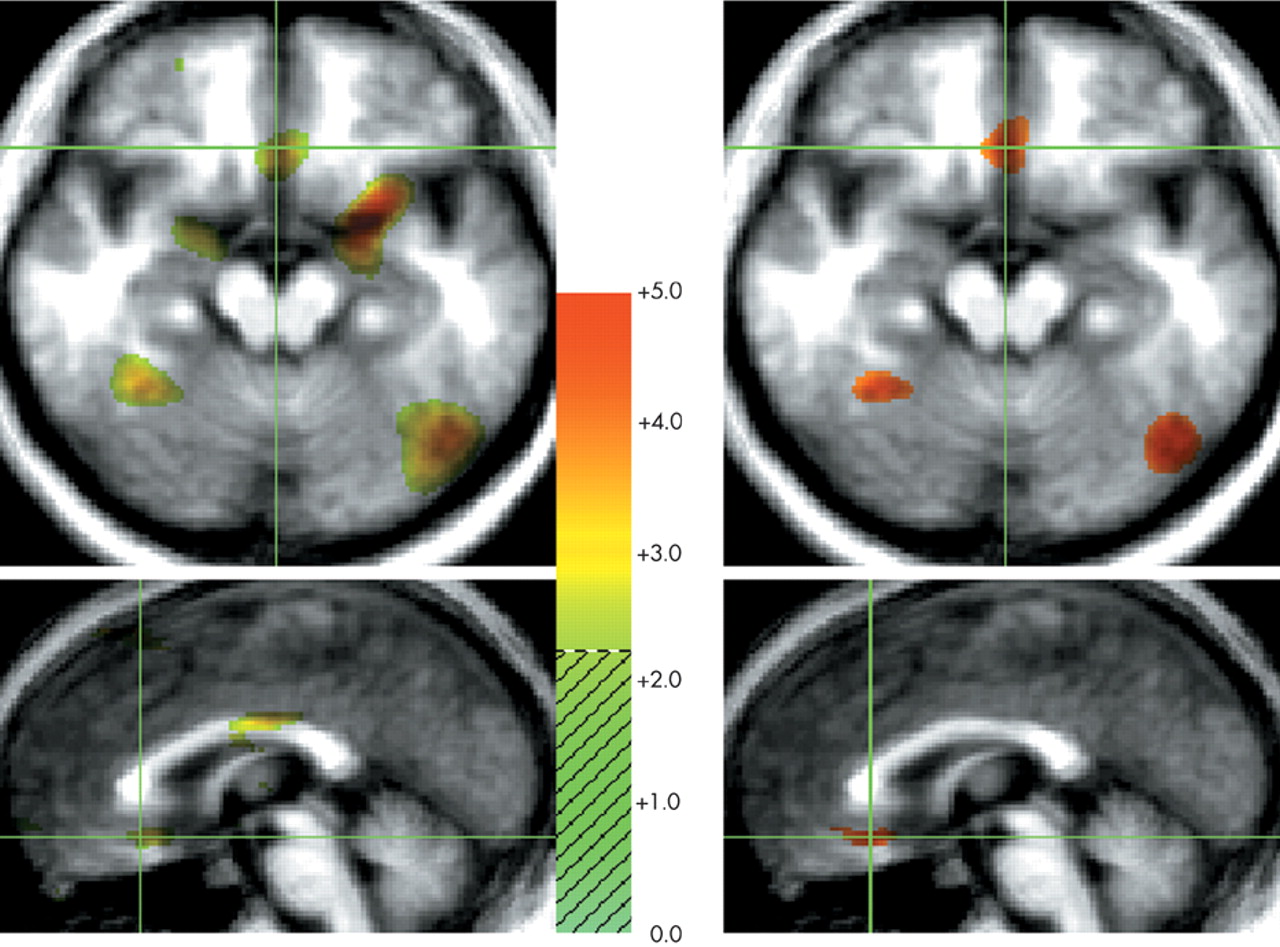

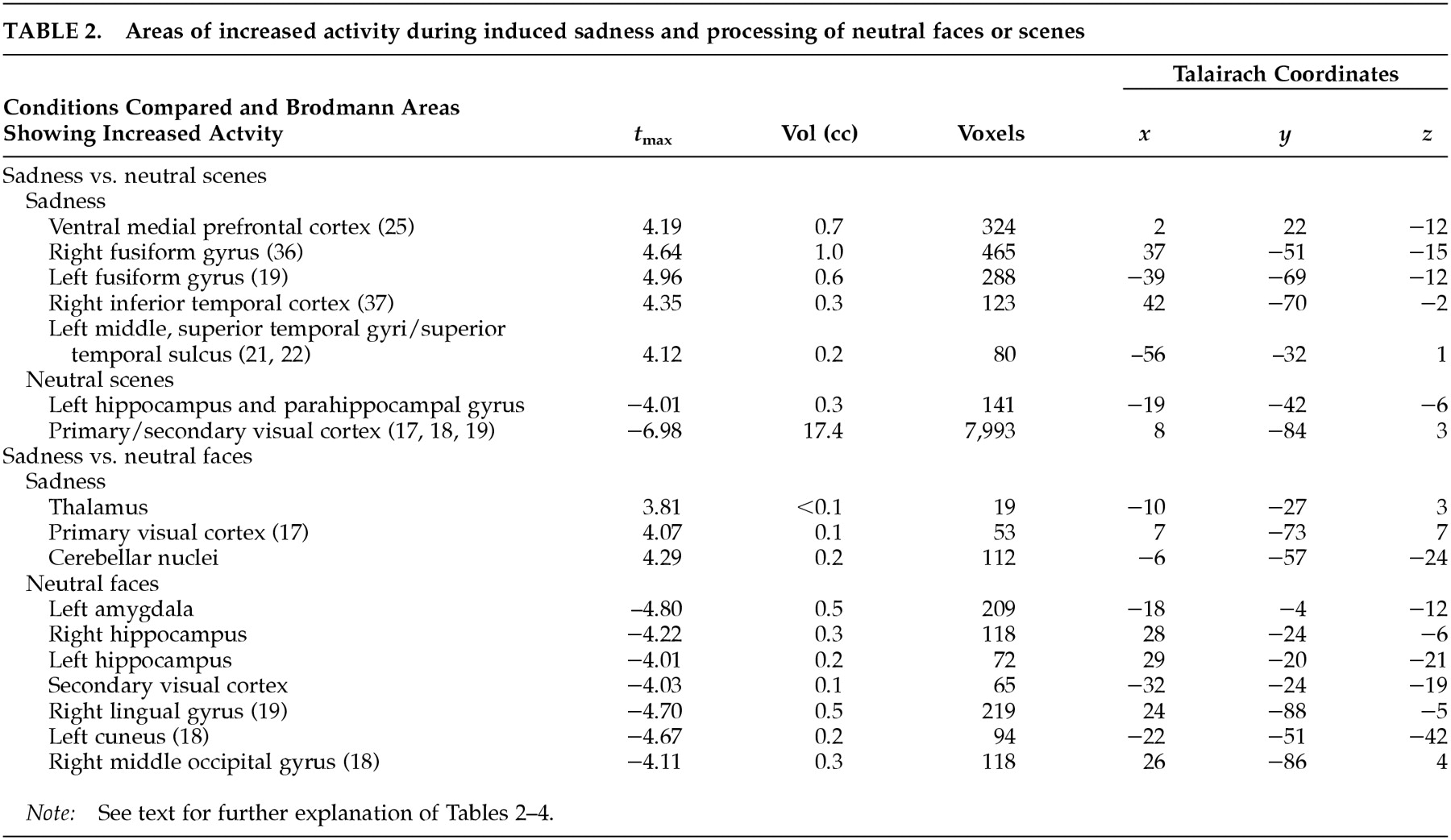

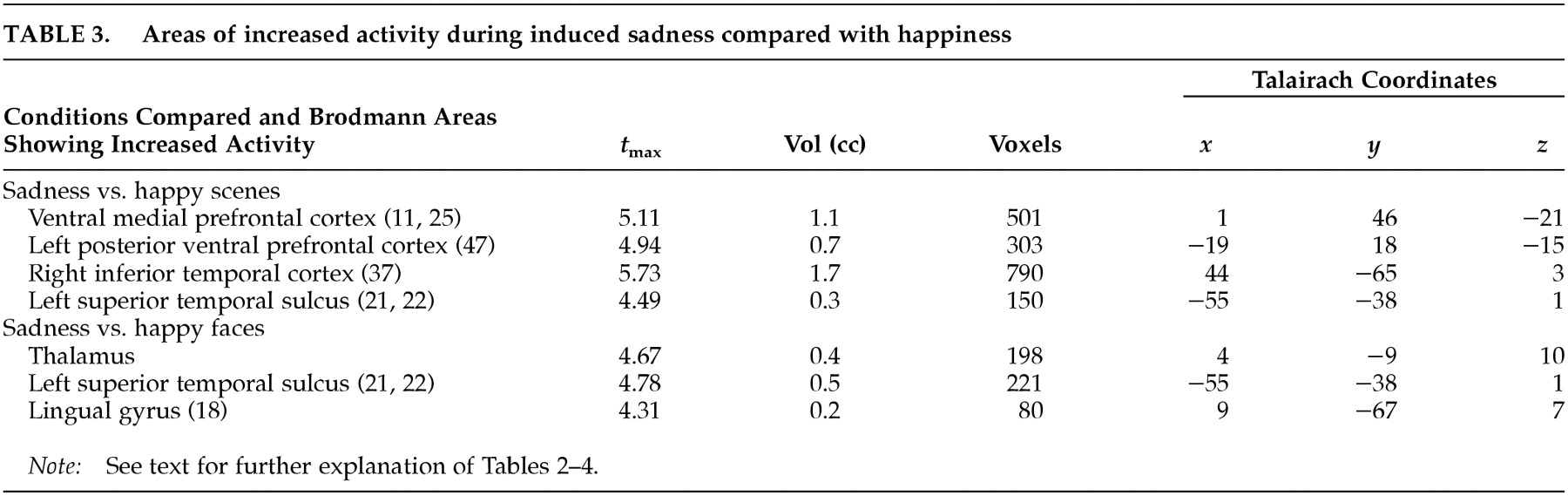

DISCUSSION

This study examined the functional neuroanatomy associated with a state of sadness induced through visual stimuli in healthy elderly individuals. There were two major findings in this study. First, a visually induced state of sadness was associated with increased activity in the ventral medial prefrontal cortex and in the thalamus. These activations were not dependent on reported overall arousal and were specific for the sad affect. Second, limbic nodes associated with induced sadness differed depending on the content (faces or “scenes”) of the comparison condition regardless of whether this content was neutral or happy. Structures of the ventral medial prefrontal cortex were associated with sad affect when the sad condition was contrasted with both neutral and happiness-laden scenes conditions. On the other hand, sad affect was associated with increased thalamic activity when contrasted with both happy and neutral faces. These data show that variations in the results of functional imaging studies of emotion may be moderated by the specific content of the control stimuli, raising the question of the reliability of imagery-based emotion induction studies. As an alternative, recalling past episodes of sad events is a powerful way to induce sad affect. However, a control condition balanced for imagery of human faces and objects may be difficult to achieve using methods based on personal memories.

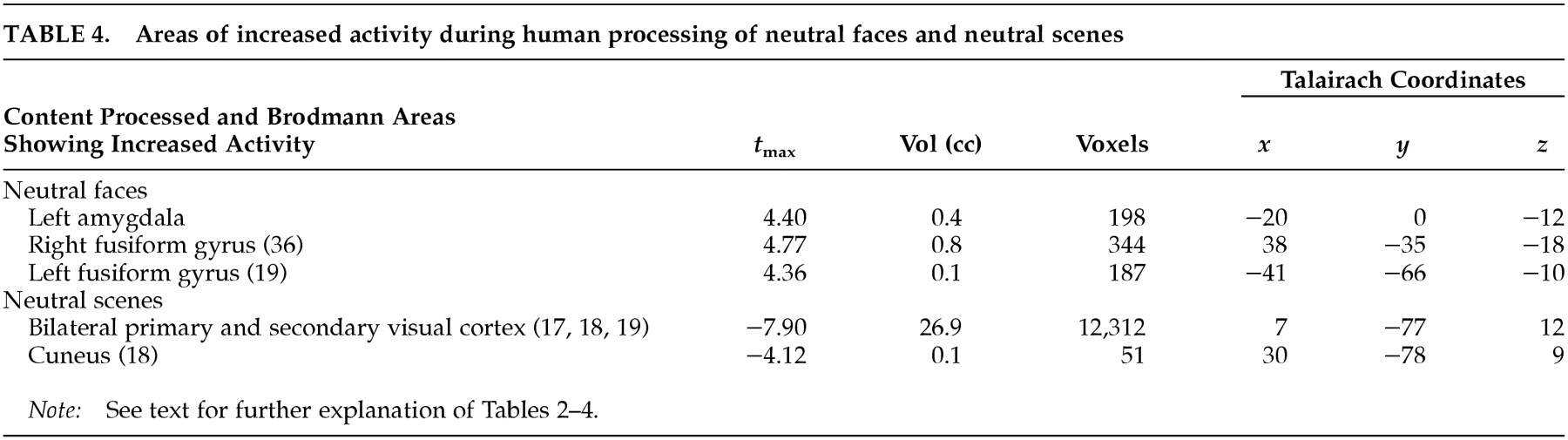

It is clear that in the present study, the regions related to sad emotion differed depending on whether the control condition contained faces or objects/scenes. What is the neurobiological basis for this phenomenon? Brain systems associated with processing of emotions share brain regions with systems processing human faces.

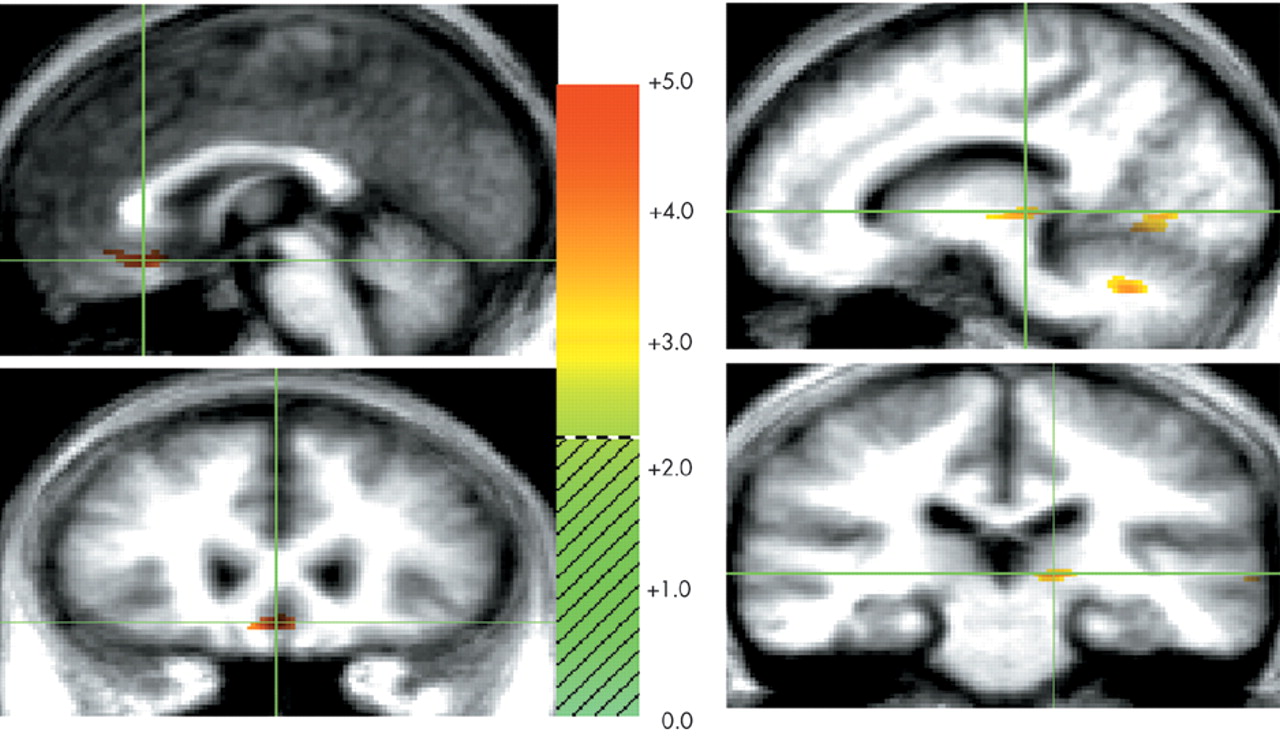

29 When contrasted with neutral and happiness-laden conditions containing either exclusively scenes or exclusively human faces, the neural activity associated with processing either faces or scenes components in the sad condition was subtracted out. For instance, medial portions of the ventral prefrontal cortex were engaged during sad affect in comparison with scenes conditions but not in comparison with faces conditions. To further examine this issue, we analyzed the neutral faces versus neutral scenes comparison at a significance threshold of

t=2.20 and found increased activity in the ventral prefrontal cortex during processing of neutral faces (

Figure 2A,B). However, only the comparison of the sad condition with the neutral and happiness-laden

scenes conditions yielded a large enough change in activity in the ventral medial prefrontal cortex to be detected at our laboratory's customary conservative

t-value of 3.61. The activity generated in the ventral prefrontal cortex during processing of either neutral or happy faces

30 explains the failure to show differential ventral prefrontal activity during the sad condition when compared with faces stimuli.

Hence, the ventral prefrontal cortex may respond to both emotionally neutral faces and sadness-charged faces, but the larger increase in activity may be achieved as a result of processing of predominantly face-delivered sad stimuli. The ventral portion of the human prefrontal cortex may function as a convergence zone for stimuli carrying social significance31 (e.g., conspecific faces30) and emotional introspection.3,32 Studies of patients with ventral prefrontal damage and socially inappropriate behaviors are consistent with this observation. Individuals with ventral prefrontal lesions show impairment in recognition of faces expressing emotion and inability to evaluate subjective emotional states.33,34

The neuronal circuitry delineated in the present study is consistent with the temporal-thalamic-ventral prefrontal cortex connectivity postulated to be the anatomical basis of stimulus-reinforcement association and extinction in the visual domain.33 The ventral prefrontal region shares connections with the pivotal limbic nodes of the thalamus. Functional imaging studies have shown that the thalamus is one of the limbic regions associated with sad mood induced by visual stimuli and recall of a personal event.5,35 Similar results were obtained studying emotional responses to negative visual stimuli in the elderly using PET.13 The present study is consistent with a role for the thalamus in the experiential aspects of emotion.6 Further studies should aim at understanding the role of individual thalamic nuclei in different emotion generation modalities and valence.

Several regions in striate and extrastriate visual cortex were associated with sad affect in the present study. The inferior temporal cortex and the superior temporal sulcus send direct and indirect (via thalamus) efferents to the ventral prefrontal cortex.33 These fibers carry visual information. Recent studies have shown that extrastriate cortex is more extensively activated by emotional36 than neutral facial expressions,9 therefore suggesting its participation in emotion processing.3,37 In the present study, differential extrastriate cortex activity was a finding in the sad/happy contrasts, making a relationship to arousal unlikely.

As a footnote to that observation, the role of arousal in the present study and in functional neuroimaging studies of emotion activation is an important one to discuss. Whereas in this study subjects' reported arousal during the happy scenes and during sadness almost overlapped, during the happy faces condition arousal was one unit higher (on a 0–10 scale) than during sadness. This difference did not reach statistical significance, and one should question whether it has any physiological importance. More broadly, having values of arousal that are fully equal across emotions may be less crucial if we consider that functional neuroimaging of emotion usually operates under the assumption that arousal is an independent factor varying linearly with valence. The corollaries of this assumption of linearity are that arousal can be fully controlled for through experimental design and that arousal components attached to different emotions (e.g., anger and disgust), albeit of the same values, have overlapping neural functional correlates. This assumption and its corollaries may create a false perception of precision.

Consistent with the results of other negative-emotion induction studies,13 sadness in elderly individuals did not show increased rCBF in the amygdala. Changes in amygdala activity are usually reported during externally induced negative emotions in younger people.1–4 Single-cell recordings29 and human neuroimaging studies38 have demonstrated increased amygdalar activity in association with face processing. Hence it is plausible that here the differential activity in the amygdala is related to stimulus content (i.e., human faces) rather than affect. Hippocampal and parahippocampal activity found in association with both neutral conditions compared with the emotional condition is perhaps explained by the encoding of new perceptual information (in the case of the parahippocampal gyrus related to scenes) at the time of PET imaging.39 Midline cerebellar activity during emotion in elderly subjects was present in both our previous13 and present studies, which required no movement for task completion. Increased cerebellar activity has been reported in emotion studies involving the visual domain.4,40 Unraveling the specific role of the cerebellum in emotion requires further research.

Whereas the intended emotion of sadness was reliably induced, it should be noted that some subjects reported other emotions in response to sad stimuli as well. As can be observed in Table 1, fear and disgust were reported and are among the emotions that may have bearings on the interpretation of the data. This phenomenon may be due to the nature of our stimuli. However, we gather from our clinical and research experience that human emotions are very often not univocal. If emotional states are carefully explored and assessed, one is likely to detect the complexity of the human affects. Precision may be just an artifact introduced by the observer at the expense of the complexity of human experience.

In summary, sad affect elicited through complex visual stimuli was associated with both ventral prefrontal cortex and thalamic activity, the region activated depending on the content rather than the affect of the comparison condition. This finding suggests that the ventral medial prefrontal cortex is an important convergence zone for responses to faces and to sad affect. Whereas the thalamus was not part of our original a priori hypothesis, many studies since our study was designed have found thalamic activation during negative affect in samples of younger subjects.