Since most new research and treatments for dementia focus on slowing the progression of Alzheimer’s disease (AD)[1,2], it is critical to identify who needs intensive diagnostic evaluation so that early treatment can delay the disease’s progression[3,4]. People with mild cognitive impairment (MCI), characterized by marked memory impairment without disorientation, confusion, or abnormal general cognitive functioning, appear to develop AD at a rate of 10%–15% a year[5–7]. Research concerning MCI, both as a distinct diagnostic entity and as a precursor to AD[6–11], suggests that instruments focused on measuring MCI would provide useful screening information for decisions about full diagnostic evaluations for AD. Brief or automated neuropsychological tests may be the preliminary step best suited to determining the need for such evaluations, which require costly neuropsychological, biochemical, or neuroimaging techniques[12].

Although memory deficits have been found to be the most reliable single predictor[5,10,13–15], studies indicate that combining tests that sample different cognitive domains significantly enhances the predictive validity of a test battery, because the initial cognitive deficits associated with AD vary[15–19]. Current methods of detection are costly and are often deferred until later in the disease process, when interventions to delay AD are likely to be less effective. Therefore, an effective screening device for MCI should be cost-efficient and should incorporate tests that assess multiple cognitive domains. In this article, we assess the reliability and validity of a touch-screen test battery that meets these goals and is administered, scored, and interpreted by computer: the Computer-Administered Neuropsychological Screen for Mild Cognitive Impairment (CANS-MCI).

METHODS

Subjects

A community sample of 310 elderly people was recruited through senior centers, American Legion halls, and retirement homes in four counties of Washington State. Exclusion criteria were non-English language, significant hand tremor, inability to remain seated for 45 minutes, recent surgery, cognitive side effects of medications, indications of recent alcohol abuse, or inadequate visual acuity, reading ability, hearing, or dominant-hand agility. Most subjects were Caucasian (86%) and female (65%), and 76% had at least some college education. Ages ranged from 51 to 93 years, and 63% of subjects were between 60 and 80 years of age.

Neuropsychological Measures

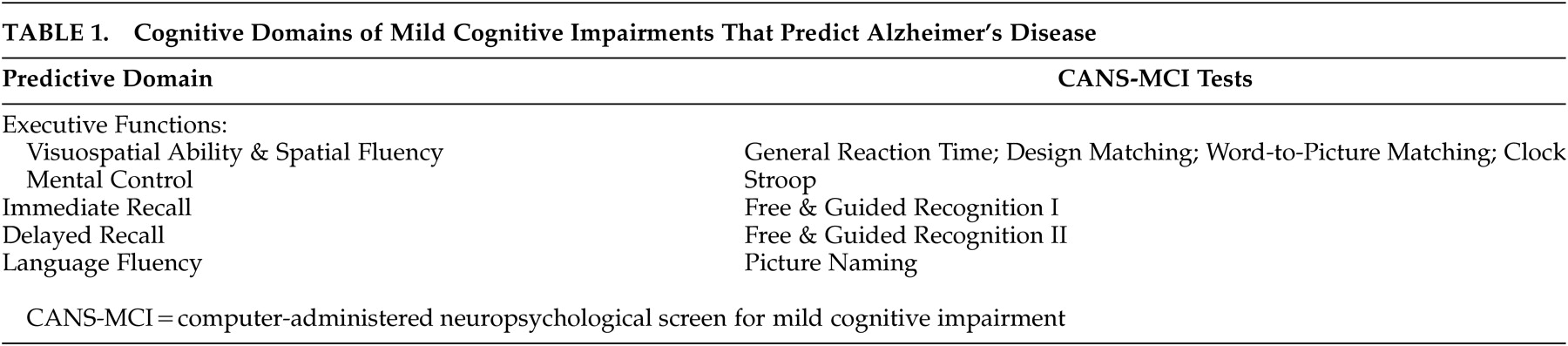

The CANS-MCI tests were based on the cognitive domains found to be predictive of AD in previous neuropsychological research: visuospatial ability and spatial fluency[4,15,17,20–22]; executive mental control[1,23,24]; immediate and delayed recall[4,5,13–15,17,18,20–27]; and language fluency[4,5,17,18,20–22,25,27] (Table 1). The usability of the CANS-MCI has been evaluated previously[28]. It can be fully self-administered, regardless of computer experience, even by elderly people with MCI, and it does not cause significant anxiety-based cognitive interference during testing. After a researcher enters a subject identifier and adjusts the volume, the tests, including any assistance needed, are administered by a computer with external speakers and a touch screen.

The CANS-MCI includes tests designed to measure executive function, language fluency, and memory (Table 1). The executive function tests sample two cognitive abilities: mental control and spatial abilities. Mental control is measured with a Stroop test, on which the user matches the ink color in which a color name is printed rather than the color the word names. Spatial abilities are tested with a general reaction time test of minimal cognitive complexity (on which the user touches ascending numbers presented on a jumbled display), design-letter matching, word-to-picture matching, and a clock hand placement test (on which the user touches the hour and minute positions for a series of digital times). Memory for 20 object names is measured with immediate and delayed free and guided recognition tests. Language fluency is tested with a picture naming test, on which each picture is presented with four 2-letter word beginnings.

Unless specifically described as latency measures, the CANS-MCI and conventional neuropsychological tests in this study measure response accuracy. All latency and accuracy measures on the CANS-MCI are scalable scores.

The following conventional neuropsychological tests were used to assess the validity of the CANS-MCI tests: the Wechsler Memory Scale-Revised (WMS-R) Logical Memory Immediate and Delayed subtests (LMS-I and LMS-II)[29]; the Mattis Dementia Rating Scale (DRS)[30]; and the Wechsler Adult Intelligence Scale Digit Symbol test[31]. LMS-II scores were used to classify participants as having normal cognitive functioning or MCI; the LMS-II has previously been found to differentiate normal from impaired cognitive functioning[4,5,17].

Procedures

Each testing session lasted approximately 1 hour, of which the CANS-MCI tests took approximately half an hour. The test order was designed to minimize intertest interference: Digit Symbol, DRS, LMS-I, CANS-MCI, LMS-II. During the first session, testing began after the procedures were explained and written informed consent was obtained. Subjects were tested at Time 1, one month later (Time 2), and six months later (Time 3). This study was approved by the University of Washington’s Human Subjects Committee and the Western Institutional Review Board.

Data Analyses

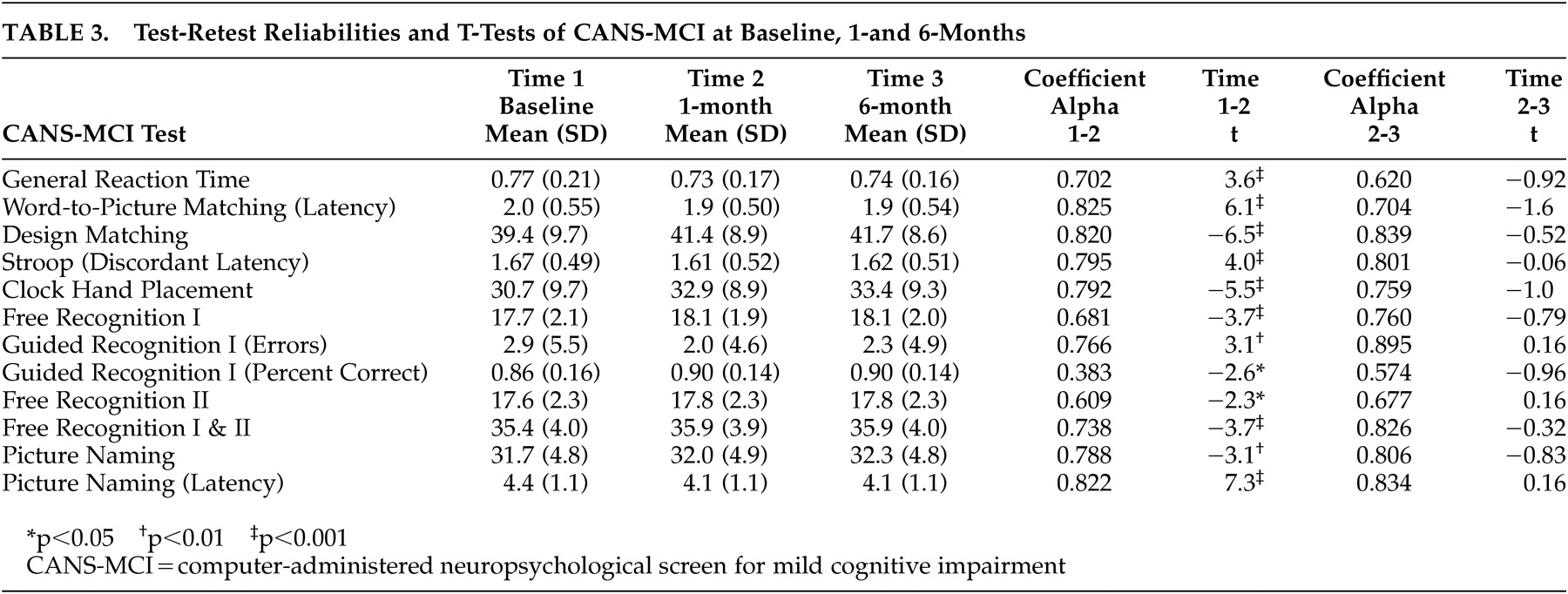

Coefficient alpha was calculated to assess the internal consistency of the scale items. Pearson correlations and paired-sample t tests were used to evaluate the test-retest reliability of the CANS-MCI tests. Concurrent validity was evaluated with Pearson correlations between the CANS-MCI tests and the conventional neuropsychological tests administered during the same sessions. To assess diagnostic validity, independent-sample t tests were used to compare subjects whose LMS-II scores fell in the lowest 10% with the remaining 90% of subjects. We conducted exploratory and confirmatory factor analyses on separate subsets of the data to determine whether our tests measure the expected cognitive domains.
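For readers less familiar with coefficient alpha, the following minimal Python sketch implements the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), using sample variances. The function name `cronbach_alpha` and the one-row-per-subject data layout are illustrative assumptions; the article does not state which software or variance estimator was used.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Coefficient (Cronbach's) alpha.

    item_scores: one row per subject, each row holding that subject's k item scores.
    """
    k = len(item_scores[0])
    if k < 2 or len(item_scores) < 2:
        raise ValueError("need at least two items and two subjects")
    # Sample variance of each item across subjects
    item_vars = [variance([row[j] for row in item_scores]) for j in range(k)]
    # Sample variance of the subjects' total scores
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical item scores for three subjects on two items
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))
```

Alpha approaches 1 when items covary strongly relative to their individual variances, which is why it is read as an index of internal consistency.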

Discussion

The results of these initial analyses indicate that the CANS-MCI is a user-friendly, reliable, and valid instrument that measures several cognitive domains. The CANS-MCI computer interactions were designed for a high degree of self-administration usability. Our results show high internal consistency and high test-retest reliability, comparable to those of other validated instruments[30–33]. One score, Guidance Percent Correct, was not highly reliable and was therefore excluded from further analyses. As the paired t tests show, all test scores are more consistent from Time 2 to Time 3 than from Time 1 to Time 2. Even the memory tests are more stable once subjects are familiar with the tests, despite the much longer interval between Time 2 and Time 3. All CANS-MCI test scores improve after one previous exposure, which suggests that familiarity with the testing procedures makes results more dependable. It would therefore be advisable to have a patient take the test once for practice and to use the second testing as the baseline measure. Recommendations for more extensive evaluation should be based on multiple testing sessions, at least several months apart.

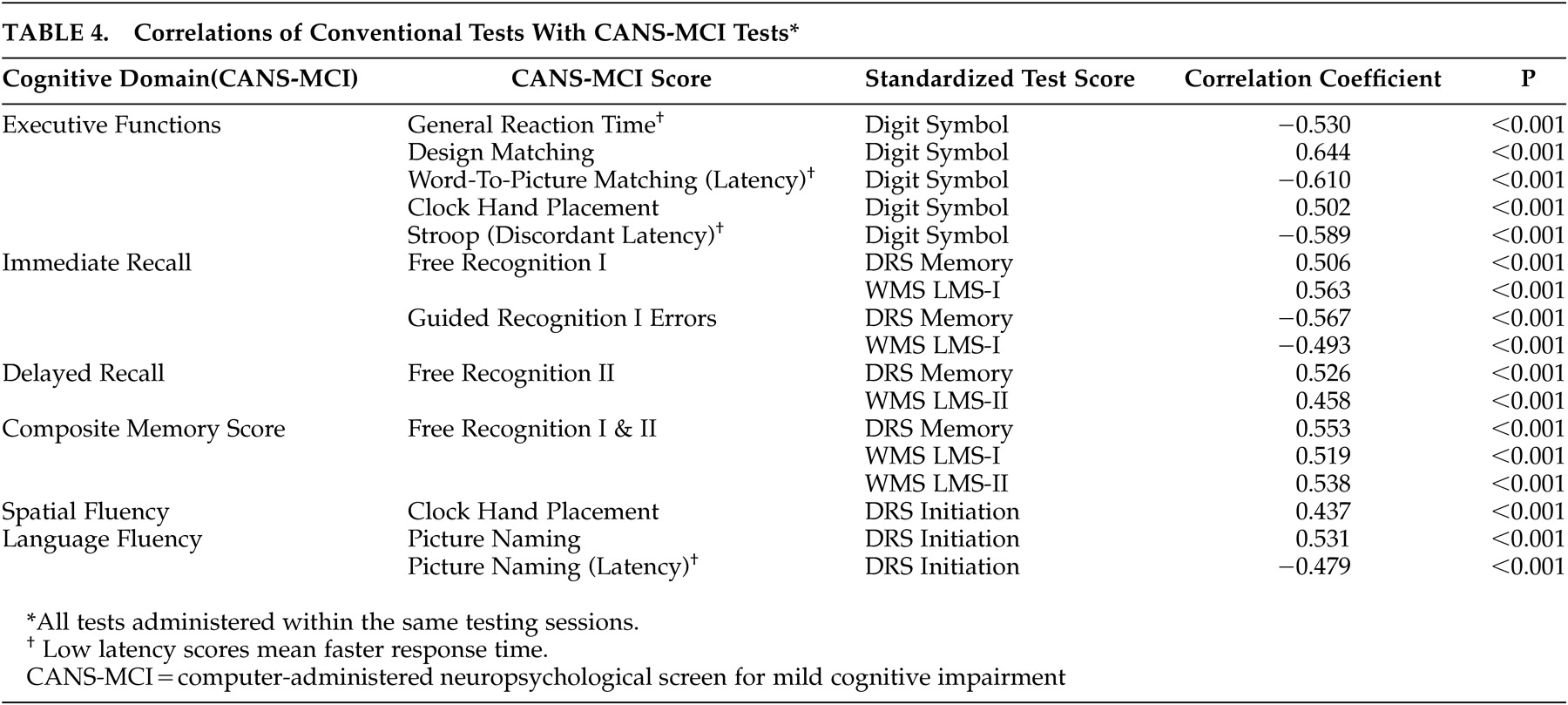

The CANS-MCI subtests correlate with the LMS-I & II, Digit Symbol and DRS highly enough to demonstrate concurrent validity. However, the moderate correlations indicate some differences, which might be attributed to the different ranges of ability the tests were designed to measure. For example, the DRS Initiation Scale is less discriminating at higher ability levels

30 than are the CANS-MCI Clock Hand Placement and Picture Naming tests with which is it best correlated; 42% of the subjects obtained a perfect DRS Initiation score, while 4.8% and .5% received perfect scores on the Clock Hand Placement and Naming Accuracy tests, respectively. The LMS-II is less discriminating than the CANS-MCI Free Recognition II score at the lower levels of functioning; 20 (7%) subjects received a LMS-II score of 0 and 1 (0.4%) of the subjects received the lowest score on the Free Recognition II. On the other hand, the LMS-II appears to be more discriminating at the higher levels of functioning. No subjects received a perfect LMS-II score, while 19% of subjects got the highest score possible on Free Recognition II. The CANS-MCI Free Recognition II test is substantially different from the LMS-II free recall test because free recall without prompting (used in the LMS-II) is more difficult than guided recall. Like other tests that guide learning,

34 the CANS-MCI Free Recognition test may enhance “deep semantic processing,”

13 allowing trace memories to improve its scores while not improving test scores on unprompted recall.

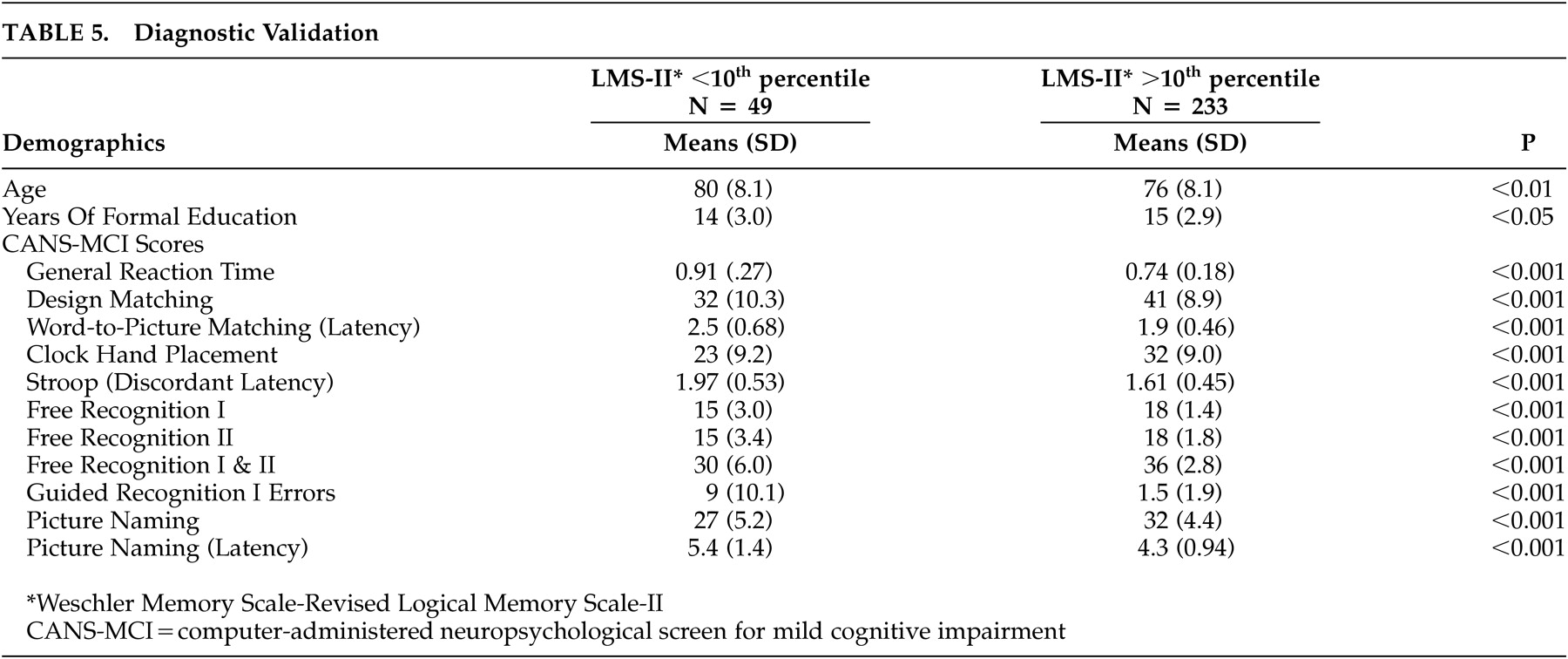

35Diagnostic validity is an important criterion for cognitive screening effectiveness. T tests based upon the LMS-II diagnostic criterion show that the CANS-MCI tests differentiate between the memory-impaired and unimpaired elderly. Although the reference measure (LMS-II) is a limited criterion of diagnostic validity, the corresponding differences on all the CANS-MCI tests suggest that the CANS-MCI has enough diagnostic validity to pursue more extensive analyses. The CANS-MCI also measures changes over time, a feature that seems likely to enhance its validity when assessing highly educated people who have greater cognitive reserve. Given that reserve capacity can mask the progression of AD,

36 comparisons of highly educated persons against their own previous scores might detect the insidious progression of predementia changes long before the diagnosis of MCI or AD would otherwise be reached. The extent to which sensitivity/specificity and longitudinal analyses confirm this dimension of validity is currently being studied, using full neuropsychological evaluations as the criterion standard.
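The diagnostic-validity comparison (subjects in the lowest 10% of LMS-II scores versus everyone else) could be approximated roughly as follows. Several choices here are assumptions, not details given in the article: the decile cut uses `statistics.quantiles` with its default method, ties at the cutoff are counted as impaired, and the test is Student's pooled-variance t rather than Welch's. All function names are hypothetical.

```python
import math
from statistics import mean, quantiles, variance


def split_at_10th_percentile(criterion):
    """Flag subjects whose criterion (e.g., LMS-II) score is at or below the 10th percentile."""
    cutoff = quantiles(criterion, n=10)[0]            # first of the nine decile cut points
    flags = [score <= cutoff for score in criterion]  # ties at the cutoff count as impaired
    return flags, cutoff


def pooled_t(group1, group2):
    """Student's independent-samples t (pooled variance); returns (t, df)."""
    n1, n2 = len(group1), len(group2)
    pooled_var = ((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / (n1 + n2 - 2)
    t = (mean(group1) - mean(group2)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2


def diagnostic_t(criterion, screen_scores):
    """Compare a screening score between the unimpaired ~90% and the impaired ~10%."""
    flags, _ = split_at_10th_percentile(criterion)
    impaired = [s for s, low in zip(screen_scores, flags) if low]
    unimpaired = [s for s, low in zip(screen_scores, flags) if not low]
    if len(impaired) < 2 or len(unimpaired) < 2:
        raise ValueError("each group needs at least two subjects")
    return pooled_t(unimpaired, impaired)  # positive t: unimpaired group scores higher


# Hypothetical data: 20 subjects' criterion scores and a screening score
criterion = list(range(1, 21))
screen = [2 * c for c in criterion]
t, df = diagnostic_t(criterion, screen)
print(f"t({df}) = {t:.2f}")
```

Because the impaired group is defined by the same memory test used as the criterion, a significant t shows agreement with the LMS-II, not accuracy against an independent diagnosis, which is the limitation the text notes.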

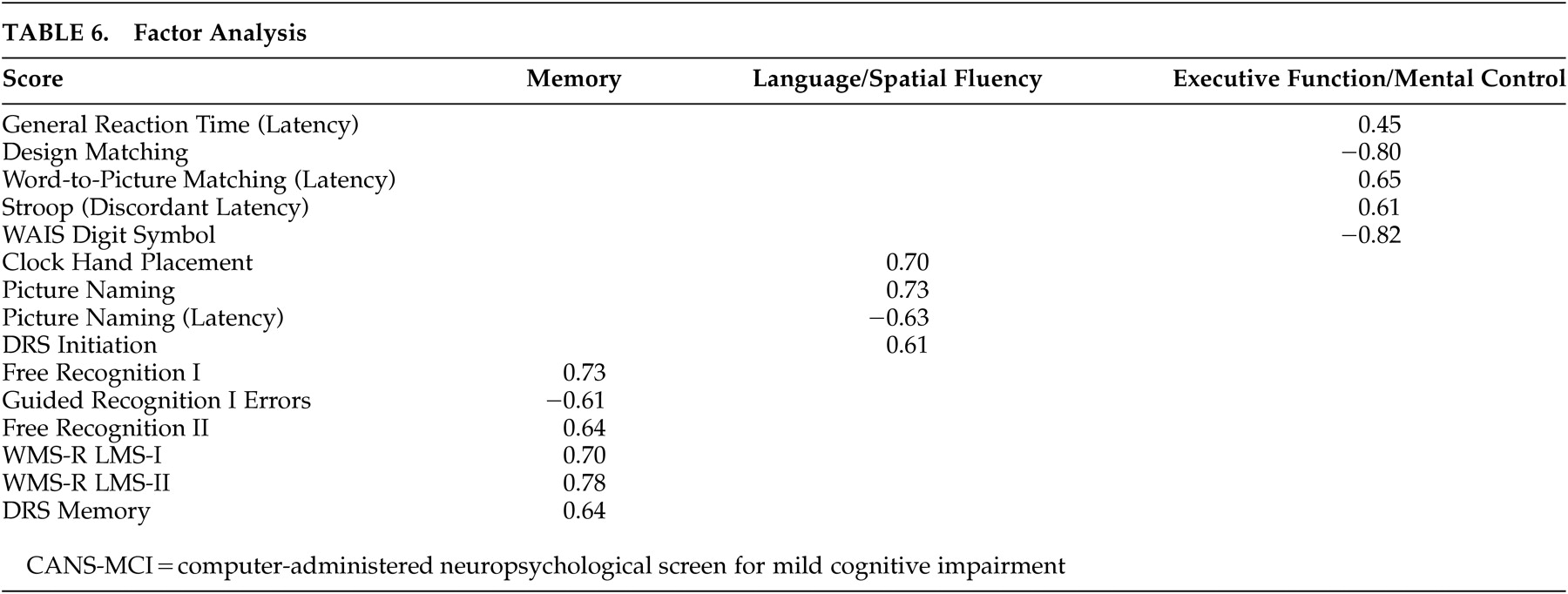

The factor analyses indicated that the CANS-MCI tests load on three main factors: memory, language/spatial fluency, and executive function/mental control. These factors are similar to the three aspects of cognitive performance measured by the Consortium to Establish a Registry for Alzheimer’s Disease (CERAD) test batteries[37]. The CANS-MCI, LMS, and DRS tests that measure immediate acquisition or delayed retention load on the memory factor. The language/spatial fluency factor includes many tests that measure retrieval of words from semantic memory (or, in the case of the Clock Hand Placement test, retrieval of spatial representations of numbers). The General Reaction Time, Design Matching, Word-to-Picture Matching, Stroop (Discordant Latency), and Digit Symbol tests make up the executive function/mental control factor, which appears to represent rapid attention switching and mental control. The memory, language/spatial fluency, and executive function/mental control factors were highly correlated, as would be expected of cognitive functions that are all associated with MCI and/or AD. Although highly correlated, they appear to be distinct factors.

Contrary to our hypothesis, the Clock Hand Placement test did not load on the executive function/mental control factor, although in pairwise analyses (Table 4) it correlated most highly with the Digit Symbol test, a conventional measure of several executive functioning abilities that is highly predictive of AD[21]. However, the Clock Hand Placement test also correlated moderately with the DRS Initiation Scale (r = 0.437), which involves naming items found in a grocery store. Both the Clock Hand Placement and DRS Initiation tests involve mental visualization translated into a response, and both load on the language/spatial fluency factor. The test that loads most heavily on language/spatial fluency (Picture Naming) also requires visualizing an answer before responding.

Computerized tests have several advantages. Because administration and scoring are consistent, they can reduce examiner errors in administration and scoring[12,38] and reduce the examiner’s influence on patient responses[33]. Because such tests are self-administered and require no training to administer, they take little staff time, whereas other cognitive screening tests, both brief and computerized, still take significant staff time to administer and score. Medications intended to slow memory decline are widely used and are expected to be most effective when started early in the course of dementia, so it will become increasingly important to identify cognitive impairment early in the process of decline[3,4,39]. The CANS-MCI is also amenable to translation, through automated replacement of text, pictures, and audio segments based on the choice of a language and a specific location (e.g., English/England) at the beginning of the testing program. However, the CANS-MCI was created for U.S. English speakers, and versions for other populations have not yet been validated.

There are several limitations to the study. A community sample increases the chance of false positives because impairment is less prevalent in the general population than in a clinical sample[40]. There may also be selection bias in our sample, because participants were volunteers with high levels of education.
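The false-positive concern follows from Bayes’ rule: with sensitivity and specificity held fixed, the positive predictive value (PPV) of a screen falls as prevalence falls. The sketch below uses hypothetical values (85% sensitivity and specificity, 50% versus 10% prevalence) that are not CANS-MCI results; with these values, PPV drops from about 0.85 in the clinic-like case to about 0.39 in the community-like case.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) of a screen from Bayes' rule.

    All arguments are proportions between 0 and 1.
    """
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    return true_pos / (true_pos + false_pos), true_neg / (true_neg + false_neg)


# Hypothetical screen (not CANS-MCI values): 85% sensitivity, 85% specificity
for prevalence in (0.50, 0.10):  # clinic-like vs. community-like prevalence
    ppv, npv = predictive_values(0.85, 0.85, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.2f}, NPV {npv:.2f}")
```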

The ability of the CANS-MCI to discriminate between individuals with and without MCI has not yet been adequately compared with a criterion standard such as an independent neuropsychological battery. We do not yet know which combination of tests and scores has the greatest sensitivity and specificity for predicting the presence of MCI. The next step in our research is to determine this, using a full neuropsychological battery as the criterion standard. We will also examine how much change over time is significant enough to warrant a recommendation for comprehensive professional testing.
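One widely used way to judge whether an individual’s change between sessions exceeds measurement error is the Jacobson-Truax reliable change index (RCI), optionally adjusted for an average practice gain. It is shown here only to illustrate the kind of change threshold under investigation, not as the authors’ method; the parameter names, the 1.96 cutoff, and the simple subtraction of a practice gain are assumptions.

```python
import math


def reliable_change_index(baseline, retest, sd_baseline, r_retest, practice_gain=0.0):
    """Jacobson-Truax reliable change index for one person's two scores.

    sd_baseline:   standard deviation of baseline scores in a reference sample.
    r_retest:      test-retest reliability coefficient (0 <= r < 1).
    practice_gain: hypothetical mean improvement expected from retesting alone.
    """
    sem = sd_baseline * math.sqrt(1 - r_retest)  # standard error of measurement
    s_diff = math.sqrt(2 * sem ** 2)             # standard error of a difference score
    return (retest - baseline - practice_gain) / s_diff


def reliable_decline(baseline, retest, sd_baseline, r_retest, practice_gain=0.0, z=1.96):
    """True if the index falls below -z (z = 1.96 corresponds to a two-tailed 5% criterion)."""
    return reliable_change_index(baseline, retest, sd_baseline, r_retest, practice_gain) < -z


# Hypothetical example: baseline 50, retest 40, reference SD 10, retest r = 0.90
print(round(reliable_change_index(50, 40, 10, 0.90), 2), reliable_decline(50, 40, 10, 0.90))
```

Higher test-retest reliability shrinks the standard error of the difference, so smaller declines qualify as reliable; this is one reason the stable Time 2 to Time 3 scores reported above matter for longitudinal use.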

The CANS-MCI is a promising tool for low-cost, objective clinical screening. Further validation research is under way to determine whether its longitudinal use can indicate the presence or absence of cognitive impairment well enough to guide clinicians’ decisions about the need for a complete diagnostic evaluation.

ACKNOWLEDGMENTS

The authors wish to thank Bruce Center, Ph.D. for statistical assistance.

Research for this study was supported by the National Institute on Aging, the National Institutes of Health (1R43AG1865801 and 2R44AG18658-02), and an earlier test development grant from the Department of Veterans Affairs, Veterans Health Administration, Health Services Research and Development Service (LIP 61-501).

A partial version of these data was presented at the 8th International Conference on Alzheimer’s Disease and Related Disorders, Stockholm, Sweden, 2002.