Previous research has focused on cognitive enhancement in schizophrenia (1). An extensive literature has developed on the purported cognitive-enhancing effects of second-generation (a.k.a. “atypical”) antipsychotic medications (2), and in addition there has recently been increased interest in identification of compounds chosen specifically for their cognitive-enhancing potential (3, 4). These studies have examined cognitive enhancement effects on a wide array of cognitive ability areas, including episodic memory (5), attention/vigilance (6), executive functioning (7), psychomotor speed (8), and verbal skills (9). While some of these studies have used adequate clinical trial methodology, the majority have had substantial methodological limitations. For instance, many of the studies did not use blinded designs or random assignment to treatment conditions (see Harvey and Keefe [1] for a review of these issues). There has been equivalent interest in developing cognitive-enhancing techniques that are behavioral in nature, with many of the same issues applying to these studies as well.

One of the most salient methodologic issues that must be considered in interpreting apparent improvements in cognitive test performance is whether such changes actually reflect improved cognitive ability or simply result from improved test performance without improvement in the underlying cognitive ability. Test performance may appear to improve because of the combined influence of normal variability in test performance (due to measurement error) plus examinees’ increased familiarity with the test materials and procedures over repeated exposure (“practice effects”). This issue also applies to nonpharmacological interventions (i.e., cognitive remediation) and to inferences regarding the importance of correlations between cognitive test performance and the results of neuroimaging studies. Unreliable measurement would reduce the validity of inferences associated with correlations between test performance and imaging results, but few data are available to address the stability of performance on these cognitive measures.

One method of addressing practice effects is the use of alternate forms, yet full equivalence of forms is an ideal that is difficult to achieve, and many of the cognitive tests that are most widely used in the schizophrenia literature have only one version. Moreover, alternate forms do not fully eliminate practice effects. There appear to be at least two potential components to practice effects: 1) an examinee’s performance may improve with repeated testing because he or she learns the specific item content over repeated presentations, and 2) an examinee’s performance may improve with repeated testing because he or she becomes more familiar with performing tasks similar to the target assessment battery (method variance) or becomes more familiar or comfortable with being administered neurocognitive tests in general. Parallel forms are rarely perfectly parallel, and even when they are, they correct for effects of the first type but not for those of the second.

To some degree, interpretive difficulties in clinical trials that are due to measurement error and practice effects can be circumvented by study designs that include double-blind random assignment of some participants to a placebo (or other noncognitive-enhancing) arm. However, concerns about the use of placebo-controlled designs in schizophrenia research have been expressed with increasing frequency. Also, even with an adequately controlled design, while one may be able to show differences in group mean performance, it is difficult to evaluate whether changes have occurred at the level of the individual patient. Change at this level is the ultimate goal of cognitive enhancement treatments, regardless of mechanism.

Identification of meaningful changes in performance on the part of schizophrenia patients, whether due to treatment-associated improvement or to deterioration in clinical status, requires an understanding of normative patterns of test-retest stability (10, 11). Test-retest data from healthy comparison subjects may provide some insight, but norms from cognitively stable schizophrenia patients are needed because patients may show more test-retest error variance than healthy individuals (12). With the advent of newer antipsychotic medications with reduced severity of side effects (13) and greater efficacy in critical symptom domains (14), it is difficult to perform clinical trials in which patients have the possibility of being randomly assigned to receive older antipsychotic treatments. If normative data were collected on clinically stable individuals receiving older medications, these data might be helpful in evaluating changes seen in future studies examining compounds with purported cognitive-enhancing effects. The purpose of the present study was threefold: 1) to examine practice effects over a test-retest interval similar to that of a clinical trial, 2) to develop norms for evaluating cognitive change among middle-aged and older psychotic patients treated with conventional antipsychotic medications, and 3) to identify the width of the prediction intervals for defining “unusual” changes that would be unlikely to be due to either measurement error or practice effects. We also examined whether retest stability and prediction intervals varied as a function of baseline level of cognitive impairment: patients with schizophrenia vary in their levels of impairment, and differences in retest variance associated with greater or lesser impairment may provide useful information for later trials.

This study used a neuropsychological battery (the Aged Schizophrenia Assessment Schedule–Cognitive) that was originally designed by an advisory team of neuropsychological experts for Eli Lilly and Company for use in multisite studies of the cognitive effects of antipsychotic medications among middle-aged and older patients with psychotic disorders. In the present study, we report a comprehensive examination of retest effects on cognitive functioning in schizophrenia in 45 clinically and cognitively stable outpatients who were receiving stable doses of conventional antipsychotic medications. Participants completed the Aged Schizophrenia Assessment Schedule–Cognitive battery at baseline and at an 8-week follow-up evaluation during a period of clinical stability without changes in their treatment status.

Method

Subjects

Participants were 45 middle-aged and older patients with schizophrenia (N=33) or schizoaffective disorder (N=12) who were recruited and evaluated at one of three research sites: the University of California at San Diego Department of Psychiatry, Mt. Sinai School of Medicine, or the University of Cincinnati Department of Psychiatry. All participants were 45 years of age or older, each was receiving clinically appropriate doses of conventional neuroleptic medications during the study, and there had been no changes in their medication treatment during the month preceding the baseline evaluation. We excluded patients with a history of other psychiatric diagnoses or medical conditions that might impact their cognitive functioning (e.g., substance abuse or dependence, neurologic conditions, head trauma with loss of consciousness, seizure disorder, or degenerative dementia). Patients were also excluded if their current antipsychotic medication dose was greater than 1500 mg/day in chlorpromazine equivalents, they were receiving selective serotonin reuptake inhibitor antidepressants, or they had a current diagnosis of major depression. These criteria were intended to ensure that the patients in the sample were clinically stable over the test-retest interval.

Measures

Psychopathology and motor symptoms

Level and changes in severity of psychopathology were assessed with the positive and negative subscale scores of the Positive and Negative Syndrome Scale (15) and the Montgomery-Åsberg Depression Rating Scale (16). Severity of extrapyramidal symptoms was assessed with a modified version of the Simpson-Angus Rating Scale (17), which examined nine items (gait, balance, arm dropping, rigidity of major joints, cogwheeling, glabella tap reflex, tremor, salivation, and akinesia). Its potential score range was 0 to 30: three items (balance, cogwheeling, glabella tap) were rated from 0 to 2, and the other six were rated from 0 to 4. Motor abnormalities were also evaluated with the global clinical assessment of akathisia score from the Barnes Rating Scale for Drug-Induced Akathisia (18). This global score ranges from 0 (absent) to 5 (severe akathisia).
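The 0 to 30 range of the modified Simpson-Angus scale follows directly from its item maxima. A minimal Python sketch of that arithmetic, assuming ratings are stored in a dictionary keyed by hypothetical snake_case item names (not part of the original scale materials); it only range-checks and sums item ratings and is not an official scoring tool.

```python
# Hypothetical item keys for the nine-item modified Simpson-Angus scale;
# the per-item maxima follow the text (three items 0-2, six items 0-4).
ITEM_MAX = {
    "gait": 4,
    "balance": 2,
    "arm_dropping": 4,
    "rigidity_major_joints": 4,
    "cogwheeling": 2,
    "glabella_tap": 2,
    "tremor": 4,
    "salivation": 4,
    "akinesia": 4,
}

# Maximum possible total: 3 * 2 + 6 * 4 = 30.
MAX_TOTAL = sum(ITEM_MAX.values())


def score_modified_simpson_angus(ratings: dict[str, int]) -> int:
    """Range-check each item rating, then return the summed total score."""
    if set(ratings) != set(ITEM_MAX):
        raise ValueError("ratings must cover exactly the nine scale items")
    for item, value in ratings.items():
        if not 0 <= value <= ITEM_MAX[item]:
            raise ValueError(f"{item}: rating {value} outside 0..{ITEM_MAX[item]}")
    return sum(ratings.values())
```

Rejecting out-of-range ratings rather than clipping them keeps data-entry errors visible instead of silently altering totals.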

Neuropsychological battery

The Aged Schizophrenia Assessment Schedule–Cognitive battery consists of the following specific components: total correct on the McGurk Visual Spatial Working Memory Test (19); total learning and delayed recall scores from the Word List Learning Test (20); seconds to complete parts A and B of the Trail Making Test (21); d-prime and omission error totals from the Continuous Performance Test–Identical Pairs Version (22); total raw score on the digit span subtest, raw score for the letter-number sequencing task, and total correct for digit symbol-coding, all taken from the WAIS-III (23); raw scores for total perseverative errors and total categories completed in the 64-card Wisconsin Card Sorting Test (24); total correct for the letter fluency task; total words for the animal fluency task; right- and left-hand totals for the Finger Tapping Test; raw score (total correct minus total errors) on the WAIS-III symbol search; total correct and correct minus errors of commission on the digit cancellation task; total correct on the Benton Judgment of Line Orientation (25); total correct on the Hooper Visual Organization Test (26); and total errors on the modified (30-item) version of the Hiscock Digit Memory Test (27).

The measures in this battery provide a comprehensive assessment of a number of constructs relevant to schizophrenia, its treatment, and normal aging, including 1) verbal skills (verbal fluency), 2) attention/working memory and vigilance (McGurk Visual-Spatial Working Memory Test, Continuous Performance Test–Identical Pairs Version, digit cancellation test, digit span, letter-number sequencing), 3) verbal learning and memory (Word List Learning Test), 4) psychomotor speed (Trail Making Test–part A, digit symbol-coding, symbol search), 5) abstraction/problem solving/mental flexibility (64-card Wisconsin Card Sorting Test, Trail Making Test–part B), 6) perceptual organizational ability (Hooper Visual Organization Test, Benton Judgment of Line Orientation Test), 7) motor speed (Finger Tapping Test), and 8) effort/motivation (Hiscock Digit Memory Test).

In designing the Aged Schizophrenia Assessment Schedule–Cognitive battery, the advisory team attempted to incorporate tests that targeted cognitive constructs for which preliminary studies had suggested atypical antipsychotic medications might have a beneficial impact (such as verbal fluency, attention/working memory, verbal learning, and abstraction/problem-solving), as well as some cognitive abilities for which there was not an expectation of improvement (perceptual organization, motivation/cooperation). Another primary consideration in selecting the specific tests to include in this battery was that the battery was being designed for repeated administration to older psychotic patients in clinical trials who might not tolerate lengthier assessments. Two tests (digit cancellation and the Word List Learning Test) were administered with alternate forms, given in a fixed order at baseline and follow-up, to minimize practice effects arising from patients’ learning of the specific item content.

Procedures

All subjects were assessed individually by experienced staff members under the supervision of doctoral-level neuropsychologists at each site. The Aged Schizophrenia Assessment Schedule–Cognitive battery was administered at baseline and again 8 weeks later by the same site-specific staff member. For some patients, the testing sessions were completed over several days (within a 1-week window) to minimize fatigue.

Statistical Analyses

We calculated the mean and standard deviation of each score at baseline and at the 8-week follow-up evaluation. For each score, we calculated the correlation (Pearson’s r) between the baseline and 8-week follow-up score as our index of test-retest reliability. Practice effects were measured as the mean difference between follow-up and baseline scores (follow-up minus baseline). Changes in mean scores were evaluated with paired-sample t tests. Significance was defined a priori as p<0.05 (two-tailed).

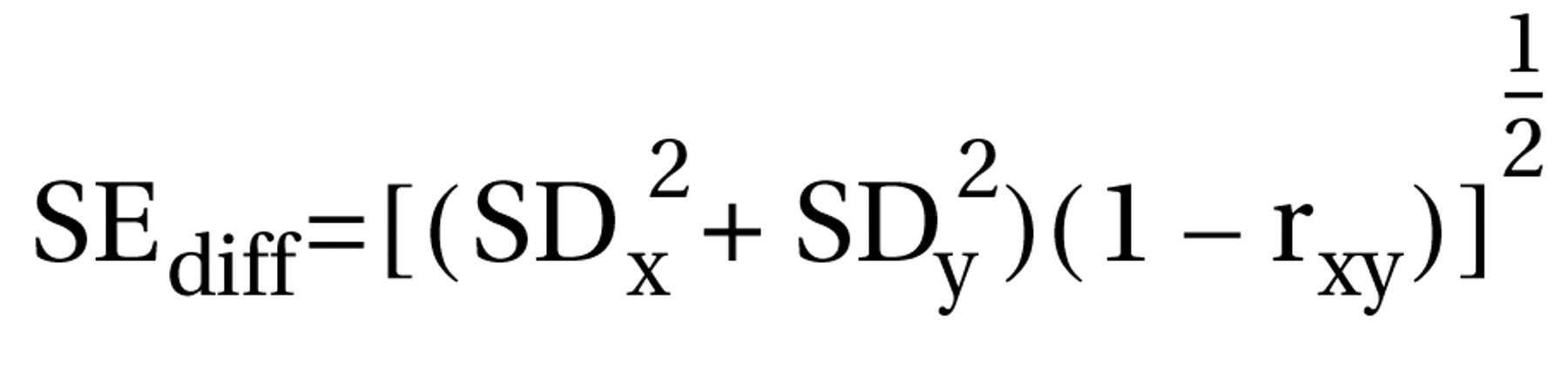

Norms for change were developed for the Aged Schizophrenia Assessment Schedule–Cognitive variables on the basis of mean practice effect and standard error of measurement associated with each of the variables using the reliable change index plus practice effect method

(10–

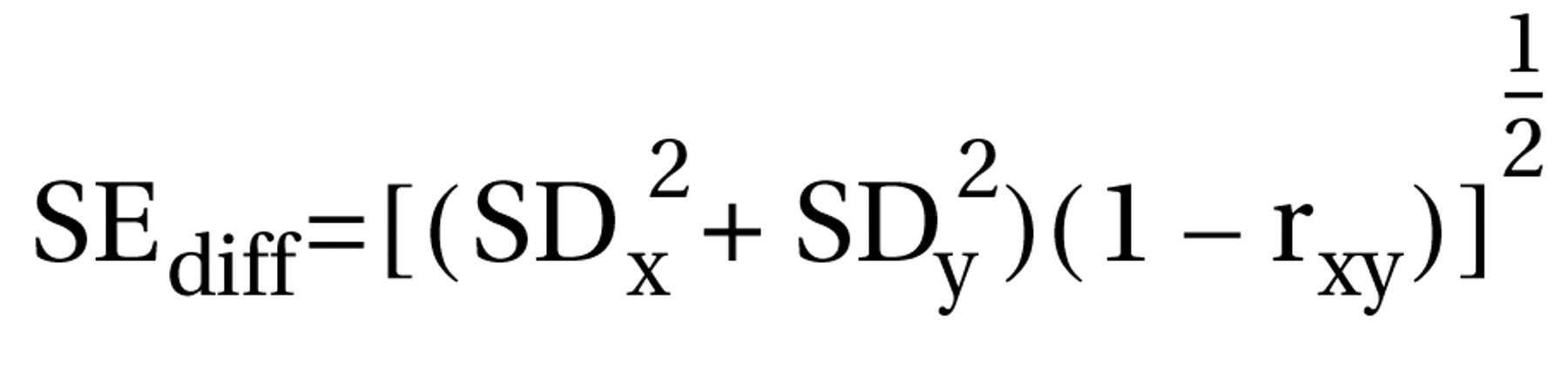

12). Specifically, 90% confidence intervals were developed for the standard error of the difference for each test. The standard error of the difference describes the spread of the distribution of neuropsychological change scores that would be expected if no actual change in cognitive abilities had occurred. The standard error of the difference (SE

diff) was determined from the standard error of measurement for each test using the following formula:

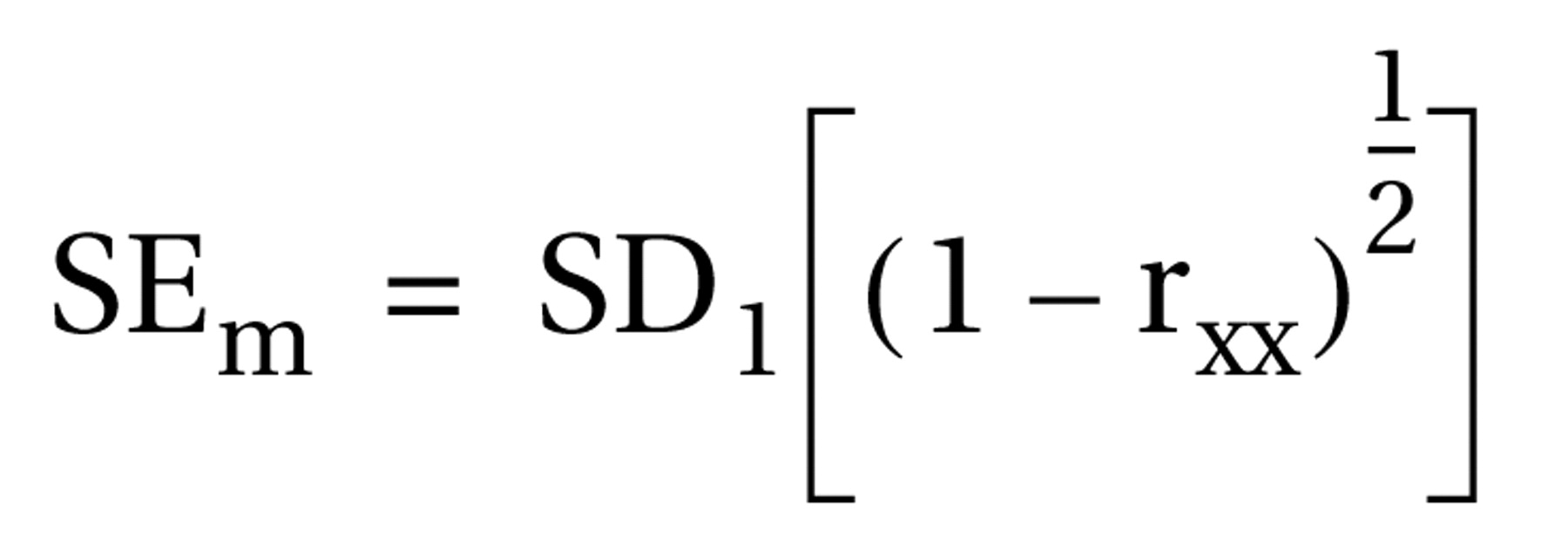

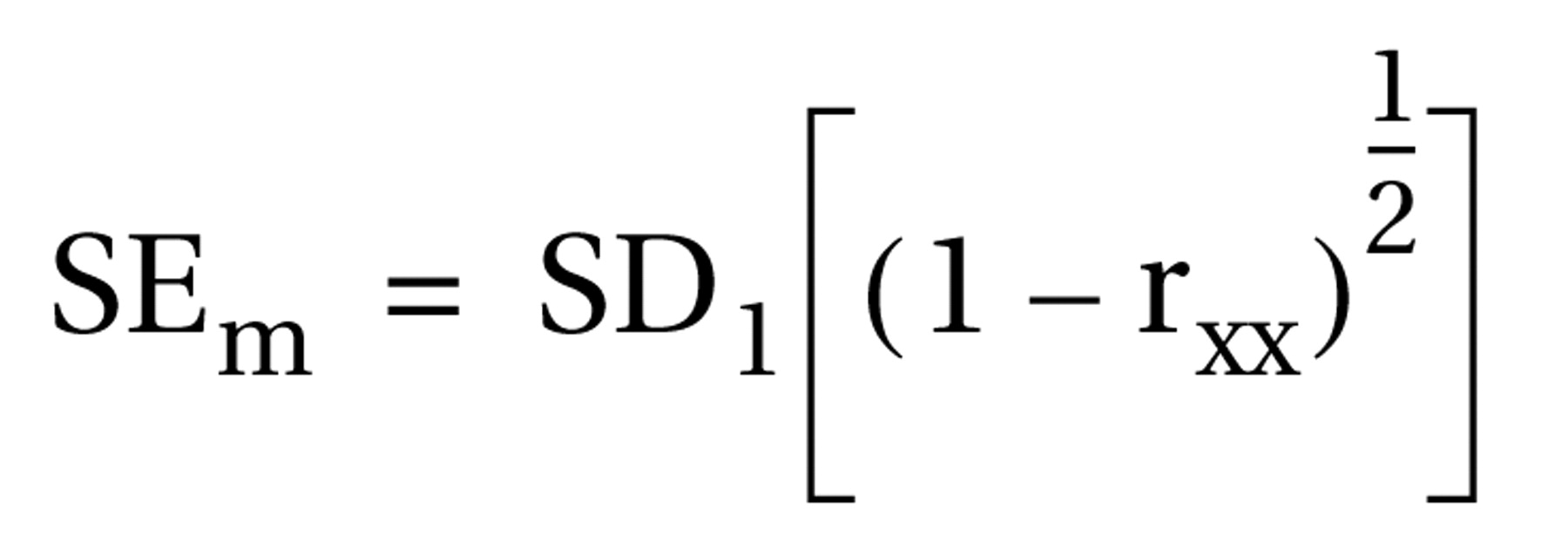

The standard error of management (SE

m) was, in turn, estimated from the standard deviation of baseline scores (SD

1) and test-retest reliability (r

xx) for each test (calculated in terms of Pearson’s r between each respective baseline and 8-week follow-up score), as observed within the present sample, using the following formula:

The values of the standard deviation of baseline scores and test-retest reliability, as well as the practice effect (the mean difference between follow-up minus baseline scores), were determined from the present sample. Then, a 90% confidence interval for expected retest scores (X2) was determined by multiplying the SEdiff by ±1.64, using the formula:

90% confidence interval = (X1 + mean practice effect) ± 1.64 SEdiff.

Thus, X1 represents each subject’s baseline score, and the mean practice effect is the mean of the change scores (retest minus baseline) across all subjects in the sample. Under this definition, 90% of retest scores should fall between the lower and upper boundaries of this practice-adjusted interval by chance alone. For scores on which higher values indicate better performance, retest scores above the upper boundary would be expected to occur about 5% of the time by chance and would represent statistically significant improvement, and scores below the lower boundary would reflect statistically significant worsening; the directions reverse for timed scores such as the Trail Making Test.
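The reliable change plus practice effect rule can be sketched in a few lines of Python. This is a minimal illustration assuming the standard formulas SEm = SD1·√(1 − rxx) and SEdiff = √(2·SEm²); the function names and the `higher_is_better` flag are hypothetical conveniences, not part of the original analyses.

```python
import math

Z_90 = 1.64  # two-sided 90% critical value used in the text


def se_measurement(sd_baseline: float, r_xx: float) -> float:
    """Standard error of measurement: SEm = SD1 * sqrt(1 - rxx)."""
    return sd_baseline * math.sqrt(max(0.0, 1.0 - r_xx))


def se_difference(se_m: float) -> float:
    """Standard error of the difference: SEdiff = sqrt(2 * SEm**2)."""
    return math.sqrt(2.0 * se_m ** 2)


def retest_interval(x1, mean_practice, sd_baseline, r_xx, z=Z_90):
    """Practice-adjusted 90% interval for the expected retest score X2."""
    half_width = z * se_difference(se_measurement(sd_baseline, r_xx))
    center = x1 + mean_practice
    return center - half_width, center + half_width


def classify_change(x1, x2, mean_practice, sd_baseline, r_xx, higher_is_better=True):
    """Return 'better', 'worse', or 'within' for one subject's retest score."""
    low, high = retest_interval(x1, mean_practice, sd_baseline, r_xx)
    if low <= x2 <= high:
        return "within"
    above = x2 > high
    # For timed scores (higher_is_better=False), landing above the interval is worsening.
    return "better" if above == higher_is_better else "worse"
```

With purely hypothetical values (SD1 = 10, rxx = 0.91, mean practice effect = 2, X1 = 50), SEm is 3.0 and the interval runs from roughly 45.0 to 59.0, so a retest score of 60 would be classified as reliable improvement.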

Given a test battery of this size, most subjects may be expected to demonstrate “significant change” on at least one score by chance alone (on average, about two of the 22 scores, i.e., 22 × 10% = 2.2, would be expected to fall outside a 90% confidence interval). We therefore applied the confidence intervals for each test to the subjects in this data set to determine, for each subject, the number of tests on which his or her retest scores were better than, worse than, or within the expected confidence intervals. This base rate information is intended to provide a basis by which users of these tests can determine the number of tests on which significant positive or negative changes must be observed in order to exceed the level expected by chance alone.
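The chance base rate can be approximated with a binomial model. This sketch assumes, as a simplification not made in the original analyses, that the 22 scores are independent, each with a 10% chance of falling outside its 90% interval; real battery scores are intercorrelated, so the result is only a rough reference point.

```python
from math import comb

N_SCORES = 22     # scores in the battery
P_OUTSIDE = 0.10  # chance a single score falls outside a 90% interval

# Expected number of out-of-interval scores per subject by chance: 22 * 0.10 = 2.2.
EXPECTED_OUTSIDE = N_SCORES * P_OUTSIDE


def prob_more_than(k: int, n: int = N_SCORES, p: float = P_OUTSIDE) -> float:
    """P(more than k of n scores fall outside the interval by chance).

    Assumes the n scores are independent, which real battery scores are not.
    """
    return sum(comb(n, j) * p ** j * (1 - p) ** (n - j) for j in range(k + 1, n + 1))
```

Under these assumptions `prob_more_than(0)` is about 0.90 and `prob_more_than(2)` is about 0.38; `scipy.stats.binom.sf(k, n, p)` gives the same tail probability.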

Finally, we examined all of our test-retest differences as a function of the baseline levels of cognitive functioning in the subjects. We expected that patients with varying levels of cognitive impairment might have differences in their error variance, although we did not have a specific hypothesis as to which group would demonstrate more variability. In order to define a subgroup of patients with general cognitive impairment, we created a summary score that was based on performance across 11 scores for which we had sufficient normative basis to define impairment (Trail Making Test parts A and B, digit span, letter-number sequencing, digit symbol, symbol search, 64-card Wisconsin Card Sorting Test perseverative errors, letter fluency task total correct, animal fluency, and dominant and nondominant mean scores on the Finger Tapping Test). For each score, we converted the raw score to a scaled score, wherein the normative mean was 10 and standard deviation was 3, with higher scores reflecting better performance. We were interested in absolute level of performance, rather than correcting for demographic influences, so these conversions were based on the WAIS-III Reference Group Norms, the 64-card Wisconsin Card Sorting Test Census Matched Adult Sample, and a currently in-press update of the scaled score conversions from the Heaton, Grant, and Matthews norms. We then calculated the mean scaled score across these tests. We defined those subjects whose mean scaled score was 7 or less (i.e., at least 1 standard deviation below the normative mean) as “impaired.”
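Once raw scores have been converted with the normative tables (not reproduced here), the impairment rule reduces to an average and a cutoff. A minimal Python sketch; the function names are hypothetical, and the input is assumed to be the 11 already-converted scaled scores.

```python
import statistics

N_NORMED_SCORES = 11     # scores with an adequate normative basis (see text)
IMPAIRMENT_CUTOFF = 7.0  # mean scaled score <= 7 defined as "impaired"


def mean_scaled_score(scaled_scores: list[float]) -> float:
    """Average of already-converted scaled scores (normative mean 10, SD 3)."""
    if len(scaled_scores) != N_NORMED_SCORES:
        raise ValueError(f"expected {N_NORMED_SCORES} scaled scores")
    return statistics.fmean(scaled_scores)


def is_impaired(scaled_scores: list[float]) -> bool:
    """Apply the cutoff described in the text: mean scaled score of 7 or less."""
    return mean_scaled_score(scaled_scores) <= IMPAIRMENT_CUTOFF
```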

Results

Site Effects

Multivariate analyses of variance (MANOVA) were used to compare the three sites on demographic variables, psychopathology and motor symptoms, and baseline and change scores on the neuropsychological measures. None of these three MANOVAs reached statistical significance.

Demographic Characteristics

The study group represented a diverse range of patients in terms of age (mean=59.4 years, SD=8.4, range=45–77), education (mean=12.0 years, SD=2.6, range=5–17), and ethnicity (Caucasian: 53.3%, N=24; African American: 35.6%, N=16; Latino: 6.7%, N=3; Asian American: 4.4%, N=2). Eighty percent of the patients (N=36) were male. The mean test-retest interval was close to the targeted interval of 8 weeks (actual mean interval was 8.8 weeks, SD=0.9, range=6.9–12.3).

Stability of Psychopathology and Motor Symptoms

There were no significant changes in the mean psychopathology ratings or motor symptoms. All paired-sample t tests comparing the baseline to 8-week follow-up ratings were nonsignificant (all t<1.63, all p>0.11), suggesting no significant changes in mean ratings, and all test-retest correlations were significant (all r>0.69, all p<0.001), suggesting participants tended to maintain their relative positions within the distribution of each psychopathology rating or motor rating scale from baseline to follow-up. Since none of the change scores for psychopathology or movement disorder symptoms were significantly different from 0, changes in psychopathology and motor symptoms were not used as covariates in the subsequent analyses evaluating the stability of cognitive measures.

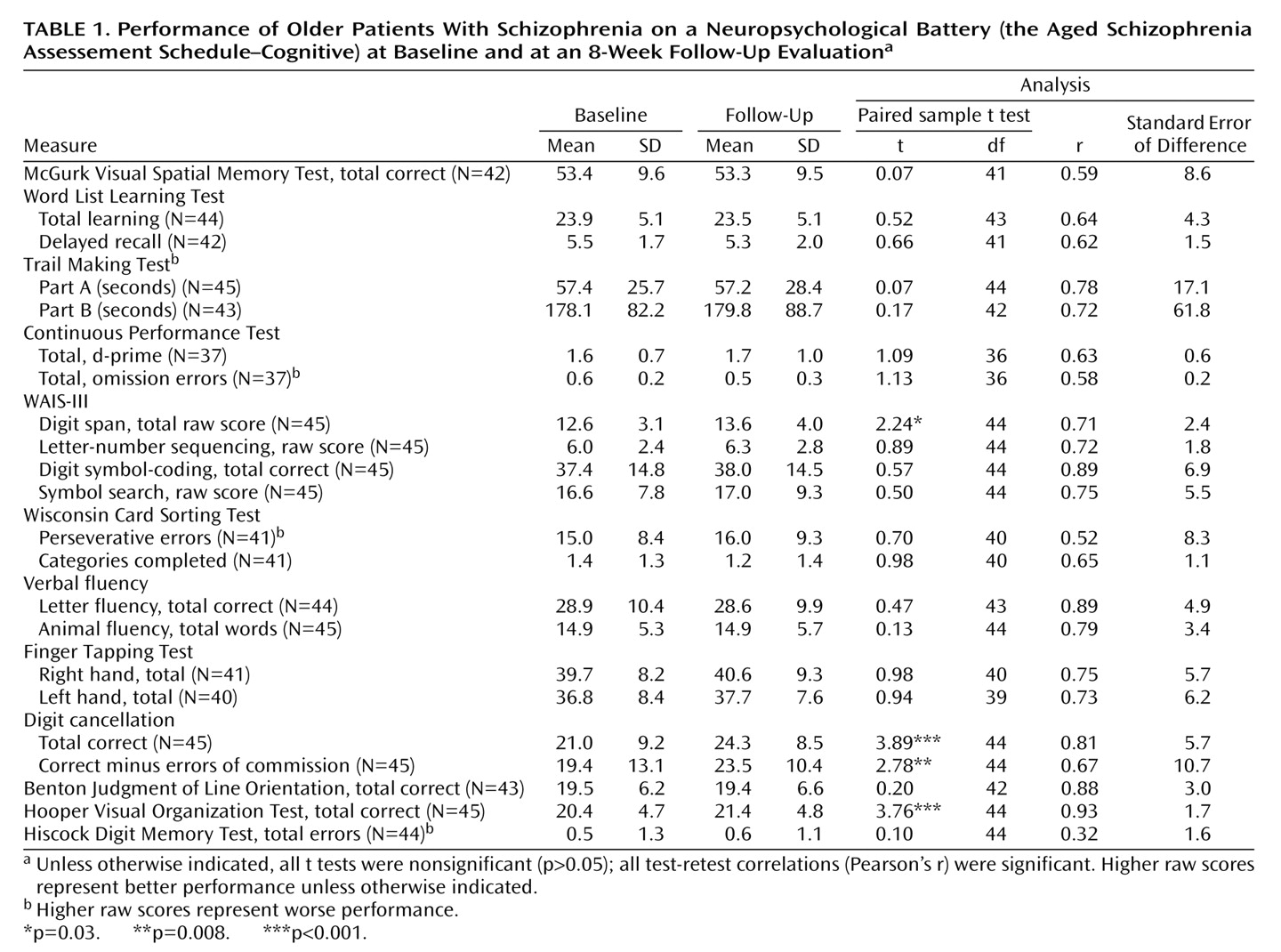

Neuropsychological Performance From Baseline to 8-Week Follow-Up Evaluation

As shown in Table 1, the baseline to 8-week test-retest correlations for each of the 22 neuropsychological scores were all significant (all p<0.001 except for total errors on the Hiscock Digit Memory Test [p<0.04]), suggesting that each patient’s performance relative to the rest of the sample tended to remain stable across the 8-week follow-up. With one exception, all of the correlations were in the range that would be considered a “large effect size” (r≥0.50). The one exception was the Hiscock score: while statistically significant, the magnitude of the correlation (0.32) was at the lower end of the “medium” effect size range. However, constricted variance and a highly skewed distribution of Hiscock errors are to be expected (errors are rare among cooperative examinees), which naturally attenuates the potential magnitude of the test-retest correlation. For example, 91% of the sample (N=40 of 44) had 0 or 1 errors and only 4.5% (two patients) had three or more errors, truncating the range of scores and reducing the correlation.

As also shown in Table 1, practice effects on most of the 22 neuropsychological test scores were absent or minimal. Paired-sample t tests revealed significant changes on only four of the 22 scores (all in the direction of improved performance with retesting): digit span, digit cancellation (total correct), digit cancellation (total correct minus total errors), and the Hooper Visual Organization Test (total correct). Two of these scores came from a single task (digit cancellation), which was one of only two tasks in the battery administered with alternate forms. If a Bonferroni correction for multiple comparisons were applied (p<0.002 [0.05/22]), only the digit cancellation total correct and Hooper total correct changes would remain significant.
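The Bonferroni criterion used above divides the familywise alpha by the number of scores tested. A minimal Python sketch; the function name is hypothetical, and any p-values passed to it in practice would come from the paired t tests.

```python
def bonferroni_survivors(p_values: dict[str, float], alpha: float = 0.05) -> list[str]:
    """Names of tests whose p-value stays below alpha divided by the number of tests."""
    threshold = alpha / len(p_values)  # 0.05 / 22 is about 0.00227 for this battery
    return [name for name, p in p_values.items() if p < threshold]
```

Dividing alpha by the number of tests is conservative when scores are correlated, as they are within a single battery.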

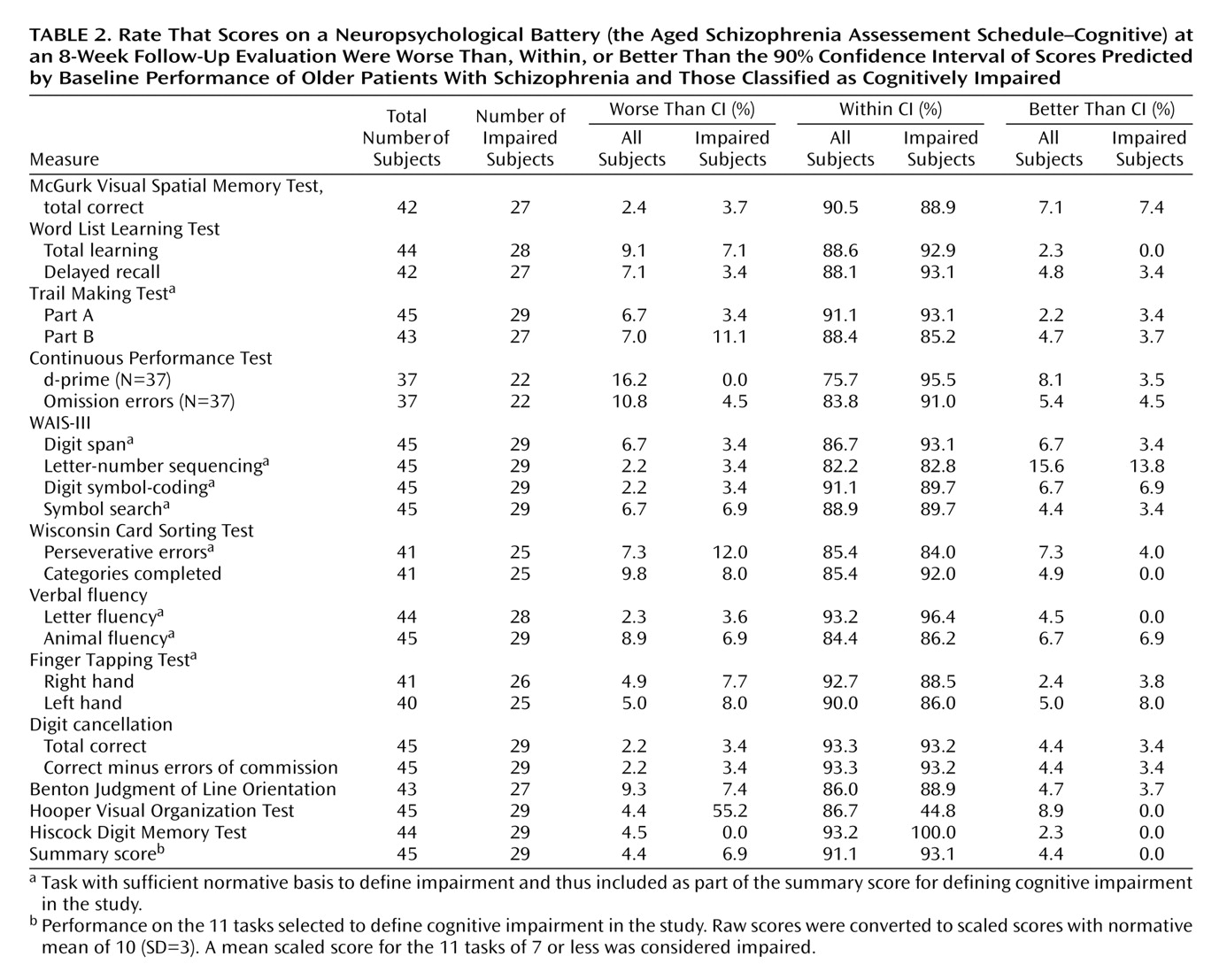

Observed Follow-Up Scores Versus Predicted Follow-Up Scores

For each subject, we calculated the difference between the follow-up score and the baseline score. For each test score, the mean of these differences across all subjects was taken as the expected practice effect for that test. We then added that expected practice effect to each subject’s baseline score to compute a “predicted follow-up score” and placed a 90% confidence interval around each predicted follow-up score using the methods described in Statistical Analyses. For each test, we then computed the proportion of participants whose actual follow-up score was worse than, within, or better than the confidence interval around the predicted follow-up score. As shown in Table 2, for each of the 22 test scores most follow-up scores fell within the 90% confidence interval around the predicted follow-up score. As expected, a small proportion of observed follow-up scores fell outside the 90% confidence intervals (by chance alone, 5% of scores are expected to be worse than the interval and 5% better). Because rates of about 5% in each direction are expected, only rates substantially greater than 5% reflect a tendency toward excessive change. As seen in the table, with few exceptions, the impaired patients and the entire sample had essentially similar distributions of follow-up scores. The only test with a major skew in its distribution was the Hooper Visual Organization Test, on which no impaired patient did notably better on retest and a substantial subset did worse.

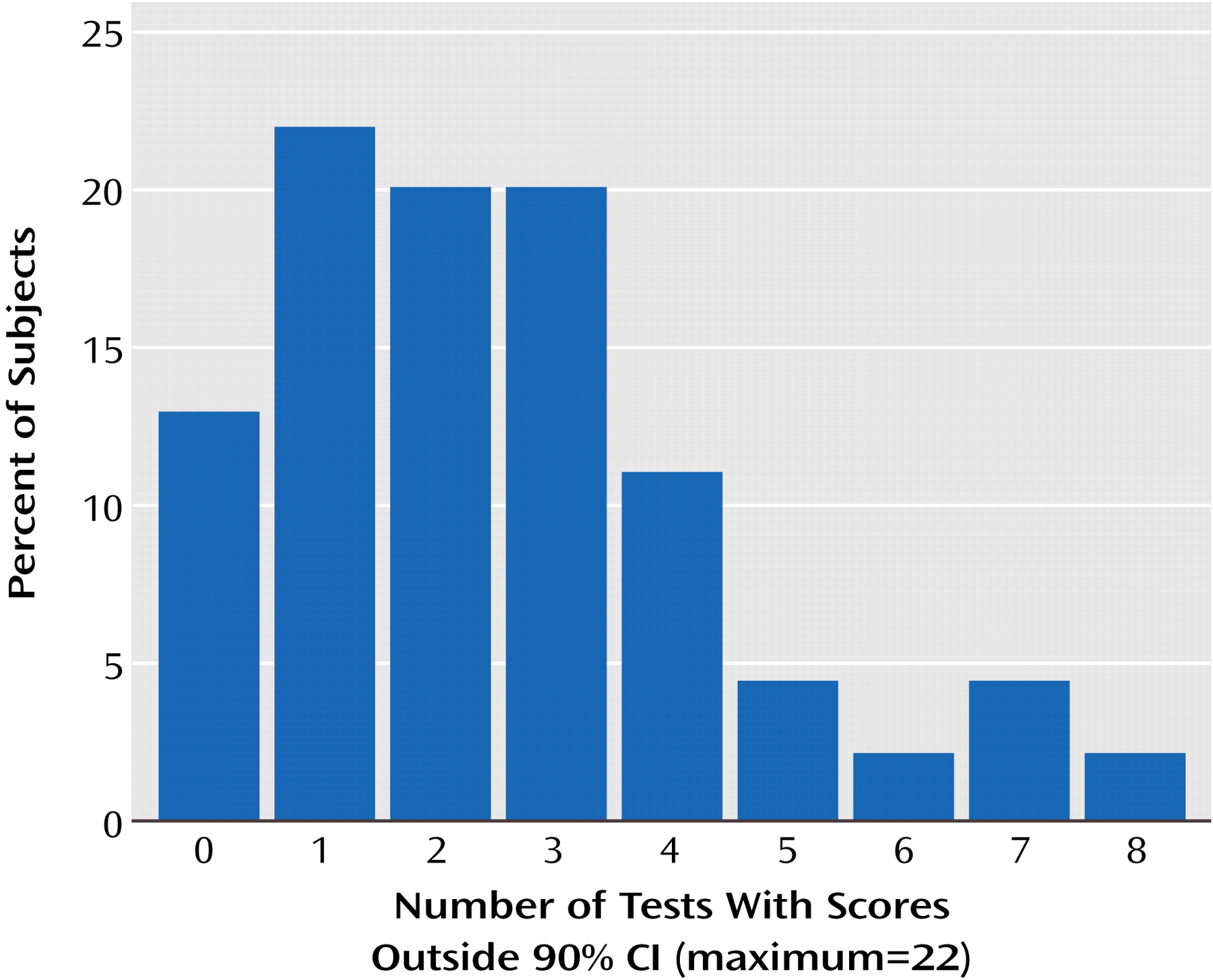

Next, for each subject we counted the number of observed follow-up test scores that were worse than, within, or better than the 90% confidence interval around each respective predicted follow-up score. These counts are presented in Figure 1. Only six (13.3%) of the 45 participants had no scores at all with “significant change” (follow-up score better or worse than the 90% confidence interval) on the 22 tests examined. However, since about two scores per subject would be expected to fall outside the 90% confidence interval by chance alone, the fact that the majority of subjects (55%) had no more than two follow-up scores outside the 90% confidence interval indicates that these test scores are quite similar in their retest characteristics across subjects. In fact, only 9% of the subjects had more than five individual scores from the 22-test battery that were outside the 90% confidence interval at retest.
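The per-subject summary reported above (no scores outside, at most two, more than five) can be computed from a matrix of classification labels. A minimal Python sketch; the function name and the label strings ("worse", "within", "better") are hypothetical conventions.

```python
def outside_count_summary(labels_by_subject):
    """Summarize how many scores per subject fall outside the 90% interval.

    labels_by_subject: one list of 'worse'/'within'/'better' labels per subject.
    """
    counts = [sum(label != "within" for label in labels) for labels in labels_by_subject]
    n = len(counts)
    return {
        "none_outside": sum(c == 0 for c in counts) / n,
        "at_most_two": sum(c <= 2 for c in counts) / n,
        "more_than_five": sum(c > 5 for c in counts) / n,
    }
```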

Discussion

There are several findings of interest in this study. First, there was no evidence of substantial practice-related changes in performance at retest for the majority of the cognitive variables that we examined. This is noteworthy because these are the constructs that are commonly used in clinical trials to study cognitive enhancement with newer antipsychotic medications. Several of these studies have not employed conventional antipsychotic comparator samples because of the difficulty in obtaining patients who are willing to enter a clinical trial and be randomly assigned to older antipsychotic medications. These data suggest that the changes in cognitive functioning reported in those studies, wherein treatment with olanzapine, ziprasidone, and risperidone (28–30) enhanced cognitive functioning, were not likely to be solely due to practice or other retesting effects on these cognitive performance measures when the performance of patients treated with conventional medications is used as a point of reference. In fact, the largest effect size for a retest difference in performance was for the difference between two alternate forms of a single test, the digit cancellation test. This finding suggests, albeit tentatively, that variance across alternate versions of the same test may be greater than retest effects with the same forms in this population.

A second finding of potential importance is the range of test-retest correlations across measures. These data may have implications for the ability to detect reliable changes in performance associated with pharmacological or behavioral cognitive enhancement. While most tests had high test-retest reliability, some had relatively lower reliability, such as the Wisconsin Card Sorting Test measures of categories completed and perseverative errors. For tests with lower test-retest reliability, larger individual changes would be required for definitive detection than on the more reliable measures. It is of interest that the Wisconsin Card Sorting Test has yielded considerably variable results across cognitive enhancement studies, while psychomotor speed, which was quite reliable in this study, has been consistently improved with atypical antipsychotic medications across studies.

For some of the variables, however, the high test-retest reliability and low standard error of the retest scores suggest that very small differences in performance would be detectable as clinically significant. For instance, in the domain of visual-motor speed, an improvement of 5 seconds on part A of the Trail Making Test would exceed the 90% confidence interval, and a change of only three items on the WAIS-III digit symbol test would exceed this criterion as well. Thus, relatively small changes would be seen as clinically significant, surpassing both practice effects and random variance effects. To put this in context, in a large-scale clinical trial, patients randomly assigned to treatment with olanzapine improved by an average of 8.5 seconds on part A of the Trail Making Test (28). The findings of the current study would suggest that many of those patients would be expected to have experienced a clinically significant improvement in their trail-making performance as a result.
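The decision rule behind thresholds such as the 5-second Trail Making criterion can be written out explicitly. A minimal restatement of the practice-adjusted interval, writing $\bar{d}$ for the mean practice effect (mean of follow-up minus baseline) and $SE_{\text{diff}}$ as defined in Statistical Analyses; the split by score direction is our illustrative addition, not a formula taken from the original analyses.

$$\text{Reliable improvement, higher is better:}\quad X_2 > X_1 + \bar{d} + 1.64\,SE_{\text{diff}}$$

$$\text{Reliable improvement, timed (lower is better):}\quad X_1 - X_2 > 1.64\,SE_{\text{diff}} - \bar{d}$$

For timed scores, a negative mean practice effect (faster retest times on average) makes $-\bar{d}$ positive and therefore increases the reduction in seconds required before an individual's improvement exceeds the interval.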

Recent studies have reported that digit symbol-coding performance from the WAIS-III is the most robust correlate of employment status in patients with schizophrenia (31). The data from this study suggest that this measure has extremely high short-term test-retest reliability. This high reliability also suggests that it would be easier to find correlations between this variable and functional status measures than with other variables (such as the Wisconsin Card Sorting Test) that have somewhat lower test-retest reliability. Similarly, relatively modest changes in performance could be found to be statistically significant because of the high reliability of this measure. At the same time, several studies indicate that digit symbol-coding performance may improve over longer follow-up periods (32, 33), although those studies examined patients much earlier in their illness than those in the current sample. Conversely, the wide test-retest confidence intervals for some of the other tests indicate that it may be difficult to detect individual changes in performance unless they are quite substantial in magnitude. It should also be considered that the longer the assessment battery, the more measures would be expected to show apparent improvement (or worsening) by chance alone.
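The link between reliability and detectable correlations follows a standard classical test theory result: if measurement errors are uncorrelated, the observed correlation between two measures is bounded by the square root of the product of their reliabilities, where $r_{xx}$ here is the reliability of the cognitive score and $r_{yy}$ that of the functional status measure (test-retest r is one estimate of each).

$$|r_{xy}| \le \sqrt{r_{xx}\,r_{yy}}$$

As a purely hypothetical illustration, with $r_{yy} = 0.80$, a cognitive score with $r_{xx} = 0.90$ could show an observed correlation of at most $\sqrt{0.72} \approx 0.85$, whereas one with $r_{xx} = 0.60$ would be capped at $\sqrt{0.48} \approx 0.69$.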

A further point that is worthy of note is that the lack of practice effects detected in this study may be associated with the conventional antipsychotic treatments being received by the study participants. Recent research has indicated that treatment with newer antipsychotic medications is associated with greater learning with practice than conventional antipsychotic treatment (34). It has been suggested that conventional antipsychotic treatment may actually interfere with practice effects that would occur otherwise (34, 35). This is a difficult point to prove without retesting patients who are not receiving any antipsychotic treatments. Such a research design is problematic, in that the scientific information to be gained might not be seen to justify the risks to the participants in the study. The small proportion of patients who had any substantial number of test scores outside the 90% confidence interval at the follow-up evaluation suggests that the lack of practice effects was not due to increased random variance in most cases.

The limitations of the study include the age and clinical stability of the patients. Younger patients and those who were acutely ill at entry into the study might have different patterns of stability. Further, tests performed at a ceiling level at baseline could not improve with practice, but this does not appear to be an issue with the instrumentation in this study. There were only two tests in the battery in which baseline performance was even within two standard deviations of perfect scores, the spatial working memory test and the Benton Judgment of Line Orientation test. Thus, 20 of 22 tests still had substantial room for practice effects, and none were actually performed at ceiling level.

In conclusion, the results of this study suggest that older patients with schizophrenia who are receiving conventional antipsychotic treatment manifest quite stable cognitive performance over time, demonstrating little evidence of practice effects and little evidence of wide scatter in retest performance across subjects. Test-by-test variations in retest characteristics indicate that the magnitude of change that is clinically significant may differ across tests. In addition, there may be interindividual differences in the retest variance of test scores. This may be an important issue for later research, but the small number of subjects in this study who had any substantial retest variance in their scores precluded analysis of this factor. Further, the issue of the relative validity of alternative versions of tests versus using the same test across repeated assessments has not been resolved by these results.