Predicting Suicide Attempts and Suicide Deaths Following Outpatient Visits Using Electronic Health Records

Abstract

Objective:

Method:

Results:

Conclusions:

Method

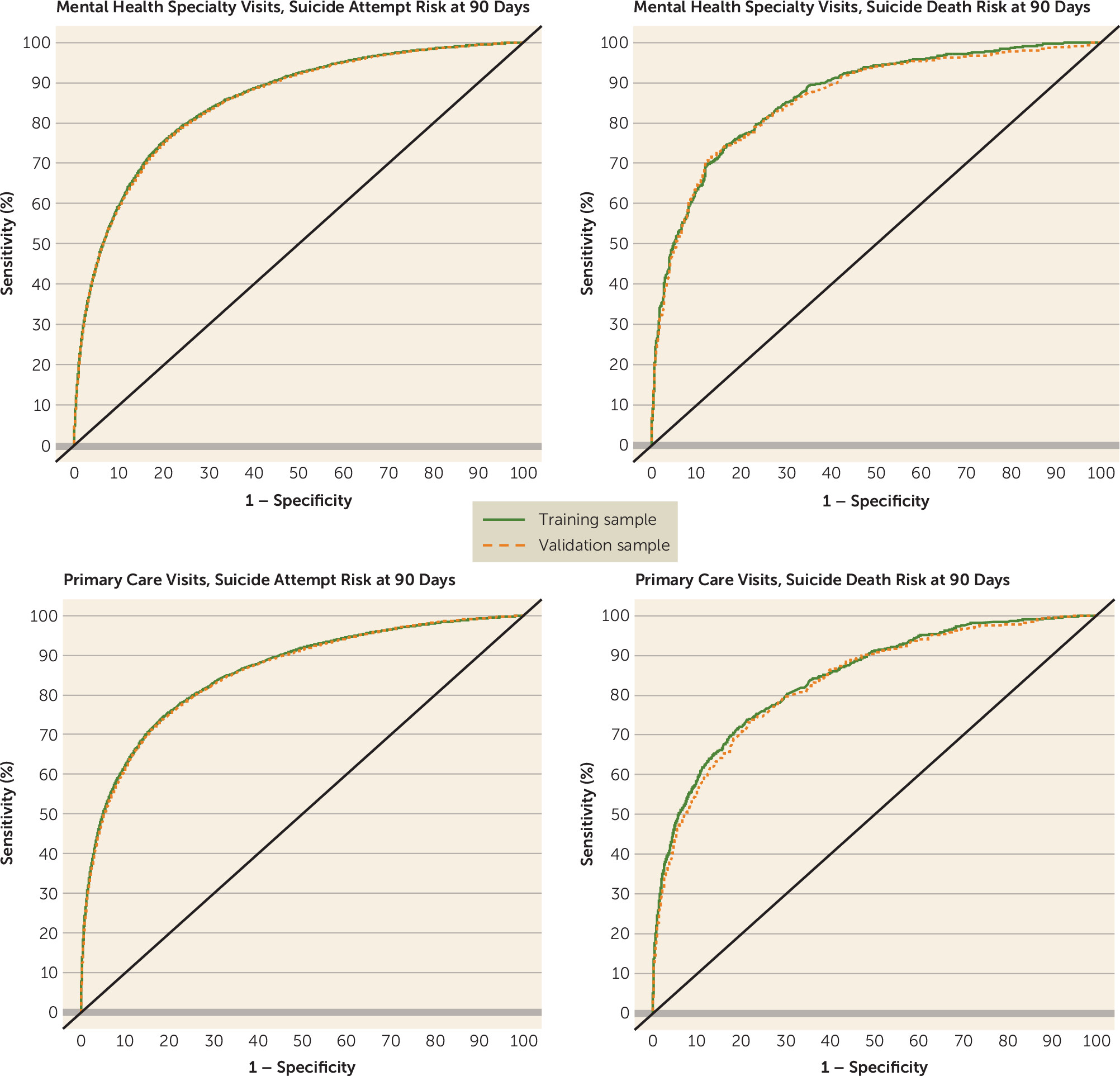

Results

| Characteristic | Mental health specialty, training sample (N) | % | Mental health specialty, validation sample (N) | % | Primary care, training sample (N) | % | Primary care, validation sample (N) | % |
|---|---|---|---|---|---|---|---|---|
| Visits | 6,679,128 | | 3,596,725 | | 6,297,465 | | 3,387,741 | |
| Female | 4,157,997 | 62 | 2,239,213 | 62 | 3,872,830 | 61 | 2,083,424 | 61 |
| Age group (years) | | | | | | | | |
| 13–17 | 671,313 | 10 | 360,619 | 10 | 250,878 | 4 | 135,070 | 4 |
| 18–29 | 1,118,492 | 17 | 603,044 | 17 | 822,668 | 13 | 442,774 | 13 |
| 30–44 | 1,744,704 | 26 | 939,431 | 26 | 1,337,686 | 21 | 720,878 | 21 |
| 45–64 | 2,453,509 | 37 | 1,321,986 | 37 | 2,466,992 | 39 | 1,326,237 | 39 |
| 65 or older | 691,110 | 10 | 371,645 | 10 | 1,419,241 | 23 | 762,782 | 23 |
| Race | | | | | | | | |
| White | 4,562,203 | 68 | 2,455,211 | 68 | 4,162,033 | 66 | 2,237,952 | 66 |
| Asian | 302,231 | 5 | 162,400 | 5 | 379,910 | 6 | 204,272 | 6 |
| Black | 600,219 | 9 | 324,233 | 9 | 514,021 | 8 | 276,260 | 8 |
| Hawaiian/Pacific Islander | 74,473 | 1 | 40,118 | 1 | 103,420 | 2 | 55,833 | 2 |
| Native American | 65,309 | 1 | 35,332 | 1 | 69,425 | 1 | 37,717 | 1 |
| More than one or other | 38,223 | 1 | 20,485 | 1 | 43,445 | 1 | 23,391 | 1 |
| Not recorded | 1,036,470 | 16 | 558,946 | 16 | 1,025,211 | 16 | 552,316 | 16 |
| Hispanic ethnicity | 1,486,400 | 22 | 800,547 | 22 | 1,430,611 | 23 | 769,498 | 23 |
| Insurance type | | | | | | | | |
| Commercial group | 5,057,328 | 76 | 2,724,286 | 76 | 4,198,138 | 67 | 2,258,974 | 67 |
| Individual | 827,218 | 12 | 445,749 | 12 | 1,079,401 | 17 | 580,225 | 17 |
| Medicare | 363,598 | 5 | 194,773 | 5 | 576,184 | 9 | 310,001 | 9 |
| Medicaid | 213,573 | 3 | 114,767 | 3 | 297,710 | 5 | 160,063 | 5 |
| Other | 217,411 | 3 | 117,150 | 3 | 146,032 | 2 | 78,478 | 2 |
| Patient Health Questionnaire item 9 score recorded at | | | | | | | | |
| Index visit | 657,998 | 10 | 354,918 | 10 | 312,065 | 5 | 168,569 | 5 |
| Any visit in past year | 1,328,571 | 20 | 714,693 | 20 | 671,643 | 11 | 362,438 | 11 |
| Length of enrollment prior to visit | | | | | | | | |
| 1 year or more | 5,810,841 | 87 | 3,129,151 | 87 | 5,352,845 | 85 | 2,879,580 | 85 |
| 5 years or more | 3,772,409 | 56 | 2,031,916 | 56 | 3,542,358 | 56 | 1,907,063 | 56 |
| Visits followed by | | | | | | | | |
| Suicide attempt within 90 days | 41,470 | 0.62 | 22,329 | 0.62 | 16,302 | 0.26 | 8,688 | 0.26 |
| Suicide death within 90 days | 1,529 | 0.02 | 854 | 0.02 | 856 | 0.01 | 445 | 0.01 |

| Suicide Attempt or Death, by Care Setting | |
|---|---|
| Suicide attempt following: | |
| Mental health specialty visit (of 94 predictors selected) | Primary care visit (of 102 predictors selected) |
| Depression diagnosis in past 5 years | Depression diagnosis in past 5 years |
| Drug abuse diagnosis in past 5 years | Suicide attempt diagnosis in past 5 years |
| PHQ-9 item 9 score=3 in past year | Drug abuse diagnosis in past 5 years |
| Alcohol use disorder diagnosis in past 5 years | Alcohol abuse diagnosis in past 5 years |
| Mental health inpatient stay in past year | PHQ-9 item 9 score=3 in past year |
| Benzodiazepine prescription in past 3 months | Suicide attempt diagnosis in past 3 months |
| Suicide attempt in past 3 months | Suicide attempt diagnosis in past year |
| Personality disorder diagnosis in past 5 years | Personality disorder diagnosis in past 5 years |
| Eating disorder diagnosis in past 5 years | Anxiety disorder diagnosis in past 5 years |
| Suicide attempt in past year | Suicide attempt diagnosis in past 5 years with schizophrenia diagnosis in past 5 years |
| Mental health emergency department visit in past 3 months | Benzodiazepine prescription in past 3 months |
| Self-inflicted cutting/piercing in past year | Eating disorder diagnosis in past 5 years |
| Suicide attempt in past 5 years | Mental health emergency department visit in past 3 months |
| Injury/poisoning diagnosis in past 3 months | Injury/poisoning diagnosis in past year |
| Antidepressant prescription in past 3 months | Mental health emergency department visit in past year |
| Suicide death following: | |
| Mental health specialty visit (of 43 predictors selected) | Primary care visit (of 29 predictors selected) |
| Suicide attempt diagnosis in past year | Mental health emergency department visit in past 3 months |
| Benzodiazepine prescription in past 3 months | Alcohol abuse diagnosis in past 5 years |
| Mental health emergency department visit in past 3 months | Benzodiazepine prescription in past 3 months |
| Second-generation antipsychotic prescription in past 5 years | Depression diagnosis in past 5 years |
| Mental health inpatient stay in past 5 years | Mental health inpatient stay in past year |
| Mental health inpatient stay in past 3 months | Injury/poisoning diagnosis in past year |
| Mental health inpatient stay in past year | Anxiety disorder diagnosis in past 5 years |
| Alcohol use disorder diagnosis in past 5 years | PHQ-9 item 9 score=1 with PHQ-8 score |
| Antidepressant prescription in past 3 months | PHQ-9 item 9 score=3 with age |
| PHQ-9 item 9 score=3 with PHQ-8 score | Suicide attempt diagnosis in past 5 years with age |
| PHQ-9 item 9 score=1 with age | Mental health emergency department visit in past year |
| Depression diagnosis in past 5 years with age | PHQ-9 item 9 score=2 with age |
| Suicide attempt diagnosis in past 5 years with Charlson score | PHQ-9 item 9 score=3 with PHQ-8 score |
| PHQ-9 item 9 score=2 with age | Bipolar disorder diagnosis in past 5 years with age |
| Anxiety disorder diagnosis in past 5 years with age | Depression diagnosis in past 5 years with age |

| Risk Score Percentile Strata | Predicted Risk (%) | Actual Risk (%) | % of All Attempts | Standardized Event Ratio |
|---|---|---|---|---|
| Suicide attempts | | | | |
| Following a mental health specialty visit | | | | |
| >99.5th | 13.0 | 12.7 | 10 | 20.7 |
| 99th to 99.5th | 8.5 | 8.1 | 6 | 12.9 |
| 95th to 99th | 4.1 | 4.2 | 27 | 6.7 |
| 90th to 95th | 1.9 | 1.8 | 15 | 3.0 |
| 75th to 90th | 0.9 | 0.9 | 21 | 1.4 |
| 50th to 75th | 0.3 | 0.3 | 13 | 0.51 |
| <50th | 0.1 | 0.1 | 8 | 0.16 |
| Following a primary care visit with a mental health diagnosis | | | | |
| >99.5th | 8.6 | 8.0 | 15 | 30.5 |
| 99th to 99.5th | 4.1 | 4.2 | 8 | 16.3 |
| 95th to 99th | 1.6 | 1.6 | 25 | 6.2 |
| 90th to 95th | 0.7 | 0.7 | 13 | 2.6 |
| 75th to 90th | 0.3 | 0.3 | 18 | 1.2 |
| 50th to 75th | 0.1 | 0.1 | 12 | 0.49 |
| <50th | 0.04 | 0.04 | 9 | 0.17 |
| Suicide deaths | | | | |
| Following a mental health specialty visit | | | | |
| >99.5th | 0.654 | 0.694 | 12 | 24.6 |
| 99th to 99.5th | 0.638 | 0.595 | 11 | 21.5 |
| 95th to 99th | 0.162 | 0.167 | 25 | 6.3 |
| 90th to 95th | 0.068 | 0.088 | 16 | 2.3 |
| 75th to 90th | 0.031 | 0.029 | 16 | 1.1 |
| 50th to 75th | 0.014 | 0.015 | 13 | 0.54 |
| <50th | 0.003 | 0.003 | 6 | 0.12 |
| Following a primary care visit with a mental health diagnosis | | | | |
| >99.5th | 0.536 | 0.435 | 14 | 28.8 |
| 99th to 99.5th | 0.181 | 0.197 | 7 | 13.0 |
| 95th to 99th | 0.092 | 0.083 | 22 | 5.6 |
| 90th to 95th | 0.035 | 0.038 | 13 | 2.5 |
| 75th to 90th | 0.018 | 0.019 | 19 | 1.3 |
| 50th to 75th | 0.009 | 0.009 | 15 | 0.62 |
| <50th | 0.003 | 0.003 | 10 | 0.19 |
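
The footnote that defines the standardized event ratio is not present in this text. As a rough sketch only, the snippet below assumes the ratio is a stratum's share of all events divided by its share of all visits, which is an interpretation and not a definition taken from the article. The function name `event_ratio` and the stratum widths (derived from the percentile bounds) are illustrative; the event shares are the rounded values tabulated above for suicide attempts following a mental health specialty visit.

```python
def event_ratio(share_of_events_pct, share_of_visits_pct):
    """Assumed definition: share of events divided by share of visits."""
    return share_of_events_pct / share_of_visits_pct


# (stratum, % of all attempts from the table, % of visits implied by the percentile bounds)
strata = [
    (">99.5th", 10, 0.5),
    ("99th to 99.5th", 6, 0.5),
    ("95th to 99th", 27, 4.0),
    ("90th to 95th", 15, 5.0),
    ("75th to 90th", 21, 15.0),
    ("50th to 75th", 13, 25.0),
    ("<50th", 8, 50.0),
]

ratios = {name: event_ratio(events, visits) for name, events, visits in strata}

for name, value in ratios.items():
    print(f"{name:>15}: {value:.2f}")
```

Under this assumption the computed ratios track the tabulated column closely but not exactly, which is expected because the event shares in the table are rounded to whole percentages.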

| Risk Score Percentile Cut-Points | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) |
|---|---|---|---|---|
| Suicide attempts | | | | |
| Following mental health specialty visits | | | | |
| >99th | 16.8 | 99.1 | 10.4 | 99.4 |
| >95th | 43.7 | 95.2 | 5.4 | 99.6 |
| >90th | 58.3 | 90.3 | 3.6 | 99.7 |
| >75th | 79.2 | 75.2 | 2.0 | 99.8 |
| >50th | 92.1 | 50.0 | 1.1 | 99.9 |
| Following primary care visits with a mental health diagnosis | | | | |
| >99th | 23.5 | 99.1 | 6.1 | 99.8 |
| >95th | 48.2 | 95.1 | 2.5 | 99.9 |
| >90th | 61.0 | 90.1 | 1.6 | 99.9 |
| >75th | 79.1 | 75.1 | 0.8 | 99.9 |
| >50th | 91.4 | 50.1 | 0.5 | 99.9 |
| Suicide deaths | | | | |
| Following mental health specialty visits | | | | |
| >99th | 23.1 | 99.0 | 0.62 | 99.9 |
| >95th | 48.1 | 95.0 | 0.26 | 99.9 |
| >90th | 64.3 | 90.0 | 0.17 | 99.9 |
| >75th | 80.4 | 75.1 | 0.08 | 99.9 |
| >50th | 94.0 | 50.0 | 0.05 | 99.9 |
| Following primary care visits with a mental health diagnosis | | | | |
| >99th | 20.9 | 99.0 | 0.31 | 99.9 |
| >95th | 43.1 | 95.0 | 0.13 | 99.9 |
| >90th | 55.7 | 90.0 | 0.08 | 99.9 |
| >75th | 74.8 | 75.1 | 0.05 | 99.9 |
| >50th | 90.3 | 50.0 | 0.03 | 99.9 |
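
To make the link between these columns concrete, the sketch below applies Bayes' rule to recover PPV and NPV from sensitivity, specificity, and event prevalence. It is an illustration, not the authors' computation. It assumes the classification figures were computed in the validation sample, and it takes the prevalence from the sample characteristics table (22,329 suicide attempts within 90 days among 3,596,725 mental health specialty visits). The function name `predictive_values` is hypothetical.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) from sensitivity, specificity, and prevalence via Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    true_neg = specificity * (1.0 - prevalence)
    false_neg = (1.0 - sensitivity) * prevalence
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Assumption: prevalence of a suicide attempt within 90 days of a mental
# health specialty visit, taken from the validation sample counts.
prevalence = 22_329 / 3_596_725

# Rounded sensitivity and specificity at the >99th percentile cut-point.
ppv, npv = predictive_values(0.168, 0.991, prevalence)
print(f"PPV = {ppv:.1%}, NPV = {npv:.1%}")
```

With these rounded inputs the PPV lands close to the tabulated 10.4%. The NPV can differ from the table in the last digit because sensitivity and specificity are themselves rounded. The example also shows why PPV stays low even at high specificity: the event is rare, so false positives outnumber true positives.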

Discussion

Potential Limitations

Methodologic Considerations

Context

Clinical Implications

Footnote

