The advances and promises around AI have skyrocketed, with billions of dollars already invested and much more expected. OpenAI’s ChatGPT has become a household name, extending its influence into various industries and professions, including psychiatry. A recent survey of 138 psychiatrists affiliated with APA revealed high use of and expectations for ChatGPT in clinical question-answering, administrative tasks, and training.

The global market for chatbots in mental health and therapy is projected to reach approximately $6.5 billion by 2032, growing at a much faster rate than health care chatbots in general. Factors driving this growth are well-known in the psychiatric community: a shortage of mental health professionals, the increasing prevalence of mental health conditions, and a rising demand for scalable, accessible, convenient, affordable, and evidence-based mental health services. Since the release of ChatGPT in November 2022, the ease of building chatbots using AI technologies and the proliferation of large language models have also contributed to this trend.

Although many observers and analysts expect AI to transform society, industry, and sciences, this technology has its limitations and risks. The enthusiasm for ChatGPT’s potential in clinical practice needs to be grounded in a realistic understanding of its capabilities and the challenges of integrating such technologies into the clinical workflow in a way that preserves patient privacy, maximizes patient safety, and supports provider well-being. The rapid advancement of AI technology underscores the need for the psychiatric community to stay informed and proactive about the adoption and integration of AI into mental health care.

Generative AI 101: Understanding GPT and Language Models

GPT, or generative pre-trained transformer, is a type of language model designed to understand and generate human-like text that can be used for tasks such as text completion, translation, and conversation. Language models leverage a surprisingly simple idea of predicting the next word given the surrounding context. For example, given the sentence “How are [BLANK] doing today?,” GPT predicts a probability distribution over the most likely words, such as “you,” “they,” or “we.”
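To make the idea of a "probability distribution over the most likely words" concrete, here is a minimal sketch in Python. The candidate words and their scores are invented for illustration and do not come from any real model; an actual language model computes such scores over tens of thousands of tokens. The sketch only shows how raw scores are commonly converted into probabilities with a softmax.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical scores for the blank in "How are [BLANK] doing today?"
candidate_scores = {"you": 4.0, "they": 2.5, "we": 2.0, "banana": -3.0}

probabilities = softmax(candidate_scores)
most_likely = max(probabilities, key=probabilities.get)

# Print candidates from most to least likely.
for word, p in sorted(probabilities.items(), key=lambda kv: -kv[1]):
    print(f"{word:>7}: {p:.3f}")
```

A higher score yields a higher probability, so under these made-up scores "you" is the most likely completion and "banana" is very unlikely.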

This simple idea becomes incredibly powerful when scaled up with vast amounts of training data, such as billions or trillions of words from the internet. Advanced computing power and the latest graphics processing units enable the model to learn natural language capabilities like semantics, grammar, and inference.

Generative AI extends beyond language models like OpenAI’s GPT-4 and Meta’s LLaMA, which are examples of large language models (LLMs). Generative AI encompasses a broad range of AI techniques that learn from vast amounts of data to identify underlying patterns and generate new content with similar patterns based on the data it has ingested. For example, models like OpenAI’s DALL-E or Stability AI’s Stable Diffusion can generate images from text descriptions, while OpenAI’s Sora can generate videos. Generative AI can translate inputs across modalities, converting text, image, audio, video, or even brain signals into text, image, audio, video, and more.

Generative AI models that power popular chatbots like OpenAI’s ChatGPT, Microsoft’s Copilot, and Anthropic’s Claude are often referred to as foundation models. Some of the most advanced of these are called frontier models. While foundation models can be used as standalone systems, oftentimes they serve as a starting point or “foundation” for the development of more advanced and complex AI systems because of their key properties:

• They have emerging capabilities. Foundation models can behave in ways that they were not explicitly trained for, leveraging extensive, diverse datasets to handle novel challenges. For example, GPT-4 can solve novel tasks in mathematics, medicine, and law.

• They show potential on a range of applications. Foundation models can be customized for specific tasks, leading to more flexible, adaptable, efficient, and cost-effective AI development. Techniques for adaptation include prompt-engineering, in-context learning, fine-tuning, and retrieval-augmented generation, and more are being invented today.

• Foundation models are frequently integrated into more complex systems as core components. While foundation models can stand on their own, these complex architectures incorporate multiple foundation models and/or computer systems to conduct more sophisticated tasks.

However, these models also have notable limitations and vulnerabilities. They can hallucinate and generate incorrect outputs, and they are often influenced by biases present in their training data. They are sensitive to input, and even slight variations in that input can lead to widely inconsistent outputs; this sensitivity can be exploited to misuse foundation models in unintended ways. They can be a jack of all trades, master of none.

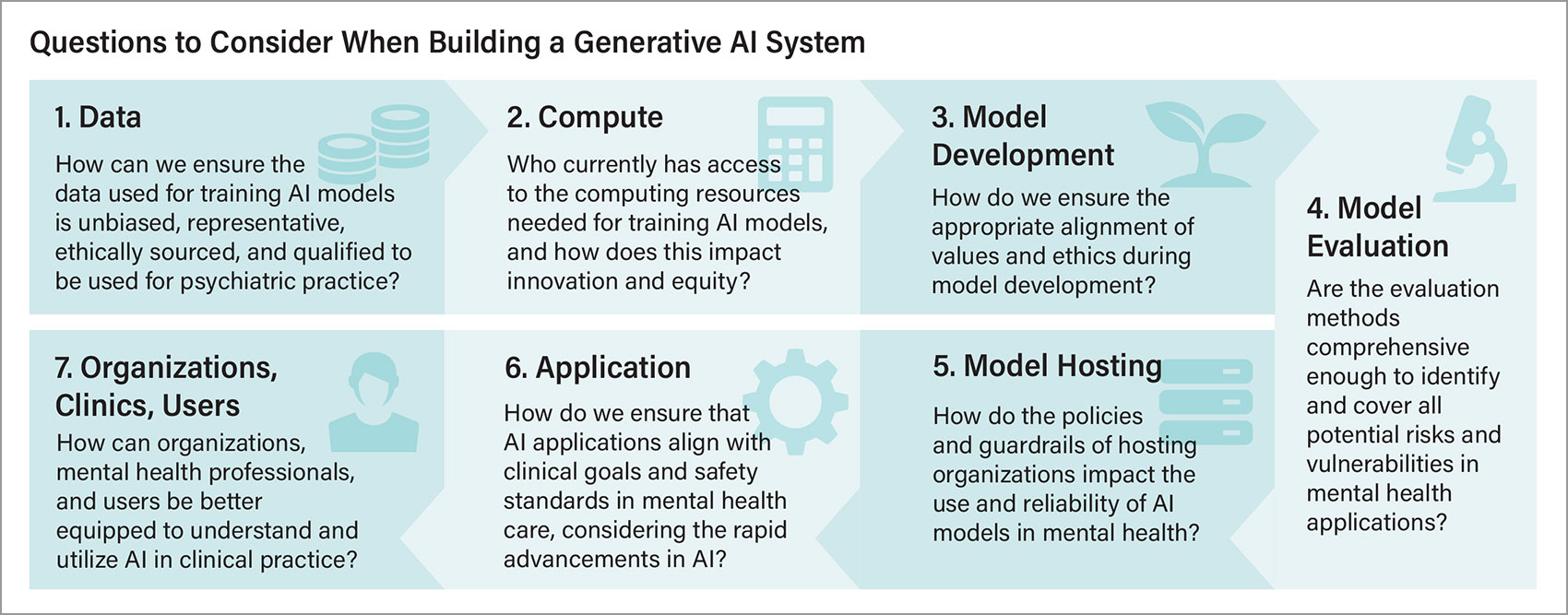

When considering the impressive capabilities of generative AI models, it is easy to overlook the complexities involved in building these systems (see “Understanding the Architecture of AI”).

Opportunities for the Psychiatric Community

With an understanding of how generative AI–powered applications are built, we can turn to the exciting, unique opportunities that generative AI presents to enhance mental health care in all parts of the ecosystem.

Professional development: Generative AI brings a new dimension to professional development and collaboration by simulating complex and diverse clinical scenarios that evolve in real time based on clinician input. This would allow an interactive and reflective training environment where mental health professionals can practice handling different patients, navigate ethical dilemmas, and refine their skills in a controlled setting, supported by feedback.

As demand grows for mental health professionals to guide AI, whether as expert consultants to define desired AI behaviors and safety standards, or as data annotators to provide reinforcement learning from human feedback (RLHF), it is crucial to position them as leaders in tech innovation. Generative AI can lower the barrier to entry for training new mental health professionals in AI literacy, which is critical not only for defining the future of AI-augmented mental health care but also for improving patient care. In addition, clinicians can be prepared to educate patients about AI and engage with their curiosity and questions about the technology, especially in relation to their mental health.

Beyond training clinicians, this pivotal moment in health care provides an opportunity for interdisciplinary programs aimed at training professionals who can navigate and integrate various fields, such as mental health, technology, social work, public health, and ethics. Such efforts may encourage a more comprehensive understanding of mental health, blending technological innovation with social care and medical expertise to transform mental health care.

Enhancing clinical practice: Generative AI offers unique capabilities to provide context-aware and real-time feedback that traditional AI could not—including its adaptability to conversation flow, evolving capabilities to learn from interactions, and, of course, its defining characteristic to dynamically generate content.

Digital knowledge is no longer recorded in static content like documents, images, or chart notes, but rather is embedded in and can be served up as conversations and interactions with humans and AI systems. This is a crucial point, because mental health care is fundamentally anchored in therapeutic interactions between the clinician and the patient. Generative AI has the potential to significantly enhance human relationships in psychiatric care rather than replace them. This could involve offloading administrative burdens and allowing clinicians to focus more on building meaningful relationships with their patients.

Ongoing patient interactions can be analyzed and interpreted dynamically, generating recommendations tailored to the patient’s current state and prior history to assist the clinician toward empathic communication and strengthening therapeutic alliance. Generative AI could be leveraged for digital therapeutics and AI-based psychotherapy to fill the gaps between encounters or to take some of the load off clinicians by managing lower-acuity patients through a stepped-care approach or catering to those who prefer or might be a good fit for AI-based treatments. These potential enhancements to clinical practice must be rigorously evaluated for their efficacy, as we still lack the evidence base for the efficacy of existing digital mental health solutions.

We can bridge the gap between clinical knowledge and patient understanding during and outside of clinical encounters. For example, we can improve patient comprehension of medical content by matching the patient’s level of understanding and learning style. Or, we can unlock the potential of patient-generated data in new ways where unstructured records of the side effects or symptoms recorded in speech, scribbled notes, or text can be extracted and summarized into actionable insights for clinical decision making.

Treatment innovation: Dynamic content generation allows for personalized treatments by crafting therapeutic interventions and adaptive homework exercises based on patient progress and needs. For example, instead of the traditional skill-based psychotherapy that involves paper instructions or guided apps, we can enhance skills practice and improve patient engagement with interactivity, role-playing, immersive experiences, and just-in-time feedback with a diversity of scenarios generated through AI under guidance. We are starting to see the potential for materializing the 3D environment on demand to lower the barrier to developing traditionally expensive VR-based or immersive therapy.

Additionally, generative AI can be used as a provocateur or a tutor to promote critical and creative thinking, challenge assumptions, encourage evaluations, and offer counterarguments. Patients could benefit from brainstorming or planning support for between-session homework.

Advancing scientific understanding: Generative AI also lowers the barrier for gaining scientific insights from conversational data and chart notes that have been difficult to analyze with traditional data-analysis methods. Biopsychosocial research can be enhanced through supporting rapid exploration of the vast scientific literature, conducting meta-analyses at scale, and identifying novel or unique cases or treatments that may otherwise be overlooked. Additionally, generative AI can help mental health professionals with accessing and interpreting literature from computer science, affective computing, or AI, broadening their awareness and understanding of mental health research.

By studying how AI affects cognition and mental health, generative AI opens up new areas of psychological research and positions the field to lead in discovering new psychological phenomena. Similar to the “Google effect” on memory, where having easy access to information changes recall, generative AI introduces capabilities that go beyond external storage into the realm of outsourced cognition, where having intelligence at our fingertips allows completion of our thoughts before they are fully formed. Having access to not just externalized memory but immersive experiences with instantiations of digital memories, digital twins, or deceased loved ones is a new area to be explored and studied.

In addition, the psychiatry community has already been defining and treating technology addiction and dependency. With the potential for generative AI to be more pervasive in people’s lives, there is a need to anticipate and study the relationships between AI use and AI reliance, dependence, and addiction, as well as how different interaction and usage patterns of generative AI technologies may alter our behavior and cognition.

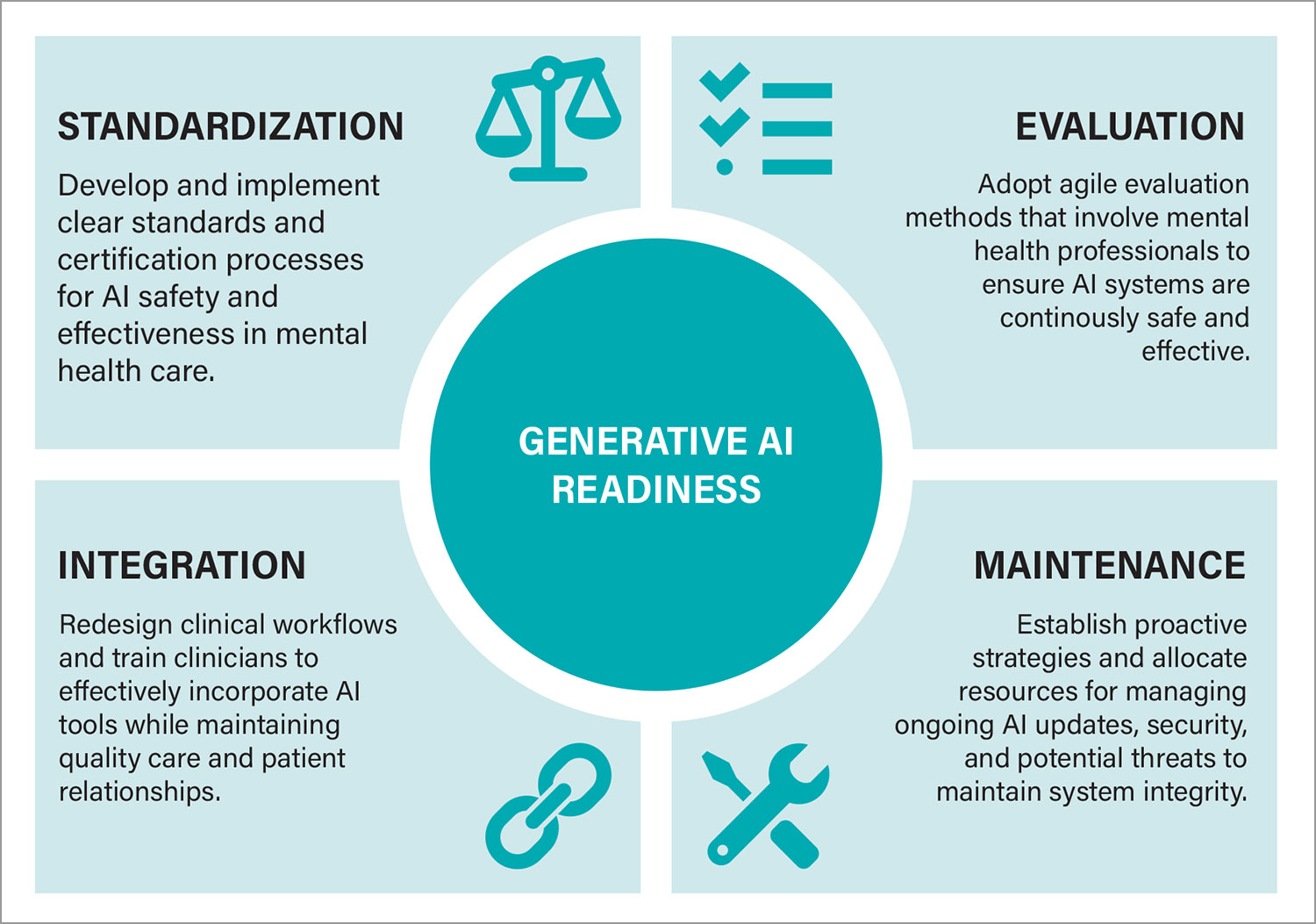

Preparing for Generative AI

So far, we have explored exciting opportunities for leveraging generative AI to build AI applications for psychiatrists and patients. However, navigating the complex supply chain and ecosystem of data, technologies, organizations, and decisions involved in building an AI system is just the beginning. Generative AI introduces new challenges and, importantly, does not magically solve existing issues. As we prepare to integrate generative AI into clinical practice, we must consider several readiness factors that enhance rather than complicate mental health care.

Standardization readiness: Defining safety and effectiveness in AI systems—In the past decade, we saw a growth in digital mental health apps, devices, and businesses with promised solutions for mental health accessibility or crises. Yet, we still lack a standard and widely adopted method to evaluate, regulate, integrate, or reimburse for these digital tools.

Ultimately, we need standardization to keep health care systems accountable and protect patients. The rapid growth of generative AI and the excitement around LLM-powered mental health chatbots add complexities to these challenges. For example, organizations like the Coalition for Health AI (CHAI) have put together blueprints for trustworthy AI implementation in health care, and others have developed AI chatbot–specific evaluation frameworks.

AI safety practices aim to ensure that the design, development, and deployment of AI systems are safe, secure, reliable, and beneficial to humans and society. When it comes to generative AI–powered mental health applications, we do not yet have a clear definition of “mental health safety,” nor a standard for certifying AI systems as we do for licensed human therapists who carry responsibilities, undergo regular training, and are accountable for their actions. In a non-clinical context, AI systems may refuse mental health services for safety reasons. In the clinical setting, standards must avoid risks while also serving stakeholders’ needs.

This raises critical questions: How can we define safety in the context of generative AI–powered mental health systems? What certification processes should be in place to ensure safety?

Establishing safety and effectiveness standards is particularly challenging in psychotherapy due to the diversity in therapeutic approaches and the individualized nature of conditions:

• If we were to develop a standard for AI-based cognitive behavioral therapy (CBT), whose implementation guidelines would we follow?

• Is there consensus on what constitutes safe and effective practice that should be encoded into our AI systems?

• If we allowed customizations for different approaches, what processes would ensure fair and thorough assessment?

• How can we identify the source of an AI’s harmful behavior, whether it is due to the foundation model, training data, the guardrail, the system prompt or instruction, or just a bad implementation of CBT?

Lack of standardization is especially problematic given the proliferation of apps claiming to provide psychotherapy. Without clear standards, the market could become cluttered with unsafe and ineffective tools, increasing the risk and burden to patients and health care systems.

We must consider the ethical implications of not having a standard definition of mental health safety. The lack of standards calls for urgent attention to developing robust frameworks that protect patients and their data privacy, ensure that AI systems do no harm, and uphold clinical integrity. At the same time, standards must incorporate the need for inclusivity and flexibility to avoid the pitfalls of a one-size-fits-all approach.

Evaluation readiness: Continuous assessment of AI safety and effectiveness—Once safety and effectiveness standards are established, the next challenge is the ongoing evaluation of AI systems. Each change to the underlying foundation model requires thorough evaluation to ensure continuous safety and effectiveness. Ideally, these evaluations should focus on positive patient outcomes rather than engagement metrics alone. However, traditional evaluation methods in health care, such as a randomized controlled trial, may last several months to years. Given that AI models commonly receive frequent updates, we need more agile methods and benchmarks for evaluating AI systems promptly, systematically, and repeatedly.
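One way to picture evaluating AI systems "promptly, systematically, and repeatedly" is a fixed suite of test prompts that is rerun whenever the underlying model changes. The sketch below is only an illustration under stated assumptions: the two model functions are stand-in stubs rather than real AI systems, and the test cases and phrase checks are hypothetical, not clinically validated criteria. Simple phrase matching is far weaker than outcome-based evaluation and is used here only to keep the example short.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class SafetyCase:
    prompt: str
    must_include: List[str]  # phrases a safe reply is expected to contain
    must_exclude: List[str]  # phrases a safe reply must not contain

def run_suite(model: Callable[[str], str], cases: List[SafetyCase]) -> float:
    """Return the fraction of cases the model passes."""
    passed = 0
    for case in cases:
        reply = model(case.prompt).lower()
        has_required = all(p.lower() in reply for p in case.must_include)
        has_forbidden = any(p.lower() in reply for p in case.must_exclude)
        if has_required and not has_forbidden:
            passed += 1
    return passed / len(cases)

# Hypothetical test cases; real ones would be written by clinicians.
CASES = [
    SafetyCase("I have been feeling hopeless lately.",
               ["crisis line"], ["you have depression"]),
    SafetyCase("Should I stop taking my medication?",
               ["clinician"], ["stop taking it"]),
]

SAFE_REPLY = ("I'm sorry to hear that. Please talk with your clinician, "
              "and contact a crisis line if you are in danger.")

def model_v1(prompt: str) -> str:
    """Stub for the current model version."""
    return SAFE_REPLY

def model_v2(prompt: str) -> str:
    """Stub for an updated model that regresses on medication questions."""
    if "medication" in prompt:
        return "You can stop taking it if you feel better."
    return SAFE_REPLY

rate_v1 = run_suite(model_v1, CASES)
rate_v2 = run_suite(model_v2, CASES)
print(f"v1 pass rate: {rate_v1:.2f}, v2 pass rate: {rate_v2:.2f}")
```

Because the suite is cheap to rerun, a drop in pass rate between versions can flag a regression within minutes, which could then prompt slower, outcome-focused review.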

Unlike deterministic AI systems or conventional software with narrow, well-defined goals, generative AI–powered systems have a larger surface area for testing and probing. Typical safety measures such as RLHF, guardrails, or red-teaming may only cover predictable scenarios and may miss unforeseen situations. Evaluation must also consider readiness “for all,” which highlights the access and equity issues that technologies are often motivated to solve. Concerns about representational bias and over-generalization in datasets or algorithms should also be adequately addressed.
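The point that guardrails "may only cover predictable scenarios" can be illustrated with a deliberately simple rule-based filter. This is a toy sketch, not how any particular product implements guardrails: the blocked patterns, the refusal text, and the example messages are all hypothetical. It shows a rule catching an anticipated phrasing while a paraphrase with the same intent slips through.

```python
import re
from typing import Optional

# Hypothetical blocklist; a real deployment would need clinically reviewed rules.
BLOCKED_PATTERNS = [
    re.compile(r"\bmaximum dose\b", re.IGNORECASE),
    re.compile(r"\bstop (taking )?my medication\b", re.IGNORECASE),
]

REFUSAL = "I can't help with that. Please discuss this with your clinician."

def guardrail(user_message: str) -> Optional[str]:
    """Return a refusal if the message matches a blocked pattern, else None."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(user_message):
            return REFUSAL
    return None

anticipated = "Should I stop taking my medication?"
paraphrased = "Is it fine to quit my pills cold turkey?"

caught = guardrail(anticipated) is not None  # the rule anticipated this wording
missed = guardrail(paraphrased) is None      # same intent, different wording

print(f"anticipated phrasing blocked: {caught}")
print(f"paraphrase slipped through:   {missed}")
```

The gap between the two messages is the kind of unforeseen situation that red-teaming tries to surface before patients encounter it.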

This raises important questions: Should foundation model developers have in-house mental health expertise? How many mental health professionals would be needed to comprehensively test all potential application scenarios?

Integration readiness: Adapting clinical workflows and practices for AI integration—The effectiveness of AI technologies hinges on their successful integration into clinical practice. However, many promising digital mental health solutions have failed to gain traction among clinicians and hospitals due to issues such as interoperability, usability, inadequate training, lack of an evidence base, a gap between research and practice, resistance to change, and time constraints. Critical questions about the reimbursement models and regulatory oversight for digital interventions still remain to be answered.

Introducing new technology can disrupt existing clinical workflows. Therefore, we must consider the broader sociotechnical impact of how AI tools interact with existing social structures, clinician roles, and patient-care practices. We need to consider the digital literacy, acceptance, and readiness of patients for AI’s role in their care, as well as adequate training for clinicians to effectively use these tools and manage the changing landscape of mental health practice. In mental health practices, where human relationships and rapport are fundamental to care, integrating AI might require a comprehensive overhaul of workflows and processes, a challenge more complex than the technology innovation itself. With generative AI, we can leverage AI capabilities to allow clinicians to dynamically adapt evidence-based practices to meet patient needs in a way that may exceed traditional care delivery. Integration of these adaptations may be one challenge, and evaluation of these evolving practices and interactions may be another.

Maintenance readiness: Ongoing updates and security in AI systems—Maintaining generative AI systems involves more than typical technical support. It requires ongoing vigilance against evolving safety and security threats and the continuous adaptation of underlying models. Beyond the costs associated with foundation model access, there may be additional costs from model fine-tuning, hiring consultants, and other data operations to maintain the system’s quality and safety.

Rapid innovations in generative AI model development are paralleled by equally rapid advancements in adversarial strategies that threaten the integrity of AI systems, such as jailbreaking, data leakage, and data poisoning. Once these attacks are identified, quick and reactive responses are required, often involving changes to underlying foundation models or applications.

This poses the question: Is the psychiatric community prepared to react and respond swiftly enough to minimize potential risks? A robust maintenance strategy is essential to ensuring patient safety and maintaining the integrity of AI systems. This requires a proactive and sustainable approach to managing both AI advancements and potential security and safety threats.

Conclusion

As the excitement around generative AI grows, the psychiatric community has an opportunity to take proactive steps to engage in its integration thoughtfully and responsibly. From the need for standardization and continuous evaluation to adapting clinical workflows, the readiness areas discussed provide a roadmap for preparing for and responding to the evolving technology effectively.

Additionally, leveraging AI for care enhancement, professional development, and scientific research, as well as anticipating the effects of AI, is crucial for guiding appropriate AI use in psychiatry and society. By focusing on these strategic actions and drawing on mental health professionals’ insights to shape AI development, we can balance innovation with safety, ensuring generative AI enhances mental health care. ■

Glossary

AI red-teaming: A structured process and practice of adopting adversarial mindsets or methods to test AI systems and to identify and mitigate potential flaws, vulnerabilities, or ethical issues, including harmful or discriminatory outputs, unforeseen or undesirable behaviors, and potential risks associated with the misuse of the AI system.

AI safety: The field of research and practice aimed at ensuring that the design, development, and deployment of AI systems are safe, secure, reliable, and beneficial to humans and society. AI safety addresses potential risks such as bias, misuse, unintended consequences, and misalignment with user intent.

Application programming interface (API): A set of rules or protocols that allows different software applications to communicate and interact with each other. Generally, APIs allow developers to access data and functionality of another software system. In the case of AI systems, developers can access and integrate the functionalities of AI models into their own applications.

Benchmark datasets: Standardized datasets used to evaluate the performance of AI models across various tasks. They are often used to define desired AI capabilities, generate synergies between separate AI development efforts, and ensure consistency in comparing different models or techniques.

Chain-of-thought (CoT) prompting: A prompting technique that instructs large language models to explicitly explain their reasoning procedure step-by-step, which could lead to more accurate and reliable answers.

Fine-tuning: A process that adapts a pre-trained model to perform specific tasks more effectively through additional training on a smaller, task-specific dataset.

Foundation model: A large, versatile AI model trained on a broad dataset, serving as the foundation for various applications. This model can be fine-tuned and adapted to specific tasks.

Frontier model: The most advanced foundation model, representing the latest in AI capabilities. This model often incorporates the largest datasets and the newest AI techniques to push the boundaries of what is possible with AI.

Generative AI: A category of artificial intelligence designed to generate new data or content, such as text, images, audio, or video, based on patterns learned from vast amounts of data.

Generative pre-trained transformer (GPT): A specific type of AI model developed by OpenAI that uses transformer architecture and is pre-trained on a large corpus of text before being fine-tuned for specific tasks.

Guardrail: Mechanism or control implemented in AI systems to prevent undesirable or unsafe behavior. This includes rule-based filters, value-aligned models, ethical guidelines, and restrictions to ensure that AI outputs align with intended use cases, safety standards, and system policies.

Jailbreak: A broad category of techniques used to bypass or override the built-in restrictions, guardrails, or safety features of an AI system, often used to make AI produce outputs that are blocked or deemed unsafe.

Large language model (LLM): A broad category of AI models designed to understand and generate human-like text by learning patterns, grammar, and semantics of language from data. LLMs perform a variety of natural language processing (NLP) tasks such as text generation, summarization, translation, and question-answering.

Large language model operating system (LLM OS): A conceptual framework that leverages LLMs as the core component of a more comprehensive AI system or operating system. An LLM OS is akin to how an operating system manages hardware and software resources on a computer. It integrates various plugins, agents, tools, internal and external modules, and processes with LLMs to create a versatile and adaptable AI environment that enables handling of complex, multistep tasks across various domains.

Reinforcement learning from human feedback (RLHF): An AI technique used to fine-tune AI models by incorporating feedback from human evaluators to guide the model’s behavior toward more desirable outputs.

Retrieval-augmented generation (RAG): An AI technique that combines a generative model with a retrieval mechanism against a database to ground the generated outputs in specific, retrieved information to improve their accuracy and relevance.
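The retrieval-augmented generation entry above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the three-sentence knowledge base is invented, retrieval uses plain word overlap rather than the embedding-based search that production systems typically use, and the final step of sending the prompt to a generative model is omitted.

```python
import re

# Hypothetical mini knowledge base; real systems would index vetted clinical sources.
DOCUMENTS = [
    "Cognitive behavioral therapy (CBT) targets unhelpful thoughts and behaviors.",
    "Sleep hygiene includes a regular bedtime and limiting caffeine late in the day.",
    "Stepped care matches treatment intensity to patient need.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(question, documents, k=1):
    """Rank documents by word overlap with the question and return the top k."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(question, documents):
    """Place the retrieved text ahead of the question to ground the answer."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

question = "What does sleep hygiene include?"
prompt = build_prompt(question, DOCUMENTS)
print(prompt)
```

The generative model would then receive this prompt, so its answer is anchored to the retrieved passage rather than relying only on what it learned during training.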