Mobile health (mHealth) includes the use of mobile devices, such as mobile phones, monitoring devices, personal digital assistants, and other wireless devices, in medical care, and it is a promising approach to providing support services. mHealth may improve the monitoring of mental health conditions, the offering of peer support, the provision of psychoeducation (i.e., information about conditions), and the delivery of evidence-based practices (1–4). mHealth technologies offer an opportunity to overcome barriers to services, such as difficulties with transportation to and from appointments, and they may also address linguistic and literacy barriers (5,6). Moreover, the COVID-19 pandemic has expanded the need to offer mHealth. mHealth has the potential to provide care for service users beyond the pandemic and is rapidly transforming mental health care delivery (7).

However, some limitations of mHealth must be overcome before it can be recognized as a credible and effective service for achieving positive mental health outcomes. Many “well-being” apps on the market do not meet academic standards for clinical interventions, and their content is not informed by evidence-based research (8). This disconnect between availability and the evidence base is also apparent for apps targeting specific mental disorders, including bipolar disorder, posttraumatic stress disorder, and bulimia nervosa (9). Broader safety concerns include overreliance on apps and increased user anxiety when apps lead to self-diagnosis (9). One systematic review of consumer-facing mHealth apps found considerable safety concerns with the quality of information (e.g., incorrect or incomplete information and inconsistencies in content) and with app functionality (e.g., gaps in features, lack of user input, and other limitations) (10). Many such apps lack empirical support, and users may be subjected to deceptive marketing practices. For some individuals, the benefits of using apps may be largely a placebo response. Apps also have the potential to harm certain high-risk populations.

Privacy is also a particular concern, because marginalized groups can be more susceptible to the effects of privacy violations than other groups. For example, people with mental health challenges may avoid or delay treatment because they fear sharing stigmatizing information (11) or fear potential repercussions from sharing information, such as being treated differently or losing their job (11,12). Additionally, digital technologies may increase coercion in psychiatric care (13). To improve the self-determination of service users who interact with mHealth, it is essential to increase transparency about the use of various technologies and to allow service users to opt out of services and to edit or delete their data; it is also important to train clinicians about the implications of using these technologies (13). Much more comprehensive risk assessment metrics are needed to regulate the production and recommendation of mHealth apps (14).

Furthermore, some groups may fail to benefit from mHealth despite having a high need for mental health services, including people from ethnically and racially disadvantaged groups, rural residents, those who are socioeconomically disadvantaged, and people with disabilities. For example, mental health conditions such as major depressive disorder and posttraumatic stress disorder are highly prevalent in Rwanda, especially among survivors of genocide (12). Mental health resources and facilities are scarce in many regions, but mHealth may offset this scarcity by offering services such as automated chatbots as part of a stepped care model, which automatically escalates a service user to the attention of a mental health provider when the user needs more intensive services.

In the United States, one in five adults (52.9 million in 2020) has a diagnosis of a mental disorder (15). Even though technology adoption is higher in the United States than in resource-poor nations, technology ownership levels are lower among disadvantaged U.S. groups. For example, 80% of White adults in the United States reported having home broadband, compared with 71% of Black adults and 65% of Hispanic adults (16). Although 79% of people in suburban communities and 76% of those in urban communities have access to broadband Internet, only 63% of people in rural communities do (17). Individuals living in rural communities also own disproportionately fewer smartphones and tablets (17). Similarly, 23% of individuals with disabilities in the United States do not access the Internet, nearly three times the percentage in the general population (8%) (18). Additionally, when individuals from specific groups, such as racially and ethnically disadvantaged groups in the United States, access in-person mental health care, they often receive lower-quality care than do individuals from groups that are not racially and ethnically disadvantaged (19). The same may be true for disadvantaged groups accessing care via mHealth; however, little is currently known about racial and ethnic disparities in the quality of mHealth care.

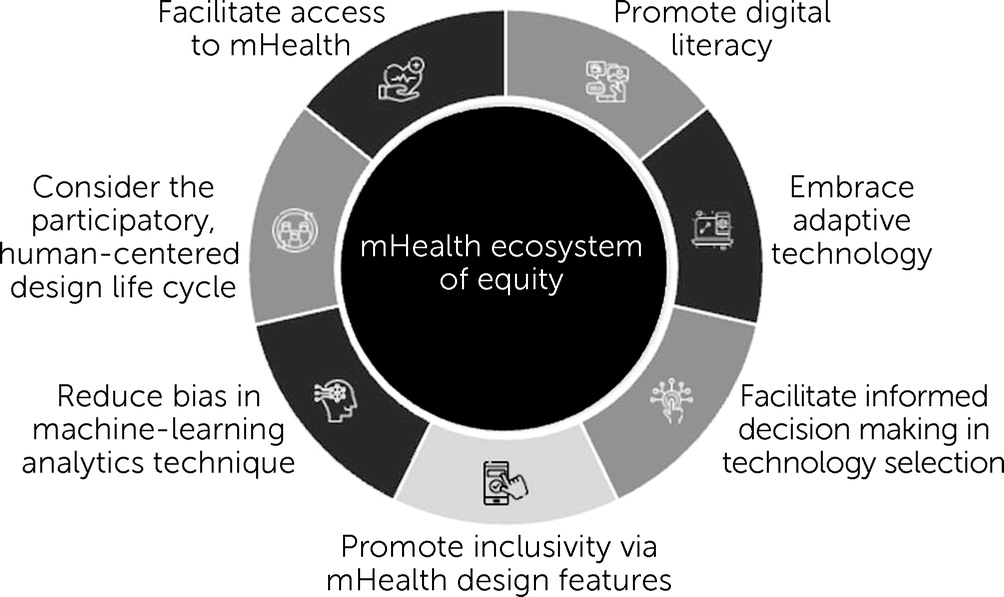

mHealth Ecosystem of Equity

A well-designed mHealth ecosystem that considers multiple elements of design, development, and implementation can give disadvantaged populations the opportunity to address inequities and can facilitate access to and uptake of mHealth. This article describes an equitable mHealth ecosystem to guide industry and nonindustry entities, scientists, administrators, policy makers, educators, clinicians, lay interventionists (e.g., peer support specialists), and service users in their efforts to make mHealth inclusive and equitable. We, the authors of this article, convened by e-mail over the course of 1 month and developed recommendations through discussion and collaboration. To ensure that all suggestions were documented and included, authors checked in with one another so that every author took part in forming the recommendations. This article proposes that the following principles and standards be included in the development of an mHealth ecosystem of equity: adopt a human-centered design, reduce bias in machine-learning analytical techniques, promote inclusivity via mHealth design features, facilitate informed decision making in technology selection, embrace adaptive technology, promote digital literacy through mHealth by teaching service users how to use the technology, and facilitate mHealth access to improve health outcomes (Figure 1).

Adopt a Human-Centered Design Approach

Adopting a human-centered design approach in the creation of mHealth products involves increasing the participation of service users, especially those from disadvantaged backgrounds, in the discovery, design, and development of mHealth apps to gain insight into their preferences and priorities. We hope that by identifying and addressing the needs of a diverse set of users, future mHealth products will achieve universal design. Furthermore, the optimal process for the discovery, development, testing, iteration, and implementation of mHealth solutions to mental health challenges lies at the intersection of traditional behavioral science research and design thinking (20–22). The traditional behavioral health approach to intervention development begins with professionals recognizing a problem and then creating a solution for it. However, the limited uptake, limited real-world effectiveness, and high disengagement observed with many mHealth interventions (23) highlight the limitations of this expert-driven approach. Design thinking offers an alternative. It integrates the scientific method with end-user engagement and experience to provide an evidence-based and humanistic foundation for problem solving. This method is directly relevant to mHealth development and testing because it places patients at the center of the process (20,22).

We therefore recommend that software developers incorporate a design-thinking approach that focuses on understanding the needs and experiences of service users and on building and testing solutions (e.g., prototypes) with them as partners throughout software development and implementation. Specifically, we recommend that product management teams use patient-centered approaches to obtain data that inform design decisions. Moreover, we recommend that clinical teams liaise regularly with service users, social workers, and certified peer support specialists to ensure continuous interaction, and that they use accountability measures of service user engagement that promote feedback throughout the process (24). For instance, the Quality of Patient-Centered Outcomes Research Partnerships instrument was coproduced by service users and scientists and is designed to improve the quality of community engagement research by providing feedback on the extent to which stakeholders report being involved in research activities (24).

Reduce Bias in Machine-Learning Analytical Techniques

Eliminating or reducing bias in artificial intelligence (AI) is increasingly important. Despite AI’s seemingly objective nature, bias and subjectivity can shape its findings in many subtle ways that undermine equity. Researchers and clinicians must be mindful of the nature and limitations of the data used to train algorithms and from which conclusions about service users’ health and well-being are drawn (24). One example is a commercial algorithm used in the U.S. health care system that uses health care costs as a proxy for health needs (25). Because less money is spent on Black service users than on White service users with the same level of need, the algorithm incorrectly concludes that Black service users are healthier than equally sick White service users (25). As a result, the needs of Black service users may be underestimated.
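The mechanism described above, using cost as a stand-in for need, can be illustrated with a toy cohort. This is a minimal sketch under invented assumptions: two groups with identical need distributions, a hypothetical 30% lower spending per unit of need for group B, and a rule that flags the top six patients for extra care. None of these numbers come from the study cited.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str
    need: int    # true health need (arbitrary units), unseen by the algorithm
    cost: float  # observed spending, used by the algorithm as a proxy for need


# Hypothetical assumption: group B receives 30% less spending at the same need.
SPENDING_PER_NEED = {"A": 1000.0, "B": 700.0}


def make_cohort() -> list:
    """Build two groups whose need distributions are identical (1 through 10)."""
    return [
        Patient(group, need, need * SPENDING_PER_NEED[group])
        for group in ("A", "B")
        for need in range(1, 11)
    ]


def select_top(patients: list, k: int, key) -> list:
    """Flag the k patients with the highest score for extra care."""
    return sorted(patients, key=key, reverse=True)[:k]


def share_of_group(selected: list, group: str) -> float:
    return sum(p.group == group for p in selected) / len(selected)


cohort = make_cohort()
by_cost = select_top(cohort, 6, key=lambda p: p.cost)
by_need = select_top(cohort, 6, key=lambda p: p.need)
# Group B's share: about 0.33 when ranked by cost vs 0.5 when ranked by true need.
print(share_of_group(by_cost, "B"), share_of_group(by_need, "B"))
```

Although both groups are equally sick by construction, ranking on the cost proxy flags group B less often, which is the pattern of underestimated need the text describes.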

Participant recruitment, sampling frameworks, data collection procedures, and a host of other methodological decisions made by researchers can also undermine the ability of AI analyses to produce conclusions that are valid for disadvantaged populations. For example, passive data collection via smartphones has become an increasingly rich resource for researchers (26); however, these devices are not generally developed with disadvantaged populations in mind. As a result, data collection may be biased from the outset by selecting only service users who have the physiological, cognitive, and functional capacity to participate (27). Some of these biases may be offset by scrutinizing sampling frameworks and recruitment strategies and by working alongside community partners from underrepresented, disadvantaged, or vulnerable groups to address the biases directly.

To minimize bias in AI, we recommend that scientists be deliberate in data collection, that engineers be mindful of how the data are analyzed and interpreted, and that marketing teams be careful about which services they promote and to which demographic groups. When dealing with “medical interventions” and “medical devices” in which AI underpins the mechanism of action, researchers must be particularly diligent. Perhaps because of AI’s intangible nature, bias can creep into its applications more easily. If a physical device were designed with a clear bias against specific groups, health care regulators would be unlikely to approve it. The same level of scrutiny must therefore be applied when AI is used in health care. Research protocols for producing new apps should include plans to develop algorithms from more diverse data sets.

Promote Inclusivity via mHealth Design Features

Service users with mental disorders may also have comorbid neurocognitive deficits that may vary by race, ethnicity, or gender. These types of deficits are also present among older adults or individuals with cognitive impairments. Design features have been tested in a series of studies to inform guidelines for developing mHealth tools and resources for people living with mental health conditions or cognitive impairments (Table 1) (28–32).

We recommend that software developers and system engineers incorporate design features that improve app usability across diverse diagnostic groups. Moreover, to facilitate design equity and to make more informed and inclusive recommendations to industry partners, we suggest that social scientists study how culture, language, race-ethnicity, and gender affect the ways service users interact with digital technologies.

Additionally, not all users will need the same level of repetition and reminders. Integrating precision medicine with multiphase optimization strategy (MOST) study designs could help determine the level, or “dose,” of mHealth features needed to optimize outcomes. MOST is a framework for building and optimizing multicomponent behavioral interventions similar to those in many mHealth apps (33). MOST involves establishing a theoretical model, identifying a set of intervention components to be examined, and conducting experiments to examine the impact of individual intervention components (33).
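Factorial experiments are one design commonly used to examine individual components in MOST. The sketch below shows the idea for three hypothetical app components (the component names, the baseline of 10, and the "true" effects are invented): it enumerates all 2^3 on/off conditions and estimates each component's main effect as the mean outcome with the component on minus the mean with it off. The outcomes are generated from an assumed additive model purely to show that the estimator recovers it; they are not study data.

```python
from itertools import product

COMPONENTS = ["reminders", "psychoeducation", "peer_chat"]  # hypothetical components


def factorial_conditions(components):
    """All 2^k on/off combinations of the components (a full factorial design)."""
    return [
        dict(zip(components, levels))
        for levels in product((0, 1), repeat=len(components))
    ]


def main_effects(conditions, outcomes):
    """Main effect of each component: mean outcome when on minus mean when off."""
    effects = {}
    for component in conditions[0]:
        on = [y for cond, y in zip(conditions, outcomes) if cond[component] == 1]
        off = [y for cond, y in zip(conditions, outcomes) if cond[component] == 0]
        effects[component] = sum(on) / len(on) - sum(off) / len(off)
    return effects


# Invented additive model for the mean outcome in each condition.
TRUE_EFFECT = {"reminders": 2.0, "psychoeducation": 1.0, "peer_chat": 0.0}
conditions = factorial_conditions(COMPONENTS)
outcomes = [
    10.0 + sum(TRUE_EFFECT[c] * cond[c] for c in COMPONENTS) for cond in conditions
]
print(len(conditions), main_effects(conditions, outcomes))
```

In this toy case, a component with a near-zero estimated effect (here `peer_chat`) would be a candidate to drop or reduce, which is the sense in which MOST can help set the "dose" of features.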

Facilitate Informed Decision Making in Technology Selection

In a qualitative study of 40 service users with serious mental illness (34), participants reported that they did not feel informed about which mHealth technologies they could use for treatment at community mental health centers. When service users are not informed, the intended benefits of mHealth may not be achieved.

Decision support in selecting technologies may strengthen informed decision making and emphasize choice and engagement by service users, clinicians, and certified peer support specialists (35). Current decision support interventions in mental health focus on treatment and medication choice (36), psychiatric rehabilitation decisions, or care transition determinations. Decision support within mental health has been found to promote engagement in services and treatment adherence (37). To date, few decision support frameworks exist for choosing mHealth tools. One example is a framework initiated by the American Psychiatric Association for selecting smartphone apps for use in clinical settings (38); it suggests ways to inform decision making, such as asking a professional, reviewing research supporting the app, or reaching out to the app developer. Although these guidelines may be feasible for some patients, interpreting them can still require extensive functional or cognitive resources, which can make them difficult for individuals with mental health challenges to use. Nevertheless, efforts to make app reviews more accessible are already underway. For example, Wykes and Schueller (39) call for app stores to take responsibility for what they call the “transparency for trust principles,” including providing information on privacy and data security, on how the technology was developed, on the feasibility of the tool, and on benefits to individuals. All of these details can be provided in a plain language summary to facilitate understanding.

Another example of facilitating understandability is a framework called “Decision-Support Tool for Peer Support Specialists and Service Users,” which was initiated by patients (or peers) who worked together to facilitate shared decision making in selecting technologies to support mental health (40). Patient-identified decision domains include privacy and security, cost, usability, accessibility, inclusion and equity, recovery principles, personalized mHealth for patient needs, and ease of device setup. All questions are written in a simple form so that all consumers can understand them. Moreover, online services such as PsyberGuide (https://onemindpsyberguide.org) in the United States and ORCHA (https://us.orchahealth.com) in the United Kingdom provide detailed and accessible reviews of online health apps that users can consult according to their individual needs. Backed by scientific advisory boards and structured by various parameters, such services are personalized tools that can help users navigate the largely untested space of mHealth with greater confidence and clarity.
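A plain-language checklist of the kind described above can be represented as one yes-or-no question per decision domain. In this minimal sketch only the eight domain names come from the text; the question wording, the `domains_to_discuss` function, and the rule of flagging any domain answered "no" or left blank for discussion with a peer support specialist are hypothetical and are not taken from the published tool.

```python
# Domain names follow the text; question wording is a hypothetical paraphrase.
DOMAIN_QUESTIONS = {
    "privacy and security": "Does the app say who can see my information?",
    "cost": "Can I afford to use this app?",
    "usability": "Is the app easy for me to use?",
    "accessibility": "Does the app work with the way I see, hear, and read?",
    "inclusion and equity": "Does the app feel made for people like me?",
    "recovery principles": "Does the app support my own recovery goals?",
    "personalized mHealth": "Can I change the app to fit my needs?",
    "ease of device setup": "Can I set up the app on my phone without help?",
}


def domains_to_discuss(answers: dict) -> list:
    """Return domains answered 'no' or left unanswered, in checklist order."""
    return [domain for domain in DOMAIN_QUESTIONS if not answers.get(domain, False)]
```

Treating an unanswered question the same as a "no" keeps the checklist conservative: anything the user is unsure about becomes a topic for shared decision making rather than being silently skipped.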

We recommend that software developers create settings that let service users access and control the features they want to use, which can influence satisfaction and willingness to remain engaged. Such control could include, for example, letting users opt in to or out of creating a medications list (a feature that would require a service user to enter a medication regimen). Other examples include service users’ ability to control whether they receive notifications or alarms for treatment (41), as well as other privacy and security features (42,43). We also advise that more clinicians and social workers collaborate with developers to build evidence-based decision support strategies into the selection process to better support service users’ mental health. Because service users commonly disengage from therapeutic digital technologies after 2 weeks or before intervention effects take place (44), incorporating decision support strategies may counteract premature disengagement and guide individuals in selecting technologies according to available research, their preferences and unique needs, and their socioenvironmental characteristics. Moreover, we recommend that app stores integrate the aforementioned reviews and frameworks into their ratings and overviews of mHealth apps. Information such as privacy policies, user experience, and credibility scores can provide accessible guides for potential users.

Finally, we suggest that legal teams for mHealth products provide plain language summaries of privacy statements. The Cochrane Group has recently created guidelines for developing “plain language summaries” of all published scientific reviews to make research findings accessible to nonscientists (45). Such plain language summaries could serve as a model for developing a more understandable privacy framework (46).

Embrace Adaptive Technology

Adaptive technologies can be products or modifications to existing services that provide enhancements or different ways to interact with a technology. For instance, mHealth applications that are accessible on multiple platforms, that can work without the Internet, or that use limited data might support engagement among disadvantaged service users regardless of their location. One example is WhatsApp, a mobile instant-messaging system that offers free smartphone-based communication within and across countries. WhatsApp also adjusts to inconsistent Internet service by sending messages as soon as the signal returns. This approach could apply to the many mental health apps that require content updates. Moreover, adaptive technologies can help strengthen service users’ engagement with self-management practices and reduce the likelihood of secondary complications. iMHere is an adaptive mHealth system that helps address the changing self-management needs of individuals with chronic conditions and disabilities (47). Its architecture includes cross-platform client and caregiver apps, a Web-based clinician portal, and a secure two-way communication protocol. The system can suggest personally relevant treatment regimens tailored to individuals’ conditions (with or without support from caregivers) and allows clinicians to flexibly modify these modules in response to their service users’ changing performance or needs over time (47).
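The behavior attributed to WhatsApp above, holding messages until the signal returns, is commonly implemented as a store-and-forward (outbox) queue. The sketch below is a generic illustration of that pattern, not WhatsApp’s actual implementation; the `Outbox` class and its `send` callback, which stands in for a real network call and reports success or failure, are hypothetical.

```python
from collections import deque
from typing import Callable


class Outbox:
    """Store-and-forward queue: hold messages while offline, send in order later."""

    def __init__(self, send: Callable[[str], bool]):
        self._send = send        # hypothetical network call; True on successful delivery
        self._pending = deque()  # messages not yet delivered, oldest first

    def submit(self, message: str, online: bool) -> None:
        """Queue a message and try to deliver immediately if a connection exists."""
        self._pending.append(message)
        if online:
            self.flush()

    def flush(self) -> int:
        """Deliver queued messages in order; stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # keep the message queued for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._pending)
```

In use, an app would call `submit` whenever the user creates content and call `flush` when the device reports that connectivity has returned. Because `flush` stops at the first failed delivery, messages keep their original order, which matters for sequential content such as daily check-ins.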

We recommend that clinicians, social workers, and certified peer support specialists embrace such adaptive solutions in their work within communities. When developing mHealth technologies, companies should similarly consider integrating adaptive features to offset challenges such as limited Internet connection and to increase flexibility in interacting with the product, which can support long-term engagement. Nevertheless, we note that WhatsApp’s parent company, Facebook, has faced major backlash for allegedly collecting and using private user data. WhatsApp could also hinder service users’ autonomy, because users may be unable to refuse a service recommended by their health care provider despite their own hesitations about the service (48). Thus, although we reference these services and their role in adaptive technologies, it is important to review each technology’s privacy statement to determine its privacy standards before using it or recommending it to service users.

Promote Digital Literacy by Teaching Service Users How to Use the Technology

It is necessary to develop mHealth features that help service users learn how to use mHealth platforms. Adults from higher socioeconomic strata are much more likely to be familiar with mHealth than are low-income, Black or Hispanic, disabled, or homebound adults (49). To promote digital literacy, it is necessary to understand how adults learn and especially how their learning needs differ from those of other age groups (such as children, adolescents, and older adults), including the influence of previous experiences and of factors associated with mental disorders that can impede learning, such as neurocognitive deficits (50).

We recommend that clinicians and social scientists incorporate andragogical learning theory, a theory of how adults learn through the comprehension, organization, and synthesis of knowledge (51), when teaching service users or other participants how to use new technologies. We also encourage peer-to-peer networks and groups to facilitate digital literacy training.

Facilitate mHealth Access to Improve Health Outcomes

Consistent with the concept of social determinants of health (52), which holds that conditions in an individual’s life (e.g., housing, socioeconomic status, and education) affect the person’s overall health, having access to mHealth may also affect the overall health of service users. Accordingly, mHealth utilization is potentially mediated by social forces, institutions, ideologies, and processes that interact to generate and reinforce inequity among groups (1). Institutional infrastructure and processes that promote mHealth as a facilitator of public health or of health care access may end up producing “digital redlining,” perpetuating unequal access for already marginalized populations. mHealth may also be used to perpetuate a “separate but equal” health care interface that allows or justifies structural barriers to health care (53).

Nevertheless, throughout the world, governments are increasingly offering income-based access to free smartphones and data plan services, which can help reduce the digital divide. Thus, smartphone ownership among disadvantaged groups is steadily increasing, including among people with serious mental illness (54,55), people with disabilities (56), and rural residents (17).

Access to mHealth is vital to care, and an equitable offering of services through mHealth is equally important. Specifically, an equitable and inclusive mHealth ecosystem must always include protocols that facilitate timely crisis responses. Although some mHealth technologies discourage their use during crises, service users in crisis may still seek out care on publicly available platforms. Developing crisis response protocols that align with state, county, and other legal requirements can therefore support service users in crisis. Possible solutions include an on-call provider who supports service users in crisis in real time, natural language processing that predicts suicidal ideation from text message interactions, a feature that immediately connects service users to crisis support by dialing 988 (the Suicide and Crisis Lifeline in the United States), or a connection to a warmline to work through a crisis.

Inclusive and appropriate mHealth utilization requires not only considering individuals’ comfort with and access to mHealth but also scrutinizing the systems and processes that introduce the mHealth tool. We advise that policy makers work with social scientists to study data plan use at the population level and to determine the minimum amount of data required to use mHealth products effectively. Moreover, government programs can be expanded to allow more individuals to qualify for services (e.g., by allowing more than one subscriber per household to accommodate individuals in shared housing and group homes). Software developers of mHealth products can use knowledge of government policy during development to ensure compatibility with free government programs and can also include in-app protocols to facilitate timely crisis responses.

Limitations and Future Scope

We represent diverse stakeholder groups, including social workers, mental health service users, social scientists, certified peer support specialists, clinicians, software developers, industry partners, and systems engineers. However, as a group we are not fully representative of the backgrounds, diagnoses, socioeconomic status, professions, geography, nationality, age, and gender of the various communities of service users. This is an important limitation, and these recommendations should therefore be interpreted with caution.

Poverty and limited access constrain the implementation of these recommendations and create challenges in developing an equitable mHealth ecosystem. Much of the data on access to smartphones and the Internet come from online surveys, which reach only people who are already online. Therefore, current access levels are very likely overestimated. Additionally, not all smartphone owners have data plans that give them access to apps, and some may have plans that do not provide sufficient data.

Also, it is not practical for all commercial apps to include all the resources necessary to support people with cognitive, hearing, and vision impairments, because adding every necessary feature may increase the cost of app development.

Nevertheless, an analysis of the long-term return on investment may show that such features are cost-effective. Examining the economic impact of dollars spent on mHealth development, and mHealth’s impact on health services outcomes such as hospitalizations and medication adherence, is important for determining the potential return on investment of incorporating the features delineated above. Future research must also move beyond studying the feasibility and acceptability of mHealth, because it has become quite apparent that people with mental health challenges can use, and are interested in using, these services. Instead, we urge a greater focus on investigating the clinical effectiveness of these technologies in addressing health concerns through more rigorous randomized controlled trials and meta-analyses (9).