As summarized in several reviews, a significant body of research supports a variety of treatment manuals for children's mental health disorders (1, 2). Despite the breadth and depth of available protocols, research also suggests that evidence-based approaches are not widely used in everyday practice (3). As with many innovations, the dissemination of evidence-based practices in real-world settings may depend on a number of factors (4). For example, Addis and colleagues (5, 6) examined therapists' attitudes toward evidence-based practices and found that therapists were supportive of the science behind evidence-based treatments but were concerned about the impact of standardized manuals on therapeutic rapport and individualized case conceptualization. Another study also found that therapists' concerns about evidence-based treatments were not a consequence of negative attitudes toward research but were focused instead on reduced opportunity to exercise clinical judgment and on fears that research-based protocols do not fully address the complexity of their cases (7).

Aarons (8) assessed therapists' attitudes toward evidence-based practices with the Evidence-Based Practice Attitude Scale (EBPAS), which was designed to measure therapists' attitudes across several factors, such as the "appeal" of evidence-based practices. Aarons' findings were mixed, although the most positive attitudes were found among intern-level therapists, as well as among therapists in organizations where differences between evidence-based approaches and current practices were viewed as minimal. The larger message was that the matter of preference might not be unidimensional; some aspects of evidence-based practices may be well received among therapists, whereas other aspects may not. In addition, we thought it was important to consider that evidence-based interventions have at times been conflated with specific manuals in the literature (9). As described by Kazdin (10), the notion of evidence-based treatments refers to specific protocols as assessed in research, whereas evidence-based practices refer to a broader set of treatment approaches that incorporate research, clinical judgment, and client-specific needs. It is unlikely at this point in the field of psychology, however, that most frontline therapists differentiate between the two concepts or among the range of available treatment manuals that vary widely in structure, format, and flexibility of application.

Given the variable findings in the literature and the fact that measures of therapists' attitudes such as the EBPAS (8) do not distinguish between treatment manuals and evidence-based practices more generally, we felt it would be informative to investigate whether the manuals themselves or the "packaging" of evidence-based interventions and component clinical procedures were primarily responsible for observed therapists' attitudes toward evidence-based practices.

Thus this study set out to test a priori whether there were observable, distinct dimensions of attitudes toward evidence-based practices and whether such dimensions would be differentially affected by training therapists in evidence-based protocols that varied in format and structure. Therapists' attitudes were therefore compared across two evidence-based treatment formats: standard treatment manuals and a modular approach in which techniques were applied according to a guiding clinical algorithm derived from the standard treatment protocols. Attitudes were assessed with two measures: the EBPAS and a measure that assesses attitudes about evidence-based practices more generally. Modularity, as described by Chorpita (11), is an evidence-based approach to treatment that focuses on finding the common elements among standard treatment manuals and applying them according to a decision-making process that accounts for "pace, timing, or selection of techniques" and is guided by client-specific variables (the client's problems and his or her engagement in treatment). We hypothesized that therapists in the modular treatment condition would report more favorable shifts in attitude toward evidence-based practices from pretraining to posttraining and that the EBPAS would be less sensitive to differences in therapists' attitudes toward evidence-based practices than a measure that deemphasized the use of treatment manuals.

Methods

Data for this study were collected during the training phase of a longitudinal, randomized, clinical trial that examined children's mental health treatments. Institutional review board approval was obtained before the start of the clinical trial. The treatment phase of the trial concurrently took place in Boston and Honolulu beginning in 2004, with treatment ongoing at the time of this publication.

Participants

Therapists were recruited across clinic- and school-based mental health settings and private practices. After complete description of the study, written informed consent was obtained. Data were gathered from 63 therapists participating in the clinical trial; they ranged in age from 25 to 60 (mean=41.48). Clinical experience ranged from less than one year (completed graduate degree within past 12 months) to 35 years (mean=8.79 years). Eight participants were missing all of either their pre- or posttraining data and were therefore excluded from the analyses. Thus the final sample consisted of 55 therapists.

Measures

EBPAS. The EBPAS (8) is a 15-item measure that generates four scales: appeal ("If you received training in a therapy or intervention that was new to you, how likely would you be to adopt it if it 'made sense' to you?"), requirements ("If you received training in a therapy or intervention that was new to you, how likely would you be to adopt it if it were required by your organization?"), openness ("I am willing to try new types of therapy/interventions even if I have to follow a treatment manual"), and divergence ("Clinical experience is more important than using manualized therapy/interventions"). Participants indicate level of agreement with each item, ranging from 0, not at all, to 4, to a very great extent. The EBPAS total score is calculated by reverse-scoring the items for the divergence scale and then computing an overall mean, with higher scores indicating more favorable attitudes. Total scores can range from 0 to 4. Aarons (8) assessed the EBPAS in a study of 322 clinicians across various public mental health agencies. Internal consistency was good, with Cronbach's alpha ranging from .77 for the EBPAS total scale to .90 for the requirements subscale.

Modified practice attitudes scale. The modified practice attitudes scale (MPAS) (Chorpita BF, Weisz JR, Higa C, et al., unpublished measure, 2004) is an eight-item measure that was developed specifically for this study to assess therapists' attitudes toward evidence-based practices. In contrast to the EBPAS (8), items on the MPAS were worded to measure therapists' attitudes toward evidence-based interventions by minimizing references to treatment manuals (for example, items included "I am willing to use new and different types of treatments if they have evidence of being effective" and "Clinical experience and judgment are more important than using evidence-based treatments"). Similar to the EBPAS, on this measure participants indicated their level of agreement with the items, which could range from 0, not at all, to 4, to a very great extent. Five items are reverse-scored, and the total score can range from 0 to 32, with higher scores indicating more favorable attitudes.
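The two scoring rules described above can be illustrated with a minimal sketch. It assumes only what the text states: a 0 to 4 rating scale, a mean-based total for the EBPAS after reversing the divergence items, and a sum-based total for the MPAS after reversing five items. The function names and the item positions passed in are hypothetical; the text does not say which positions are reverse-keyed, so they are left as parameters.

```python
from statistics import mean

MAX_RATING = 4  # both measures use a 0 (not at all) to 4 (very great extent) scale


def reverse_score(rating, max_rating=MAX_RATING):
    """Flip a rating so that 0 becomes max_rating and max_rating becomes 0."""
    return max_rating - rating


def apply_reversals(ratings, reversed_positions):
    """Return the ratings with items at reversed_positions reverse-scored.

    reversed_positions holds 0-based item positions; which items are
    reverse-keyed is an input here, not something this sketch asserts.
    """
    for rating in ratings:
        if not 0 <= rating <= MAX_RATING:
            raise ValueError(f"rating {rating} is outside 0..{MAX_RATING}")
    return [
        reverse_score(rating) if position in reversed_positions else rating
        for position, rating in enumerate(ratings)
    ]


def ebpas_total(ratings, divergence_positions):
    """Overall mean after reversing the divergence items (range 0 to 4)."""
    return mean(apply_reversals(ratings, divergence_positions))


def mpas_total(ratings, reversed_positions):
    """Sum after reversing the keyed items (range 0 to 32 for eight items)."""
    return sum(apply_reversals(ratings, reversed_positions))


# Hypothetical respondent: full agreement with three positively worded
# items and no agreement with five reverse-keyed items gives the maximum.
print(mpas_total([4, 4, 4, 0, 0, 0, 0, 0], {3, 4, 5, 6, 7}))
```

Because reverse-scoring is applied before aggregation, a higher total indicates a more favorable attitude on both measures regardless of how an individual item is worded.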

Procedure

Participating clinical trial therapists were randomly assigned to one of three treatment conditions: standard manual treatment, where therapists were trained in standard versions of manuals, as used in research studies; modular manual treatment, where therapists were trained in evidence-based treatment components (for example, one module for relaxation training and another for problem solving) that were applied with a decision-making algorithm to individually tailor treatment approaches; and usual care treatment, where therapists used treatments found in community-based mental health settings. Therapists assigned to usual care did not participate in training; thus their data are not presented in this report.

Data were gathered before and after training, which occurred between 2004 and 2006 at the Boston and Honolulu sites. The training sessions occurred in two rounds and consisted of three, two-day events focused on treating anxiety and depression with cognitive-behavioral therapy and treating disruptive behavior problems with behavioral parent training. Experts in these areas (doctoral-level psychologists) and the investigator team led the training sessions. Therapists were administered the study measures as a group at pretraining and again after the last training session.

All participating therapists received identical training (they were in the room together with the trainers at the same tables at the same time), with the exception of 2.5 hours on the second day of each of the training events, when therapists got into their respective treatment condition groups (standard or modular). Most of the training days were spent discussing the theoretical framework of the evidence-based protocols and role-playing various techniques. Training sessions were organized by skills (such as how to do exposure when treating anxiety) rather than in a session-by-session format, and each event covered nine to 12 skills. During the breakout sessions, the standard group spent their time covering the sequencing and structure of manuals, whereas the modular group spent most of their time discussing various applications of selecting evidence-based treatment skills and applying them to different types of cases. Otherwise, therapists attended identical training sessions. The standard and modular trainees even role-played treatment exercises with one another during the training. Between training events, therapists were encouraged to practice the evidence-based techniques with clients in their caseload as appropriate. In addition, therapists in the first round of training were encouraged to practice skills by role-playing with the doctoral-level trainers in one-on-one supervision.

Data analysis

A mixed-factorial repeated-measures design was used to assess differences in therapists' attitudes from pre- to posttraining for therapists in the evidence-based conditions. Training site (Honolulu or Boston) was included as a covariate to control for differences across sites. Given our hypotheses regarding the modular condition and the sensitivity of the MPAS to detect differences in attitudes between groups of therapists, we predicted a significant interaction between group and time on the MPAS, with therapists in the modular condition showing a significantly greater increase in positive attitude from pretraining to posttraining compared with therapists in the standard group. No such interaction was predicted for the EBPAS (8).

Results

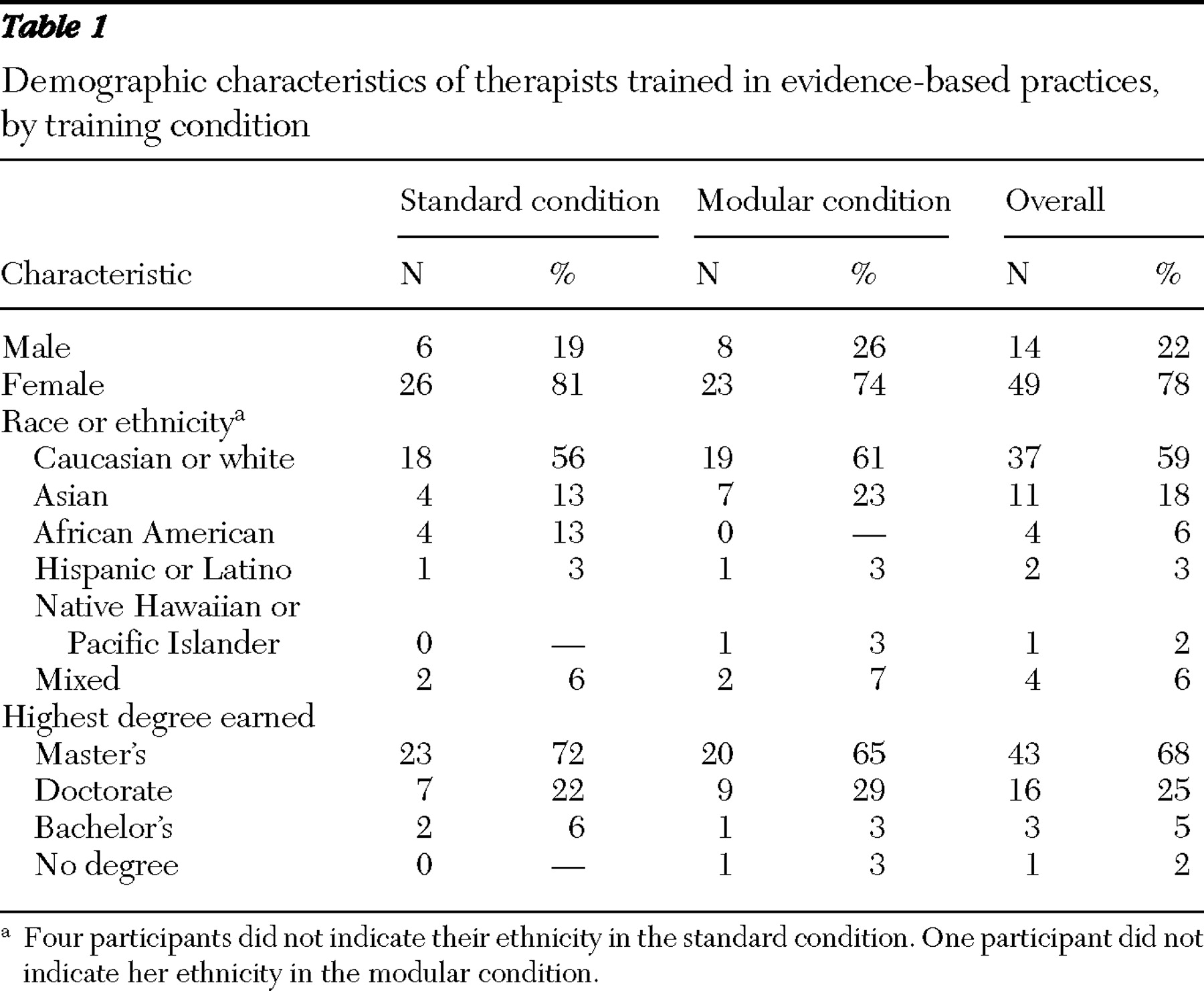

Demographic information is presented in Table 1. Internal consistency was assessed for both measures in this sample. Cronbach's alpha was .80 for the MPAS and .77 for the EBPAS. A significant, moderate correlation was found between measures (r=.36, p<.01), which was expected given that they were intended to measure related constructs. We also assessed for preprogram differences on demographic variables that research indicated might be relevant (that is, age, gender, and highest degree earned) (8). No significant differences were found for these variables.
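For readers unfamiliar with the internal consistency statistic reported here, the following is a minimal sketch of the standard Cronbach's alpha formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function name and the small response matrix are illustrative assumptions, not data from this study, and the sketch assumes reverse-keyed items have already been reverse-scored.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items matrix of item scores.

    responses: list of rows, one per respondent, each a list of item
    scores of equal length, with reverse-keyed items already reversed.
    """
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    if any(len(row) != n_items for row in responses):
        raise ValueError("every respondent must answer every item")

    # Sample variance of each item (column) across respondents.
    item_variance_sum = sum(variance(column) for column in zip(*responses))
    # Sample variance of each respondent's summed score.
    total_variance = variance([sum(row) for row in responses])

    return n_items / (n_items - 1) * (1 - item_variance_sum / total_variance)


# Made-up ratings from four respondents on two items.
illustrative = [[0, 1], [1, 0], [2, 3], [3, 2]]
print(round(cronbach_alpha(illustrative), 2))  # 0.75
```

Alpha approaches 1 as items covary more strongly relative to their individual variances, which is why it is read as an index of how consistently the items measure a common construct.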

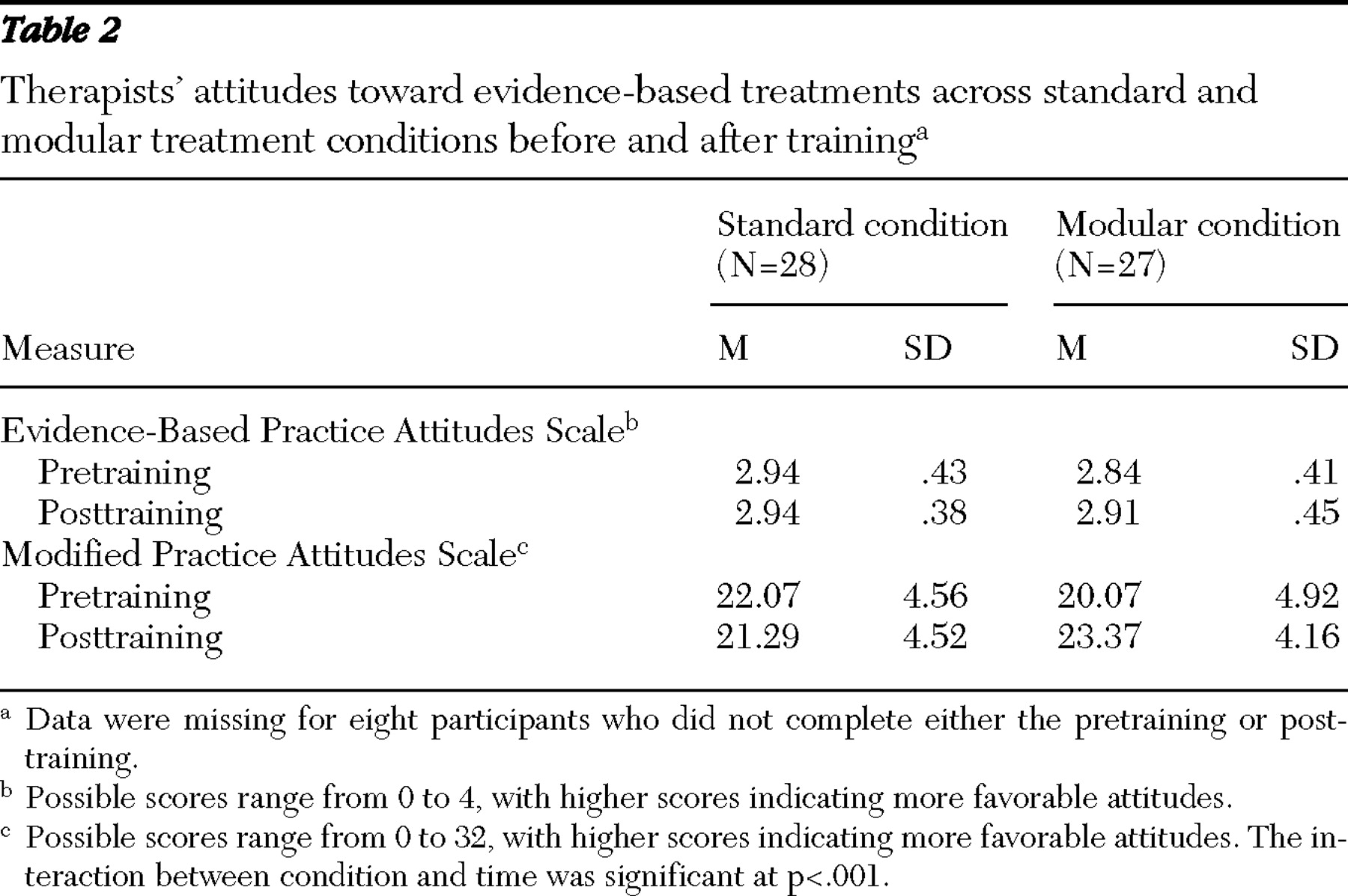

We had hypothesized that on the MPAS, therapists in the modular condition would show larger increases in positive attitudes toward evidence-based practices than would therapists in the standard condition. Consistent with this hypothesis, we found a significant interaction between time and condition on the MPAS (F=11.76, df=1 and 55, p<.001). Simple main effects showed that therapists' attitudes in the modular condition became significantly more positive from pretraining to posttraining (t=-4.77, df=26, p<.001), whereas therapists' attitudes in the standard condition did not change significantly. No other MPAS comparisons were significant (Table 2).
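The within-condition comparison can be illustrated with a paired t statistic. This is a sketch under an assumption: the text does not name the exact test behind the simple main effects, but a paired comparison of pre- and posttraining scores within one condition is one plausible reading, and it yields df = n − 1. The function name and the scores below are made up for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre_scores, post_scores):
    """Paired t statistic and degrees of freedom for pre minus post.

    A negative t means scores were higher at posttraining, because the
    difference is computed as pre - post.
    """
    if len(pre_scores) != len(post_scores):
        raise ValueError("each therapist needs both a pre and a post score")
    differences = [pre - post for pre, post in zip(pre_scores, post_scores)]
    n = len(differences)
    if n < 2:
        raise ValueError("need at least two paired observations")
    standard_error = stdev(differences) / sqrt(n)
    return mean(differences) / standard_error, n - 1


# Made-up total scores for three hypothetical therapists.
t_value, df = paired_t([10, 12, 14], [12, 15, 15])
print(round(t_value, 2), df)  # -3.46 2
```

Under this convention, an increase in favorable attitudes after training appears as a negative t value, which matches the sign of the statistic reported for the modular condition.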

Also as predicted, there was no significant interaction between time and condition on the EBPAS. No other EBPAS comparisons were significant, including the change in therapists' attitudes from pre- to posttraining in either condition (Table 2).

Discussion

This study examined therapists' attitudes toward evidence-based practices across two evidence-based treatment conditions. Attitudes were measured with two instruments, the EBPAS and the MPAS, in order to determine whether therapists' attitudes would differ with treatment conditions according to how they were asked about evidence-based practices. Specifically, we examined whether therapists' attitudes reflected a negative view of evidence-based practices in general or whether therapists' concerns were multidimensional, with potential divergence among dimensions.

The results of this study revealed the latter. Therapists scored differently on two measures of attitudes toward evidence-based practices according to how they were asked about them. On the EBPAS, therapists were asked about evidence-based practices in the context of treatment manuals; however, on the MPAS the use of manuals was deemphasized, and more general reference to evidence-based practices was made. Differences were found on the MPAS only, suggesting that therapists did not harbor negative attitudes toward evidence-based practices as a whole; rather, their concerns were with the use of treatment manuals more specifically. One implication of these results is related to the manner in which we asked about complex constructs such as attitudes. For example, measures not derived by traditional test construction procedures, such as broad domain sampling, may produce overly narrow measurement strategies (12). Given that both the EBPAS and the MPAS are rationally constructed measures, it is possible that these instruments capture only limited aspects of attitudes toward evidence-based practices. By demonstrating a divergence in perspective on attitudes, our results underscore the importance of refining the measurement of attitudes and how they relate to the adoption of evidence-based practices.

Refining measures of attitudes also draws attention to the importance of directing resources toward understanding the factors that influence attitudes, in that they may contribute to the adoptability and dissemination of evidence-based practices. As mentioned previously, Rogers (4) described a number of factors that may affect the rate of adoption of innovative practices. Specifically, aspects related to the "perceived attributes of innovations" may especially relate to mental health because they may influence the adoptability of evidence-based practices by therapists, as well as the appropriate buy-in required by agencies to incorporate change (4, 13). In addition, Rogers (4) described the "relative advantage" of innovations over current practices, which has repeatedly been demonstrated over the past two decades of research in evidence-based practices compared with usual care practices (9, 14).

Another variable affecting the rate of adoption of innovations that has received less attention is what Rogers (4) described as compatibility. In the context of mental health, this variable may be described as the perceived compatibility between a therapist and the innovation. Problems with perceived compatibility may partially explain why evidence-based treatments remain relatively unused among usual care therapists, despite their established effectiveness. Our results suggest that we may be able to address the compatibility issue by influencing the popularity of manuals according to how they are packaged and applied (concept of modularity), as well as by how therapists are asked about evidence-based practices (not conflating them with use of manuals). For example, we hypothesize that the increase in positive attitudes of therapists in the modular condition may have occurred because therapists felt they were involved in the "design" of the modular approach to treatment, thus increasing perceived compatibility.

Although a modular approach to delivering evidence-based practices (10, 15) does not dictate a flexible approach to treatment per se, the perceived flexibility may come from allowing therapists to be involved in the decision-making algorithm. Furthermore, the treatment components prescribed by the algorithm are the same as those in the standard manuals, and the algorithms themselves have relatively minor practical differences. Indeed, when presented with a particular clinical vignette, most therapists in the modular training chose the same clinical technique that would have been dictated by the standard approach. Thus one of the main differences may be that in the modular condition the decision-making power was shared with the therapist, whereas in the standard condition it was not.

Training on a modular approach to evidence-based practices may also have implications for policy. As described previously, therapists in both the standard and modular conditions participated in the same training, with few resources devoted to individual therapeutic formats. Dissemination efforts across systems may be more readily received if training procedures for multiple approaches are compatible across protocols and address therapists' concerns (including lack of flexibility and deemphasized therapeutic alliance and clinical judgment) (16). Finally, as discussed elsewhere (15), modular design principles lend themselves to an overall efficiency when combined with other treatment approaches that could broaden the availability of evidence-based practices overall and enhance the benefit of adopting evidence-based practices at a systems level.

Limitations of this study included characteristics of the sample. Specifically, our sample represented only a subset of community-based therapists, and an investigation targeting a larger population of treatment providers may show different outcomes. In addition, this study was the first investigation to use the MPAS; thus the psychometric characteristics of the measure are limited. Nonetheless, reliability of the MPAS was found to be acceptable in this study, and its correlation with the only other known measure of a related construct was in the expected range. Finally, some may argue that therapists in the modular condition were not trained in standard, evidence-based practices but rather in a new, non-evidence-based approach. However, the MPAS did not assess for attitudes toward the modular protocol but asked about evidence-based practices in general. Thus the results cannot be interpreted as showing that therapists simply preferred alternatives to evidence-based practices; it is more likely that their baseline perceptions of evidence-based practices were that they are typically manualized and inflexible.