Neuroscientists traditionally thought that information processing was revealed by changes in firing patterns of “smart” neurons in a bottom-up fashion (1). Such a conceptualization is relatively hard wired (2) and fails to reflect the flexibility needed to cope with top-down constraints, such as attention, expectations, and context. Constraints on bottom-up processing come from high-level executive systems, such as conscious focusing of attention, but can also come from automatically invoked, lower-level systems. A forward model system involving transmission of an “efference copy” of motor commands to the sensory cortex to generate “corollary discharges” that prepare it for impending sensory consequences of self-initiated motor acts can help us unconsciously disregard sensations resulting from our own actions. Helmholtz (3) first described the need for a mechanism that would allow us to discriminate between moving objects and movements on the retina resulting from eye movements. Von Holst and Mittelstaedt (4) and Sperry (5) later suggested that a motor action is accompanied by an efference copy of the action that produces a corollary discharge in the sensory cortex. Subsequently, this feed-forward mechanism has been described in numerous other systems, including the auditory system, where it serves to suppress auditory cortical responses to speech sounds as they are being spoken (6, 7). This may result from partial cancellation of sensation by the corollary discharge, which represents the expected sound of our own speech. Indeed, auditory cortical suppression is not as evident when the speech sound is artificially distorted as it is spoken (8).

The transmission of an efference copy to the appropriate sensory cortex may be an emergent property of a self-organizing system, accomplished by synchronization of oscillatory activity among distributed neuronal assemblies (1). The specific frequency of synchronous oscillations may identify neural populations as belonging to the same functional network of spatially distributed neuronal assemblies (9). If the forward model mechanism involves self-coordinated communication between motor and sensory systems, enhancement of neural synchrony should be evident before execution of motor acts, such as talking. Consistent with this hypothesis, local field potential recordings from somatosensory cells in rats showed neural synchrony that preceded exploratory whisking in both 7–12 Hz (10) and 30–35 Hz (11) bands. Hamada and colleagues (11) suggested that neural oscillations might be triggered by transfer of an efference copy of motor preparation to the somatosensory cortex, happening several hundred milliseconds before the action and seen as oscillations phase-locked to it.

Time-frequency analyses of human EEG, time-locked to specific events, now allow us to measure phase synchrony on a millisecond time scale to investigate integrated neural systems and their compromise in complex neuropsychiatric disorders such as schizophrenia. Dysfunctional regional coordination, communication, or connectivity (12), possibly associated with deficient synchronization of neuronal oscillations (2, 13–15), may be responsible for a wide range of schizophrenia symptoms (2). Intertrial coherence (16) is a measure of phase synchronization of neural oscillations across individual event-locked EEG epochs, reflecting the degree to which a particular type of stimulus is associated with changes in phase synchrony of ongoing oscillations at specific frequencies. With intertrial coherence, millisecond-by-millisecond changes in phase synchrony can be assessed independent of changes in EEG power. Intertrial coherence was described by Tallon-Baudry et al. (17) as a “phase-locking factor.” Intertrial coherence can also be thought of as “temporal coherence” and is different from “spatial coherence,” which is calculated between different brain regions or electrode sites (see reference 18). Theta band (4–7 Hz) oscillation and synchrony may be involved in the mechanisms of sensorimotor integration and provide voluntary motor systems with continually updated feedback on performance (19).
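
As a sketch of the measure described above, intertrial coherence at frequency f and time t is commonly written as the length of the average unit phase vector across trials. The symbols here (N for the number of epochs, F_k(f,t) for the complex spectral estimate of epoch k) are notation assumed for illustration and do not come from the original text.

```latex
\mathrm{ITC}(f,t) \;=\; \left| \frac{1}{N} \sum_{k=1}^{N} \frac{F_k(f,t)}{\lvert F_k(f,t) \rvert} \right|,
\qquad 0 \le \mathrm{ITC}(f,t) \le 1
```

Because each term is normalized to unit length, the value depends only on the consistency of phase across epochs and not on EEG power: it approaches 1 when the phase is the same on every trial and approaches 0 when phases are uniformly scattered.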

In addition to dampening irrelevant sensations resulting from our own actions, the forward model provides a mechanism for automatic distinction between internally and externally generated percepts across sensory modalities and may even operate in the realm of covert thoughts, which have been viewed as our most complex motor act (20). Failures of this mechanism may contribute to self-monitoring deficits and auditory verbal hallucinations characteristic of schizophrenia (21). Specifically, if an efference copy of a thought, memory, or other inner experience does not produce a corollary discharge of the expected auditory consequences, internally generated percepts may be experienced as having an external source.

Behavioral and electrophysiological evidence for dysfunction of the forward model system in schizophrenia is growing and extends to auditory, visual, and somatosensory modalities. With the N1 component of the event-related potential, we showed that the auditory cortical response dampening observed in healthy comparison subjects during talking was not evident in patients with schizophrenia. Lindner et al. (22) reported that nondelusional patients were better able to perceive a stable environment during eye movements than were delusional patients, suggesting that delusions might be due to a specific deficit in the perceptual cancellation of sensory consequences of one’s own actions (e.g., references 21 and 23). Deficits in the self-monitoring of action may also underlie the failure of schizophrenia patients to correct action errors when only proprioceptive feedback is available (24). Shergill and colleagues (25) used a motor force-matching task and demonstrated sensory attenuation of self-produced stimulation. Patients with schizophrenia exhibited significantly decreased attenuation of the resulting sensation, and the authors suggested that this was due to a failure of self-monitoring and faulty internal predictions.

Current Approach

Our primary goal was to quantify the neural correlates of the hypothesized efference copy associated with speaking, to assess group differences in this neural signal, and to relate it to auditory hallucinations. We predicted that there would be a larger signal preceding speaking than listening and that this difference would be reduced in patients, especially those with severe auditory hallucinations.

Our secondary goal was to validate neural synchrony as a reflection of the corollary discharge by relating its strength to subsequent cortical responsiveness. In a similar analysis of self-paced button-press data (unpublished report by Ford et al.), we suggested that synchrony of neural activity preceding the press reflected the corollary discharge from the motor to the sensory cortex. Although we predicted that the strength of the corollary discharge would be related to suppression of the subsequent cortical response to the button press, we did not have a good measure of postpress response suppression. Here we used the difference in N1 amplitude during talking and listening, as we have done previously (26). We predicted that the strength of the prespeech corollary discharge would be directly related to suppression of N1 to speech onset during talking compared to listening.

Our final goal was to confirm our earlier findings (26) of a reduction in N1 suppression to speech onset in patients with schizophrenia but with a left hemisphere locus (27).

Methods and Materials

Participants

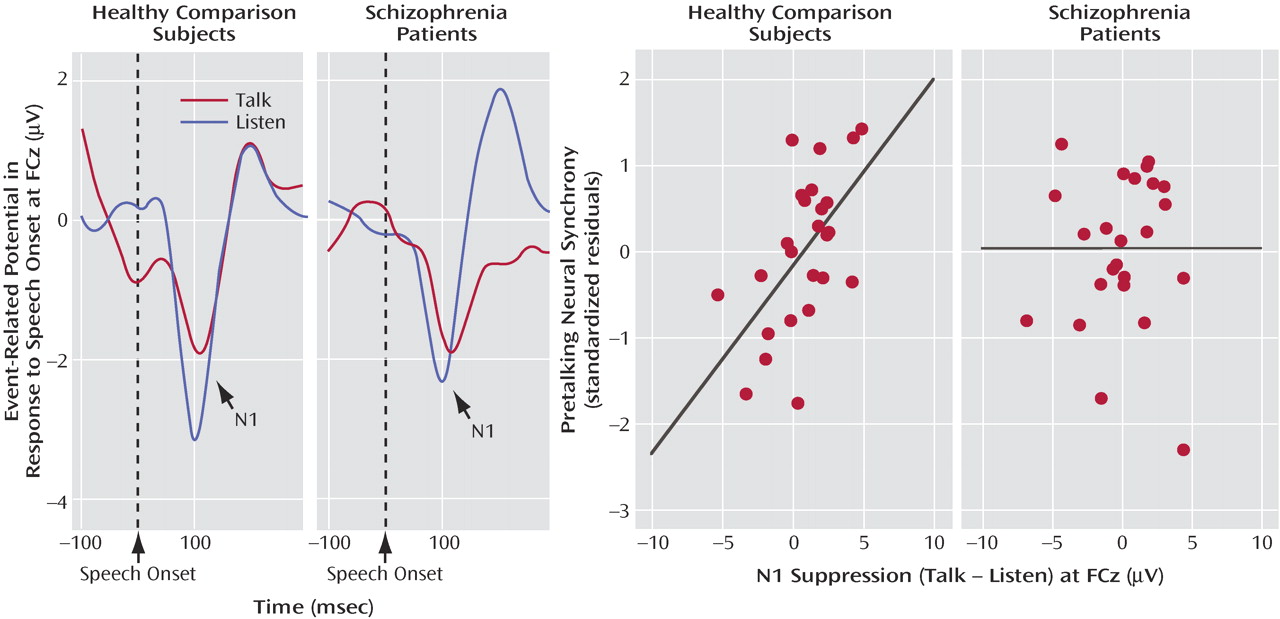

EEG data were acquired from 24 patients (four women) and 25 healthy comparison subjects (six women). All gave written informed consent after the procedures had been fully described. The patients and comparison subjects were matched for age and parental socioeconomic status. Demographic and clinical data are summarized in Table 1.

The patients were recruited from community mental health centers as well as from inpatient and outpatient services of the Veterans Affairs Health Care System in Palo Alto, Calif., and San Francisco. All patients were taking stable doses of antipsychotic medications and met DSM-IV (28) criteria for schizophrenia or schizoaffective disorder either based on the diagnosis from the Structured Clinical Interview for DSM-IV (SCID [29]) conducted by a psychiatrist or psychologist or by consensus of a SCID interview conducted by a trained research assistant and a clinical interview by a psychiatrist or psychologist. The patients were excluded if they met DSM-IV criteria for alcohol or drug abuse within 30 days before the study. In addition, patient and comparison participants were excluded for significant head injury, neurological disorders, or other medical illnesses compromising the CNS. Symptoms during the last week were rated with the Scale for the Assessment of Negative Symptoms (SANS) (30), the Scale for the Assessment of Positive Symptoms (SAPS) (31), and the Brief Psychiatric Rating Scale (BPRS) (32) by two (and sometimes three) independent raters attending the same rating session.

The comparison subjects were recruited by newspaper advertisements and word of mouth, screened by telephone with questions from the SCID nonpatient screening module (29), and excluded for any history of axis I psychiatric illness.

Task Design

The subjects uttered “ah” while a cue (yellow X) was on the screen (1.66 seconds). This was repeated five times in each talk block. For comparison with functional magnetic resonance imaging (fMRI) data collected in a different session (data to be presented separately), each talk block was followed by a rest block in which the subjects saw a sequence of five black cues instead of five yellow cues. The subjects were instructed to watch the screen during the rest block. “TALK” appeared at the beginning of each talk block and “REST” at the beginning of each rest block. Each talk-rest pair of blocks was repeated six times. At the end of six repeats, “END” appeared on the screen. All visual stimuli were uppercase against a blue background.

During the talk task, utterances were recorded for playback during the listen task. During the listen task, the visual display was similar to the talk task; cues were seen as before, but the instruction “TALK” was replaced with “LISTEN.” Also, for comparison with the fMRI data, magnetic resonance noise from the clustered acquisition sequence was played between the cues such that it was absent when speech was produced or played back.

The subjects were trained to produce uniform, brisk utterances with minimal tongue, jaw, and throat movements. Before data acquisition, the subjects uttered “ah” several times to facilitate sound system calibration and acclimation to the environment.

Instrumentation

An audio presentation system (Reaktor, Native Instruments, Berlin, Germany) allowed us to detect the subject’s vocalization and to amplify and play it back through headphones essentially in real time. The same program rectified and low-pass filtered incoming audio signals, detected the rising edge of the rectified and filtered signal, generated a trigger pulse, and inserted it into the EEG data collection system.
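
The trigger logic described above (rectify, low-pass filter, detect the rising edge) can be sketched as follows. This is an illustration only and not the Reaktor patch used in the study: the function name `detect_onset`, the one-pole filter, the 50 Hz cutoff, and the threshold value are all assumptions.

```python
import math


def detect_onset(samples, rate_hz, cutoff_hz=50.0, threshold=0.1):
    """Return the index of the first sample at which the rectified,
    low-pass filtered signal rises to the threshold, or None.

    Hypothetical sketch: a one-pole low-pass filter stands in for
    whatever filter the audio system actually applied.
    """
    # Smoothing coefficient of a one-pole low-pass filter.
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / rate_hz)
    envelope = 0.0
    for i, x in enumerate(samples):
        # abs() rectifies; the update line low-pass filters the result.
        envelope += alpha * (abs(x) - envelope)
        # The envelope starts at zero, so the first crossing is a rising edge.
        if envelope >= threshold:
            return i
    return None
```

In use, the returned sample index would mark where a trigger pulse is inserted into the EEG record; a lower cutoff gives a smoother envelope at the cost of a later trigger.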

EEG/Event-Related Potential Acquisition

EEG data were acquired (0.05–100 Hz band-pass filter, 1000 Hz analogue-to-digital conversion rate) from 27 sites referenced to the nose (F7, F3, Fz, F4, F8, FT7, FC3, FCz, FC4, FT8, T5, C3, Cz, C4, T6, TP7, CP3, CPz, CP4, TP8, P7, P3, Pz, P4, P8, TP9, and TP10). During preprocessing, data were rereferenced to the mastoid electrodes (TP9 and TP10). Additional electrodes were placed on the outer canthi of both eyes and above and below the right eye to measure eye movements and blinks (vertical and horizontal electro-oculogram). EEG data were separated into 500-msec epochs and time-locked to onset of the first speech sound on every trial. Trials containing artifacts (voltages exceeding 100 μV) were rejected, and then vertical electro-oculogram and horizontal electro-oculogram data were used to correct EEGs for eye movements and blinks in a regression-based algorithm (33). EEG epochs for these analyses spanned 500 msec, centered on speech onset.
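
Two of the preprocessing steps above, rereferencing to the mastoids and rejecting trials with voltages beyond 100 μV, might look like the sketch below. The function names and data layout are hypothetical, and two details are assumptions not stated in the text: that the mastoid reference is the sample-wise mean of TP9 and TP10, and that the criterion is applied to absolute voltage on every channel.

```python
def rereference_to_mastoids(channel, tp9, tp10):
    """Subtract the sample-wise mean of the two mastoid channels.

    Assumption: an averaged-mastoid reference; the text only says the
    data were rereferenced to TP9 and TP10.
    """
    return [v - (a + b) / 2.0 for v, a, b in zip(channel, tp9, tp10)]


def reject_artifacts(epochs, limit_uv=100.0):
    """Keep only epochs in which no sample on any channel exceeds the limit.

    Each epoch is a list of channels; each channel is a list of
    voltages in microvolts.
    """
    return [
        epoch
        for epoch in epochs
        if all(abs(v) <= limit_uv for channel in epoch for v in channel)
    ]
```

The regression-based ocular correction mentioned in the text is a separate step and is not shown here.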

EEG Analysis

Intertrial coherence analysis was implemented in EEGLAB (34). Intertrial coherence provided frequency- and time-specific measures of cross-trial phase synchrony with respect to speech onset. Intertrial coherence values were calculated with a moving fast Fourier transform window, which was 64 msec wide. This window was applied 200 times to each epoch, producing as many intertrial coherence data points. Data were extracted in 1-msec increments. Although the 64-msec window gave us excellent temporal resolution, we could not unambiguously resolve frequencies below 15.625 Hz because one complete cycle of a 15.625 Hz signal lasts 64 msec. Because the forward model signal is likely to be brief, we focused our analysis on the data derived from the 64-msec window and sacrificed precision in the frequency domain. Thus, the first bin in our analysis was 15.625 Hz, with the next at 31.25 Hz. It is important to note that nearby unresolved frequencies can influence a fast Fourier transform measure. A Hanning window was applied to each 64-msec section of data to minimize such frequency leakage, but this is not a perfect remedy (35). Thus, data extracted at 15.625 Hz include contributions from adjacent frequencies.
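
To make the windowed calculation concrete, the following sketch computes intertrial coherence for one window position and one frequency bin. It is not the EEGLAB implementation: the function names are hypothetical, and it assumes single-channel epochs sampled at 1000 Hz, so that a 64-sample window gives bins spaced 1000/64 = 15.625 Hz apart (bin 1 = 15.625 Hz, bin 2 = 31.25 Hz).

```python
import cmath
import math


def hann_window(length):
    """Symmetric Hanning taper of the given length."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (length - 1))
            for i in range(length)]


def intertrial_coherence(epochs, start, window=64, freq_bin=1):
    """Phase consistency across epochs for one window and one DFT bin.

    epochs: list of equal-length sample lists (one channel per epoch).
    start: index of the first sample of the analysis window.
    """
    taper = hann_window(window)
    phase_sum = 0j
    for epoch in epochs:
        # Single-bin discrete Fourier transform of the tapered segment.
        coef = sum(
            taper[n] * epoch[start + n]
            * cmath.exp(-2j * math.pi * freq_bin * n / window)
            for n in range(window)
        )
        if abs(coef) > 0.0:
            # Keep only the phase by normalizing to unit length.
            phase_sum += coef / abs(coef)
    return abs(phase_sum) / len(epochs)
```

Sliding `start` along the epoch one sample at a time would yield a time course of values at 1-msec resolution, each summarizing the 64 msec of data under the window.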

Event-Related Potential Analysis

Before identification of N1, data were band-pass filtered between 2 and 8 Hz to optimize measurement of N1. N1 was identified as the most negative point between 50 and 175 msec after speech onset. The voltage at that point was measured relative to a prespeech baseline (–100 to 0 msec).
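
The N1 measurement rule above can be sketched as a short function. The name `n1_amplitude` and its arguments are hypothetical; the sketch assumes an already filtered, averaged waveform stored as a list of voltages with a known sample index for speech onset.

```python
def n1_amplitude(erp, onset_index, rate_hz=1000):
    """Most negative voltage 50-175 msec after onset, relative to the
    mean of the 100 msec preceding onset.

    erp: list of voltages (microvolts) for one channel and condition.
    """
    per_ms = rate_hz / 1000.0
    # Prespeech baseline: -100 to 0 msec.
    baseline_start = onset_index - int(100 * per_ms)
    baseline_samples = erp[baseline_start:onset_index]
    baseline = sum(baseline_samples) / len(baseline_samples)
    # Search window: 50 to 175 msec after onset, inclusive.
    first = onset_index + int(50 * per_ms)
    last = onset_index + int(175 * per_ms)
    peak = min(erp[first:last + 1])
    return peak - baseline
```

With a 500-msec epoch centered on speech onset and 1000 Hz sampling, `onset_index` would be 250.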

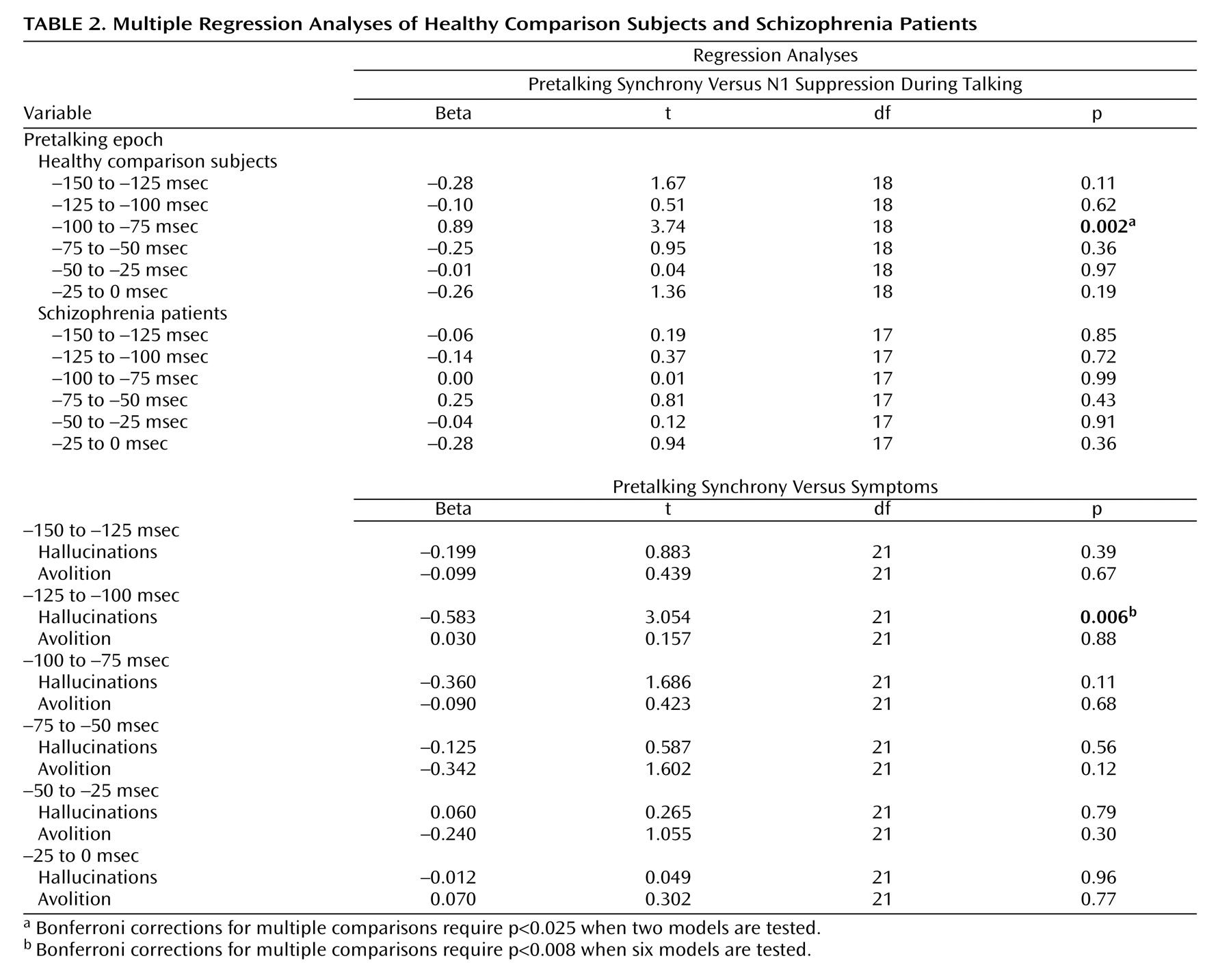

Statistical Analysis of EEG Data

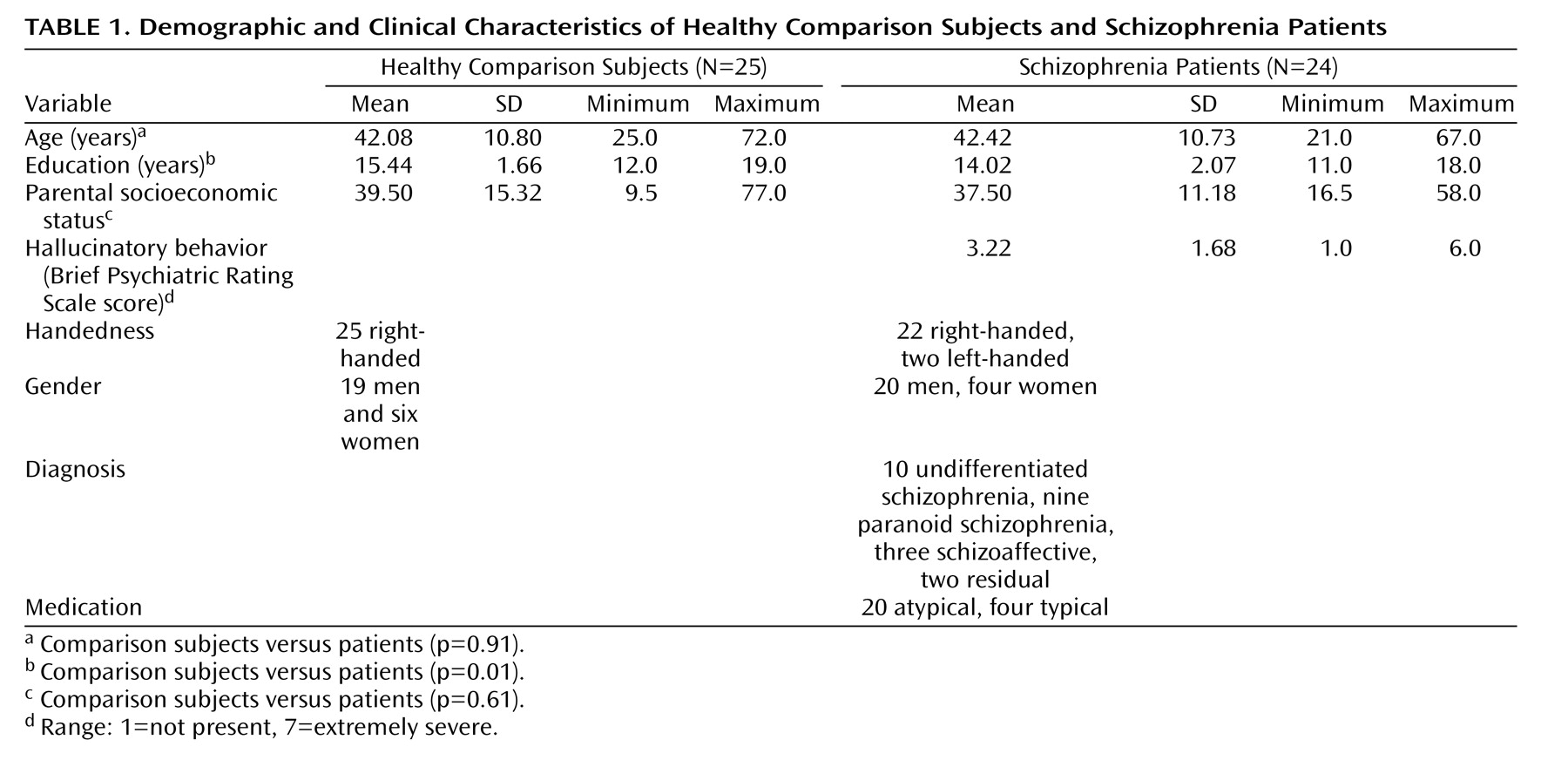

Intertrial coherence time-frequency plots for comparison subjects (Figure 1) showed increased phase synchrony between –150 and 0 msec before speech onset. To reduce the number of statistical tests, we focused on the slow EEG band at FCz. Data were subjected to a three-way analysis of variance (ANOVA) for group (comparison subjects versus patients), condition (talk versus listen), and time (six bins of 25 msec spanning 150 msec preceding speech onset). Interactions were parsed with follow-up ANOVAs (36). Greenhouse-Geisser correction for nonsphericity was used, as appropriate. We used a p<0.05 level of significance.
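
The time factor above (six 25-msec bins covering the 150 msec before speech onset) amounts to averaging the intertrial coherence time course within each bin. A minimal sketch follows, assuming one value per millisecond; the function and argument names are hypothetical.

```python
def prespeech_bin_means(itc_by_ms, onset_index, n_bins=6, width_ms=25):
    """Mean intertrial coherence in consecutive bins ending at onset.

    itc_by_ms: one value per millisecond for one subject and condition.
    Returns n_bins means, earliest bin first (-150 to -125 msec by default).
    """
    start = onset_index - n_bins * width_ms
    means = []
    for b in range(n_bins):
        segment = itc_by_ms[start + b * width_ms:start + (b + 1) * width_ms]
        means.append(sum(segment) / len(segment))
    return means
```

Each subject then contributes six values per condition to the group-by-condition-by-time ANOVA.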

Statistical Analysis of Event-Related Potential Data

N1 peak amplitudes off midline were assessed in a four-way ANOVA for the between-subjects factor of group and the within-subjects factors of condition (talk versus listen), hemisphere (left versus right), laterality (far from midline, closer to midline, and closest to midline), and caudality (frontal, frontal-central, central, central-parietal, and parietal). Data from the following electrodes were used in this analysis: left frontal: F7, F5, F3; right frontal: F8, F6, F4; left frontal-central: FT7, FC5, FC3; right frontal-central: FT8, FC6, FC4; left-central: T3, C5, C3; right-central: T4, C6, C4; left central-parietal: TP7, CP5, CP3; right central-parietal: TP8, CP6, CP4; left-parietal: T5, P5, P3; right-parietal: T6, P6, and P4.

Correlations

Symptoms With EEG

To establish the specificity of the relationship between hallucinatory behavior (the BPRS) and prespeech asynchrony, hallucinatory behavior was entered into a multiple regression model with avolition/apathy (SANS) to predict prespeech intertrial coherence at FCz for each of the six 25-msec epochs. Bonferroni corrections for multiple comparisons require p<0.008 for testing six models in this family.
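
The corrected threshold quoted above follows from dividing the familywise significance level by the number of models tested. The symbols α and m are notation assumed here for illustration.

```latex
\alpha_{\text{corrected}} \;=\; \frac{\alpha}{m} \;=\; \frac{0.05}{6} \;\approx\; 0.0083
```

This is reported as p<0.008 for the family of six regression models, one per 25-msec bin.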

EEG and Event-Related Potentials

The intertrial coherence values before talking at FCz were correlated with the N1 amplitude suppression during talking at FCz (talk – listen). A larger positive value of the N1 talk – listen difference reflected more suppression. Not all subjects showed suppression during talking; indeed, many patients and some comparison subjects have negative values. Each of the six time bins was entered into one multiple regression analysis for comparison subjects and one for patients. Bonferroni corrections for multiple comparisons require p<0.025 for testing two models in this family.
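
The sign convention for the suppression score can be illustrated with a short calculation. Because N1 is a negative-going component, a smaller (less negative) N1 during talking yields a positive talk – listen difference. The function name is hypothetical, and the example values are the group mean amplitudes reported in the Results, used here only to show the arithmetic rather than the FCz values entered into the regression.

```python
def n1_suppression(talk_uv, listen_uv):
    """Talk minus listen N1 amplitude; positive values indicate suppression."""
    return talk_uv - listen_uv


# Group means from the Results section, for illustration only.
comparison = n1_suppression(-1.62, -2.04)  # positive: N1 suppressed during talking
patients = n1_suppression(-2.05, -1.81)    # negative: no suppression
```

The threshold of p<0.025 quoted above follows from the same Bonferroni division, 0.05 divided by the two models in the family.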

Results

EEG

As can be seen in Figure 1, there was greater intertrial coherence preceding talking than listening (talk/listen: F=31.55, df=1, 47, p<0.0001) and a talk/listen-by-group interaction (F=6.91, df=1, 47, p<0.02). Although the talk-listen effect was stronger in comparison subjects (F=29.26, df=1, 24, p<0.0001), it was also significant in patients (F=5.43, df=1, 23, p<0.03). There was also a time-by-group interaction (F=2.53, df=5, 235, p<0.05), but the effect of time was not significant in either comparison subjects (p=0.28) or patients (p=0.28). Inspection of data in Figure 1 suggested that much earlier in the prespeech epoch the group difference was reduced or reversed. We specifically addressed whether patients had greater synchrony between –225 and –200 msec preceding vocalization, and, indeed, we found no talk/listen-by-group interaction (p=0.31), although there was greater synchrony before talking than listening (F=11.91, df=1, 47, p<0.001).

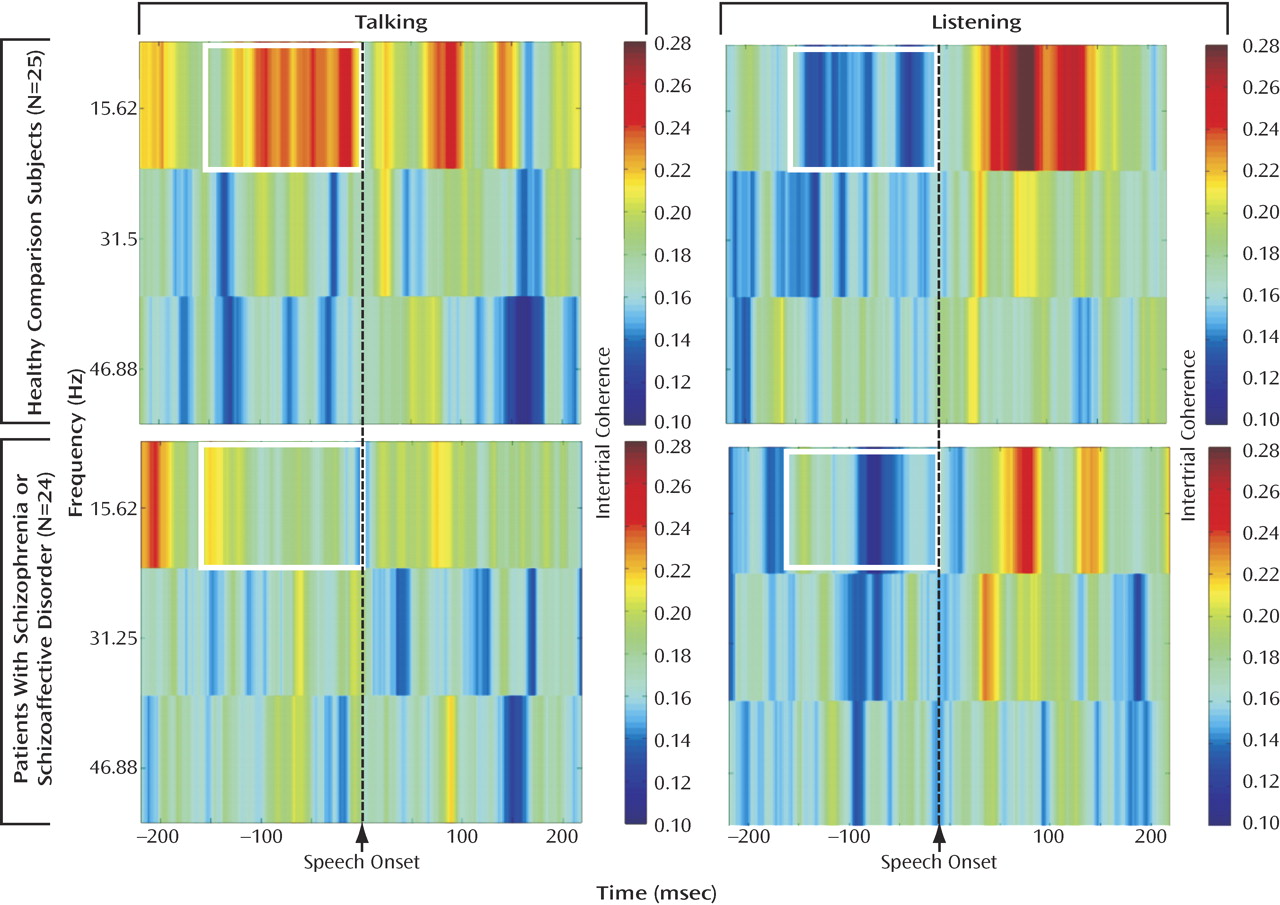

EEG and Hallucinations

When both hallucination severity and avolition/apathy were entered into a multiple regression analysis to control for the effects of one on the other, only hallucination severity was related to synchrony. A scatterplot of the inverse relationship between prespeech intertrial coherence and hallucination severity (residualized on avolition/apathy) can be seen in Figure 2. The scalp topography map shows that the strongest correlations between hallucinations and prespeech synchrony are at frontal-central sites.

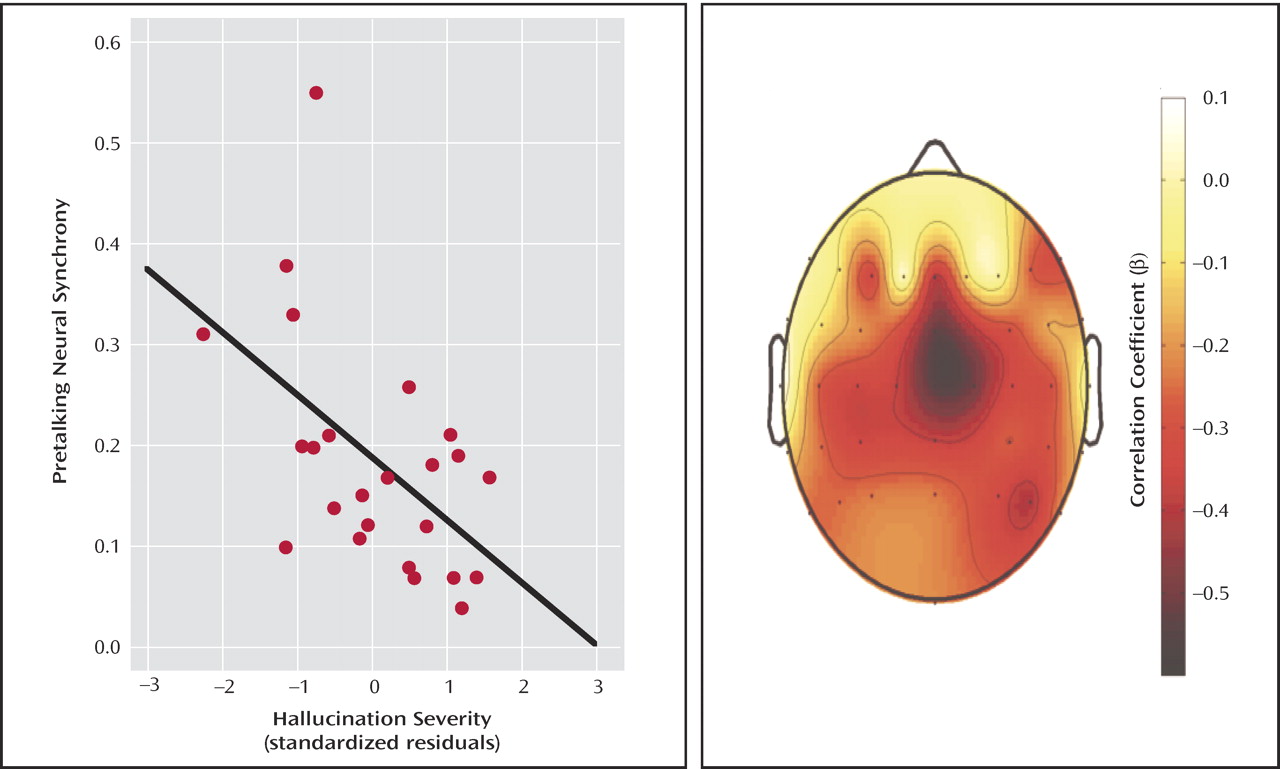

Event-Related Potentials

N1 amplitude to speech sound onset was affected by a talk/listen-by-group interaction (F=4.4, df=1, 47, p<0.05). In comparison subjects, N1 amplitude during talking (–1.62 ± 1.26 μV) was suppressed compared to listening (–2.04 ± 0.85 μV). In patients, N1 amplitude during talking (–2.05 ± 1.1 μV) was not suppressed compared to listening (–1.81 ± 0.88 μV). Although we predicted that this effect would be larger over the left than the right hemisphere (27), neither the talk/listen-by-hemisphere effect (F=0.063, df=1, 47, p=0.82) nor the talk/listen-by-group-by-hemisphere interaction (F=0.663, df=1, 47, p=0.42) was significant.

EEG/Event-Related Potential Correlations

We suggested that intertrial coherence preceding speech might be a reflection of the efference copy signal sent from speech production areas to the auditory cortex, where the resulting corollary discharge prepares auditory processing areas for the imminent arrival of the speech sound about to be produced. If so, efference copy/corollary discharge strength preceding speech should be related to the degree of suppression of auditory cortical responsiveness to the speech sound. To assess that association, we correlated N1 suppression at FCz with prespeech intertrial coherence at FCz. After we controlled for the contribution of intertrial coherence from the other time bins, intertrial coherence between –100 and –75 msec before speech onset was strongly related to N1 suppression (Figure 3) in comparison subjects but not patients (Table 2).

Sound Quality

The intensity of sounds did not differ between groups (p=0.88), but the patients (290 ± 137 msec) tended to produce sounds of longer duration than the comparison subjects (226 ± 74 msec; F=4.18, df=1, 48, p<0.05). In the comparison subjects, prespeech synchrony presaged sound quality: those who generated more prespeech synchrony between –100 and –75 msec (r=0.41, p=0.04) and between –75 and –50 msec (r=0.43, p=0.03) went on to produce sounds of longer duration, and those who generated more prespeech synchrony between –50 and –25 msec went on to speak louder. These relationships were not significant in the patients. However, in the patients, synchrony and sound quality were related in the moment that sounds were uttered; sounds of longer duration were associated with more neural synchrony between 0 and 25 msec (r=0.43, p<0.03), and more intense sounds were associated with more neural synchrony between 100 and 125 msec (r=0.43, p<0.04).

Discussion

With time-frequency decomposition of EEG recorded during a simple vocalization task, we examined phase synchrony of neural oscillations preceding speech onset. We found that both healthy comparison subjects and patients with schizophrenia showed an increase in phase synchrony during the 150 msec preceding an utterance in the lower frequency range studied. This is consistent with increased neural synchronization preceding whisking in the 7–12 Hz range (10) and the 30–35 Hz range (11) in rats. Like Hamada et al. (11), we suggest that this premovement burst of synchronous neural activity is a reflection of the forward model preparing the CNS for the sensory consequences of its own actions. Consistent with prespeech synchrony reflecting the forward model communication from speech production to reception areas, we found evidence in comparison subjects of a relationship between prespeech synchrony and N1 suppression during talking compared to listening.

Consistent with the theory that the forward model mechanism is dysfunctional in schizophrenia (e.g., 21, 23), this prespeech signal was smaller in patients and was not associated with suppression of cortical responsiveness to speech sounds. Nevertheless, there was still evidence of a corollary discharge signal in patients because the prespeech intertrial coherence was larger during talking than listening and the amount of prespeech synchrony in patients was inversely correlated with auditory hallucination severity. That is, in addition to dampening irrelevant sensations resulting from our own actions, the forward model may distinguish between internally and externally generated percepts. This would apply to memories and inner experiences because thoughts have been described as our most complex motor act (20). Specifically, if an efference copy of a thought or inner experience does not produce a corollary discharge of its expected sensory consequences, internal experiences may be experienced as external.

These data are similar to those recorded during self-paced button pressing in some of these patients (unpublished report by Ford et al.). In that study, we found that a lack of prepress neural synchrony over the contralateral sensory motor cortex in the patients was related to the motor symptoms of avolition and apathy and not to auditory hallucination severity. In the current study, when both hallucination severity and avolition/apathy were entered into a multiple regression analysis to control for the effects of one on the other, hallucination severity only was related to prespeech synchrony.

We are becoming increasingly aware that locally specialized functions in the brain must be coordinated with each other, and coordination failures may be responsible for a wide range of problems in schizophrenia, from psychotic experiences to cognitive dysfunction. The asynchrony seen in patients preceding self-initiated movements, such as talking and pressing a button, suggests that patients with schizophrenia are out of synch at a most basic level in tasks that should be relatively unaffected by differences in intellectual ability and motivation and in tasks that are not speaking-bound (unpublished report by Ford et al.). This suggests that these symptoms could result from a deficient message being sent to the sensory cortex that the action is “self-generated” and that it results in modality-specific symptoms.

EEG is an excellent way to study temporal synchrony, and new time-frequency analyses show promise as brain-imaging tools. Furthermore, neural oscillations are readily translated to more basic neural mechanisms studied in laboratory animals and in vitro. Although these data support the notion that the forward model system can be assessed with these tools and that this system is dysfunctional in patients, especially those who hallucinate, many questions still need to be addressed. Whether the deficit seen in patients is due to a faulty corollary discharge to the auditory cortex or to faulty processing of the information in the auditory cortex can be addressed in fMRI studies with this paradigm. Whether neural synchrony is abnormal in patients during inner speech can be addressed in cued-inner-speech studies such as we have done before (37). Whether the interplay of γ-aminobutyric acid (GABA) and glutamate affects the frequency (38) or the phase (39) of neural oscillations of premovement activity can be addressed with drug challenge studies in human and nonhuman primates. Relationships between neural synchrony and spoken sound quality should be addressed in a series of parametric manipulations.