Longitudinal studies are critically important for identifying childhood abnormalities that predict schizophrenia and schizophrenia spectrum disorders. High-risk and follow-back studies have the advantage of being specifically targeted for schizophrenia, but they are also subject to the problem of lack of representativeness. For example, relatively few individuals with schizophrenia have a parent with schizophrenia. General population studies avoid some of the potential biases inherent in high-risk and follow-back studies. Prospective data about developmental antecedents of schizophrenia from general population samples provide a valuable complement to high-risk studies.

High-risk studies have shown that childhood attentional impairments predict adult schizophrenia spectrum disorders or associated symptoms (1–3). Childhood and adolescent affective deficits also appear to be important factors in schizophrenia spectrum outcomes in high-risk children (4, 5). Family instability and poor childhood social or school adjustment have been associated with adult schizophrenia-related outcomes in high-risk and follow-back studies (6–9). Psychophysiological, neurological, and motor abnormalities have been found to be predictive of adult schizophrenia in high-risk studies (9, 10). In community sample studies, impaired childhood social adjustment, delayed motor development, speech problems, poor educational test performance, and mothers rated as having less than average parenting skills were each a significant predictor of adult schizophrenia (11, 12).

Low childhood IQ (or other measures of general intellectual ability) was also a predictor of adult schizophrenia in studies using each of the preceding strategies (12–16). The focus of this article is on change in IQ during childhood as a predictor of adult psychotic symptoms in a community sample unselected for psychiatric illness.

Our thinking about IQ change was influenced by the ideas of Barbara Fish, M.D., although she did not specifically address this issue. In conducting assessments over time, investigators have usually looked for stability of deficits or abnormalities. In contrast, Fish, who began studying infants at risk for schizophrenia in the 1950s, focused more on change over time than on stability (10). She postulated that an inherited neurointegrative defect was specific for the schizophrenia phenotype. “Pandysmaturation” was later invoked as an index of this defect in infancy. It consisted of 1) transient retardation and accelerated return to normal of motor or visual-motor development, 2) an abnormal developmental profile whereby earlier items on a single developmental examination are failed and later items are passed, and 3) retardation in skeletal growth (10). Several high-risk studies have shown patterns of uneven development consistent with pandysmaturation in children of parents with schizophrenia, although there are few data on the relationship to adult outcomes (10).

In several instances, predictors from the studies just discussed were based on assessments at multiple points in time. Not only does this approach help to illuminate the developmental trajectory of schizophrenia-related disorders, but it is also likely to reduce false positive predictions (17, 18). Using the notion of variability in a broader fashion, Hanson et al. (19) examined intraindividual cognitive variability in children with an index of variability across measures and over time (ages 4 and 7). They standardized psychological test scores, including IQ measures, and calculated the variance of the scores for each individual. The proportion of children with high variance scores was significantly higher for the high-risk children than for the low-risk children.
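The variability index described above can be illustrated with a minimal sketch. It assumes that each measure is converted to z scores across children and that the index is the variance of each child's z scores; the function name, the child labels, and the data are hypothetical, and the exact computation used by Hanson et al. may differ.

```python
from statistics import mean, pstdev, pvariance

def intraindividual_variance(scores):
    """scores maps a child id to a list of raw scores (same measure order for all).
    Returns a dict mapping each child id to the variance of that child's z scores."""
    ids = list(scores)
    n_measures = len(scores[ids[0]])
    # Standardize each measure across children so that measures share one metric.
    columns = [[scores[c][j] for c in ids] for j in range(n_measures)]
    mus = [mean(col) for col in columns]
    sds = [pstdev(col) for col in columns]
    z = {c: [(scores[c][j] - mus[j]) / sds[j] for j in range(n_measures)] for c in ids}
    # A child's index is the spread of his or her own standardized scores.
    return {c: pvariance(z[c]) for c in ids}

# Hypothetical raw scores on three measures for four children.
example = {
    "even": [100, 50, 10],
    "uneven": [130, 30, 10],
    "low": [70, 40, 7],
    "mid": [100, 60, 13],
}
variances = intraindividual_variance(example)
```

A child with a flat profile ("even") receives a small index, whereas a child whose scores are high on one measure and low on another ("uneven") receives a large one.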

These considerations suggest three alternative hypotheses regarding the relationship between childhood IQ and adult schizophrenia or psychotic symptoms. First, a “low IQ” hypothesis would be that low IQ in and of itself is a predictor of adult schizophrenia or psychotic symptoms. Second, a “nondirectional IQ change” hypothesis parallels the notion of uneven development or deviation from an expected trajectory; that is, large fluctuations in IQ during childhood, regardless of the direction of change, are a predictor. Third, a “directional IQ change” hypothesis would be that a large change in a specified direction is a predictor; the logical choice in this case would be IQ decline.

METHOD

Participants

The participants were a subset of the offspring at the Providence, R.I., site of the National Collaborative Perinatal Project. Details of the National Collaborative Perinatal Project, which was designed to evaluate factors associated with neurodevelopmental disorders of childhood, have been described previously (20). Pregnant women were recruited, usually at their first prenatal visit, at 12 locations in the United States from 1959 to 1966. The women were followed throughout pregnancy, and their children were followed to age 7. Extensive prenatal and maternal data were collected, along with results of repeated medical, neurological, and psychological examinations of the children. A total of 4,140 pregnancies were included in the Providence cohort (21).

Buka et al. (21) followed up a selected sample of 1,068 individuals comprising offspring with pregnancy and delivery complications and a matched group without complications. At follow-up, 140 were deceased, adopted, or otherwise ineligible. Of the remaining 928, 693 (75%) were interviewed at an average age of 23 years. There were no differences in interview rates for the individuals with and without complications. Face-to-face interviews were conducted with 85% of the offspring; 15% were interviewed by telephone. The present sample of individuals who underwent IQ testing at both ages 4 and 7 comprises 547 (79%) of the 693 interviewed offspring (59% of the total eligible cohort). Of the 547 participants, 316 (58%) were women and 381 (70%) were white; the mean parental socioeconomic rating at study entry (N=528) was 45.2 (SD=18.8) on a 0–99 scale based on a U.S. Census Bureau instrument (22). This group of 547 was similar to the entire sample of 693 in terms of sex distribution, age at assessment, and parental socioeconomic status, but it had fewer minority participants. Written informed consent was obtained from all participants after the research procedures were fully explained.

Measures

Diagnosis and symptom ratings. Adult psychiatric symptoms and lifetime DSM-III diagnoses were determined on the basis of version III of the National Institute of Mental Health Diagnostic Interview Schedule (DIS), a structured interview suitable for large community samples (23). The DIS was administered by trained interviewers. We placed particular emphasis on minimizing false positive ratings of psychotic symptoms. In accordance with standard DIS administration procedures, if the interviewee acknowledged any symptoms, he or she was further queried to determine symptom severity and any known precipitating conditions. Subthreshold symptoms and symptoms attributed to medications, substance use, and/or physical conditions did not qualify. In addition, the interviewers were instructed to ask for and record verbatim examples of potentially qualifying symptoms. Two expert diagnosticians subsequently reviewed all available information and reevaluated the presence of psychotic symptoms to rule out those that were not clinically meaningful. For example, a person responded positively to a question about events having particular and unusual meaning specifically for him or her; on review it was determined that this individual was alluding to personal values rather than delusions of reference.

Of the 547 offspring, 25 experienced psychotic symptoms according to the DIS interview alone. The expert reevaluation, on which there was complete interrater agreement, resulted in far more conservative ratings; seven individuals originally rated as having psychotic symptoms were reclassified as nonpsychotic. On the basis of the reevaluation, eight (2%) of the 547 offspring were rated as having definite psychotic symptoms at age 23; 18 (3%) individuals were included when the threshold was probable or definite psychotic symptoms. Among these 18 individuals, there were five with persecutory delusions, five with hallucinations, one with bizarre delusions, one with delusions of reference, one with other delusions, two with hallucinations and persecutory delusions, and three with persecutory and bizarre delusions. In the analyses, the group of 18 offspring with probable or definite psychotic symptoms was compared with the remainder of the sample (without psychotic symptoms).

IQ measures. The Stanford-Binet IQ test (

24) was administered at age 4. An abbreviated version of the Wechsler Intelligence Scale for Children (WISC) (

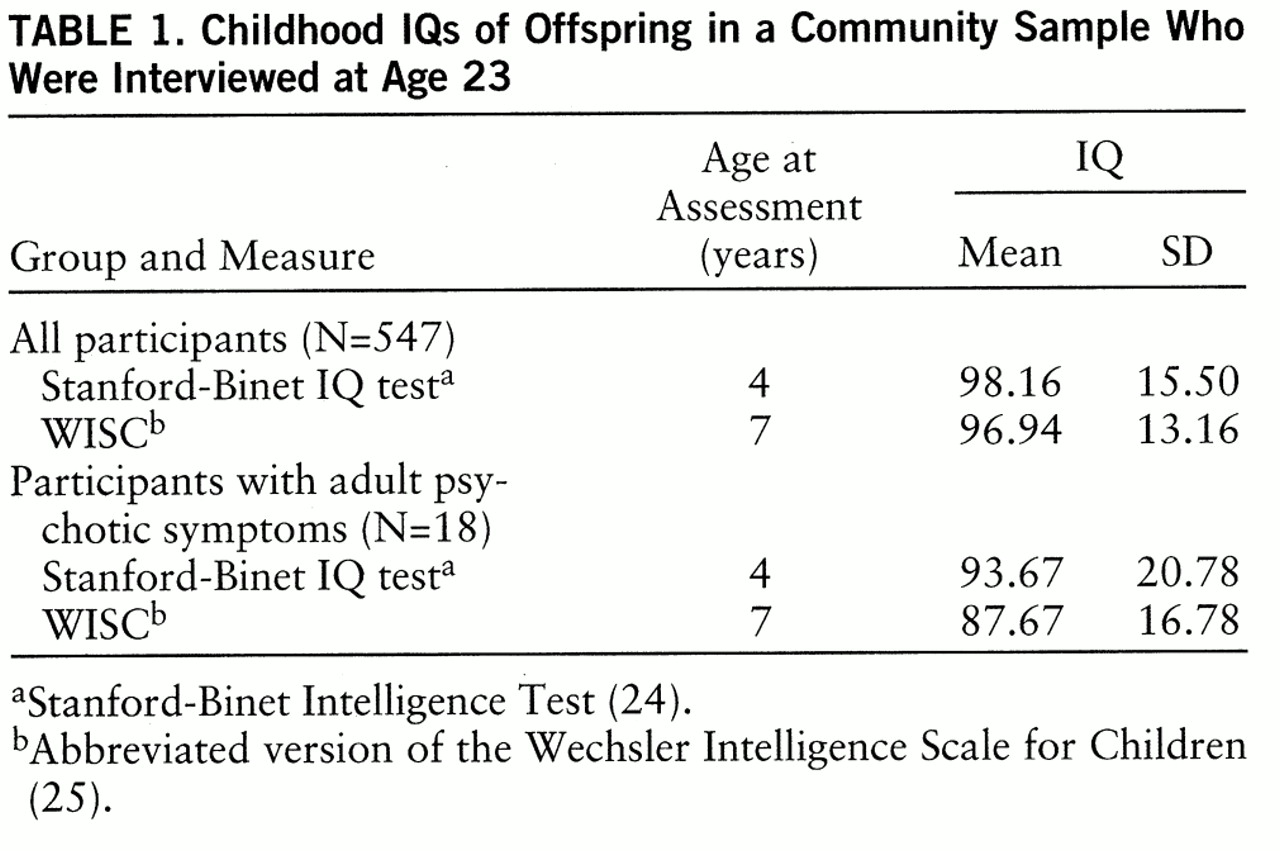

25) including the information, vocabulary, digit span, comprehension, block design, picture arrangement, and coding subtests was administered at age 7. To provide a common metric, we standardized the IQ measures so that each had a mean of 100 and a standard deviation of 15 for the entire Providence sample of the National Collaborative Perinatal Project. These are the general population means and standard deviation values for the WISC, whereas the Stanford-Binet test has a mean of 100 and a standard deviation of 16. Mean IQs for the study sample are shown in

table 1.
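The standardization step described above amounts to a linear rescaling of each test's scores within the reference sample. The following is a minimal sketch, assuming the population formula for the standard deviation (the text does not say which formula was used); the function name `rescale` and the scores are hypothetical.

```python
from statistics import mean, pstdev

def rescale(scores, target_mean=100.0, target_sd=15.0):
    """Linearly rescale scores to the target mean and standard deviation."""
    mu = mean(scores)
    sd = pstdev(scores)  # population SD; an assumption, not stated in the text
    return [target_mean + target_sd * (s - mu) / sd for s in scores]

# Hypothetical raw age 4 scores for a small reference sample.
raw_age4 = [84, 100, 116, 92, 108]
scaled_age4 = rescale(raw_age4)
```

Applying the same function to the age 4 and age 7 scores separately places both tests on one metric, so a given standardized score denotes the same relative standing in the reference sample at either age.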

Indices of IQ change. We applied a regression approach to avoid the psychometric artifacts inherent in raw difference scores, i.e., the curvilinear relationship between difference scores and total score for any two tests (26). For the entire Providence sample, we regressed standardized age 7 IQs on age 4 IQs (N=2,688). The residual (observed minus predicted) score tells how much higher or lower than expected an individual is from the predicted score; residual scores are comparable regardless of absolute level of performance. Thus, when we refer to IQ change, we are actually referring to increase or decline in comparison with expectation based on predicted score.

For this method to be valid, the pairs of tests being used should be fairly highly correlated (26). The correlation (r) between the Stanford-Binet test and the WISC was 0.65 (df=2686) for the entire Providence sample and 0.60 (df=545) in the present study (p<0.001 in both cases).
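The residualized change score and the accompanying correlation check can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: it uses ordinary least squares with a single predictor, and the function names and IQ values are hypothetical.

```python
from statistics import mean

def fit_line(x, y):
    """Ordinary least squares slope and intercept for y regressed on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx

def residual_change(x, y):
    """Observed minus predicted y; negative values mean lower than expected."""
    slope, intercept = fit_line(x, y)
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

def pearson_r(x, y):
    """Pearson correlation between x and y."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / (sxx * syy) ** 0.5

# Hypothetical standardized IQs for six children.
age4 = [85, 95, 100, 105, 115, 120]
age7 = [88, 96, 99, 104, 110, 95]
residuals = residual_change(age4, age7)
r = pearson_r(age4, age7)
```

In these hypothetical data the first child gains 3 points in raw terms but still falls below the regression prediction, which illustrates how a raw difference and a residualized score can disagree in sign.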

Because only a small proportion of the general population would be expected to experience psychotic symptoms, we hypothesized that if IQ change were a meaningful predictor variable, then individuals at the extremes of the IQ change distribution would be most likely to develop psychotic symptoms. Consequently, we performed deviant responder analyses (17), dividing the 547 offspring into the highest (largest IQ increase) and lowest (largest IQ decline) deciles, the next highest and lowest 15%, and the middle 50%.
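The five-group split described above can be sketched by ranking the residualized scores and cutting at the 10th, 25th, 75th, and 90th percentiles. The cutoff rule, the tie handling, the function name, and the group labels are assumptions made for illustration; the text does not specify how boundary cases were assigned.

```python
from collections import Counter

def deviant_responder_groups(residuals):
    """Label each participant by rank position: 10% / 15% / 50% / 15% / 10%."""
    n = len(residuals)
    # Sort indices from most negative residual (largest decline) upward.
    order = sorted(range(n), key=lambda i: residuals[i])
    labels = [""] * n
    for rank, i in enumerate(order, start=1):
        # Integer comparisons avoid floating-point issues at the cut points.
        if 100 * rank <= 10 * n:
            labels[i] = "largest decline"
        elif 100 * rank <= 25 * n:
            labels[i] = "moderate decline"
        elif 100 * rank <= 75 * n:
            labels[i] = "middle"
        elif 100 * rank <= 90 * n:
            labels[i] = "moderate increase"
        else:
            labels[i] = "largest increase"
    return labels

# Hypothetical residuals for 20 participants.
demo_labels = deviant_responder_groups([float(v) for v in range(20)])
demo_counts = Counter(demo_labels)
```

Under this particular rule, a sample of 547 yields 54 participants in the lowest group, which agrees with the bottom-decile N reported in the Results, although other reasonable rules would give slightly different group sizes.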

RESULTS

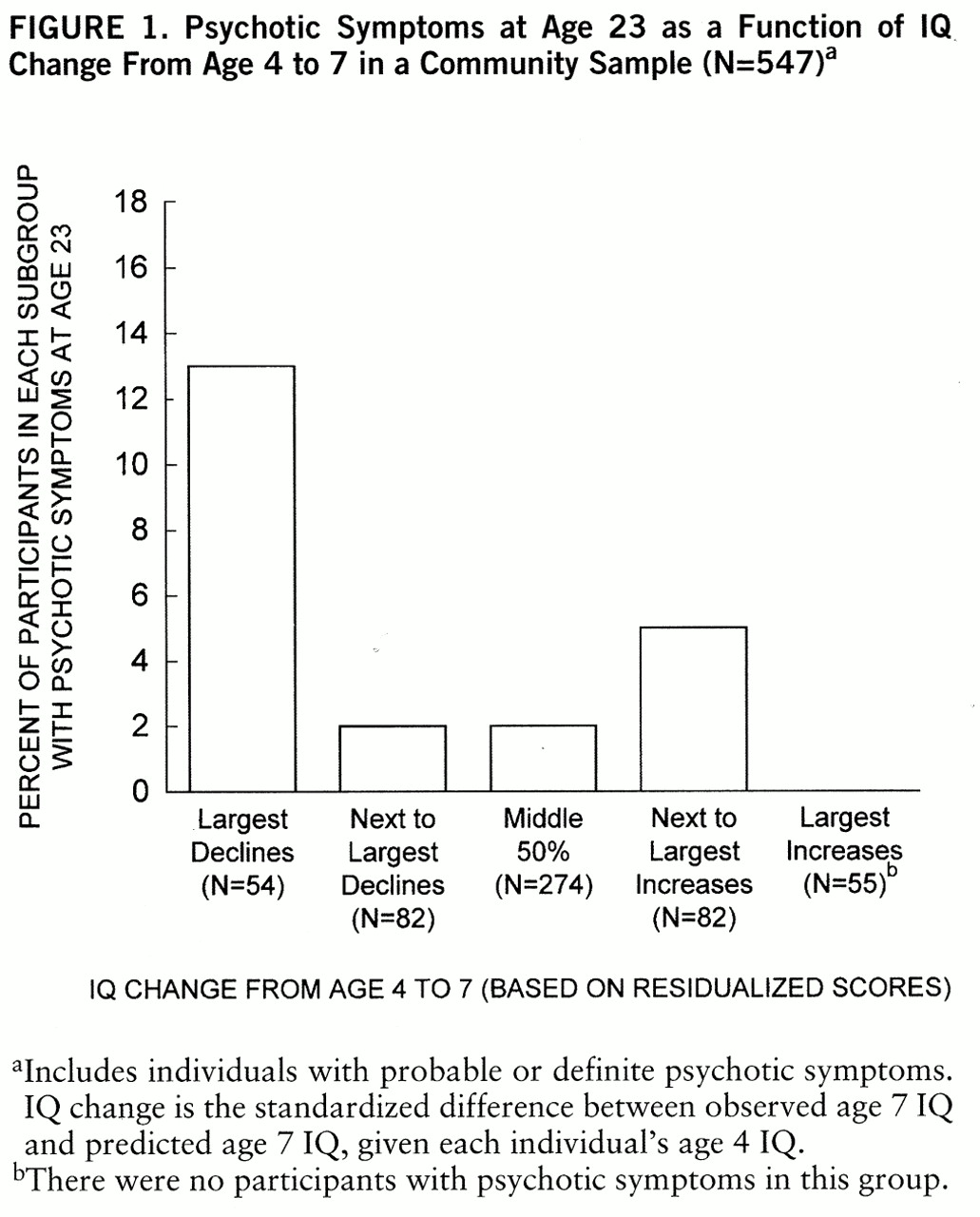

Figure 1 shows the proportion of participants with adult psychotic symptoms grouped by amount of IQ change from age 4 to 7. The subgroups other than that with the largest IQ decline did not differ in rates of later psychotic symptoms (χ²=4.10, df=3, p=0.25). Only individuals who had very large declines were more likely to experience later psychotic symptoms. Consequently, we compared the group with largest IQ decline (bottom decile; N=54) with the other four groups combined (N=493).

Individuals in the bottom decile (those with much larger than expected declines from age 4 to 7) were significantly more likely to have psychotic symptoms at age 23: 13% (seven of 54) versus 2% (11 of 493) (χ²=17.61, df=1, p=0.0001; odds ratio=6.62, 95% confidence interval=2.52–17.42).
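The comparison above can be checked from the reported counts alone. The sketch below assumes an uncorrected Pearson chi-square for the 2×2 table and a crude odds ratio with a Wald (log-based) 95% confidence interval; the function names are hypothetical, and the estimator actually used for the published odds ratio is not stated in the text.

```python
import math

def pearson_chi2_2x2(a, b, c, d):
    """Uncorrected Pearson chi-square for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))

def odds_ratio_wald(a, b, c, d, z=1.96):
    """Crude odds ratio with a Wald confidence interval on the log scale."""
    odds_ratio = (a * d) / (b * c)
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se)
    upper = math.exp(math.log(odds_ratio) + z * se)
    return odds_ratio, lower, upper

# Counts from the text: 7 of 54 with symptoms in the largest-decline group,
# 11 of 493 with symptoms in the remaining four groups combined.
chi2 = pearson_chi2_2x2(7, 47, 11, 482)
odds_ratio, ci_lower, ci_upper = odds_ratio_wald(7, 47, 11, 482)
```

The uncorrected chi-square reproduces the reported value of 17.61. The crude odds ratio from these counts is roughly 6.5, with a Wald interval of roughly 2.4 to 17.6, which is close to but not identical with the published estimate; the small difference presumably reflects a different estimation method.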

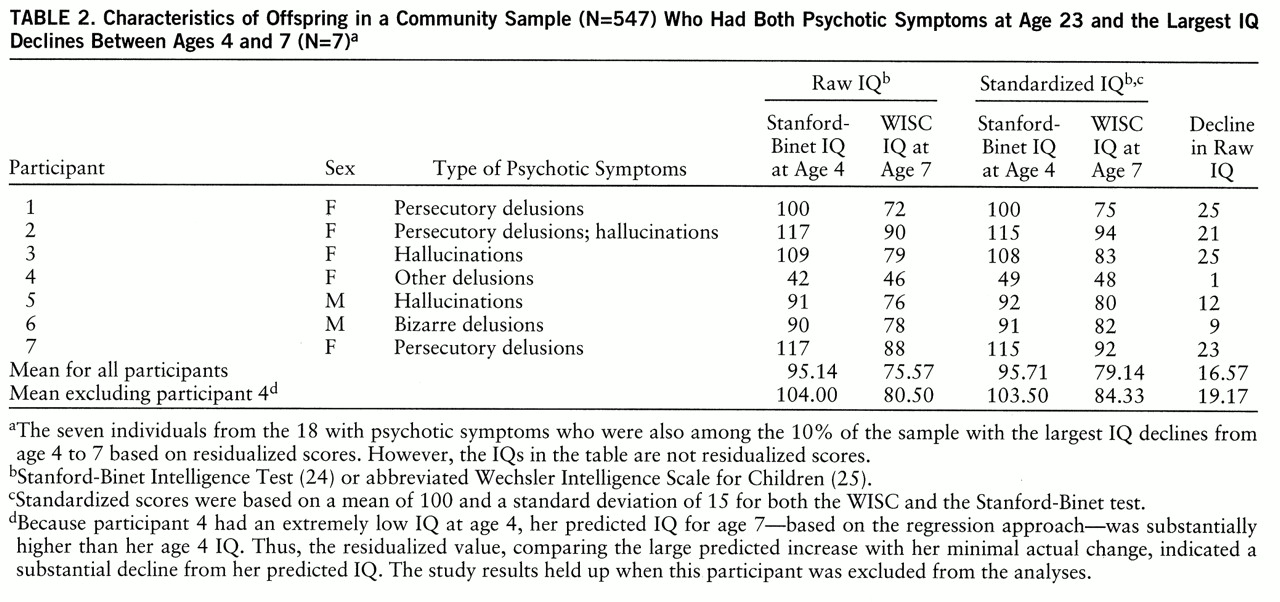

Table 2 shows characteristics of the seven participants who had both larger than expected childhood IQ declines and adult psychotic symptoms. Analyses based on raw differences, rather than residualized scores, between age 4 and 7 IQs did not significantly predict psychotic symptoms; the rates of psychotic symptoms at age 23 were 7% for the participants with the largest raw childhood IQ decline (four of 54) and 3% for the remainder of the respondents (14 of 493) (χ²=3.05, df=1, p=0.08).

Age 7 IQ alone predicted psychotic symptoms at age 23 nearly as well as the residualized score did. As with the residualized scores, the bottom decile of age 7 IQs (IQ≤80) was compared with all others combined. The participants with low age 7 IQs were significantly more likely to have psychotic symptoms at age 23: 11% (six of 54) versus 2% (12 of 493) (χ²=10.80, df=1, p=0.001). In contrast, when the bottom decile for age 4 IQs was compared with the rest of the participants, there was no significant difference in the rates of later psychotic symptoms: 6% (three of 54) versus 3% (15 of 493) (χ²=0.97, df=1, p=0.33).

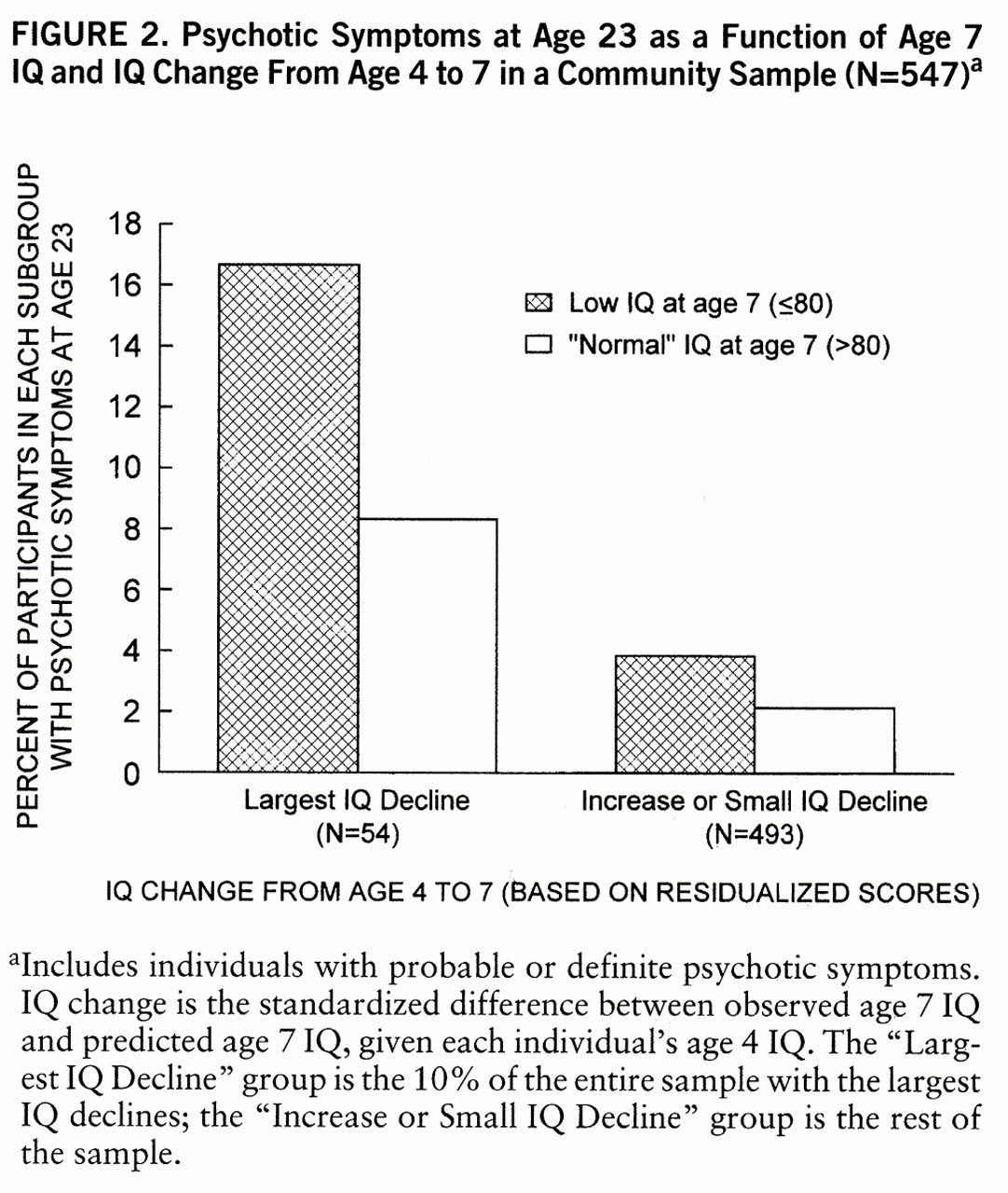

The question of whether the results could be accounted for simply by low age 7 IQ warranted further examination because a group with large IQ declines between ages 4 and 7 would also be more likely to have relatively low IQs at age 7. We divided the participants into four groups: 1) low age 7 IQ with large IQ decline between ages 4 and 7, 2) “normal” age 7 IQ with large IQ decline between ages 4 and 7, 3) low age 7 IQ with small IQ decline or increase between ages 4 and 7, 4) “normal” age 7 IQ with small IQ decline or increase between ages 4 and 7. “Low” age 7 IQ was defined as 80 or below (bottom decile). “Normal” IQ was used as a shorthand reference to IQs above 80. “Large” IQ decline between ages 4 and 7 refers to the bottom decile for decline (i.e., largest IQ declines). The results are illustrated in figure 2. There was a highly significant trend across these four groups such that the two groups with the largest IQ declines (two leftmost bars in figure 2) had the highest proportions of individuals with psychotic symptoms at age 23 (Mantel-Haenszel χ²=20.75, df=3, p=0.001).

We also considered the potential role of demographic factors in these results. The sex ratio did not differ between groups: 61% women and 39% men for the group with large IQ declines versus 57% women and 43% men for the rest of the sample (χ²=0.27, df=1, p=0.60). Socioeconomic status could also be an important factor in the prediction of psychotic symptoms because it is often associated with IQ (27). There were modest correlations between socioeconomic status and IQ at ages 4 and 7 (r=0.30, df=531, p<0.001 in both cases). Socioeconomic status was significantly lower in the group with the largest IQ decline (mean=40.09, SD=21.18) than in the other study participants (mean=46.42, SD=29.40) (t=2.25, df=531, p=0.02), but there was no significant difference between the mean scores of the participants with and without psychotic symptoms (mean=48.22, SD=19.08, versus mean=45.69, SD=19.69) (t=–0.54, df=531, p=0.59). Thus, socioeconomic status appeared to be associated with IQ decline but not with later psychotic symptoms.

As another way of examining the relationship among these factors, we performed a logistic regression analysis in which IQ change, age 7 IQ, and socioeconomic status were included as predictors of the presence or absence of psychotic symptoms at age 23. IQ change and age 7 IQ were each dichotomized at the bottom decile because theoretical considerations led us to focus on the extreme end of the distributions (i.e., the deviant responders). Socioeconomic status was dichotomized on the basis of a median split to maximize statistical power. The overall model was highly significant (χ²=15.20, df=3, p<0.002). When each predictor variable was tested after adjustment for the other predictors, the results were as follows. IQ change: χ²=6.10, df=1, p<0.02; age 7 IQ: χ²=1.55, df=1, p=0.21; socioeconomic status: χ²=2.84, df=1, p<0.10. Although low socioeconomic status may have some predictive value, these results are consistent with the notion that IQ change from age 4 to 7 is a more important predictor of psychotic symptoms at age 23 than either socioeconomic status or age 7 IQ alone.

Finally, we examined the ability of IQ decline between ages 4 and 7 to predict other types of psychiatric symptoms. Large IQ decline was not associated with nonpsychotic symptoms, whether we looked at the presence of any symptoms or the 10% of individuals with the most symptoms in a given DIS category. This relationship was assessed for symptoms in the following DIS modules: depression; mania; anxiety disorders (including phobias, panic disorder, and obsessive-compulsive disorder); antisocial personality disorder; childhood conduct disorder; alcohol abuse; drug abuse; and total number of any symptoms. The differences in rates of these symptoms between the group with large IQ declines and the other participants were trivial and in some cases were in the opposite direction of the results for psychotic symptoms.

DISCUSSION

We found that a substantially larger than expected IQ decline from age 4 to 7 was associated with a rate of psychotic symptoms 16 years later that was nearly seven times as high as that for individuals without large childhood IQ declines. This result supports the “directional IQ change” hypothesis; that is, a large decline, not simply a large fluctuation, predicted later psychotic symptoms. There was also support for the “low IQ” hypothesis in that low age 7 IQ alone also predicted psychotic symptoms at age 23. However, further analysis suggested that IQ decline was a more important factor. This distinction is perhaps most clearly seen by inspection of figure 2; individuals with low age 7 IQs who did not experience substantial IQ decline between ages 4 and 7 (second bar from right) still had a lower rate of psychotic symptoms at age 23 than individuals with “normal” age 7 IQs who did experience substantial IQ decline during childhood (second bar from left). Our data also indicated that IQ decline was a stronger predictor of psychotic symptoms than was parental socioeconomic status.

Given the small number of individuals with psychotic symptoms, we chose to include those with either probable or definite symptoms. Although inclusion of individuals with probable symptoms could have increased the false positive rate, at least two considerations argue against the possibility that false positives contributed to the observed results. First, it is likely that our review of the DIS interviews substantially reduced false positives; seven of the 25 individuals originally classified as positive for psychotic symptoms were reclassified as negative. Second, false positives would be most likely to reduce the chances of finding significant results. Indeed, there were slightly stronger significance levels for analyses comparing only the eight individuals with definite psychotic symptoms to the rest of the sample.

The predictive value of childhood IQ decline was specific for psychotic symptoms. The group with large IQ declines was not more likely to manifest symptoms of mania, depression, anxiety disorders, antisocial personality disorder, or alcohol or drug abuse. Despite the specificity of the findings, we cannot be certain about specificity for schizophrenia per se. Four individuals in this study were diagnosed with schizophrenia or schizophreniform disorder at the age 23 assessment. One of those was in the large-IQ-decline group (one of 54, or 1.85%); there was one in each of the next three groups and none in the large-increase group (three of 493, or 0.61%). Thus, the large-IQ-decline group had about double the roughly 1% population prevalence of schizophrenia and the rest of the participants had a somewhat lower than expected rate. This corresponds to an odds ratio of 2.83, which, however, was not significant. On the other hand, at age 23 the study participants were in the early part of the risk period for schizophrenia. Some nonschizophrenic participants, including those who have not yet experienced any psychotic symptoms, may still go on to develop schizophrenia.

Parallels between the present study and studies of schizophrenia further suggest that our findings are indeed likely to be relevant to schizophrenia. Studies of schizophrenia have examined and supported the “low childhood IQ” hypothesis (12–16). Other findings emphasize the value of looking at developmental changes or trajectories. Findings from one longitudinal study of a community sample (12) showed some consistency with the “IQ decline” hypothesis, showing a trend toward increasing overrepresentation of preschizophrenic individuals in the lower one-third of the distribution of intellectual functioning assessed at ages 8, 11, and 15. There is evidence that differences other than IQ between high-risk and control children are greater during adolescence than during earlier childhood (2, 28). In showing a meaningful difference between individuals with low IQs at age 7 whose IQs were always low and those whose low IQs represent substantial declines from age 4, our data suggest the importance of even earlier developmental trajectories, at least in regard to development of psychotic symptoms. Few studies have examined prospective data from this early in childhood (5, 10). Moreover, the present study was carried out with a community sample that was unselected for psychiatric illness, thus extending the generalizability of the findings beyond that of high-risk samples.

Although predictive of psychotic symptoms, childhood IQ decline may still not, strictly speaking, be an entirely causal factor. IQ decline would be more likely to be a truly causal factor if it were largely the result of extrinsic, socioenvironmental factors. However, if socioenvironmental influences were the primary cause, it seems unlikely that IQ decline would predict psychotic symptoms only. In addition, socioeconomic status, which might be considered an extrinsic factor, was less strongly predictive of psychotic symptoms than was IQ decline. IQ decline also remained significant as a predictor even after socioeconomic status was accounted for. Thus, a more parsimonious explanation may be that childhood IQ decline reflects neurobiological processes intrinsic to the specific development of psychotic symptoms. If childhood IQ decline is specific for schizophrenia and not just psychotic symptoms, this explanation would also be consistent with the increasingly accepted notion of schizophrenia as a neurodevelopmental disorder.