“Always remember that you are absolutely unique. Just like everyone else.”

Margaret Mead

The incredible diversity in psychopathology in the acute aftermath of trauma has fueled an ongoing search to discover key biotypes that account for these clinical trajectories. In this issue of the

Journal, Ben-Zion and colleagues report on a valiant attempt to replicate findings of functional MRI (fMRI)-based biotyping that predicts trauma-related psychopathology in the acute aftermath of trauma (

1). Findings regarding these biotypes were first discovered and internally replicated from the AURORA study (

2) and reported in the

Journal last year by Stevens and colleagues (

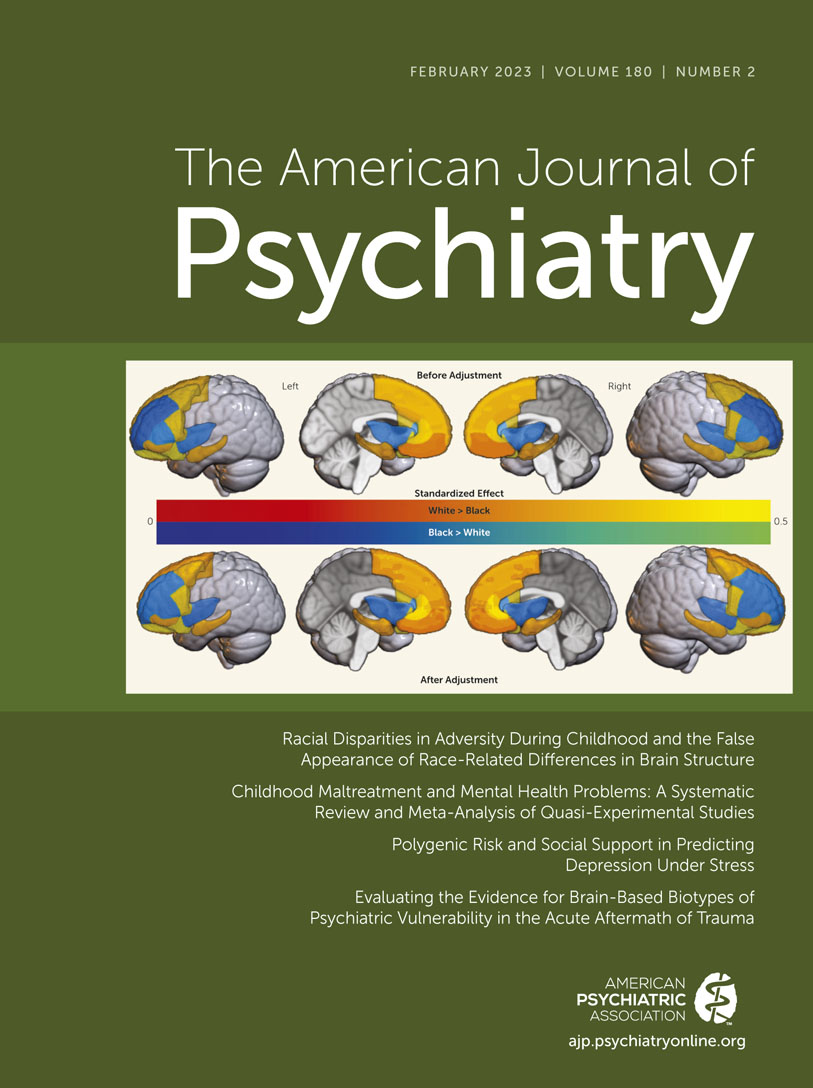

3). The original finding was that individuals who had been evaluated in the emergency department for recent significant traumatic event exposure and had participated in several tasks while undergoing fMRI could be grouped into clusters based on brain profiles in response to these tasks. The clusters were predictive of 6-month PTSD and anxiety symptom outcomes (but not depressive, dissociative, or impulsivity symptoms).

These remarkable findings led Ben-Zion et al. to attempt a “conceptual nonexact replication” of that study, which, we think, means they did their best to externally replicate the findings. Their Neurobehavioral Moderators of Posttraumatic Disease Trajectories (NMPTDT) study had, in fact, begun before the AURORA study, so the data collection protocols of the two studies were not harmonized a priori. But in an exemplary act of scientific collaboration, the two research groups worked together to make the NMPTDT analyses mirror those of the AURORA study as closely as possible. This collaboration extended to sharing anatomical masks for the fMRI analyses, ensuring that the same brain regions were examined in both studies. In addition to the replication attempt, Ben-Zion and colleagues conducted analyses to address a critical question: whether clustering was advantageous at all.

Stevens and colleagues replicated clusters across separate AURORA discovery and replication samples, showing that clusters characterized as “reactive-disinhibited,” “low reward–high threat,” and “inhibited” were observed in each sample (3). This result is valuable in suggesting that brain-based biotypes may be replicable, with the caveat that it was an internal replication: participants in the two cohorts came from the same clinical sources, were assessed with the same measures, and so on. It is uncertain whether those biotypes would be discernible in patients whose clinical and sociodemographic characteristics differ from those enrolled in the AURORA study and, importantly, whether they are stable across measurement time points (e.g., 4 weeks or 2 months after the trauma as opposed to ∼2 weeks). Moreover, the initial paper did not determine whether the clusters reliably predicted outcomes in each of the two cohorts separately. Instead, the authors combined the samples to test their hypotheses about prediction. This was presumably done to maximize statistical power, a very reasonable goal, but this design choice left unanswered the key question of whether the results generalize to other nonexact samples and contexts.

Working with the AURORA investigators, the NMPTDT investigators attempted a nonexact replication in their somewhat similar sample, assessed with somewhat similar methods. This is an ideal approach to determining the extent to which the AURORA findings generalize. Ben-Zion et al. recruited individuals from the emergency department after trauma exposure to complete fMRI tasks and then assessed clinical outcomes longitudinally at 6 and 14 months. They identified four clusters from the task-related fMRI data, three of which shared commonalities with the original biotypes (for example, one group showed high threat and high reward reactivity, as did the original “reactive-disinhibited” group). However, cluster membership did not predict any of the clinical outcomes (PTSD or anxiety) at the 6- or 14-month follow-up. Moreover, the optimal cluster solution did not fit the data significantly better than a null model in which no underlying clusters were present.
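The editorial does not spell out how this comparison against a no-cluster null was carried out. As a generic illustration of the idea only (not Ben-Zion et al.'s procedure), the Python sketch below compares the within-cluster sum of squares (WSS) of a k-means solution with WSS values from data simulated under a single Gaussian with the same per-feature means and variances. The diagonal-covariance null, the helper names `kmeans_wss` and `no_cluster_test`, and the simulated data are all hypothetical choices made for illustration.

```python
import math
import random


def sq_dist(p, q):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(p, q))


def kmeans_wss(points, k, rng, n_starts=5, max_iter=100):
    """Lowest within-cluster sum of squares over several k-means++ starts."""
    best = math.inf
    for _ in range(n_starts):
        # k-means++ seeding: later centers are drawn in proportion to squared distance.
        centers = [list(rng.choice(points))]
        while len(centers) < k:
            d2 = [min(sq_dist(p, c) for c in centers) for p in points]
            centers.append(list(rng.choices(points, weights=d2)[0]))
        labels = None
        for _ in range(max_iter):
            # Assignment step: each point joins its nearest center.
            new_labels = [min(range(k), key=lambda j: sq_dist(p, centers[j])) for p in points]
            if new_labels == labels:
                break  # assignments stopped changing
            labels = new_labels
            # Update step: each center moves to the mean of its members.
            for j in range(k):
                members = [p for p, lab in zip(points, labels) if lab == j]
                if members:
                    centers[j] = [sum(col) / len(members) for col in zip(*members)]
        wss = sum(sq_dist(p, centers[lab]) for p, lab in zip(points, labels))
        best = min(best, wss)
    return best


def no_cluster_test(points, k, n_null=50, seed=0):
    """Monte Carlo p-value for H0: one Gaussian with diagonal covariance, no clusters."""
    rng = random.Random(seed)
    n = len(points)
    observed = kmeans_wss(points, k, rng)
    cols = list(zip(*points))
    means = [sum(c) / n for c in cols]
    sds = [math.sqrt(sum((x - mu) ** 2 for x in c) / (n - 1)) for c, mu in zip(cols, means)]
    at_least_as_tight = 0
    for _ in range(n_null):
        null_data = [tuple(rng.gauss(mu, sd) for mu, sd in zip(means, sds)) for _ in range(n)]
        # A null data set counts against the clusters if k-means fits it at least as tightly.
        if kmeans_wss(null_data, k, rng) <= observed:
            at_least_as_tight += 1
    return observed, (at_least_as_tight + 1) / (n_null + 1)


if __name__ == "__main__":
    rng = random.Random(1)
    # Two well-separated simulated groups, so the no-cluster null should be rejected.
    pts = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(40)]
    pts += [(rng.gauss(10, 1), rng.gauss(10, 1)) for _ in range(40)]
    wss, p = no_cluster_test(pts, k=2)
    print(f"observed WSS = {wss:.1f}, Monte Carlo p = {p:.3f}")
```

A small p-value here says only that the data are more tightly clustered than this particular null would produce. The choice of null matters: because this version ignores correlations between features, a single but elongated (correlated) Gaussian can look "clustered" against it, which is why more careful tests typically model the full covariance.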

Although the authors interpreted it as evidence of nonreplication, the lack of concordance across studies is perhaps unsurprising. The NMPTDT study did not include a task measuring neural substrates of response inhibition, so the features used for clustering differed across studies. Thus, even if the samples and analytic pathways had been identical, the resulting clusters would likely have differed unless the response inhibition feature carried no meaningful weight in the biotypes originally identified by Stevens et al. The tasks used to assess the other dimensions (threat reactivity and reward sensitivity) also differed, although the authors proposed that the tasks engage similar brain processes, and the observed task-based neural activation suggested that this was indeed the case. Notably, the two studies also differed considerably in their inclusion and exclusion criteria, including with respect to pretrauma psychopathology. The AURORA study had relatively broad inclusion criteria and admitted individuals with a prior diagnosis of PTSD. In contrast, the NMPTDT study excluded individuals with a prior diagnosis of PTSD, as well as those with other commonly comorbid conditions, such as substance abuse, suicidality, and traumatic brain injury. These differences suggest that the two study populations may have differed in neurobiologically meaningful ways.

Identifying neural biotypes that inform our understanding of clinical trajectories is an important step toward truly personalized medicine. Success on this front will depend on our ability to establish reliable and generalizable biotypes that characterize clinically meaningful outcomes, whether naturalistic trajectories or treatment response. Achieving this goal poses several challenges.

Robust person-centered prediction models will only be possible with large data sets. Building them will therefore require investigators to embrace uniform data collection parameters so that future projects can combine participant data across studies. Psychiatric genomics offers one example of such stringent standardization: it could be argued that replicable findings were achieved only once investigators standardized genotyping and statistical methods and shared data in ways that enabled consistent analyses (including meta-analyses) (4). In contrast, functional imaging paradigms are often implemented differently across research groups. Paradigms need not be perfectly identical, since measures of the same construct should provide conceptual replication of robust models, but implementing similar assessments for commonly measured constructs will reduce method-related noise when data sets are combined. Continued efforts are also needed to develop consensus on gold-standard assessments. One attempt to recommend validated paradigms is the work of the NIMH Research Domain Criteria (RDoC) construct workgroups (5), which were tasked with identifying key paradigms for RDoC domains. Their results highlight the challenges of arriving at such recommendations (e.g., scant psychometric data are often available to inform instrument selection and prioritization), yet the work conducted by Stevens et al. and Ben-Zion et al. underscores the importance of doing so to guide studies that seek to share data for larger analyses. As these two papers demonstrate, the importance of collaboration and communication between research groups for replication cannot be overstated, including sharing collected data via publicly available platforms (e.g., the NIMH Data Archive), along with the code and pipelines used for analyses.

Clustering approaches are sensitive to the features used to create the clusters. Moreover, there is not necessarily a single “best” cluster solution, but rather solutions that are valid for specific purposes given a set of measured features (6). This raises the question: even if optimally standardized paradigms were implemented to measure key features, which features are most important to include? By this we mean not just constructs (e.g., whether clusters should be built from neural responses to reward, threat, inhibition, or other processes) but also assessment modality (e.g., neuroimaging, genetics, or physiology). It is important to purposefully select features that both align closely with the purpose of biotyping and show similar validity across members of the population of interest (7). Given the cost and complexity of functional imaging, it will be critical to determine whether it is indispensable or whether other assessment approaches can optimize the reliability and predictive ability of cluster-based biotyping, so that these features can be measured consistently in new projects.
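The sensitivity of clustering to its input features, echoing the observation that the NMPTDT study lacked a response inhibition task, can be illustrated with a toy Python sketch. Simulated "participants" are generated so that two groups differ only on a hypothetical inhibition score; clusterings with and without that feature are then compared against the simulated groups using the adjusted Rand index (ARI), where 1 means identical partitions and values near 0 mean chance-level agreement. All data, feature names, and helper functions here are hypothetical.

```python
import random
from collections import Counter
from math import comb


def sq_dist(p, q):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(p, q))


def kmeans(points, k, rng, n_starts=5, max_iter=100):
    """Plain k-means with k-means++ seeding; returns the labels of the lowest-WSS run."""
    best_labels, best_wss = None, float("inf")
    for _ in range(n_starts):
        centers = [list(rng.choice(points))]
        while len(centers) < k:
            d2 = [min(sq_dist(p, c) for c in centers) for p in points]
            centers.append(list(rng.choices(points, weights=d2)[0]))
        labels = None
        for _ in range(max_iter):
            new_labels = [min(range(k), key=lambda j: sq_dist(p, centers[j])) for p in points]
            if new_labels == labels:
                break  # converged
            labels = new_labels
            for j in range(k):
                members = [p for p, lab in zip(points, labels) if lab == j]
                if members:
                    centers[j] = [sum(col) / len(members) for col in zip(*members)]
        wss = sum(sq_dist(p, centers[lab]) for p, lab in zip(points, labels))
        if wss < best_wss:
            best_labels, best_wss = labels, wss
    return best_labels


def adjusted_rand_index(a, b):
    """Pair-counting agreement between two partitions, corrected for chance."""
    n_pairs = comb(len(a), 2)
    both = sum(comb(c, 2) for c in Counter(zip(a, b)).values())
    in_a = sum(comb(c, 2) for c in Counter(a).values())
    in_b = sum(comb(c, 2) for c in Counter(b).values())
    expected = in_a * in_b / n_pairs
    maximum = (in_a + in_b) / 2
    if maximum == expected:
        return 1.0
    return (both - expected) / (maximum - expected)


def simulate(rng, n_per_group=30):
    """Hypothetical data: columns are (threat, reward, inhibition); groups differ on inhibition only."""
    rows, truth = [], []
    for group, inhibition_mean in enumerate((0.0, 10.0)):
        for _ in range(n_per_group):
            rows.append((rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(inhibition_mean, 1)))
            truth.append(group)
    return rows, truth


if __name__ == "__main__":
    rng = random.Random(7)
    rows, truth = simulate(rng)
    with_inhibition = kmeans(rows, 2, rng)
    without_inhibition = kmeans([r[:2] for r in rows], 2, rng)  # inhibition column dropped
    print("ARI, all three features:", round(adjusted_rand_index(with_inhibition, truth), 2))
    print("ARI, inhibition dropped:", round(adjusted_rand_index(without_inhibition, truth), 2))
```

With all three features the simulated groups are recovered almost perfectly; once the inhibition column is dropped, k-means can only partition noise and agreement falls to roughly chance. With real data the effect would likely be partial rather than all-or-nothing, but the direction of the argument is the same.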

Finally, we should continue to critically evaluate the statistical models and assumptions underlying biotype creation, including metrics such as significance and stability, as appropriate for the chosen analytic approach (e.g., 8, 9). Ben-Zion and colleagues illustrate the value of empirically testing the observed cluster structure against the alternative of a single multidimensional distribution: just because clusters can be found does not mean they optimally characterize the data (or predict outcomes). Clustering data is both art and science (10), so clear explication of the steps taken and transparency about decision points are crucial for this type of work. In addition, these studies (and many others conducted to date in psychiatric samples) use analyses that treat clusters as discrete and separable, which has implications for interpretation and statistical power (11). Whether this assumption holds for neural data remains to be established, but it seems unlikely that biotypes of psychiatric conditions will routinely take shape as discrete, nonoverlapping, and widely separated groupings. Further work testing models that allow for less discrete cluster boundaries (e.g., fuzzy clustering) is needed.
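Fuzzy clustering relaxes the discreteness assumption by giving each participant a degree of membership in every cluster rather than a single label. Below is a minimal fuzzy c-means sketch in Python, using the standard alternating center and membership updates with fuzzifier m = 2. The toy data (two tight groups plus one point halfway between them) and the function name `fuzzy_c_means` are hypothetical, chosen so that the in-between point ends up with roughly equal membership in both clusters, something a hard k-means assignment cannot express.

```python
import random


def sq_dist(p, q):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(p, q))


def fuzzy_c_means(points, c, m=2.0, max_iter=300, tol=1e-8, seed=0):
    """Return (centers, memberships); memberships[i][j] is point i's degree in cluster j."""
    rng = random.Random(seed)
    n, dims = len(points), len(points[0])
    # Random initial memberships, each row normalized to sum to 1.
    u = []
    for _ in range(n):
        row = [rng.random() for _ in range(c)]
        total = sum(row)
        u.append([v / total for v in row])
    centers = []
    for _ in range(max_iter):
        # Center update: mean of all points weighted by membership ** m.
        centers = []
        for j in range(c):
            w = [u[i][j] ** m for i in range(n)]
            centers.append([sum(w[i] * points[i][d] for i in range(n)) / sum(w) for d in range(dims)])
        # Membership update: u_ij = 1 / sum_k (d_ij^2 / d_ik^2) ** (1 / (m - 1)).
        new_u = []
        for p in points:
            d2 = [sq_dist(p, cj) for cj in centers]
            if 0.0 in d2:
                # Point sits exactly on a center: give it full membership there.
                hits = d2.count(0.0)
                new_u.append([1.0 / hits if v == 0.0 else 0.0 for v in d2])
            else:
                new_u.append([1.0 / sum((d2[j] / d2[k]) ** (1.0 / (m - 1.0)) for k in range(c))
                              for j in range(c)])
        change = max(abs(a - b) for old, new in zip(u, new_u) for a, b in zip(old, new))
        u = new_u
        if change < tol:
            break
    return centers, u


if __name__ == "__main__":
    # Hypothetical 2-D feature profiles: two tight groups and one in-between case.
    group_a = [(0.0, 0.0), (0.5, 0.0), (-0.5, 0.0), (0.0, 0.5), (0.0, -0.5)]
    group_b = [(x + 6.0, y) for x, y in group_a]
    points = group_a + group_b + [(3.0, 0.0)]
    centers, u = fuzzy_c_means(points, c=2)
    for p, row in zip(points, u):
        print(p, [round(v, 2) for v in row])
```

Here the grouped points receive memberships close to 1 in their own cluster, while the in-between point is split about evenly instead of being forced into one group. The value m = 2 is a common default rather than a recommendation; larger values of m produce softer memberships.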

Clustering is a viable approach to dealing with patient heterogeneity. But, based on a necessarily limited set of inputs and features, can any attempt at clustering overcome the reality that brains are complex, that human experience is multimodal and varied, and that each of us is, as a result, unique?