Assertive community treatment (ACT) is a standardized, intensive, integrative, team-based approach for supporting individuals with serious mental illness. It is used nationally and internationally. ACT teams provide in situ care and rehabilitation services 24 hours a day, seven days a week, to help consumers live in their communities and make progress toward recovery goals. To meet a consumer’s needs, ACT teams include multidisciplinary professionals with expertise in areas such as mental health, substance abuse, supported employment, social services, and nursing.

Because teamwork is key in ACT (1), standards for designing ACT team structures and procedures have been extensively studied and specified (2, 3). Research indicates that greater fidelity to ACT design standards results in better consumer outcomes (4–7). Yet, variations in performance exist even among high-fidelity ACT teams, and the causes are not well understood (8): “Sometimes even well resourced teams that adhere to program principles ‘on paper’ can struggle and not function at their best” (9). Although optimal ACT team design is important, it represents only one facet of the causes of ACT performance. Research on team effectiveness suggests that in addition to team design, team processes play a vital role in ensuring good team performance. A recent review of ACT found that “agency or team culture and climate have received little attention in the ACT or the adult mental health implementation literature” (10). This suggests that processes of high-fidelity ACT teams have yet to be investigated.

The Teamwork in Assertive Community Treatment (TACT) scale measures team processes in ACT teams. The TACT scale is designed to complement tools measuring fidelity to ACT core structures, procedures, and clinical interventions (2). Existing fidelity scales focus on how teams should operate, and the TACT scale assesses how teams actually operate. We integrate organizational and mental health research to present a conceptual framework describing the mechanism through which team processes affect the translation of ACT fidelity into consumer and staff outcomes. Results of a longitudinal field study of 26 ACT teams supported the development, validation, and future use of the TACT scale. The next section reviews our theoretical framework and model.

Teamwork in ACT

Management research on teams indicates that team designs providing clearly specified tasks, clearly delineated roles, unambiguous communication, and simple direct workflows and information sharing promote high-performing teams (2, 11, 12). This research also reveals that the relationship between team design and outcomes is moderated and mediated by team processes (13). The research in this area has examined a wide array of teams in many different settings (including health care, aviation, military, university, and business settings), and the findings are robust across domains (13–15). The strong findings about team process and the observed performance variations in ACT teams suggest that further study of fidelity to ACT design standards and to ACT team processes would be useful to better understand ACT team performance. However, there is no validated instrument to evaluate ACT team processes. With this study we sought to fill this research gap.

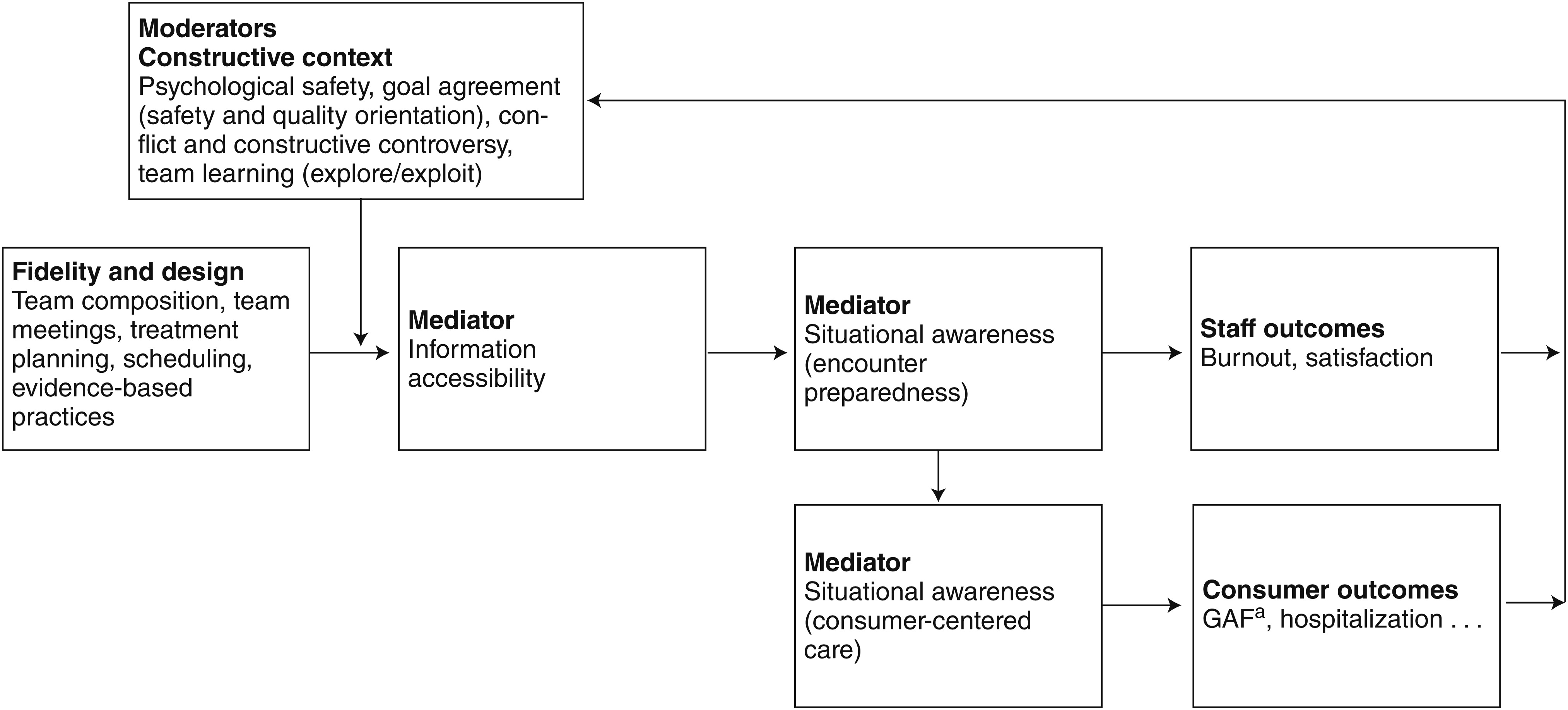

Drawing on principles common to team dynamics research, we developed a conceptual model of team processes moderating and mediating the relationship between ACT design fidelity and performance (Figure 1). Team research suggests that a constructive context promotes growth, development, and performance capabilities of team members by moderating the relationship between design (fidelity) and task performance. A constructive context is a safe environment where information and ideas are freely exchanged and task processes are refined. Such a context includes team learning, psychological safety, constructive controversy, goal agreement, and minimal conflict. It is likely that a constructive context is particularly important for teams focused on information management, such as ACT teams.

Many health care teams depend on information management, which involves the encoding, storing, and retrieving of information about a consumer’s health condition, goals, needs, and social context (16). Encoding entails activities such as developing a treatment plan in collaboration with a consumer, diagnosing conditions, and learning about the consumer’s situation through observation. Storing entails saving the encoded consumer information in formal (that is, hard-copy or electronic medical records, daily meetings, registries, and schedules) or informal (that is, information held by individual team members) information systems. Formal systems are best used for explicit, codifiable information, whereas informal, interpersonal systems are best used for implicit, tacit information (16). An example of an informal retrieval mechanism is team members sharing tacit information that could be useful during an encounter. These coordination mechanisms are designed to ensure that the right information is available to the right team member at the right time (17), thus minimizing information search time (18) and optimizing care.

Managing consumer information is a key task that ACT teams must effectively implement. ACT fidelity standards specify a structure designed to facilitate information management. The combination of adherence to ACT fidelity standards and a constructive context should improve information accessibility. Improved information accessibility should result in better encounter preparedness for team members and subsequently more consumer-centered care.

The rest of this section discusses the moderating effect of constructive context on the relationship between fidelity and information accessibility, followed by a discussion of the mediating processes of information accessibility and encounter preparedness. Finally, we propose that greater encounter preparedness results in better team member outcomes and that consumer-centered care results in better consumer outcomes.

Constructive context: moderating team processes

The moderating team processes include psychological safety, team learning, goal agreement, conflict, and constructive controversy. Research on teams across a wide variety of settings shows that team members are often unwilling to engage in interpersonally risky behavior, such as admitting errors, asking for assistance, or discussing a difficult problem, for fear of negative consequences (19). Yet, these very behaviors promote learning, problem solving, and information sharing, all of which are critical for successful care teams, such as ACT teams. Team research describes psychological safety as an environment where risks can be taken. Specifically, individuals feel safe admitting mistakes, asking for assistance, exposing others’ mistakes, and making controversial suggestions without the fear of negative consequences to their self-image, status, or career (19, 20). Psychological safety supports a collaborative work environment where individuals freely exchange information and experience greater team learning, job involvement, and work effort and smoother problem solving (19).

Team learning involves quality improvement activities through which teams obtain and process data facilitating adaptation and improvement (19). Learning in ACT teams is critical because members must master and customize standardized team design aspects of ACT to their specific setting or consumer mix. Organizational researchers Levitt and March (21) identified two types of learning: exploration and exploitation (22). Exploration brings new knowledge and skills to a team (such as improvements in ACT team design or clinical skills in illness management and recovery or supported employment [23]). Exploitation refers to the implementation and evaluation of new knowledge and the team’s continuous improvement of existing processes. Effective teams balance exploration and exploitation. Too much exploitation narrows attention to improving existing processes at the expense of acquiring new knowledge, whereas too much exploration of new ways of accomplishing tasks can result in their ineffective implementation.

Team research defines a shared perception of a team’s priorities and objectives as goal agreement (24). Team research focuses on three major goals (productivity, quality, and safety) because they involve significant trade-offs. Taking time or resources to ensure quality and safety may result in a decrease in productivity; alternatively, pressures to increase productivity may result in taking shortcuts harmful to quality and safety. Research suggests, however, that maintaining safety may be compatible with productivity and quality goals (24). The prioritization of certain goals over others gives rise to team-level norms that influence the way designs specified in fidelity standards are actually implemented in practice.

A key finding in team research is that conflict and conflict resolution are both inevitable and necessary in work teams. Conflict includes perceived incompatibilities, opposing interests, or discrepant views among team members (25). Conflict can arise from opposing goals as well as different beliefs about attaining agreed-upon goals (25). For example, ACT team members may agree upon team goals but may have divergent perspectives on how to achieve them. Task-related conflict can be beneficial, but only if effective methods to resolve conflict are in place. Team researchers define constructive controversy as the critical and open discussion of divergent perspectives (26). Constructive controversy encourages individuals to express their opinions directly and explore opposing positions open-mindedly when resolving conflicts, such as disagreement about the best way to implement a design for information sharing. Therefore, constructive controversy improves decision quality and leads to better organizational outcomes.

Mediating team processes

Because we focus on the critical function of information management to ACT team effectiveness, we further draw on team research to identify possible mediating processes between information management and ACT team outcomes. Specifically, we focus on relationships between information accessibility and situational awareness. In teams such as ACT teams where information management is a major task, high accessibility to information means that task-relevant information can be obtained efficiently, with minimal waste (11, 27).

Team research also suggests that situational awareness of one’s surroundings is important in patient care (28). Situational awareness has two components: encounter preparedness and consumer-centered care. Encounter preparedness entails being prepared for consumer encounters by having knowledge of the consumer’s condition and rehabilitation goals and by knowing the encounter schedule and related interventions. Consumer-centered care entails observing elements of an encounter that require adapting care to a consumer’s diagnosis, needs, and current situation. Encounter preparedness is a necessary condition for consumer-centered care, for it enables ACT team members to realize when an encounter differs from expectations and to adapt to the emergent situation to manage it effectively. We anticipate that encounter preparedness will reduce team member stress because it clarifies the situation and expectations; we anticipate that it will improve consumer-centered care by making deviations apparent and resulting in more appropriate adjustments. Consumer-centered care will ultimately improve consumer outcomes.

In sum, ACT team design and fidelity scales provide a strong foundation for guiding and evaluating ACT team success. However, measuring and better understanding moderating and mediating team processes ought to enable ACT teams to capitalize fully on these structures and perform even more effectively.

Methods

We developed and validated the TACT scale with the following four-stage research design (29). In the first stage, an iterative round of observations and interviews was conducted with a convenience sample of two ACT teams to identify key team processes in ACT. Research team members observed the two ACT teams in the field, attended team training and ACT conferences, and interviewed 23 team members, three leaders, and two administrators. In the second stage, we selected and defined our constructs by drawing on themes repeatedly emerging in our field notes and consulting the team and ACT literature. This resulted in the development of an initial set of 47 items to measure the moderating and mediating constructs in our conceptual model. Items were adapted from existing organizational research and customized for the ACT and mental health context. [Citations for item sources are listed online in a data supplement to this article.] Cognitive interviews were used to review items with three ACT team leaders and four ACT team members (30), and we revised items based on their comments. In the third stage, the survey was pretested with members from one rural and one urban ACT team. Exit interviews confirmed that participants had no difficulty understanding questions or completing the survey. A longitudinal study of ACT teams was conducted in the fourth stage to validate TACT scale constructs and verify their temporal stability. Teams were surveyed over three waves spaced at six-month intervals from May 2008 to November 2009.

The population studied was ACT teams, with a convenience sample consisting of 26 teams in Minnesota. We invited all 27 ACT teams in Minnesota to participate in our study, but one team declined the invitation. ACT teams in the sample were formed in accordance with the Minnesota ACT standards (31, 32), which are a close replication of the Dartmouth Assertive Community Treatment Fidelity Scale (33). These teams served approximately 1,600 consumers and were diverse in terms of geographic area served (rural or urban) and consumer population served (for example, general, homeless, personality disorders, and Asian). ACT teams in the sample ranged in size from eight to 17 team members, had a mean size of 12, and exhibited high fidelity to the ACT model. We administered the survey on site for three survey waves to individuals who constituted the 26 teams (N=318, N=309, and N=304 for waves 1, 2, and 3, respectively). All respondents gave their informed consent before completing the survey. Breakfast or lunch was provided at the time of the survey. The study was approved by the Human Research Protection Program of the University of Minnesota, Twin Cities.

Questionnaires were completed by 287 respondents in wave 1, 268 in wave 2, and 275 in wave 3, representing 90%, 87%, and 91% response rates, respectively. Among the second- and third-wave respondents, 222 (83%) and 241 (88%), respectively, were recurrent respondents. Sixty-five first-wave respondents dropped out and 46 new respondents entered in the second wave; for the third wave, 27 second-wave respondents dropped out and 34 new respondents entered. Seventy-one percent of the unique respondents, counting each person once across waves (N=261 out of 367), were female. The racial composition of the sample was 91% Caucasian (N=328 out of 359), 5% Asian (N=18), 2% African American (N=8), and 1% others (N=5).
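The response and retention figures above follow from simple arithmetic on the reported counts. The Python sketch below recomputes them; it uses only numbers stated in the text, and the variable names are ours. From the counts given, the wave 3 response rate evaluates to about 90.5%, which the text reports as 91%.

```python
# Counts reported in the text: staff surveyed and questionnaires completed per wave.
surveyed = {1: 318, 2: 309, 3: 304}
completed = {1: 287, 2: 268, 3: 275}
# Wave-to-wave turnover reported in the text: (dropped out, newly entered).
turnover = {2: (65, 46), 3: (27, 34)}


def pct(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


response_rates = {w: pct(completed[w], surveyed[w]) for w in surveyed}

recurrent = {}
for wave, (dropped, entered) in turnover.items():
    recurrent[wave] = completed[wave - 1] - dropped
    # Recurrent plus new respondents should reproduce the wave's completed count.
    assert recurrent[wave] + entered == completed[wave]

recurrent_share = {w: pct(recurrent[w], completed[w]) for w in recurrent}

# Each person is counted once: wave 1 respondents plus later entrants.
unique_respondents = completed[1] + sum(entered for _, entered in turnover.values())

print(response_rates)
print(recurrent, recurrent_share)
print(unique_respondents)
```

The unique-respondent total is the denominator used for the gender breakdown.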

We developed nine constructs. [Details about the constructs, including their definition and operationalization, are provided in Appendix A, available online as a data supplement to this article.] Data were also collected on staff outcomes, including burnout and satisfaction, to assess predictive validity. Burnout was measured with a subset of the Maslach Burnout Inventory (34). A subset of burnout items was used to minimize respondent burden and increase response rates. Items selected had the highest correlations with their primary factor and lowest correlations with other factors. The items for emotional exhaustion were as follows, all prefaced with “How often did you feel”: “emotionally drained from your work on your ACT team,” “used up at the end of the workday from your work on your ACT team,” and “you were working too hard on your ACT team” (Cronbach’s α=.89). The items for depersonalization were “you were treating some consumers as if they were impersonal objects,” “you have become more callous toward people since you took this job,” and “you don’t really care what happens to some consumers” (Cronbach’s α=.57). The items for ineffectiveness were “you dealt very effectively with the problems of your team’s consumers,” “you were positively influencing other people’s lives through your work,” and “you had accomplished many worthwhile things in your job on your ACT team” (Cronbach’s α=.83) (34). Satisfaction was measured with one question that asked team members “Overall, how satisfied are you with your job on your ACT team?”

Data analysis consisted of six steps. First, an exploratory factor analysis (EFA) was conducted to assess fit between scale items and theoretical constructs. The EFA was guided by the theoretical model and was conducted with the first-wave data. We used principal factor analysis followed by a promax rotation (35). [Appendix B, available online as a data supplement to this article, details the factor loadings at wave 1.] We selected the number of factors to retain by examining eigenvalues and scree plots (35). The assignment of items to constructs was done by carefully examining the item-to-factor loadings and the correlations between factors to identify “the most easily interpretable, psychologically meaningful, and replicable” (35) loadings. The EFA was also run with the second- and third-wave data as a preliminary step to the confirmatory factor analysis (CFA). No change in items or in assignment of items to constructs was made with the EFA results from the second- and third-wave data. Second, Cronbach’s alpha coefficients were calculated to examine the internal consistency of items for each construct. Third, CFA was performed to test fit of the overall measurement model and its stability across survey waves. Fourth, within-team agreement was assessed with rwg (36, 37), the intraclass correlation coefficient (ICC), and one-way analysis of variance (ANOVA) to justify aggregation of individual-level measures to the team level. Fifth, convergent validity and discriminant validity were tested by examining correlations between all constructs. Sixth, predictive validity was determined by examining the relationship between the TACT scale and staff outcomes.

Because errors are likely to be correlated as a result of repeated observations and the nesting of individuals in teams, we applied appropriate strategies in statistical analyses to overcome this violation of the independent-observation assumption. For factor analyses, this was managed by subtracting the team mean for each wave from individual observations. This method removed correlated errors resulting from differences in team by wave. For regression analyses assessing predictive validity, we applied a multilevel modeling strategy by including a fixed effect for wave and a random effect for both team and individual.
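The centering step used for the factor analyses can be illustrated with a short Python sketch. It subtracts each team's mean for a given wave from every member's item scores, so that only within-team, within-wave variation remains. The row layout, field names, and toy scores are hypothetical; the study's actual data handling is not described at this level of detail.

```python
from collections import defaultdict
from statistics import mean


def center_by_team_wave(rows, item_keys):
    """Subtract the team-by-wave mean of each item from every individual's score."""
    groups = defaultdict(list)
    for row in rows:
        groups[(row["team"], row["wave"])].append(row)

    centered = []
    for members in groups.values():
        # One mean per item within this team and wave.
        means = {k: mean(m[k] for m in members) for k in item_keys}
        for m in members:
            new_row = dict(m)
            for k in item_keys:
                new_row[k] = m[k] - means[k]
            centered.append(new_row)
    return centered


# Hypothetical toy data: two teams, one wave, two items.
rows = [
    {"team": "A", "wave": 1, "item1": 4, "item2": 2},
    {"team": "A", "wave": 1, "item1": 2, "item2": 4},
    {"team": "B", "wave": 1, "item1": 5, "item2": 5},
    {"team": "B", "wave": 1, "item1": 3, "item2": 1},
]
centered = center_by_team_wave(rows, ["item1", "item2"])
for row in centered:
    print(row)
```

After centering, every item has a mean of zero within each team and wave, so differences between teams or waves cannot drive the item correlations that the factor analysis decomposes.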

Results

EFA

The EFA conducted with the wave 1 data revealed nine factors that collectively accounted for 60% of the variance in the items (35). The Kaiser-Meyer-Olkin measure of overall sampling adequacy was .82, which supports proceeding with factor analysis (38). Bartlett’s test of sphericity rejected the hypothesis that there are no common factors, again supporting factor analysis (38). Forty-three items loaded on the nine factors, which measure the six target constructs and their subconstructs. Four initial items were excluded from the final scale because they loaded onto single-item factors with smaller eigenvalues.

The 11 items designed to measure team learning loaded onto two factors; one described exploration (five items), and the other described exploitation (six items). Four items were used to measure conflict, and two items were used to measure constructive controversy. Of the six items designed to measure information accessibility, four items loaded onto one factor. Among the four items, “information notes current” had a factor loading smaller than the generally accepted minimum loading (.30). We retained this item because of its practical importance. The wording used in this item pointed to an important information source for ACT teams—the encounter notes—which were mentioned by ACT team leaders but not captured by other items included in the subscale. Further, including this item did not deteriorate the subscale’s internal consistency. Encounter preparedness was measured with seven items that loaded on one factor describing awareness of information related to consumer encounters, and consumer-centered care was measured with four items loading together on a factor characterizing team members’ awareness of consumer-specific conditions for treatment plans and interventions. Goal agreement was measured with three items that loaded together, with the safety and quality goals loading positively and the productivity goal loading negatively. To clarify interpretation, we reverse-coded productivity goal and created a compound subscale for goal agreement, with productivity as a low value and safety and quality orientation as a high value. Finally, eight items loaded onto their intended construct, psychological safety.
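The reverse-coding of the productivity item can be sketched as follows. This is a minimal illustration under two assumptions that the text does not state: that items use a 1-to-5 response scale, and that the compound goal agreement score is the simple mean of the safety, quality, and reverse-coded productivity items.

```python
from statistics import mean

# Assumed response range; the actual TACT response scale is not given in the text.
SCALE_MIN, SCALE_MAX = 1, 5


def reverse_code(score, low=SCALE_MIN, high=SCALE_MAX):
    """Map the lowest response to the highest and vice versa."""
    return low + high - score


def goal_agreement(safety, quality, productivity):
    """Compound score: high values indicate a safety and quality orientation,
    low values indicate a productivity orientation (assumed simple mean)."""
    return mean([safety, quality, reverse_code(productivity)])


score = goal_agreement(safety=5, quality=4, productivity=2)
print(score)
```

With this coding, a respondent who rates productivity pressure as low contributes a high value to the subscale, which keeps all three items pointing in the same direction.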

Internal consistency

Using Cronbach’s alpha, we tested the internal consistency of subscale items. Table 1 presents the alpha values for the nine subscales in each wave. All alpha values were above acceptable levels (>.70) except for the subscale information accessibility in waves 1 and 2, which were still within minimally acceptable levels (.65–.70). We further tested the internal consistency of each subscale with item-to-total correlations. The average item-total correlations for the nine subscales were all above the appropriate value of .40 (39), ranging from .46 to .75. These results indicate that the nine TACT subscales are internally consistent, each measuring a unique aspect of team processes.
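For readers who want to reproduce these statistics on their own data, the following Python sketch computes Cronbach's alpha and item-total correlations for one subscale. It is an illustration rather than the study's code: the toy scores are invented, and the item-total correlation is computed in its corrected form (each item against the sum of the remaining items), which is an assumption because the text does not say which variant was used.

```python
from statistics import mean, variance


def cronbach_alpha(items):
    """items: one list of scores per item, each with one entry per respondent."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def pearson(x, y):
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def corrected_item_total(items):
    """Correlate each item with the sum of the other items in the subscale."""
    correlations = []
    for i, col in enumerate(items):
        rest = [sum(v for j, v in enumerate(resp) if j != i) for resp in zip(*items)]
        correlations.append(pearson(col, rest))
    return correlations


# Hypothetical subscale: three items answered by five respondents.
items = [
    [1, 2, 3, 4, 5],
    [2, 2, 3, 5, 5],
    [1, 3, 3, 4, 4],
]
alpha = cronbach_alpha(items)
item_total = corrected_item_total(items)
print(round(alpha, 3), [round(r, 3) for r in item_total])
```

The resulting values would be compared with the .70 threshold for alpha and the .40 threshold for item-total correlations cited above.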

CFA and temporal stability

Using CFAs with each wave, we examined the fit of the nine-factor structure. Because the observations from the second and third waves were not used in the EFA, their CFA analyses were purely confirmatory. The nine factors were exploitation, exploration, conflict, constructive controversy, psychological safety, goal agreement, information accessibility, encounter preparedness, and consumer-centered care. We compared the nine-factor structure with a six-factor model that grouped exploration and exploitation items into one team learning construct, the conflict and constructive controversy items into one conflict construct, and the encounter preparedness and consumer-centered care items into one situational awareness construct.

Using the SAS TCALIS procedure (40), we estimated the CFA models. We assigned no cross-loadings or covariance between error terms but allowed covariance among factors. Following Thompson’s (41), Yung’s (42), and Kenny’s (43) recommendations, we evaluated model fit with four commonly used indices: standardized root mean square residual (SRMSR), root mean square error of approximation (RMSEA), adjusted goodness-of-fit index (AGFI), and Bentler’s comparative fit index (CFI).

Table 2 summarizes the fit indices of the two models by wave. Compared with the alternative model, the nine-factor model fits the data better in all three waves. Both SRMSR (.06, .06, and .07 for waves 1, 2, and 3, respectively) and RMSEA (.05, .05, and .06 for waves 1, 2, and 3, respectively) results of the nine-factor model indicated reasonable fit. In contrast, the SRMSR and RMSEA values for the six-factor model were larger than the recommended cutoff value of .06 (41). Bentler’s CFIs (.85, .85, and .83 at waves 1, 2, and 3, respectively) for the nine-factor model indicated adequate fit and were better than the indices for the six-factor model (<.80). However, both models had poor AGFI (<.80). Because the two models are nested, we compared model fit with chi square difference tests. In all three waves, the differences in chi squares suggested that the nine-factor model was significantly better than the six-factor model.
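A chi square difference test for nested models subtracts the chi square and degrees of freedom of the less restricted model from those of the more restricted model and refers the difference to a chi square distribution. The Python sketch below illustrates the calculation with the standard library only. The chi square values are invented for illustration because the text does not report them. The degrees of freedom are our own derivation, assuming 43 items, no cross-loadings, no error covariances, and freely correlated factors: 946 observed moments minus 122 parameters (nine factors) or 101 parameters (six factors).

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi square variate, using the power series for
    the regularized lower incomplete gamma function P(df/2, x/2).
    Very small p-values are limited by floating-point precision."""
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    n = 0
    while abs(term) > 1e-15 * abs(total) and n < 10000:
        n += 1
        term *= z / (a + n)
        total += term
    log_p = a * math.log(z) - z - math.lgamma(a) + math.log(total)
    return max(0.0, 1.0 - math.exp(log_p))


def chi2_difference_test(chi2_restricted, df_restricted, chi2_full, df_full):
    """Return (chi square difference, df difference, p-value) for nested models."""
    d_chi2 = chi2_restricted - chi2_full
    d_df = df_restricted - df_full
    return d_chi2, d_df, chi2_sf(d_chi2, d_df)


# Hypothetical chi square values; df derived under the assumptions stated above.
d_chi2, d_df, p_value = chi2_difference_test(
    chi2_restricted=2100.0, df_restricted=845,  # six-factor model
    chi2_full=1500.0, df_full=824,              # nine-factor model
)
print(d_chi2, d_df, p_value)
```

A small p-value indicates that merging factors significantly worsens fit, which is the pattern the text reports in all three waves.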

We examined temporal stability by conducting a multigroup confirmatory factor analysis (42) that imposed the same factor structure and constrained the loadings to be the same across waves. The overall model fit was acceptable (SRMSR=.07, RMSEA=.05, AGFI=.78, Bentler’s CFI=.84), suggesting that factor loadings were stable across waves. We checked the robustness of the measurement model for our clustered sample. We estimated the models with Stata 12’s SEM procedure allowing clustering within teams, which produced similar results (SRMSR=.07).

Aggregation analysis

The nine subscales were designed to measure team-level constructs. We assessed the within-team agreement on each subscale in each wave by rwg (36), ICC(1), and one-way ANOVA (44). Table 1 displays the results of these tests. Twenty-three of the 27 average values for rwg were above the 95% critical value of .62 for a group with 11 respondents and five moderately correlated (.4) items (36). The exceptions were information accessibility in waves 1 and 3 and exploitation in waves 2 and 3. The rwg values for information accessibility in waves 1 and 3 were both close to the cutoff value. The results from the one-way ANOVA show that 22 out of the 27 F tests had significant within-group agreement at α=.05. Similarly, 23 ICC(1) scores are above the recommended .05 cutoff value, and 14 are above the .12 median value observed in other studies (44, 45). It is noteworthy that the exploration subscale had particularly low ICC(1) scores not from lack of within-team agreement but because all ACT teams were subject to the standard training provided by the state’s ACT training center when we collected the data. The subscale for exploration reflected team members’ participation in the training program, which resulted in low cross-team variation and subsequently low ICC(1) scores. Overall, the aggregation analysis indicated sufficient within-team agreement on the subscales to justify aggregating individual-level measures to the team level by averaging team members’ scores on each subscale.
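The agreement statistics used here can be sketched in Python as follows. The multi-item rwg index compares the mean observed item variance within a team with the variance expected if members answered at random on a uniform distribution, and ICC(1) is derived from the one-way ANOVA mean squares. The sketch assumes a five-option response scale and uses the simple average team size in the ICC(1) formula; both are assumptions on our part, and the toy data are invented.

```python
from statistics import mean, variance


def rwg_j(ratings, n_options):
    """Multi-item within-group agreement for one team.
    ratings: one list of item scores per team member (same items, same order)."""
    n_items = len(ratings[0])
    item_vars = [variance([member[j] for member in ratings]) for j in range(n_items)]
    # Ratio of observed variance to the variance of a uniform (random) response.
    ratio = mean(item_vars) / ((n_options ** 2 - 1) / 12.0)
    if ratio >= 1:
        return 0.0  # conventionally truncated when agreement is below chance
    agreement = n_items * (1 - ratio)
    return agreement / (agreement + ratio)


def icc1(groups):
    """ICC(1) and the ANOVA F statistic from a one-way layout.
    groups: one list of individual subscale scores per team."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    ms_between = ss_between / (len(groups) - 1)
    ms_within = ss_within / (n_total - len(groups))
    k = n_total / len(groups)  # average team size (assumed adequate here)
    icc = (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)
    return icc, ms_between / ms_within


# Hypothetical team of three members rating two items on an assumed 1-5 scale.
team_rwg = rwg_j([[3, 4], [4, 4], [5, 4]], n_options=5)
# Hypothetical subscale scores for three teams of three members each.
icc_value, f_stat = icc1([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(round(team_rwg, 3), round(icc_value, 3), f_stat)
```

In practice, rwg would be computed per team and averaged across teams for each subscale and wave, and the averages compared with the critical value cited above.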

Convergent and discriminant validity

We tested convergent and discriminant validity by examining correlations among the nine TACT subscales. Significant correlations between theoretically related subscales indicate convergent validity, and absence of correlations between unrelated subscales indicates discriminant validity. We theorized that constructive team contexts lead to information accessibility and situational awareness. With this conceptual framework, we expected stronger correlations between subscales within each higher-order construct, such as constructive team contexts (psychological safety, goal agreement, conflict, constructive controversy, and team learning), and the mediating processes (information accessibility and situational awareness). Because of their causal orders, we expected that the correlations between constructive team contexts and information accessibility and between information accessibility and situational awareness would be stronger than the correlation between constructive team contexts and situational awareness.

Table 3 presents the descriptive statistics of and correlations among the TACT subscales. Subscales belonging to the same higher-order constructs were more strongly correlated with one another than with other subscales. The moderating processes of psychological safety, goal agreement, and constructive controversy were highly correlated (.53<r<.74, p<.001). Conflict was highly (negatively) correlated with psychological safety and constructive controversy (r=–.52 and –.69 respectively, p<.001), whereas the correlation with goal agreement was significant but more modest (r=–.26, p<.05), and correlations with the learning measures were not statistically significant. In fact, the learning measures, although highly correlated with each other (r=.45, p<.001), exhibited modest correlations with the other moderators. Specifically, the exploitation subscale was significantly correlated with constructive controversy, psychological safety, and goal agreement (.30<r<.38, p<.01), but the exploration subscale was not correlated with other moderators. We address the reasons for the latter result in the Discussion.

For the most part, correlations among the moderating processes were stronger than those between the moderating and mediating processes. In terms of our mediating variables—information accessibility and situational awareness—correlations were high and significant (.49<r<.63, p<.001). Moreover, for each mediating process variable, all correlations with the moderator variables were weaker than those with other mediators. These results suggest that the TACT subscales measure related but distinct aspects of teamwork.

Predictive validity

We tested predictive validity of the TACT scale by examining the relationships predicted by our model between encounter preparedness and ACT staff outcomes. We regressed staff outcome measures on lagged encounter preparedness (for example, staff outcomes in wave 2 were regressed on team-level encounter preparedness in wave 1). The model included a fixed effect for wave and a random effect for team and individual. We found that members of the teams with higher lagged encounter preparedness (measured at the team level) were less likely to report burnout for emotional exhaustion (β=–.39, t=–2.37, N=540, p<.05) or depersonalization (β=–.13, t=–1.95, N=540, p<.10), although the latter finding was marginal. We did not find evidence that encounter preparedness reduces ineffectiveness burnout. Higher lagged encounter preparedness was also associated with greater team member satisfaction (β=.41, t=3.47, N=539, p<.01).
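The lagged, team-level predictor used in these regressions can be constructed as in the Python sketch below. It aggregates individual encounter preparedness scores to team means for each wave and then attaches the previous wave's team mean to each individual outcome record. The field names and toy data are hypothetical, and the sketch stops short of fitting the multilevel model, which would require a mixed-effects modeling package.

```python
from collections import defaultdict
from statistics import mean


def team_wave_means(rows, key):
    """Average an individual-level score within each (team, wave) cell."""
    scores = defaultdict(list)
    for r in rows:
        scores[(r["team"], r["wave"])].append(r[key])
    return {cell: mean(values) for cell, values in scores.items()}


def lagged_design(rows, predictor, outcome):
    """Pair each individual's outcome at wave t with the team mean of the
    predictor at wave t-1; records without a prior wave are dropped."""
    team_means = team_wave_means(rows, predictor)
    design = []
    for r in rows:
        prior = team_means.get((r["team"], r["wave"] - 1))
        if prior is not None:
            design.append({
                "person": r["person"],
                "team": r["team"],
                "wave": r["wave"],
                "lagged_team_" + predictor: prior,
                outcome: r[outcome],
            })
    return design


# Hypothetical records: "prep" is encounter preparedness, "sat" is satisfaction.
rows = [
    {"person": "p1", "team": "A", "wave": 1, "prep": 4, "sat": 3},
    {"person": "p2", "team": "A", "wave": 1, "prep": 2, "sat": 4},
    {"person": "p1", "team": "A", "wave": 2, "prep": 5, "sat": 5},
    {"person": "p3", "team": "A", "wave": 2, "prep": 3, "sat": 2},
]
design = lagged_design(rows, predictor="prep", outcome="sat")
for record in design:
    print(record)
```

Note that a new team member in wave 2 (here `p3`) still receives the team's wave 1 mean, because the predictor is measured at the team level rather than the individual level.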

Discussion

With the theoretical model and measurement instrument presented here, we have incorporated research from organizational behavior to examine team processes in ACT. This study extends work on measuring ACT fidelity, such as the TMACT (2), with an instrument to measure ACT team processes, the TACT. Although the team design aspects of ACT are fully developed, unexplained performance variations occur even among teams faithfully adhering to ACT design standards. Our theoretical model and measurement instrument include team processes as both mediators and moderators to explain implementation issues facilitating or inhibiting the link between fidelity to ACT design standards and consumer and staff outcomes.

The results indicate that the TACT instrument has generally good measurement properties. Specifically, the scales within TACT have high face validity, reliability (internal consistency and within-team agreement), convergent validity, discriminant validity, temporal stability, and predictive validity for team member outcomes. The instrument can be used to complement tools that focus on team design, such as the Dartmouth Assertive Community Treatment Scale (3) and the TMACT (2). When used in conjunction with these tools, researchers can gain a better understanding of differences across teams in the relationship between fidelity and performance. Moreover, the instrument can be used by teams and state health authorities (46) to improve teamwork by measuring and providing feedback to teams on their processes. ACT team leaders can use TACT as a coaching tool to improve a team’s constructive context and information-sharing capabilities (47, 48). Finally, whereas TACT was specifically designed for ACT to maximize its usefulness for ACT teams, the core principles of the model can generalize to other health care teams. The model emphasizes the importance of both team design and processes; thus the instrument could be adapted to study other types of care teams by making appropriate item adjustments to reflect the specific context.

This study could be extended by relating team processes to consumer outcomes. Studies including consumers served by individual ACT teams would be particularly valuable because such studies could explore whether the effect of ACT team processes varies as a function of severity of a consumer’s symptoms. Are strong team processes more important for consumers with greater needs? The study could also be extended by comparing the scale on consumer-centered care with the independently developed person-centered planning and practices subscale on the TMACT instrument (2) to evaluate the subscales’ similarity and relationship to consumer outcomes.

The instrument could be improved by refining its measures. The goal agreement scale, for example, focused on dimensions identified in organizational research as important trade-offs that influence team functioning. It could be extended to include measures more specific to the ACT context, such as a recovery and rehabilitative treatment focus versus service delivery. It could also be refined to examine more closely the trade-offs ACT teams make when held accountable for multiple goals. Efforts to improve the measurement of information accessibility would also be useful because its measurement properties were not as strong as those of other constructs and because it has a central role in the theoretical model.

The research could be extended by examining the relative contributions of fidelity (design), a constructive context, and implementation of evidence-based practices such as supported employment or motivational interviewing. A question to be examined here is whether constructive context primarily affects the implementation of evidence-based practices, which in turn affect consumer outcomes.

The limitations of this study suggest interesting areas for future research. First, the sample was limited to one state. Different states may have different funding constraints and policies, which may limit generalizability. However, ACT is a nationally standardized practice with highly developed scales measuring adherence to fidelity; thus we are confident that the findings of this study will generalize to ACT teams implementing national standards. Another state-specific effect may stem from the state's directing ACT teams to increase consumer-to-staff ratios to national standards between the first and second waves. This increase in workload may be associated with the increase in burnout and decrease in satisfaction over time. The research could be extended by examining ACT teams with a greater range of fidelity to ACT than observed in this study’s sample to better test the hypothesis that team processes are more advantageous in high-fidelity teams.

The second limitation was that the sample used for CFA (waves 2 and 3) included a large proportion of repeat respondents from the sample used for EFA (wave 1). Thus these samples were not completely independent. The length of time between surveys, turnover, and contextual changes could attenuate the effect of repeated observations on CFA results. However, a more rigorous test of the factor structure requires an independent sample of ACT teams.

Another limitation was that the measurement for the depersonalization burnout dimension was not reliable. This could be due to using a subset of the burnout items for measurement. But if this were the primary reason for the measurement difficulties, it would be reasonable to expect a similar difficulty with the burnout scales for effectiveness and emotional exhaustion. This did not occur. The low alpha for depersonalization may instead reflect limited variation in responses to these items. The interpretation of low variation across respondents is consistent with their devotion to ACT consumers; 91% of all respondents ranked working with ACT consumers as their most important motivator for joining an ACT team.