Measurement-based care (MBC) is an evidence-based practice defined as the systematic evaluation of patient symptoms before or during a patient-clinician encounter to inform treatment. MBC fidelity is assessed with three elements: completion of a patient self-report measure, score review by the clinician, and discussion of scores between clinician and patient to inform session and treatment plans (1). MBC has been touted as the minimal intervention needed to change usual care (2) to improve outcomes (3). Despite its transtheoretical (i.e., MBC can be integrated across theoretical orientations) and transdiagnostic (i.e., MBC can be used across many diagnoses) potentials, MBC is rarely used, with <20% of behavioral health providers reporting use of MBC consistent with its evidence base (1).

Barriers at the patient, provider, organization, and system levels prevent MBC use with fidelity. It is unclear whether a standardized or a tailored approach is needed to support MBC implementation into mental health care. Although standardized approaches (i.e., one-size-fits-all approaches) may offer greater scale-out potential, mounting research suggests that tailored approaches (i.e., those that are customized and collaborative) may be needed to address a specific clinic’s needs and barriers (4, 5).

This study reports the design and results of a cluster-randomized trial (6) that compared the effects of standardized MBC implementation with those of tailored MBC implementation on clinician (e.g., fidelity to MBC) and patient (i.e., depression severity) outcomes in Centerstone, one of the United States’ largest nonprofit community behavioral health systems (1). We hypothesized that the tailored implementation would outperform the standardized implementation in terms of clinician fidelity and patient depression improvement and that MBC fidelity would be associated with greater improvements in patient depression severity.

Results

Clinicians were primarily non-Hispanic White women (64%, N=99), with a mean age of 43.1±12.6 years (standardized implementation, 44.7±12.7 years; tailored implementation, 41.7±12.5 years). No significant differences in clinician demographic characteristics between the two implementation groups were noted (Table 1).

Patients were also primarily White (59%, N=2,377) and women (68%, N=2,739), with a mean age of 43.8±13.3 years. The most common primary diagnosis was depression (66%, N=2,681), followed by anxiety (16%, N=644) and bipolar disorder (4%, N=178). Patients differed on several baseline characteristics (e.g., race and primary payer) between the two groups (Table 2), but sensitivity analyses including these factors as covariates did not change the overall model results.

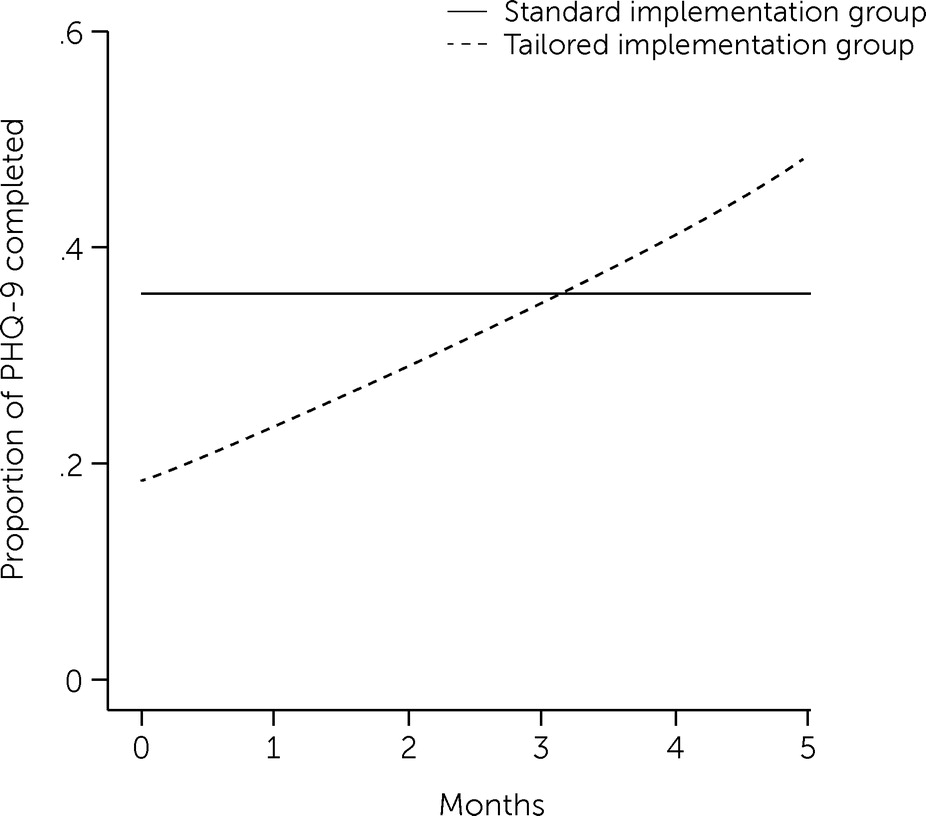

PHQ-9 Recorded in EHR

The PHQ-9 completion rate was lower in the tailored implementation group at baseline but increased over time, eventually surpassing the rate in the standardized implementation group (Table 3, Figure 1). A statistically significant negative effect on PHQ-9 completion in the tailored implementation group (OR=0.28) indicated that patients in this group had lower PHQ-9 completion rates at baseline (i.e., time=0), but a significant time × tailored interaction effect (ROR=1.60) indicated that the main effect changed over time in the tailored group (Table 3). Differences in estimated values from the models revealed that the odds of PHQ-9 completion were lower in the tailored implementation group at baseline (OR=0.28) but approximately three times greater at the median length of the implementation phase (5.3 months) (OR=3.39, 95% CI=1.00–11.48). In addition, PHQ-9 completion was more likely for new patients than for existing patients in the tailored implementation group (OR=1.77, 95% CI=1.42–2.22) but not in the standardized implementation group (OR=1.05, 95% CI=0.85–1.29).
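The relationship between the baseline odds ratio, the time × tailored interaction, and the odds ratio at 5.3 months can be checked with a few lines of arithmetic. The sketch below assumes that time is measured in months and that the interaction acts multiplicatively per month on the odds-ratio scale (i.e., additively on the log-odds scale). The function name is illustrative and is not taken from the study's analysis code.

```python
import math


def odds_ratio_at(months, baseline_or=0.28, interaction_ror=1.60):
    """Tailored-vs-standardized odds ratio after `months` of implementation.

    Assumes a logit model in which the group effect changes linearly with
    time on the log-odds scale (time unit assumed to be months).
    """
    log_or = math.log(baseline_or) + months * math.log(interaction_ror)
    return math.exp(log_or)


if __name__ == "__main__":
    print(round(odds_ratio_at(0.0), 2))  # group effect at baseline
    print(round(odds_ratio_at(5.3), 2))  # median implementation length
```

Because the inputs are the rounded published estimates, the value at 5.3 months comes out at about 3.38, which agrees with the reported OR=3.39 up to rounding.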

Full MBC Fidelity

Information about clinic guidelines for tailored MBC implementation and descriptive statistics of clinicians’ MBC fidelity by implementation group are provided in the online supplement. This set of models examined the impact of implementation group on full MBC fidelity (exploring whether patient scores were discussed in a clinician-patient session after PHQ-9 administration) over time. After excluding sessions in which the PHQ-9 had not been completed, 5,522 sessions held by 126 clinicians for 2,059 patients remained in the sample. After evaluating a variety of models of the change in MBC fidelity, we selected a piecewise model wherein one time segment represented the first 3 months of the implementation phase and another segment the last 2 months of that phase. Full MBC fidelity (i.e., a PHQ-9 result was both recorded and discussed) decreased over time (OR=0.62) during the earlier implementation phase (i.e., the first 3 months of implementation) but increased (OR=1.99) during the later implementation phase (i.e., from 3 months to the end of implementation) (Table 3). However, the implementation groups (tailored vs. standardized) did not differ in full MBC fidelity in the final model (Table 3). The addition of the new patients × tailored interaction did not improve model fit.
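The piecewise time structure described above can be illustrated with a short sketch. It assumes a single knot at 3 months, time measured in months, and that each reported OR is a per-month multiplicative change in the odds of full fidelity. The source states the two segments but not the time unit of the ORs, so the cumulative values are illustrative only, and the function names are hypothetical.

```python
def piecewise_segments(months, knot=3.0):
    """Split elapsed time into (early, late) segments around the knot."""
    early = min(months, knot)
    late = max(months - knot, 0.0)
    return early, late


def cumulative_or(months, early_or=0.62, late_or=1.99, knot=3.0):
    """Odds of full fidelity relative to the start of implementation.

    Assumption: each OR applies per month within its own segment.
    """
    early, late = piecewise_segments(months, knot)
    return (early_or ** early) * (late_or ** late)


if __name__ == "__main__":
    for m in (0, 1, 3, 4, 5):
        print(m, piecewise_segments(m), round(cumulative_or(m), 3))
```

Under these assumptions, the odds of full fidelity would fall to roughly 0.24 of their starting level by month 3 and recover to roughly 0.94 by month 5, which is consistent with the described pattern of an early decline followed by a later increase.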

Depression Severity

In both groups, PHQ-9 scores decreased substantially (b=−4.71, 95% CI=−5.53 to −3.89) from baseline (mean=17.6±4.4) to 12 weeks (mean=12.6±5.9). (See Tables S4 and S5 in the online supplement.) Neither implementation group nor new patient × group interactions significantly predicted week-12 PHQ-9 scores. Additionally, neither the number of total patient sessions nor the number of sessions with a completed or discussed PHQ-9 predicted week-12 depression severity. Moreover, none of the interactions between these factors and new patient status significantly predicted week-12 depression severity. Finally, neither clinician-level (i.e., MBC attitudes and perceptions of implementation leadership) nor patient-level (i.e., age, gender, number of diagnoses of comorbid conditions, medication type, and new patient status) factors significantly moderated depression severity at week 12.

Postsession Telephone Survey

Exploratory analyses of postsession telephone survey data from patients suggested that in nearly half (47%, N=255 of 541) of the clinical encounters, therapy did not change as a result of PHQ-9 data review, whereas in 27% (N=144 of 541) of encounters a new goal was set and in 24% (N=130 of 541) a new strategy was tried.

Discussion

In partial support of our hypotheses, the tailored MBC implementation approach outperformed standardized MBC implementation. The better performance of the tailored implementation was indicated by an increased likelihood, at study completion, of having PHQ-9 forms completed in the EHR, which is the most basic component of MBC fidelity. Mounting evidence suggests that addressing clinic-specific barriers is critical to implementing new practices such as MBC (5). We previously studied implementation strategy deployment by the implementation teams in the six clinics assigned to the tailored implementation and observed on average 39 discrete strategies for improving implementation, including quality management (50%, e.g., audit and feedback), restructuring (16.5%, e.g., revise professional roles), communication (15.7%), education (8.9%), planning (7.2%, e.g., assess for readiness), and financing (1.7%, e.g., offer an incentive) (22). One explanation for superior performance in clinics assigned to tailored implementation may be that five of the six clinics engaged office professionals (i.e., front desk staff) in the implementation teams and assigned them to facilitate PHQ-9 administration in order to remove the time barrier associated with in-session PHQ-9 administration by the clinician (22). This strategy may also explain why, for new patients in the clinics using tailored implementation, PHQ-9s were more likely to be on record: office professionals may have included the PHQ-9 in intake packets at the start of treatment.

However, no difference was observed between the two implementation groups for full MBC fidelity. Scores on postimplementation surveys appeared to indicate that clinicians in both groups had sufficient knowledge and favorable attitudes to meaningfully integrate PHQ-9 data into sessions (4, 12). It is possible that barriers at the organizational level were not sufficiently addressed, such as relative priority of the implementation and an organizational climate that expects, supports, and rewards MBC delivery with fidelity. Even in the clinics assigned to the tailored implementation, we saw few examples of engaging opinion leaders or champions, shifting incentives to reward MBC, modifying role expectations, or restructuring clinical supervision to be guided by MBC, which has been shown to increase standardized assessment administration with youths receiving treatment in community settings (23). Some of these strategies were planned by implementation teams in our trial but not enacted, perhaps because their strategy selection was often motivated by feasibility rather than by criticality parameters (24).

Finally, the significant improvement in PHQ-9 scores over 12 weeks of treatment could not be attributed to MBC fidelity. This finding suggests that usual care effectively improves depression among moderately to severely depressed adult patients and that PHQ-9 administration in roughly one-third of clinical encounters does not yield incremental benefits for patients. Our findings are consistent with results of a previous meta-analysis (25), indicating that patient-reported outcome measure (PROM) administration may be helpful but is relatively weak as a “stand-alone” intervention in the absence of organizational enhancements and systems support. Including a patient perspective on the implementation teams or in the consultation groups may have revealed critical barriers or ways to optimize MBC that are important for future research considerations.

In most MBC effectiveness studies, data were fed back to both patients and clinicians, particularly when cases were not showing progress as expected, and in many studies, clinical decision support offered guidance on how to adjust treatment on the basis of scores (1). MBC would ideally inform changes in treatment (e.g., more therapy sessions or adding pharmacotherapy). We were unable to link the postsession telephone survey data to important contextual information such as PHQ-9 scores over time, and so it is not clear whether the reported rates of treatment change reflect the type and quality of PHQ-9 integration that has been found in other MBC effectiveness studies. The PHQ-9 has been used in many trials assessing the efficacy of collaborative care in which a nurse care manager regularly reviews and discusses scores with patients and uses an algorithm for increasing, switching, or augmenting medication treatment (26–28). We note that the same clear guidance for PHQ-9 data–informed adjustments is not available for psychotherapy. It is possible that clinician- or patient-selected measures may be better integrated and more actionable in the psychotherapy context (1, 29).

Conclusions

Our findings suggest that despite potentially resulting in greater expense, tailored implementation support may be necessary to optimize implementation of evidence-based practices such as MBC. However, selection of implementation strategies may need to be guided by factors other than feasibility, and more time (>5 months) may be needed to achieve higher MBC fidelity. Indeed, a continuous quality improvement approach, commonly used in health care delivery systems, may be needed to support MBC fidelity (30, 31). Although technological solutions are on the horizon, multilevel implementation strategies will remain necessary to fully integrate MBC into psychotherapeutic treatment (1). Without this type of support, fidelity of evidence-based practice delivery may be undermined, resulting in an attenuated impact on patient outcomes.

Acknowledgments

Jenny Harrison at Crisis Access Engagement in Centerstone, IN, and Matt Hardy at Centerstone, TN, contributed to the design and execution of this study, as well as to the interpretation of the results. Candi Arnold served as study coordinator. Abigail Melvin, Brigid Marriott, Mira Hoffman, Hannah Kassab, Jacqueline Howard, and Iman Jarad served as research specialists contributing to study protocols and data collection.