In the past decade, mental health services researchers have increasingly used qualitative methods in combination with quantitative methods (1,2). This use of mixed methods has been partly driven by theoretical models that encourage assessment of consumer perspectives and of contextual influences on disparities in the delivery of mental health services and the dissemination and implementation of evidence-based practices (3,4). These models call for research designs that use quantitative and qualitative data collection and analysis for a better understanding of a research problem than might be possible with use of either methodological approach alone (5,6). Numerous typologies and guidelines for the use of mixed-methods designs exist in the fields of nursing (7,8), evaluation (9,10), public health (11,12), primary care (13), education (14), and the social and behavioral sciences (5,15).

To address these issues, we examined the application of mixed-methods designs in a sample of mental health services research studies published in peer-reviewed journals and in NIMH-funded research projects over five years. Our aim was to determine how and why such methods were being used and whether there are any consistent patterns that might indicate a consensus among researchers as to how such methods can and should be used. This aim is viewed as an initial step toward the development of standards for effective uses of mixed methods in mental health services research and articulation of criteria for evaluating the quality and impact of this research.

Methods

We conducted a literature review of mental health services research publications over a five-year period (January 2005 to September 2009), using the PubMed Central database and the following search terms: mental health services, mixed methods, and qualitative methods. Data were taken from the full text of each research article. To be considered for inclusion, articles had to report empirical research and meet one of the following selection criteria: the study was specifically identified as a mixed-methods study in the title, abstract, or keywords; it was a qualitative study conducted as part of a larger project, such as a randomized controlled trial, that also used quantitative methods; or it “quantitized” qualitative data (16) or “qualitized” quantitative data (17). On the basis of criteria used by McKibbon and Gadd (18) and Creswell and Plano Clark (5), the analysis had to be fairly substantial; for example, a simple descriptive analysis of participants' baseline demographic characteristics was not sufficient for a study to be included as a mixed-methods study. Further, qualitative studies that were not clearly linked to quantitative studies or methods were excluded from our review.

Using the same criteria and search terms, we also reviewed the NIH CRISP database (Computer Retrieval of Information on Scientific Projects) of projects funded over the same five-year period. Projects were limited to R series (independent research awards), F series (predissertation research awards), and K series (career development awards) grants. Data were taken from only the project descriptions provided by the applicant and contained in the database.

Using typologies employed in other fields of inquiry (

5–

7,

9), we next assessed the use of mixed methods in each study to determine the study aims, rationale, structure, function, and process. Study aims referred to the objectives of the overall project that included both quantitative and qualitative studies or methods. The rationale for using mixed methods included conceptual reasons, such as exploration and confirmation (

5), breadth and depth of understanding (

19), and inductive and deductive theoretical drive (

20). Pragmatic reasons for using mixed methods, such as addressing the weaknesses of one method by use of the other, and suitability to address research questions were also examined. Assessment of the structure of the research design was based on Morse's (

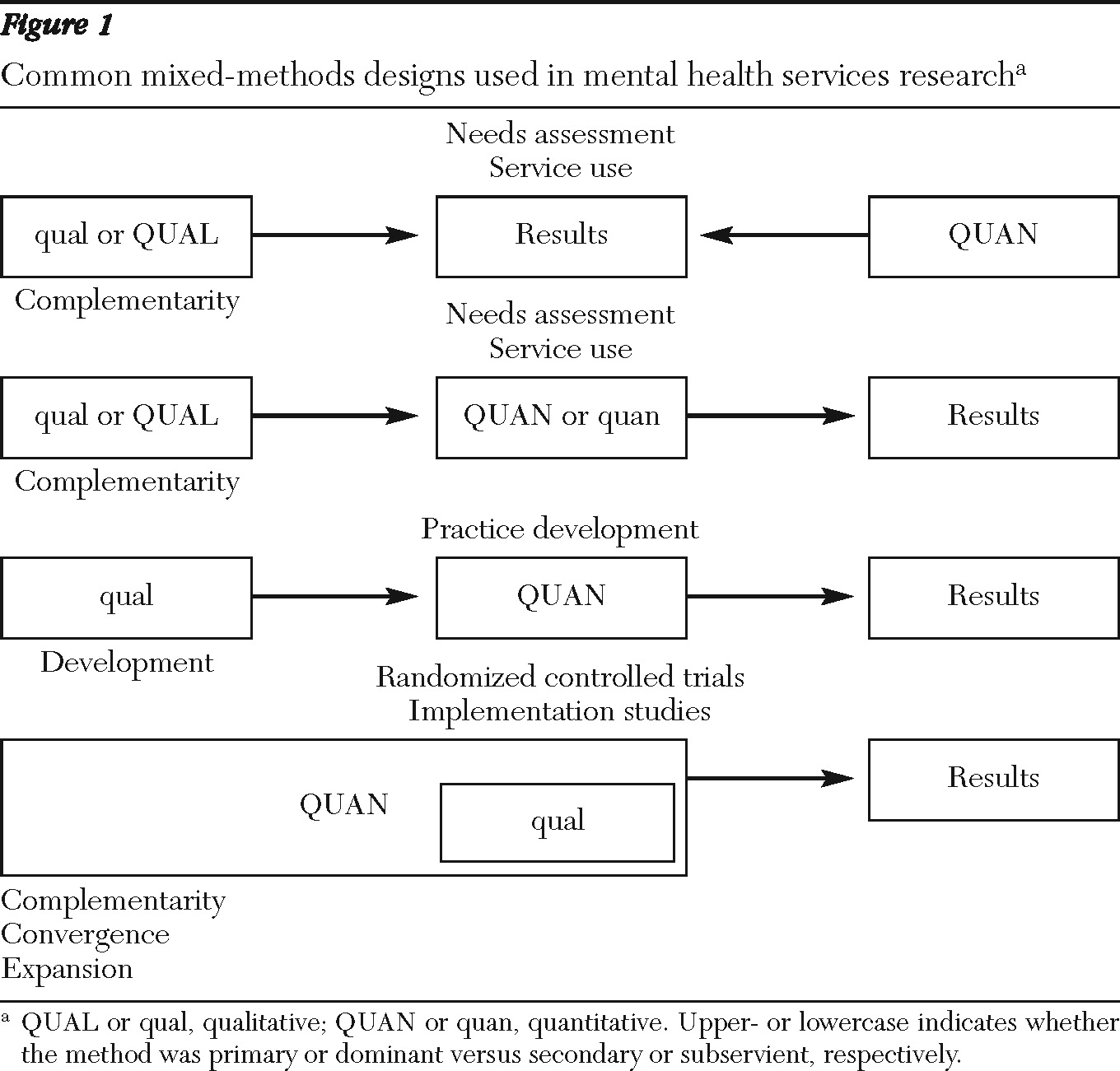

7) taxonomy, which gives emphasis to timing (for example, using methods in sequence [represented by a → symbol] versus using them simultaneously [represented by a + symbol]) and to weighting (for example, primary method [represented by capital letters such as QUAN] versus secondary method [represented in lowercase letters such as qual]).
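Because Morse's notation combines just two attributes (timing and weighting), it can be thought of as a small data type. The sketch below is purely illustrative: the class and field names (`DesignStructure`, `Timing`, `dominant`) are our own and are not taken from Morse or from any mixed-methods software. It shows how the arrow or plus sign encodes timing and how capitalization encodes which method is primary.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

METHODS = {"quan", "qual"}


class Timing(Enum):
    SEQUENTIAL = "→"    # one method follows the other
    SIMULTANEOUS = "+"  # both methods are used at the same time


@dataclass(frozen=True)
class DesignStructure:
    """Illustrative encoding of Morse-style timing and weighting notation."""
    first: str               # method written first (used first if sequential)
    second: str              # method written second
    timing: Timing
    dominant: Optional[str]  # primary method, or None for equal weight

    def __post_init__(self):
        if {self.first, self.second} != METHODS:
            raise ValueError("first and second must be 'quan' and 'qual' in some order")
        if self.dominant not in (None, self.first, self.second):
            raise ValueError("dominant must be None, 'quan', or 'qual'")

    def _label(self, method: str) -> str:
        # Primary (or equally weighted) methods are capitalized; secondary ones stay lowercase.
        if self.dominant is None or self.dominant == method:
            return method.upper()
        return method

    def notation(self) -> str:
        return f"{self._label(self.first)} {self.timing.value} {self._label(self.second)}"


# A small qualitative study preceding a primary quantitative evaluation.
print(DesignStructure("qual", "quan", Timing.SEQUENTIAL, dominant="quan").notation())
# Simultaneous data collection with both methods given equal weight.
print(DesignStructure("quan", "qual", Timing.SIMULTANEOUS, dominant=None).notation())
```

The first call yields "qual → QUAN" (the typical development design described below), and the second yields "QUAN + QUAL".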

Assessment of the function of mixed methods was based on whether the two methods were used to answer the same question or related questions and whether they were used to achieve convergence, complementarity, expansion, development, or sampling (9). Finally, the process or strategies for combining qualitative and quantitative methods were assessed with the typology proposed by Creswell and Plano Clark (5): merging or converging the two methods by actually bringing them together in the analysis or interpretation phase, connecting the two methods by having one build on the results obtained by the other, or embedding one data set within the other so that one method plays a supportive role for the other.

Results

Our search identified 50 articles and 67 NIH-funded research projects published or funded between 2005 and 2009 that met our criteria for analysis. Seven of the NIH projects were excluded from further review because of missing data on the use of mixed methods. Three of the publications were based on one of the NIH-funded projects, and two other publications were based on one funded project each. Any redundant aims or strategies for combining qualitative and quantitative methods identified in linked publications and projects were counted only once in our analysis.

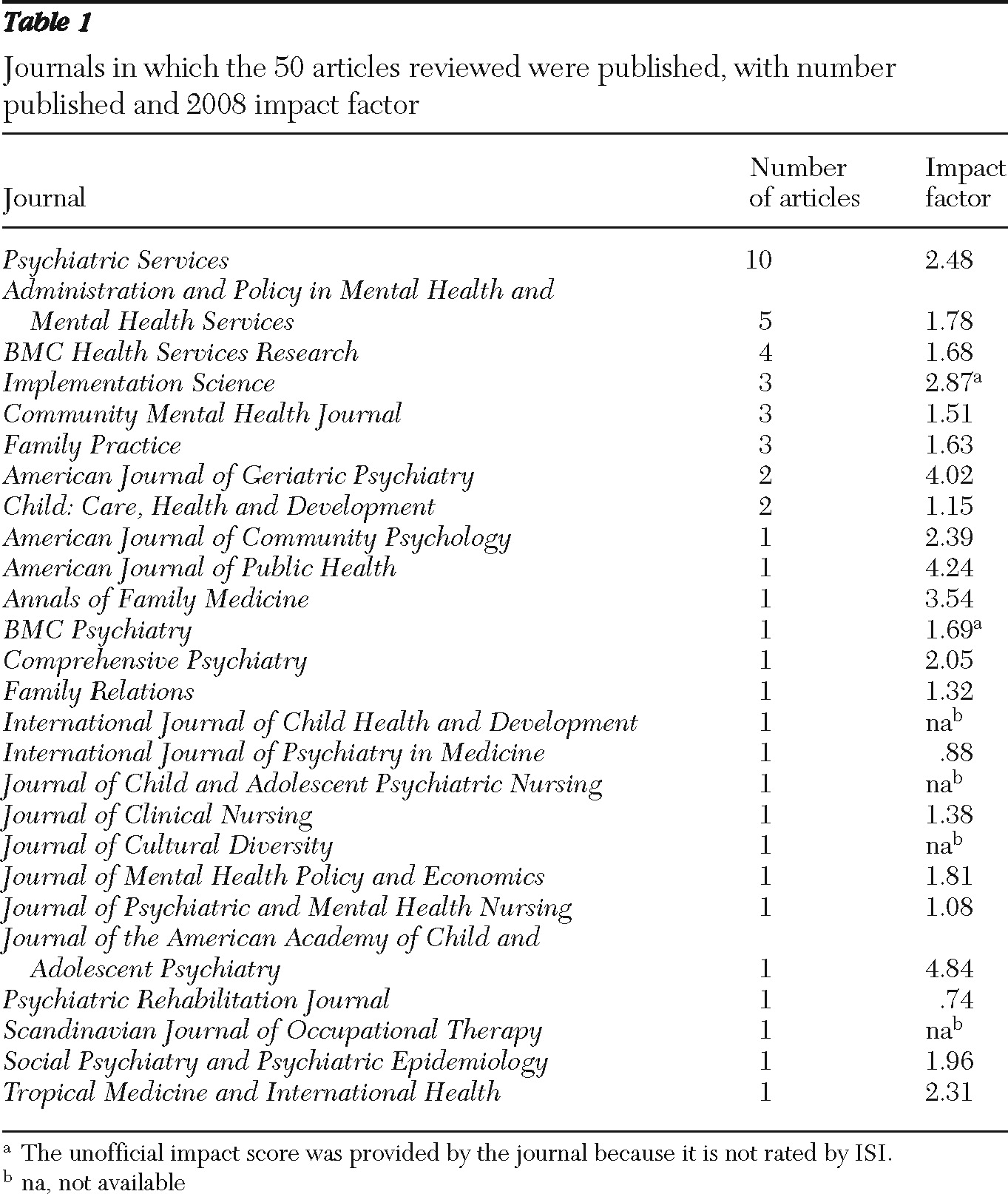

A list of the 26 journals in which the articles were published and the journals' impact factors (IFs) is presented in Table 1. One-fifth of the articles were published in Psychiatric Services. The 2008 IFs of the journals for which information was available ranged from .74 (Psychiatric Rehabilitation Journal) to 4.84 (Journal of the American Academy of Child and Adolescent Psychiatry). Twenty-one of the 50 articles (42%) were published in journals with an IF of 2.0 or greater. Of the funded grants, three were predissertation research grants (F31s), 28 were career development awards (K01, K08, K23, K24, and K99), and 29 were independent research awards (R01, R03, R18, R21, R24, and R34).

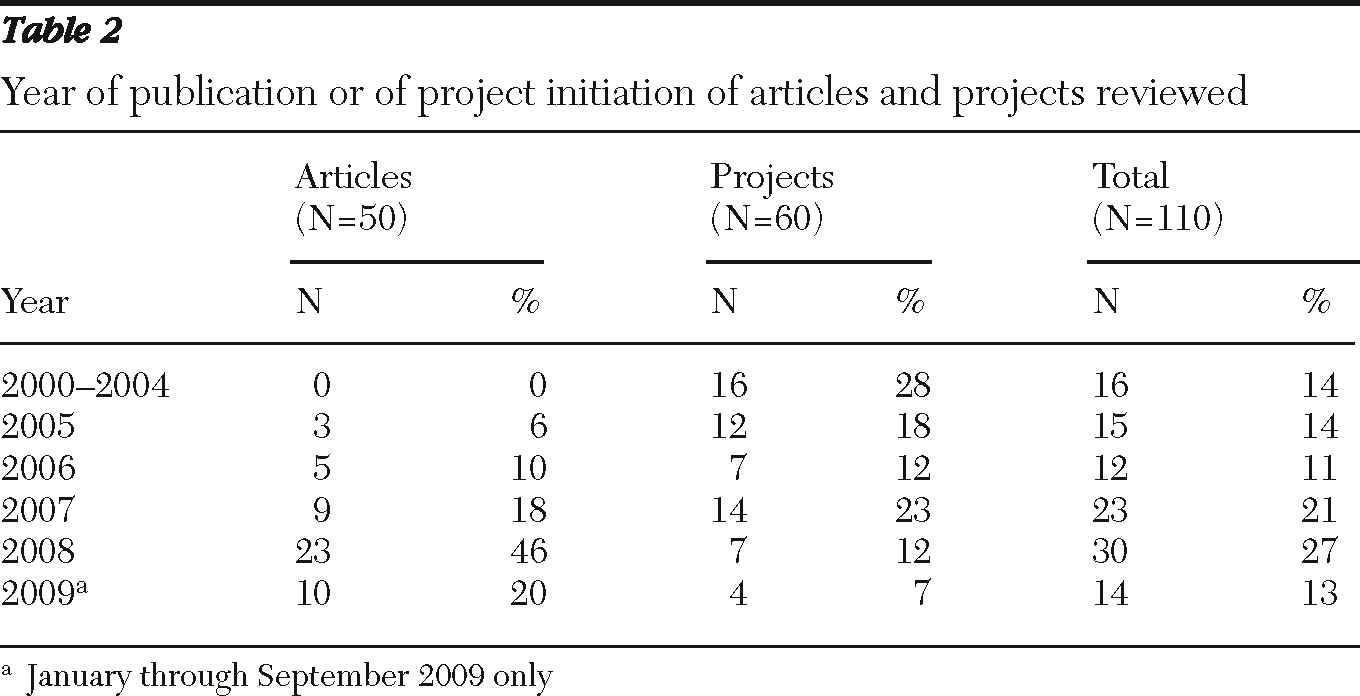

Table 2 presents the year of publication for the 50 articles and the start date of the 60 funded projects. Sixteen of the projects funded during this period had a start date before 2005. The smaller numbers of publications and of projects in 2009 reflect the shorter period of observation (nine months) for that year. There was an exponential increase in the number of publications between 2005 and 2008, and the number of grants from 2005 to 2009 was more than twice that of the previous five-year period (2000–2004).

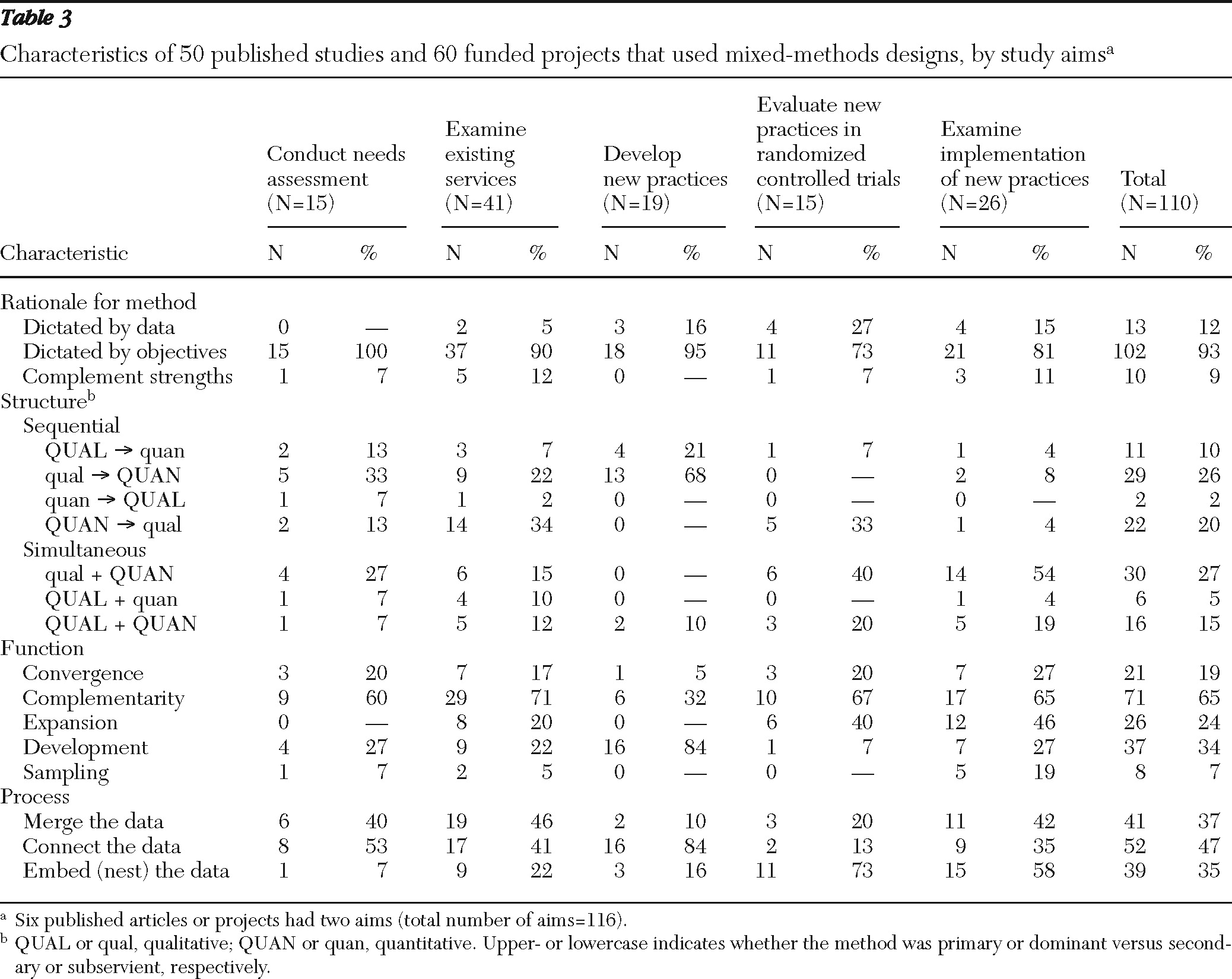

Table 3 summarizes for comparison the use of mixed-methods designs on the basis of study aims. Our analyses revealed the use of mixed methods to accomplish five distinct types of study aims and three categories of rationale. We further identified seven structural arrangements, five uses or functions of mixed methods, and three ways of linking quantitative and qualitative data together. Some papers and projects included more than one objective, structure, or function; hence the raw numbers may occasionally sum to more than the total number of studies examined. Twelve of the 50 articles presented qualitative data only but were part of larger studies that included the use of quantitative measures. Further, we identified four commonly used designs, with each design associated with a specific aim or set of aims (Figure 1).

Study aims

As shown in Table 3, the largest number of publications and projects (41 of 110, 37%) used mixed methods in observational or quasi-experimental studies of existing services. Almost one-quarter (24%) used mixed methods to study the implementation and dissemination of evidence-based practices. Mixed methods were also used to develop evidence-based practices, treatment, and interventions (17%); to conduct randomized controlled trials of interventions (14%); or to assess the needs of populations for mental health services (14%). Six studies had more than one aim (for example, two studies conducted a needs assessment before developing new interventions, and two studies examined implementation of an evidence-based practice within the context of a randomized controlled trial examining the practice's effectiveness).
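The aim percentages above share a single denominator of 110 (50 articles plus 60 funded projects), and because some studies had more than one aim they sum to slightly more than 100%. The short calculation below uses only figures stated in the text; the interpretation that the excess roughly matches the six multi-aim studies assumes, for illustration, that each of those studies had exactly two aims.

```python
# Percentages by study aim, as reported in the text (denominator: 110 units).
aim_percentages = {
    "studies of existing services": 37,
    "implementation and dissemination": 24,
    "development of practices and interventions": 17,
    "randomized controlled trials": 14,
    "needs assessment": 14,
}
total_units = 50 + 60      # published articles + funded projects
multi_aim_studies = 6

sum_pct = sum(aim_percentages.values())                   # exceeds 100
excess_pct = sum_pct - 100                                # points attributable to overlap
multi_aim_pct = 100 * multi_aim_studies / total_units     # share of units with >1 aim
largest_pct = 100 * 41 / total_units                      # 41 of 110 existing-services studies

print(f"Sum of aim percentages: {sum_pct}%")
print(f"Excess over 100%: {excess_pct} points")
print(f"Multi-aim studies as a share of {total_units}: {multi_aim_pct:.1f}%")
print(f"Existing-services share: {largest_pct:.0f}%")
```

Within rounding, the 6-point excess is consistent with the six studies (about 5.5% of 110) that were counted under two aims.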

Mixed-methods rationale

Forty-one of the 60 project abstracts (68%) and 25 of the 50 published articles (50%) did not provide an explicit rationale for the use of mixed methods; consequently, the rationale was inferred from statements found in project objectives. Of the 25 published articles that did provide an explicit rationale, only 11 provided one or more citations to justify use of mixed methods. The most common reason (93% of all articles and projects) for using mixed methods was based on the specific objectives of the study (for example, qualitative methods were needed for exploration or depth of understanding or quantitative methods were needed to test hypotheses). In other instances, use of mixed methods was dictated by the nature of the data; studies that included a focus on variables related to values and beliefs, the process of service delivery, or the context in which services are delivered relied on qualitative methods to describe and examine these phenomena. In 9% of articles and projects, investigators specifically indicated that both methods were used so that the strengths of one method could offset the weaknesses of the other (Table 3).

Mixed-methods structure

The majority (58%) of the publications and projects used the methods in sequence, with qualitative methods more often preceding quantitative methods. Quantitative methods were the primary or dominant method in 74% of the publications and projects reviewed, and in 16 studies, qualitative and quantitative methods were given equal weight. In seven of the published studies, qualitative analyses were conducted on one or two open-ended questions attached to a survey, and 17 of the 50 published studies (34%) provided no references justifying their procedures for qualitative data collection or analysis. Only one published study (21) provided a figure that illustrated the timing and weighting of qualitative and quantitative data collection and analysis, and none used terms like QUAN and qual to describe this structure.

In studies that aimed to assess needs for mental health services, examine existing services, or develop new services or adapt existing services to new populations, sequential designs were used two to four times more frequently than simultaneous designs. The latter type of design was more commonly used in randomized controlled trials and in implementation studies.

Mixed-methods functions

Our review of the publications and projects revealed five distinct functions of mixing methods (Table 3). The first function was convergence, in which qualitative and quantitative methods were used sequentially or simultaneously to answer the same question, either through triangulation (that is, the simultaneous use of one type of data to validate or confirm conclusions reached from analysis of the other type of data) or transformation (that is, the sequential quantification of qualitative data or use of qualitative techniques to transform quantitative data). For instance, Griswold and colleagues (22) triangulated quantitative trends in functional and health outcomes of psychiatric emergency department patients with qualitative findings of perceived benefits of care management and the value of integrated medical and mental health care to determine whether both types of data provided support for the effectiveness of a care management intervention (QUAN + QUAL). Using the technique of concept mapping (23), Aarons and colleagues (24) collected qualitative data on factors likely to have an impact on implementation of evidence-based practices in public-sector mental health settings. These data were then entered in a software program that uses multidimensional scaling and hierarchical cluster analysis to generate a visual display of statement clusters (QUAL → quan).
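In concept mapping as it is commonly implemented, participants sort statements into piles, and the sorts are aggregated into a statement-by-statement similarity matrix that is then analyzed with multidimensional scaling and cluster analysis. The sketch below is a simplified illustration under stated assumptions, not the software Aarons and colleagues used: it builds the similarity matrix and applies average-linkage agglomerative clustering directly to it, skipping the multidimensional scaling step (dedicated concept-mapping software may instead cluster the scaling coordinates and use a different linkage rule). Function names and data are hypothetical.

```python
from itertools import combinations


def similarity_matrix(sorts, n_statements):
    """Count, for each pair of statements, how many sorters put them in the same pile.

    `sorts` holds one entry per sorter; each entry is a list of piles, and each
    pile is a list of statement indices (0 .. n_statements - 1). Each sorter is
    assumed to place every statement in exactly one pile.
    """
    sim = [[0] * n_statements for _ in range(n_statements)]
    for piles in sorts:
        for pile in piles:
            for i in pile:
                for j in pile:
                    sim[i][j] += 1
    return sim


def average_linkage(sim, n_clusters):
    """Repeatedly merge the two clusters with the highest mean
    between-cluster similarity until n_clusters clusters remain."""
    clusters = [[i] for i in range(len(sim))]
    while len(clusters) > n_clusters:
        best_pair, best_score = None, float("-inf")
        for a, b in combinations(range(len(clusters)), 2):
            total = sum(sim[i][j] for i in clusters[a] for j in clusters[b])
            score = total / (len(clusters[a]) * len(clusters[b]))
            if score > best_score:
                best_pair, best_score = (a, b), score
        a, b = best_pair  # a < b, so deleting index b leaves index a valid
        clusters[a] = sorted(clusters[a] + clusters[b])
        del clusters[b]
    return sorted(clusters)


# Hypothetical pile sorts of six statements by three participants.
sorts = [
    [[0, 1, 2], [3, 4, 5]],
    [[0, 1], [2, 3], [4, 5]],
    [[0, 1, 2], [3, 4], [5]],
]
sim = similarity_matrix(sorts, 6)
print(average_linkage(sim, 2))  # → [[0, 1, 2], [3, 4, 5]]
```

The qualitative input (the statements and how participants grouped them) is thereby turned into a numeric structure whose clusters can be displayed and interpreted, which is why the design is written QUAL → quan.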

A second function of integrating quantitative and qualitative methods was complementarity, in which each method was used to answer related questions for the purpose of evaluation or elaboration. This function was evident in a majority (65%) of the published studies and projects examined. In evaluative designs, quantitative data were used to evaluate outcomes, whereas qualitative data were used to evaluate process. For instance, Bearsley-Smith and colleagues (25) described the use of quantitative methods to investigate the impact on clinical care of implementing interpersonal psychotherapy for adolescents within a rural mental health service and the use of qualitative methods to record the process and challenges (that is, feasibility, acceptability, and sustainability) associated with implementation and evaluation (QUAN + qual). In elaborative designs, qualitative methods were used to provide depth of understanding and quantitative methods were used to provide breadth of understanding. For instance, in a longitudinal study of mental health consumer-run organizations, Janzen and colleagues (26) used a quantitative tracking log for breadth of information about system-level activities and outcomes and key informant interviews and focus groups for greater insight into the impacts of these activities (QUAL + quan).

A third function of integrating qualitative and quantitative methods was expansion, in which one method was used in sequence to answer questions raised by the other method. This function was evident in 24% of the published studies and projects examined. In each instance, qualitative data were used to explain findings from the analyses of quantitative data. Brunette and colleagues (27) interviewed key informants and conducted ethnographic observations of implementation efforts to understand why some agencies adhered to established principles for integrated dual disorders treatment and others did not (QUAN + qual).

A fourth function of mixed methods was development, in which qualitative methods were used sequentially to identify form and content of items to be used in a quantitative study (for example, survey questions), to create a conceptual framework for generating hypotheses to be tested by using quantitative methods, or to develop new interventions or adapt existing interventions to new populations (qual → QUAN). This function was used in 34% of the published studies and projects. Blasinsky and colleagues (28) used qualitative findings from site visits to develop quantitative rating scales to construct predictors of outcomes and sustainability of a collaborative care intervention for older adults who had major depressive disorder or dysthymia. Green and colleagues (29) used qualitative data to generate a theoretical model of how relationships with clinics and clinicians' approach affect quality of life and recovery from serious mental illness and then tested the model using questionnaire data and health-plan and interview-based data in a covariance structure model. Several of the research projects funded through the R34 mechanism (for example, MH074509-01, Kilbourne, principal investigator [PI]; MH078583-01, Druss, PI; and MH073087-01, Lewis-Fernandez, PI) used qualitative data obtained from focus groups of consumers and providers to develop or adapt interventions for clients with specific conditions (for example, bipolar disorder, chronic medical conditions, and depressive disorders) (qual → QUAN).

The final function of mixed methods was sampling, the sequential use of one method to identify a sample of participants for research that uses the other method. This technique was used in only 7% of all studies. One form of sampling was the sequential use of quantitative data to identify potential participants for a qualitative study (quan → QUAL). For instance, Aarons and Palinkas (30) purposefully sampled candidates for qualitative interviews who had the most positive or most negative views of an evidence-based practice on the basis of a Web-based quantitative survey. The other form of sampling used qualitative data to identify samples of participants for quantitative analysis (qual → QUAN). Woltmann and colleagues (31) created categories of low, medium, and high staff turnover on the basis of staff perceptions of relevance of turnover obtained from qualitative interviews and then quantitatively examined the relationship between these turnover categories and implementation outcomes (qual + QUAN).
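The quan → QUAL form of sampling described above amounts to ranking respondents on a survey score and inviting those at the extremes for interviews. A minimal sketch, assuming a single numeric score per respondent in which higher values mean a more positive view; the function name, the tie-breaking rule, and the data are hypothetical and do not describe the actual procedure of Aarons and Palinkas.

```python
def extreme_case_sample(scores, k):
    """Pick the k lowest- and k highest-scoring respondents for interviews.

    `scores` maps respondent IDs to a quantitative survey score (higher means a
    more positive view). Ties are broken by respondent ID so the selection is
    reproducible.
    """
    if k < 1 or 2 * k > len(scores):
        raise ValueError("need 1 <= k and 2 * k <= number of respondents")
    ranked = sorted(scores, key=lambda rid: (scores[rid], rid))
    most_negative = ranked[:k]
    most_positive = ranked[::-1][:k]
    return most_negative, most_positive


# Hypothetical attitude scores from a Web-based survey.
survey = {"r1": 3.2, "r2": 1.5, "r3": 4.8, "r4": 2.0, "r5": 4.1, "r6": 3.0}
print(extreme_case_sample(survey, 2))  # → (['r2', 'r4'], ['r3', 'r5'])
```

Selecting both tails, rather than a random subset, is what makes the qualitative sample purposeful: it maximizes the contrast in views that the interviews are meant to explain.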

Only six of the published studies and none of the project abstracts explicitly referred to the function of mixed methods by using terms such as triangulation (four published studies) or complementarity (two published studies). As expected, the development function was used in a majority (84%) of studies that aimed to develop new practices or adapt existing practices to new populations. A majority of observational and quasi-experimental studies of existing services (71%), randomized controlled trials (67%), implementation studies (65%), and needs assessment studies (60%) utilized mixed methods for the purposes of answering related questions in complementary fashion. The use of one set of methods to explain the results of a study using another set of methods appears to have been limited to implementation studies (46%), randomized controlled trial evaluations (40%), and studies of existing services (20%).

Process of mixing methods

The final characteristic of mixed-methods designs that we examined was the process of mixing the quantitative and qualitative methods. The largest percentage (47%) of articles and projects sought to connect the data sets (Table 3). This occurs when the analysis of one data set leads to (and thereby connects to) the need for the other data set, such as when quantitative results lead to the subsequent collection and analysis of qualitative data (that is, expansion) or when qualitative results are used to build toward the subsequent collection and analysis of quantitative data (for example, development) (5). For instance, Frueh and colleagues (32) conducted focus groups to obtain information on the target population, their providers, and state-funded mental health systems that would enable the researchers to further adapt and improve a cognitive-behavioral therapy-based intervention for treatment of posttraumatic stress disorder before implementing it (qual → QUAN). This type of mixing was found in almost all of the studies with aims to develop new practices or adapt existing practices to new populations; it was also more likely to be found in needs assessment and studies of existing services than in randomized controlled trials or implementation studies.

Over one-third (37%) of the studies merged the knowledge gained from the quantitative and qualitative data, either during the interpretation phase when two sets of results that had been analyzed separately were brought together or during the analysis phase when one type of data was transformed into the other type by consolidating the data into new variables (5). This type of mixing was found in slightly less than half of the needs assessment, observational, and implementation studies. For instance, Lucksted and colleagues (33) reported that a qualitative analysis of responses to an open-ended postintervention question supported the quantitative findings of the benefits of a relapse prevention and wellness program (QUAN + qual).

The embedding of small qualitative or qualitative-quantitative studies within larger quantitative studies was observed in 35% of the published studies and projects reviewed and described as “nested designs” in six of the studies. This type of mixing was more commonly found in randomized controlled trials and in implementation studies, where qualitative studies of treatment or implementation process or context were embedded within larger quantitative studies of treatment or implementation outcome. For instance, to better understand the essential components of the patient-provider relationship in a public health setting, Sajatovic and colleagues (34) conducted a qualitative investigation of patients' attitudes toward a collaborative care model and how individuals with bipolar disorder perceive treatment adherence within the context of a randomized controlled trial evaluating a collaborative practice model (QUAN + qual).

In 20% of published studies, more than one process was evident. For instance, Proctor and colleagues (35) connected the data by generating frequencies and rankings of qualitative data on perceptions of competing psychosocial problems collected from a community sample of 49 clients with a history of depression. These data were then merged with quantitative measures of depression status obtained through administration of the Patient Health Questionnaire-9 to explore the relationship of depression severity to problem categories and ranks.
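One simple way to carry out this kind of quantitizing and merging is to count how many participants mention each coded problem category, rank the categories, and then cross-tabulate the categories against depression severity. The sketch below is an illustration under stated assumptions, not Proctor and colleagues' procedure: the category codes and participant data are hypothetical, and severity is grouped with the standard PHQ-9 cut points (0–4 minimal, 5–9 mild, 10–14 moderate, 15–19 moderately severe, 20–27 severe).

```python
from collections import Counter


def phq9_band(score):
    """Map a PHQ-9 total score (0-27) to its standard severity band."""
    if not 0 <= score <= 27:
        raise ValueError("PHQ-9 total must be between 0 and 27")
    for upper, label in ((4, "minimal"), (9, "mild"), (14, "moderate"), (19, "moderately severe")):
        if score <= upper:
            return label
    return "severe"


def quantitize(coded_responses):
    """Count participants mentioning each coded category and rank categories by count."""
    counts = Counter(cat for cats in coded_responses.values() for cat in set(cats))
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(rank, cat, n) for rank, (cat, n) in enumerate(ranked, start=1)]


def merge_by_severity(coded_responses, phq9_scores):
    """Cross-tabulate coded categories against each participant's PHQ-9 severity band."""
    table = {}
    for pid, cats in coded_responses.items():
        band = phq9_band(phq9_scores[pid])
        table.setdefault(band, Counter()).update(set(cats))
    return table


# Hypothetical coded interview responses and PHQ-9 totals.
coded = {"p1": ["finances", "health"], "p2": ["finances"],
         "p3": ["family", "finances"], "p4": ["health"]}
phq9 = {"p1": 12, "p2": 3, "p3": 21, "p4": 8}
print(quantitize(coded))  # → [(1, 'finances', 3), (2, 'health', 2), (3, 'family', 1)]
print(merge_by_severity(coded, phq9))
```

The first step corresponds to connecting (qualitative codes become counts and ranks), and the second to merging (those counts are joined to a quantitative measure).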

Discussion

The results of our analysis indicate that there has been substantial progress in using mixed-methods designs in mental health services research in response to efforts by NIMH (2,3) and other funding agencies to promote their use. Evidence for this progress is found in the increasing number of research projects that use mixed methods. The number of projects with mixed-methods designs funded over the five-year study period was more than twice the number that began in the previous five-year period (2000–2004). Furthermore, a majority (52%) of these funded projects were predissertation or career development awards used by junior and midlevel investigators to acquire expertise in mixed-methods research.

We also observed a notable increase in the number of studies based on mixed-methods designs published each year during this five-year period. The number of published mental health services research studies with mixed-methods designs increased by 67% between 2005 and 2006, by 80% between 2006 and 2007, and by 155% between 2007 and 2008. Furthermore, 21 of the 50 published studies (42%) that we reviewed appeared in journals with 2008 IFs of 2.0 or higher, including ten articles published in Psychiatric Services; four articles appeared in a journal with an IF of 4.0 or higher. In contrast, McKibbon and Gadd (18) reported that only 11 of 37 (30%) mixed-methods studies of health services appeared in a journal with an IF of 2.0 or higher in the year 2000.
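The three year-to-year increases can be chained to express the overall change between 2005 and 2008. Writing $n_t$ for the number of mixed-methods studies published in year $t$ (the yearly counts appear in Table 2) and using the rounded percentages reported above:

$$
\frac{n_{2008}}{n_{2005}} = \frac{n_{2006}}{n_{2005}} \cdot \frac{n_{2007}}{n_{2006}} \cdot \frac{n_{2008}}{n_{2007}} = (1 + 0.67)(1 + 0.80)(1 + 1.55) = 1.67 \times 1.80 \times 2.55 \approx 7.7
$$

That is, the annual number of such publications grew roughly 7.7-fold over three years; because the percentages are rounded, this product is approximate.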

Despite this progress, however, our review also suggests that there is room for improvement in use of mixed-methods designs. Most studies did not make explicit or provide support for the reasons for choosing a mixed-methods design; rather, we were forced to infer the rationale based on statements explaining what the methods were used for. Researchers may have felt that such explicit statements were as unnecessary as statements explaining the rationale for using certain quantitative methods, such as analysis of variance or survival analysis. However, the absence of an explicit rationale may also reflect a lack of understanding or appreciation of mixed-methods designs or a decision to use them without necessarily integrating or “mixing” them (5,6).

Most studies did not describe the design structure or function using terminology found in the mixed-methods literature; use of such terminology is consistent with the general standards for high-quality mixed-methods research recommended by Creswell and Plano Clark (5). Further, three-fourths of the 50 published studies reviewed gave priority to quantitative methods, seven performed qualitative analyses of one or two open-ended questions attached to a survey, and 17 provided no references justifying their procedures for qualitative data collection or analysis. This may reflect an underappreciation of qualitative methods, as Robins and colleagues (1) have argued, or it may reflect a greater need for quantitative methods at the present time.

Although it was beyond the scope of this review to determine whether each study used mixed methods in effective ways, we note that each study was subjected to rigorous peer review before being published or funded, and each was judged by this process to make a valuable contribution to the field of mental health services research. These studies also provide evidence of meaningful and sensible variations in mixed-methods approaches to achieving various kinds of study aims and offer some guidance for integrating quantitative and qualitative methods in mental health services research. For instance, the choice of a mixed-methods design appears to be dictated by the nature of the questions being asked by mental health services researchers. Qualitative methods were used to explore a phenomenon when there was little or no previous research or to examine that phenomenon in depth, whereas quantitative methods were used to confirm hypotheses or examine the generalizability of the phenomenon and its associated predictors.

A majority of studies aiming to develop new practices or adapt existing practices to new populations had the same structure (beginning with a small qualitative study before developing or adapting the practice that was to be evaluated by using quantitative methods, which was found in 84% of the studies and projects) and the same process (connecting the findings of one set of methods with those of another set, which was found in 90% of the studies and projects). These studies reflect a growing awareness of the need to incorporate the preferences and perspectives of both service consumers and providers to ensure that new practices will be acceptable as well as feasible (32,36–39).

Studies of existing services also tended to be sequential in structure, with qualitative methods used to elaborate or explain the findings of quantitative studies. In the majority of these studies, the process of mixing methods involved either merging two sets of data to achieve convergence or connecting them to achieve expansion (5). A similar pattern was observed in studies that aimed to explore issues related to the needs for mental health services or provide more depth to our understanding of those needs. Such studies also appeared more likely to transform or “quantitize” qualitative data (24,35).

Randomized controlled trials and studies of implementation also shared similar patterns in use of mixed methods, including simultaneous use of both methods to achieve complementarity by embedding a qualitative or qualitative-quantitative study within a larger quantitative study, such as a randomized controlled trial. In the randomized controlled trials, qualitative methods were usually used to evaluate the process of providing the practice or intervention, whereas quantitative methods were used to evaluate the outcomes (25,40). In implementation research studies, qualitative methods were used to explore or provide depth to understanding barriers and facilitators of intervention implementation, whereas quantitative methods were used to confirm hypotheses and provide breadth to understanding by assessing the generalizability of findings (41,42).

The choice of mixed-methods designs was also dictated by how the individual questions being addressed by each method were related to one another. Studies that used different types of data to answer the same question reflected the function of convergence in a simultaneous structure, where data were merged for the purpose of triangulation, or a sequential structure, where qualitative data were transformed into quantitative data. Studies that used different types of data to answer related questions reflected the function of complementarity, in which quantitative methods were used to measure outcomes, describe content (for example, fidelity of services used and the nature of the mental health problem), and provide breadth (generalizability) of understanding, whereas qualitative methods were used to evaluate the process of service delivery (43–45), describe context (for example, setting) (26,34,46), describe consumer values or attitudes (35,42,47), and provide depth (meaning) of understanding (28,48) in a simultaneous structure and embedded data process. Expansion, development, and sampling were also used to provide answers to related questions that could not be answered by one method alone, usually in a sequential structure in which data sets were merged or connected together (24,30,37).

Finally, the choice of design appears to be based on the strengths of one method relative to the weaknesses of the other. For instance, expansion used qualitative data to explain findings based on quantitative data because explanation was not possible with the quantitative methods alone (25,27,40). In convergence, both sets of methods were used to confirm or validate one another, especially in instances where limited samples precluded testing of hypotheses with sufficient statistical power (30,49) and where limitations to qualitative data collection raised concerns about objectivity and transferability of results. In studies developing new methods, conceptual models, and interventions, qualitative methods also served to enhance quantitative analysis by laying the groundwork essential for more valid measurement and theory and more effective, usable, and sustainable interventions (37). Sampling also enhanced validity: qualitative methods strengthened quantitative methods by enabling targeted comparisons, and quantitative methods strengthened qualitative methods by establishing criteria for purposeful sampling (36).

In summary, the choice of a mixed-methods design appears to be associated with three considerations: the nature of the question being asked (inductive-exploratory or deductive-confirmatory), how the questions being addressed by each method are related to one another, and the strengths of each method relative to the weaknesses of the other.

Caution should be exercised in interpreting these findings given limitations in our study design and analysis. Despite our efforts to be comprehensive in the search process and to select studies and projects on the basis of criteria with face validity, we undoubtedly excluded several articles or projects that used mixed methods. For example, we may have excluded mixed-methods projects listed in the CRISP database that did not specify use of qualitative or mixed methods in the abstracts. We may have also excluded published articles with qualitative data that were part of larger, primarily quantitative studies if the articles did not reference the larger studies, or we may have excluded articles not listed in PubMed Central. In the absence of explicit information, we were often forced to infer the structure, rationale, and function of the design based on statements contained in the available material. Similarly, the CRISP abstracts describe only what the investigators proposed to do with mixed methods and do not indicate what was actually done. Our use of existing typologies of structure, function, and process was intended to serve as a starting point in our analysis rather than an attempt to “pigeon-hole” each study into a specific typology. Our assessment of the progress made in the application of mixed-methods designs in response to calls for their use by funding agencies did not include indicators of whether these efforts had produced more useful, incisive, or insightful knowledge for the purpose of addressing mental health services questions and problems. Such an assessment would require comparisons with the products of studies based on monomethod designs, which was beyond the scope of this study.

Finally, it should be noted that the typology of mixed-methods use does not represent a set of standards for using mixed methods per se but is an important first step toward the development of such standards. Typologies by themselves do not explain why a particular method should be used and how to use a method appropriately. However, as Teddlie and Tashakkori (6) observed, there are five reasons or benefits to developing such a typology: typologies help to provide the field with an organizational structure, they provide examples of research designs that are clearly distinct from either qualitative or quantitative research designs, they help to establish a common language for the field, they help researchers decide how to proceed when designing their studies, and they are useful as a pedagogical tool. A consensus conference or workshop bringing together experts in mixed methods and mental health services research to evaluate the empirically generated typology found in current patterns of mixed-methods use would appear to be the next logical step in developing a set of standards. Such standards would also be required to adhere to the epistemological foundations of each method when used separately (for example, whether appropriate considerations are made to ensure the generalizability of quantitative results or theoretical saturation of qualitative data and whether each method is appropriately matched to the inductive or deductive theoretical drive of the study) and when combined (for example, whether the knowledge gained when using the two methods together is more insightful and of greater value than the knowledge gained when using them separately).