A great deal of excitement has been generated by the recent introduction of computer-assisted therapies, which can deliver some or all of an intervention directly to users via the Internet or a processor-based program. Computer-assisted therapies have a number of potential advantages. They are highly accessible and may be available at any time and in a broad range of settings; they can serve as treatment extenders, freeing up clinician time and offering services to patients when clinical resources are limited; they can provide a more consistently delivered treatment; they can be individualized and tailored to the user's needs and preferences; interactive features can link users to a wide range of resources and supports; and the multimedia format of many of these therapies can convey information and concepts in a helpful and engaging manner (1–3). Computer-assisted therapies may also greatly reduce the costs of some aspects of treatment (4–6). Among their most promising features is the potential to provide evidence-based therapies to a broader range of individuals who may benefit from them (7), given that only a fraction of those who need treatment for psychiatric disorders actually access services (8–10), while 75% of Americans have access to the Internet (11).

However, the great promise of computer-assisted approaches is predicated on their effectiveness in reducing the symptoms or problems targeted. Recent meta-analyses and systematic reviews have suggested positive effects on outcome for a range of computer-assisted therapies, particularly those for depression and anxiety (2, 12, 13), but the existing reviews also point to substantial heterogeneity in study quality (2, 14–17). For example, a recent review of e-therapy (treatment delivered via e-mail) conducted by Postel et al. (14) noted that only five of the 14 studies reviewed met minimal criteria for study quality as defined by Cochrane criteria (14, 18).

Thus, this emerging field is in many ways reminiscent of the era of psychotherapy efficacy research prior to the adoption of current methodological standards for evaluating clinical trials (19) and prior to the codification of standards for evaluating a given intervention's evidence base (e.g., specification in manuals, independent assessment of outcome, and evaluation of treatment integrity) (20, 21). Moreover, because computer-assisted therapy is a novel technology, several methodological issues are particularly salient to its evaluation, such as the level of prior empirical support for the (usually clinician-delivered) parent therapy, the level of clinician/therapist involvement in the intervention, the relative credibility of the comparison conditions, and whether the approach is delivered alone or as an adjunct to another form of treatment.

Given the rapidity with which computer-assisted therapies can be adopted and disseminated, it is particularly important that this emerging field not only have a sound evidence base but also demonstrate safety, since heightened awareness of potentially negative or harmful effects of psychological treatments has increased the need for more stringent evaluation prior to dissemination (22–24). Although computer-assisted therapies are generally considered low risk (17), they have multiple potential adverse consequences. These may include providing less intensive treatment than severely affected or symptomatic individuals need; delivering ineffective approaches that may discourage individuals from subsequently seeking needed treatment; or inappropriate interpretation of program recommendations that could lead to harm (e.g., premature detoxification in substance users, worsening of panic attacks from exposure approaches that are too rapid or intensive), particularly in the absence of clinician monitoring and oversight. Systematic evaluation of possible adverse effects of computer-assisted therapies, as well as further evaluation of the types of individuals who respond optimally to computer-assisted versus traditional clinician-delivered approaches, is needed prior to their broad dissemination.

Results

Reliability of Ratings

Interrater concordance (weighted kappa) was computed for the randomized controlled trial items, the computer-assisted therapy-specific items, and the overall quality items among rated articles within each disorder type (see Table 1 in the data supplement accompanying the online version of this article). Overall concordance on all items for 74 of the 75 rated articles (excluding the Carroll et al. article [37]) was a kappa of 0.52, consistent with a moderate level of interrater agreement (38, 39). Across all four main disorder types, concordance for randomized controlled trial items (kappa=0.48) was comparable to that for computer-assisted therapy-specific items (kappa=0.44). Because kappa can be artificially low when sample sizes are small or prevalence rates are low (40), the percentage of absolute agreement between rater pairs was also calculated (range: 75%–78% overall).
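To make the contrast between kappa and percent agreement concrete, the following is a minimal Python sketch of both statistics for two raters scoring the same items on an ordinal scale. It assumes linear disagreement weights and a 0/1/2 item scale; the report does not state which weighting scheme was used, and the function names and ratings below are hypothetical, not data from this review.

```python
def weighted_kappa(r1, r2, categories, scheme="linear"):
    """Cohen's weighted kappa for two raters scoring the same items on ordered categories.

    Disagreement weights are |i - j| / (k - 1) ("linear") or its square
    ("quadratic"); kappa = 1 - observed weighted disagreement / chance-expected.
    """
    if len(r1) != len(r2) or not r1:
        raise ValueError("need two equal-length, non-empty rating lists")
    if scheme not in ("linear", "quadratic"):
        raise ValueError("scheme must be 'linear' or 'quadratic'")
    k = len(categories)
    if k < 2:
        raise ValueError("need at least two categories")
    pos = {c: i for i, c in enumerate(categories)}
    n = len(r1)

    # Observed joint proportions: obs[i][j] = share of items rated i by rater 1 and j by rater 2.
    obs = [[0.0] * k for _ in range(k)]
    for a, b in zip(r1, r2):
        obs[pos[a]][pos[b]] += 1 / n

    # Marginal proportions for each rater.
    m1 = [sum(obs[i][j] for j in range(k)) for i in range(k)]
    m2 = [sum(obs[i][j] for i in range(k)) for j in range(k)]

    def weight(i, j):
        d = abs(i - j) / (k - 1)
        return d if scheme == "linear" else d ** 2

    cells = [(i, j) for i in range(k) for j in range(k)]
    observed = sum(weight(i, j) * obs[i][j] for i, j in cells)
    expected = sum(weight(i, j) * m1[i] * m2[j] for i, j in cells)
    if expected == 0:
        raise ValueError("kappa is undefined when no chance disagreement is expected")
    return 1 - observed / expected


def percent_agreement(r1, r2):
    """Share (in %) of items on which both raters gave the identical score."""
    return 100 * sum(a == b for a, b in zip(r1, r2)) / len(r1)


# Hypothetical ratings: both raters score nearly every item 1 ("basic standard met").
rater_a = [1, 1, 1, 1, 1, 1, 1, 1, 1, 0]
rater_b = [1, 1, 1, 1, 1, 1, 1, 1, 0, 1]
print(percent_agreement(rater_a, rater_b))                  # 80.0
print(round(weighted_kappa(rater_a, rater_b, [0, 1, 2]), 2))  # -0.11
```

Because almost every item falls in one category, chance agreement is already high, so 80% raw agreement yields a negative kappa. This is the prevalence effect that motivates reporting percent agreement alongside kappa.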

Methodological Quality Scores

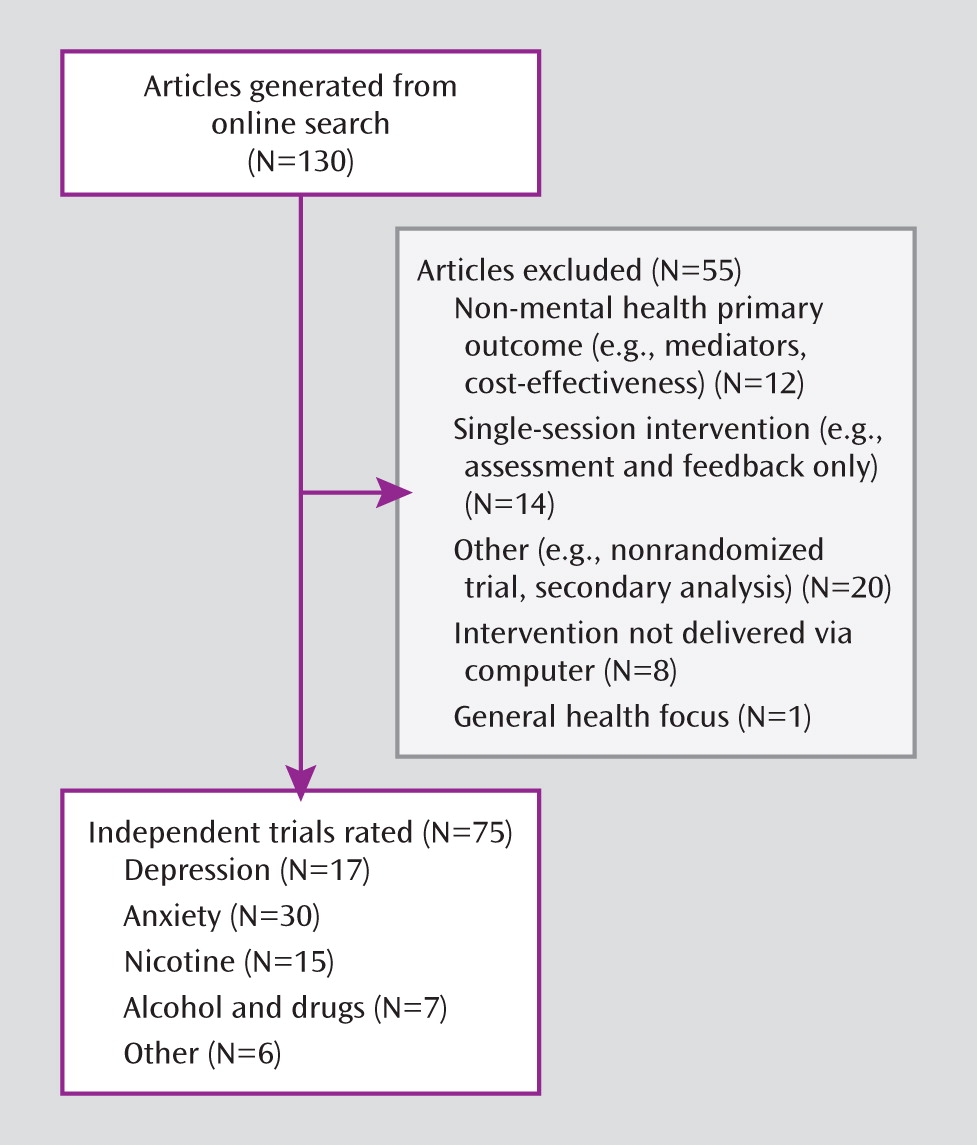

Frequencies and item scores for the full sample of articles (N=75) are presented in Table 2. The mean overall quality item score was 13.6 (SD=3.6), out of a maximum score of 28. Mean scores for randomized controlled trial and computer-assisted therapy-specific items were 6.5 (SD=1.9) and 7.1 (SD=2.7), respectively. In terms of the seven randomized controlled trial criteria, the majority of studies (73%) described the randomization method used and demonstrated equivalence of groups on baseline characteristics. Nearly all trials (91%) were judged as using appropriate statistical analyses. However, a substantial proportion (40%) was rated as not adequately describing the methods used to handle missing data or as reporting use of an inappropriate method (e.g., last value carried forward) rather than an intention-to-treat analysis. Very few trials (15%) obtained follow-up data on at least 80% of the total sample, and most (75%) relied solely on participant self-report for evaluation of outcome.

In terms of the seven computer-assisted therapy-specific items, nearly all trials (95%) indicated that the computerized approach was based on an existing manualized treatment with some prior empirical support. Slightly more than one-half of the studies (52%) used standardized diagnostic criteria as an inclusion criterion. When studies on nicotine dependence were excluded, the rate of use of standardized diagnostic criteria to determine eligibility rose to 62%. Forty-one percent of the trials relied on self-report or a cutoff score to determine participant eligibility, and 7% did not identify clear criteria for determining problem or symptom severity. In terms of control conditions, 23% of studies utilized wait-list conditions only; 41% used an attention/placebo condition; and 36% used active conditions. Most studies (65%) relied solely on participant self-report on a validated measure to evaluate primary outcomes. Only 25% of studies used an independent assessment (blind ratings or biological indicator), and 9% reported use of unvalidated measures. A number of studies (27%) did not report on participant adherence with the study interventions; 49% reported some measure of adherence; and only 24% measured compliance thoroughly and considered it in the analysis of treatment outcomes. Most studies (76%) did not include a measure of credibility of the study treatments. Comparison or control conditions were not equivalent in time or attention in the majority of studies (69%).

No study met basic standards (i.e., score of ≥1) for all 14 items. Three trials met basic standards on 13 of the 14 items (see Figure 1 in the data supplement). Results of ANOVAs examining differences in mean overall quality scores indicated no statistically significant difference across disorder areas (depression, anxiety, nicotine dependence, drug/alcohol dependence).
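The scoring arithmetic behind these figures (14 items, apparently scored 0–2 each for a maximum of 28, with a score of 1 or more counting as meeting basic standards) can be sketched as follows. The ordering of items 1–7 as randomized controlled trial criteria and items 8–14 as computer-assisted therapy-specific criteria, the `summarize` helper, and the example scores are assumptions made for illustration only.

```python
from dataclasses import dataclass

MAX_ITEM_SCORE = 2          # assumed 0-2 scale per item (14 items x 2 = 28 points)
RCT_ITEMS = range(0, 7)     # assumed: first seven items = RCT criteria
CAT_ITEMS = range(7, 14)    # assumed: last seven items = computer-assisted therapy criteria


@dataclass
class QualitySummary:
    total: int
    rct_subscore: int
    cat_subscore: int
    items_meeting_basic: int   # items scored >= 1
    pct_of_max: float


def summarize(item_scores):
    """Summarize one trial's 14 item scores under the assumed 0-2 scheme (hypothetical helper)."""
    if len(item_scores) != 14:
        raise ValueError("expected 14 item scores")
    if any(s not in (0, 1, 2) for s in item_scores):
        raise ValueError("item scores must be 0, 1, or 2")
    total = sum(item_scores)
    return QualitySummary(
        total=total,
        rct_subscore=sum(item_scores[i] for i in RCT_ITEMS),
        cat_subscore=sum(item_scores[i] for i in CAT_ITEMS),
        items_meeting_basic=sum(s >= 1 for s in item_scores),
        pct_of_max=100 * total / (MAX_ITEM_SCORE * len(item_scores)),
    )


# Hypothetical trial that meets basic standards on 13 of 14 items.
trial = [2, 1, 1, 2, 0, 1, 1, 2, 1, 1, 1, 2, 1, 1]
s = summarize(trial)
print(s.total, s.items_meeting_basic)   # 17 13
```

Applied to the reported mean of 13.6, the same ratio gives 13.6/28 ≈ 48.6%, i.e., roughly 49% of possible quality points.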

Table 3 summarizes additional methodological features not included in the overall quality score. The majority of trials (72%) utilized a web-based format for treatment delivery, and most (72%) were stand-alone treatments rather than add-ons to an existing or standardized treatment. A notable issue across all studies reviewed was the lack of information provided on the level of exposure to study treatments. Only 16 studies (21%) reported either the length of time involved in treatment or number of treatment sessions/modules completed. The majority of computer-assisted therapies for alcohol and drug dependence (57%) had a study sponsor with a potential conflict of interest, whereas relatively few studies focusing on anxiety disorders (13%) had study sponsors with a potential conflict of interest. Most studies (72%) reported that the theoretical base of the computer-assisted approach was cognitive behavioral.

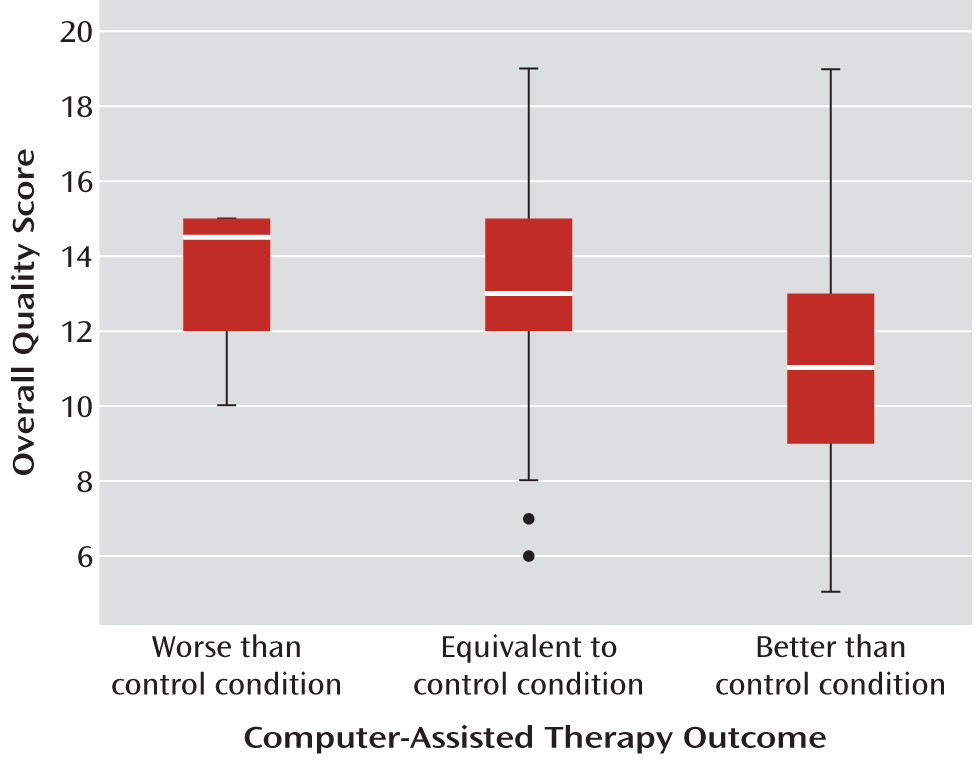

Intervention Effectiveness and Relationship With Quality Scores

In terms of the effectiveness of computer-assisted therapies relative to control conditions, 44% of the trials reported that the computer-assisted therapy was more effective than the most potent comparison condition on the primary outcome. Overall, the computer-assisted therapy was found to be more effective than 88% of the wait-list comparison conditions, 65% of the placebo/attention/education conditions, and 48% of the active control conditions (χ²=6.7, p<0.05). In studies where the control condition did not involve a live clinician, the computer-assisted therapy was typically found to be significantly more effective than the control condition (74% of trials). However, when the control condition included face-to-face contact with a clinician, the computer-assisted therapy was less likely to outperform it (46% of studies) (χ²=6.56, p<0.05). There were no differences in overall effectiveness of the computer-assisted therapy relative to the control conditions across the four major disorder/problem areas. However, as shown in Figure 2, studies that reported the computer-assisted therapy to be more effective than the most potent control condition had significantly lower overall methodological quality than studies in which the computer-assisted therapy had efficacy comparable to or poorer than that of the control condition (F=5.0, df=2, 72, p<0.01).
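The χ² statistics above test whether the proportion of trials favoring the computer-assisted therapy depends on the type of comparison condition. A minimal sketch of the Pearson chi-square statistic for an r × c table of counts follows. The counts are hypothetical, since the report does not give the underlying tables. A p-value would additionally require the chi-square distribution (e.g., SciPy's `scipy.stats.chi2.sf`); note that `scipy.stats.chi2_contingency` applies Yates' continuity correction to 2 × 2 tables unless `correction=False` is passed.

```python
def pearson_chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof


# Hypothetical 2 x 2 table: rows = control involves a live clinician (no / yes),
# columns = computer-assisted therapy superior (yes / no).
counts = [[20, 10],
          [10, 20]]
stat, dof = pearson_chi_square(counts)
print(round(stat, 2), dof)   # 6.67 1
```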

Relationship Between Methodological Quality Scores and Specific Design Features

Several exploratory analyses were conducted to evaluate relationships between specific methodological features and methodological quality scores (for these comparisons, the criterion in question was removed when calculating methodological quality scores, and thus 13 rather than 14 items were assessed). These analyses indicated higher methodological quality scores for studies that 1) used an active control condition relative to attention or wait-list conditions (active: mean=14.8 [SD=2.4]; placebo: mean=11.7 [SD=2.8]; wait-list: mean=10.4 [SD=2.5]; F=17.4, df=2, 72, p<0.001); 2) evaluated computer-assisted therapies based on a manualized behavioral intervention with previous empirical support (no prior support: mean=10.5 [SD=1.3]; some support: mean=11.1 [SD=3.4]; empirical support with manual: mean=13.5 [SD=3.0]; F=6.1, df=2, 72, p<0.01); 3) included at least a moderate level of clinician involvement (>15 minutes/week) with the computer-assisted intervention (at least moderate: mean=15.6 [SD=3.7]; little: mean=12.2 [SD=3.9]; none: mean=12.9 [SD=2.8]; F=6.1, df=2, 72, p<0.01); 4) clearly defined the anticipated length of treatment and measured the level of participant exposure/adherence with the computerized intervention (treatment defined and adherence evaluated: mean=14.7 [SD=3.1]; treatment defined but adherence not evaluated: mean=14.2 [SD=3.5]; treatment not defined and adherence not evaluated: mean=10.4 [SD=2.7]; F=7.7, df=2, 72, p<0.001); 5) utilized a clinical sample rather than a general sample (clinical: mean=14.9 [SD=3.6]; general: mean=12.4 [SD=3.2]; F=10.8, df=1, 73, p<0.01); and 6) were replications of previous trials (replication: mean=14.5 [SD=3.2]; nonreplication: mean=12.3 [SD=3.3]; F=8.7, df=1, 73, p<0.05).
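Each exploratory comparison above is a one-way ANOVA of (13-item) quality scores across study groups; with 75 trials, three groups give df=2, 72 and two groups give df=1, 73. A minimal sketch of the F statistic computed from raw scores follows. The group scores are hypothetical, the `one_way_anova` name is illustrative, and the p-value (which would come from an F distribution, e.g., `scipy.stats.f.sf`) is omitted.

```python
from statistics import fmean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA on raw scores."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    means = [fmean(g) for g in groups]
    grand_mean = fmean(x for g in groups for x in g)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Hypothetical 13-item quality scores for three kinds of control condition.
active = [15, 14, 16, 13]
attention = [12, 11, 13, 12]
wait_list = [10, 11, 9, 10]
print(one_way_anova(active, attention, wait_list))
```

With two groups the same function gives df=1 and N−2, matching the two-group comparisons (clinical versus general samples, replication versus nonreplication).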

Overall, quality scores were somewhat, though not significantly, higher for studies in which the authors reported a financial interest in the computerized intervention than for studies with no apparent conflict of interest (mean: 14.1 [SD=3.7] versus 13.5 [SD=3.6], respectively). The control condition was more likely to be potent (active) in studies whose authors did not report a financial interest than in studies whose authors did (40% versus 20%, not significant). Overall methodological quality scores did not differ significantly by intervention format (web-based versus DVD/CD), by whether the intervention was evaluated as a stand-alone treatment or delivered as an addition to an existing treatment, or by the geographic region where the study was conducted (United States versus United Kingdom versus European Union versus Australia/New Zealand). Finally, contrary to our expectation that the quality of research methods would improve over time, there was no evidence of substantial change in overall quality over time. Although the number of publications in this area increased yearly, particularly after 1998 (beta=0.62, t=3.01, p<0.01), the number of criteria met tended to decrease over time (beta=–0.08, t=2.10, p=0.06).
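In a regression with a single predictor such as publication year, the standardized coefficient (beta) equals the Pearson correlation r, and its t statistic is r·√((n − 2)/(1 − r²)) on n − 2 degrees of freedom. The sketch below computes both. The yearly counts are hypothetical, the `standardized_slope` name is illustrative, and it assumes a one-predictor model; the report does not say whether its trend analyses included other predictors.

```python
from math import sqrt
from statistics import fmean


def standardized_slope(x, y):
    """Standardized slope (= Pearson r) and its t statistic for one-predictor linear regression."""
    n = len(x)
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    r = sxy / sqrt(sxx * syy)              # standardized beta in a one-predictor model
    if r ** 2 >= 1:
        raise ValueError("perfect linear fit: t is undefined")
    t = r * sqrt((n - 2) / (1 - r ** 2))   # tests beta = 0 with n - 2 df
    return r, t, n - 2


# Hypothetical publication counts over five consecutive years.
years = [1, 2, 3, 4, 5]
pubs = [2, 4, 5, 4, 5]
beta, t, df = standardized_slope(years, pubs)
print(round(beta, 2), round(t, 2), df)   # 0.77 2.12 3
```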

Discussion

This review evaluated the current state of the science of research on the efficacy of computer-assisted therapies, using a methodological quality index grounded in previous systematic reviews and criteria used to determine the level of empirical support for a wide range of interventions. The mean methodological quality score for the 75 reports included was 13.6 (SD=3.6) out of a possible 28 quality points (49% of possible quality points), with comparatively little overall variability across the four major disorder/problem areas evaluated (depression, anxiety, nicotine dependence, and alcohol and illicit drug dependence). On average, these studies met minimum standards on only 9.5 of the 14 quality criteria evaluated.

Taken together, these findings suggest that much of the research on this emerging treatment modality falls short of current standards for evaluating the efficacy of behavioral and pharmacologic therapies and thus point to the need for caution and careful review of any computer-assisted approach prior to rapid implementation in general clinical practice. Relative strengths of this body of literature include consistent documentation of baseline equivalence of groups and comparatively large sample sizes, with 43% of studies reporting at least 50 participants in each treatment group. Moreover, most of the computer-assisted interventions evaluated were based on clinician-delivered approaches with some empirical support. These strengths highlight the broad accessibility of computer-assisted therapies and the relative ease with which empirically based treatments can be converted to digital formats (26, 41).

This review also highlights multiple methodological weaknesses in the set of studies analyzed. One of the most striking findings was that none of the 75 trials evaluated met minimal standards on all criteria, and only three studies met 13 of the 14 criteria. Several issues were particularly prominent. First, many of the studies used comparatively weak control conditions. Seventeen trials (23%) used wait-list conditions only; all of these relied solely on self-report for assessment of outcome and did not control for participant expectations or other demand characteristics, resulting in very weak evaluations of the computer-assisted approach. These trials also had lower overall quality scores than those that used more stringent control conditions.

The second general weakness was a striking lack of attention to issues of internal validity. Few of the studies (21%) reported the extent to which participants were exposed to the experimental or control condition or considered the effect of attrition in the primary analyses. For many studies (40%), it was impossible to document the level of intervention received by participants (in terms of either time or proportion of sessions/components completed). Third, only 13% of studies conducted true intention-to-treat analyses. Reliance on inappropriate methods, such as carrying forward the final observation, was common; this practice, coupled with differential attrition between conditions, likely led to biased findings in many cases (42). Finally, very few studies addressed the durability of intervention effects through follow-up assessment of the majority of randomly assigned participants.

Some but not all of our exploratory hypotheses regarding methodological quality, specific study features, and outcomes were confirmed. First, there was no clear evidence that the methodological quality of the field improved over time. In fact, some of the more highly rated studies in this sample were published fairly early and in high-impact journals, suggesting a rapidly growing field marked by increasing methodological heterogeneity. Second, although a unique feature of computer-assisted therapies (relative to most behavioral therapies) is that the developers may have a significant financial interest in the approach, and hence in the results of the trial, there was no clear evidence of lower methodological quality in such trials. However, weaker control conditions tended to be used more frequently in studies where there was a possible conflict of interest. In general, studies that included clearly defined study populations and some clinician involvement had better methodological quality scores. This latter point may be consistent with meta-analytic evidence of larger effect sizes among studies of computer-assisted treatment that include some clinician involvement (43).

A striking finding was the association of study quality with the reported effectiveness of the intervention: studies of lower methodological quality were more likely to report a significant main effect for the computer-assisted therapy relative to the control condition. Many weaker methodological features (e.g., reliance on self-reported outcomes, differential attrition, mishandling of missing data) are generally expected to bias results toward detecting significant treatment effects, and in this set of studies, use of wait-list rather than active control conditions was particularly likely to be associated with significant effects favoring the computer-assisted treatment. Other features, particularly those that reduce statistical power (e.g., small sample size, poor adherence), generally bias results toward nonsignificant effects.

While there were no differences in methodological quality scores across the four types of disorders with adequate numbers of studies for review (depression, anxiety, nicotine dependence, drug/alcohol dependence), studies in these areas differed in their use of specific methodological features. For example, the studies evaluating treatments for nicotine dependence were characterized by use of broad, general populations and hence tended to be web-based interventions with less direct contact with participants. Thus, these studies were also characterized by methodological features closely associated with web-based studies (44), such as comparatively little clinician involvement, large sample sizes, and reliance on self-report. In fact, this group of studies included the largest sample sizes in our review (approximately 93% reported treatment conditions with more than 50 participants). The studies involving interventions for anxiety disorders were some of the earliest to appear in the literature and were characterized by more replication studies, more frequent use of standardized diagnostic interviews to define the study sample, higher levels of clinician involvement, and greater attention to participant satisfaction and to assessment of treatment credibility. These studies had fairly small sample sizes, with 43% reporting fewer than 20 participants per condition. The depression studies were more heterogeneous in their focus on clinical populations and their use of standardized criteria to define study populations, yet they included relatively large sample sizes (71% contained treatment conditions with more than 50 participants). The drug/alcohol dependence literature contributed the fewest studies, a higher proportion of web-based intervention trials, and moderate sample sizes (71% reported treatment conditions with 20–50 participants).

Limitations of this review include the modest number of randomized clinical trials in this emerging area; only 75 studies met our inclusion criteria. The limited number of studies may have contributed to the modest kappa values for interrater reliability, although percent agreement rates were comparatively good. Although the items on the methodological quality scale were derived by combining several existing systems for evaluating randomized trials and behavioral interventions, lending it reasonable face validity, we did not conduct rigorous psychometric analyses to evaluate its convergent or discriminant validity. Another limitation is that we did not verify all information with the relevant study authors. Some of the information used for our methodological ratings may have been cut from the published report because of space limitations (e.g., description of the evidence base for the parent therapy), so our ratings may not reflect the true methodological quality of every trial. Finally, we did not conduct a full meta-analysis of effect size, since our focus was evaluation of the methodological quality of research in computer-assisted therapy. Moreover, inclusion of trials of poor methodological quality and heterogeneity in the rigor of control conditions (and hence likely effect size) would yield meta-analytic effect size estimates of questionable validity (45).

It should be noted that some methodological features were not included in our rating system simply because they were so rarely addressed in the studies reviewed. In particular, only one of the studies included explicitly reported on adverse events occurring during the trial. Most behavioral interventions are generally considered to be fairly low risk (46), as are computerized interventions (17). However, like any potentially effective treatment or novel technology, computer-assisted therapies also carry risks, limitations, and cautions that are often minimized or overlooked by their proponents (47); hence there is a pressing need for research on both the safety and the efficacy of computer-assisted therapies (24, 34, 47). Relevant adverse events should be appropriately collected and reported in clinical trials of computer-assisted interventions. Another issue of particular significance for computer-assisted and other e-interventions is their level of security. Most of the studies we reviewed did not address this issue, nor did they describe the type or sensitivity of the information collected from participants. Because no computer system is completely secure and many participants in these trials might be considered vulnerable populations, more complete and standardized reporting is needed on the extent to which program developers and investigators consider and minimize the risk of security breaches.

The high level of enthusiasm regarding adoption of computer-assisted therapies reflects an assumption that efficacy readily carries over from clinician-delivered therapies to their computerized versions. However, the label “computer-assisted” conveys only that some information or putative intervention is delivered via electronic media; it indicates nothing about the quality or efficacy of the intervention. We maintain that computer-based interventions should undergo the same rigorous testing of safety and efficacy that is required to establish the empirical validity of behavioral therapies prior to dissemination. At a minimum, this should include standard features evaluated in the present review (e.g., random assignment; appropriate analyses conducted in the intention-to-treat sample; adequate follow-up assessment; use of standardized, validated, and independent assessment of outcome). In addition, data from this review suggest that particular care is needed with respect to treatment integrity, particularly evaluation and reporting of intervention exposure and participant adherence, as well as assessment of intervention credibility across conditions. Finally, the field has developed to a level where studies that use weak wait-list control conditions and rely solely on participant self-reports are no longer tenable, since they address few threats to internal validity and convey little about the efficacy of this novel strategy of treatment delivery. Poor treatment retention and inadequate follow-up assessment likewise leave insufficient evidence of safety and durability. Given the rapidity with which these programs can be developed and marketed to outpatient clinics, healthcare insurance providers, and individual practitioners, our findings should be viewed as a caveat emptor warning for the purchasers and consumers of such products.
The vital question for this field is not “Do computer-assisted therapies work?” but “Which specific computer-assisted therapies, delivered under what conditions to which populations, exert effects that approach or exceed those of standard clinician-delivered therapies?”