The processes of making a prediction, acting on the prediction, registering any mismatch between expectation and outcome, and then updating future predictions in the light of any mismatch are critical factors underpinning learning and decision making. Considerable evidence demonstrates that the striatum is a key structure involved in registering mismatches between expectation and outcome (prediction errors) (1). Representations of the value of stimuli and actions (incentive values) are encoded in ventral and medial parts of the frontal cortex (2). Available evidence, mainly from experimental animal research, suggests that the monoamine neuromodulators norepinephrine, serotonin, and especially dopamine play an important role in neural computations of incentive value and reward prediction error (3–6). These findings from basic neuroscience have considerable implications for our understanding of psychiatric illness, given that many psychiatric disorders have been shown to involve frontal or striatal pathology, monoamine neurochemical imbalances, and abnormalities in learning and decision making (7).
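The cycle of prediction, mismatch, and updating described above is often formalized as a delta rule, in which the value estimate moves toward the observed outcome in proportion to the prediction error. The following is a minimal illustrative sketch of that generic rule; it is not necessarily the computational model fitted in this study, and the function names, the learning rate of 0.5, and the reward sequence are assumptions chosen for illustration.

```python
def update_value(value, reward, learning_rate):
    """Apply one delta-rule update.

    Returns the updated value estimate and the prediction error
    (outcome minus expectation) that drove the update.
    """
    prediction_error = reward - value
    new_value = value + learning_rate * prediction_error
    return new_value, prediction_error


def simulate(rewards, learning_rate=0.5, initial_value=0.0):
    """Run the update over a sequence of outcomes (hypothetical data).

    Returns a list of (value_after_update, prediction_error) per trial.
    """
    value = initial_value
    history = []
    for reward in rewards:
        value, prediction_error = update_value(value, reward, learning_rate)
        history.append((value, prediction_error))
    return history


if __name__ == "__main__":
    # Repeated reward: the prediction error shrinks as the expectation catches up.
    for trial, (value, pe) in enumerate(simulate([1.0, 1.0, 1.0, 1.0]), start=1):
        print(f"trial {trial}: value={value:.4f}, prediction_error={pe:.4f}")
```

In this sketch, a lower learning rate means that each prediction error changes the expectation less, so the value estimate converges more slowly on the true outcome.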

It has been suggested that in schizophrenia, abnormal learning of associations and inappropriate attribution of incentive salience and value could be key processes underpinning the development of psychotic symptoms (8, 9). Functional MRI (fMRI) studies in unmedicated patients and in patients with active psychotic symptoms have shown abnormal frontal, striatal, and limbic markers of prediction error or stimulus value during or after associative learning, with some evidence of a correlation between abnormal brain learning indices and symptom severity (10–15). In this study, we sought to explore further the link between prediction error-based learning, brain mechanisms of valuation, and psychotic symptom formation by examining the ability of methamphetamine to induce transient psychotic symptoms in healthy volunteers and relating this to the drug’s effect on neural learning signals.

Amphetamines can induce psychotic-like symptoms even after a single administration, especially at high doses (16–18). Experimental administration of amphetamines and related stimulants, whether in humans or animals, has long proved a useful preclinical model of aspects of the pathophysiology of schizophrenia. The mechanisms through which amphetamines cause psychotic symptoms are unknown, but through their effects on release of dopamine (and/or other monoamines) they may disrupt frontostriatal reinforcement value and prediction error learning signals, which in turn may contribute to the generation of symptoms.

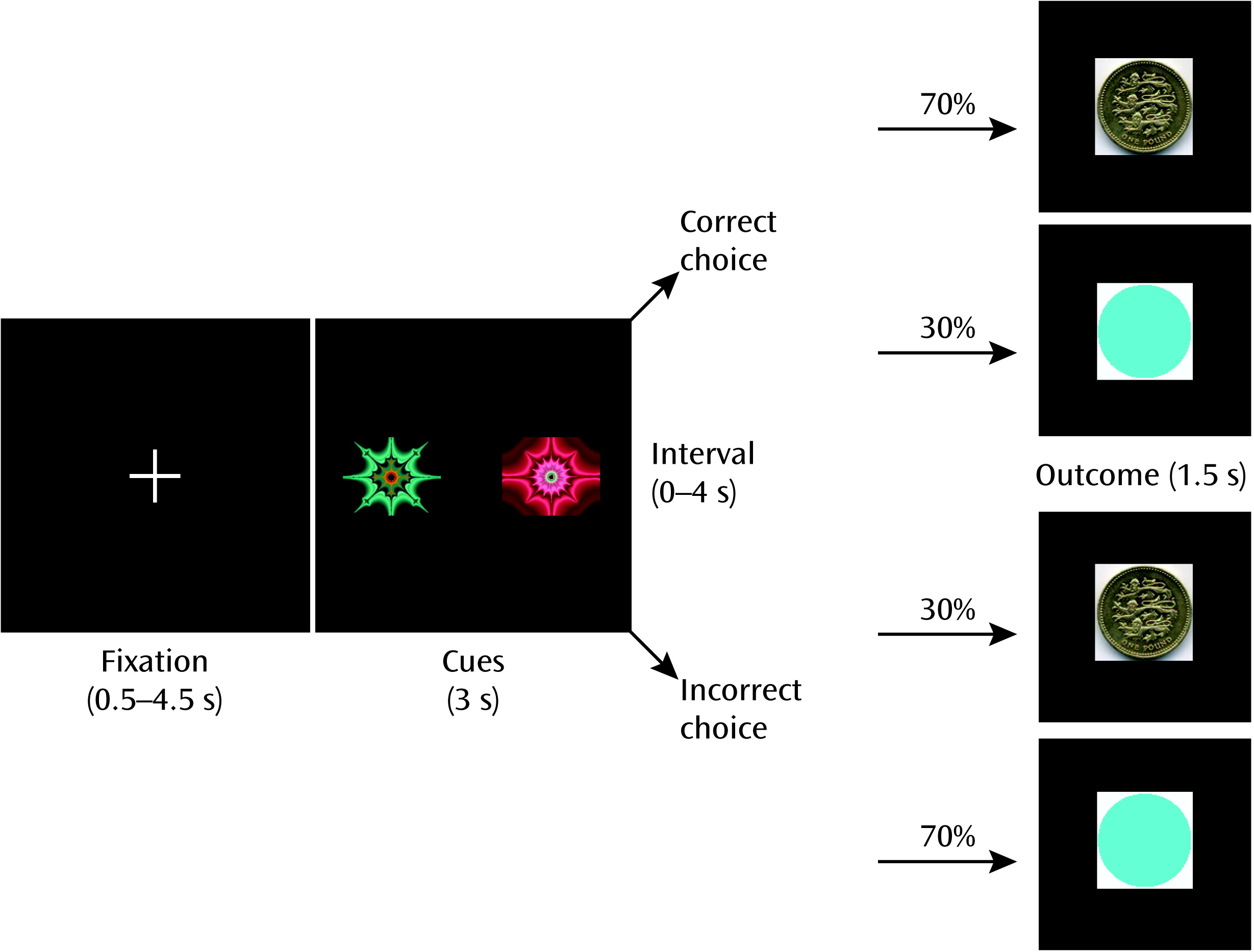

With this in mind, we adopted an approach characterizing 1) the impact of administering an amphetamine (methamphetamine) on fMRI measures of neural computations of incentive value and prediction error during learning in healthy volunteers, and 2) the degree to which methamphetamine-induced disruption of these neural computations is related to drug-induced changes in mental state. In a third session, participants received pretreatment with the second-generation antipsychotic amisulpride, a potent dopamine D2 receptor antagonist, before methamphetamine was administered. We included amisulpride in the study in the hope of gaining insight into the mechanism of action of antipsychotic medication and clarifying the neurochemistry of any changes in mental state or brain learning signals induced by methamphetamine.

We hypothesized that administering methamphetamine would impair reinforcement learning and disrupt frontal and striatal learning signals. We hypothesized furthermore that individual differences in the degree of frontal and striatal reinforcement learning signal disruption would be associated with individual differences in the degree to which the drug induced psychotic symptoms. We reasoned that if these hypotheses were to be confirmed, it would strengthen the evidence supporting a disruption in frontostriatal learning processes in the generation of psychotic symptoms in schizophrenia and other psychiatric disorders. A third hypothesis, investigating the mechanism of action of antipsychotic medication and the precise neurochemical basis of methamphetamine-induced changes, was that pretreatment with amisulpride prior to methamphetamine administration would mitigate any abnormalities induced by methamphetamine.

Discussion

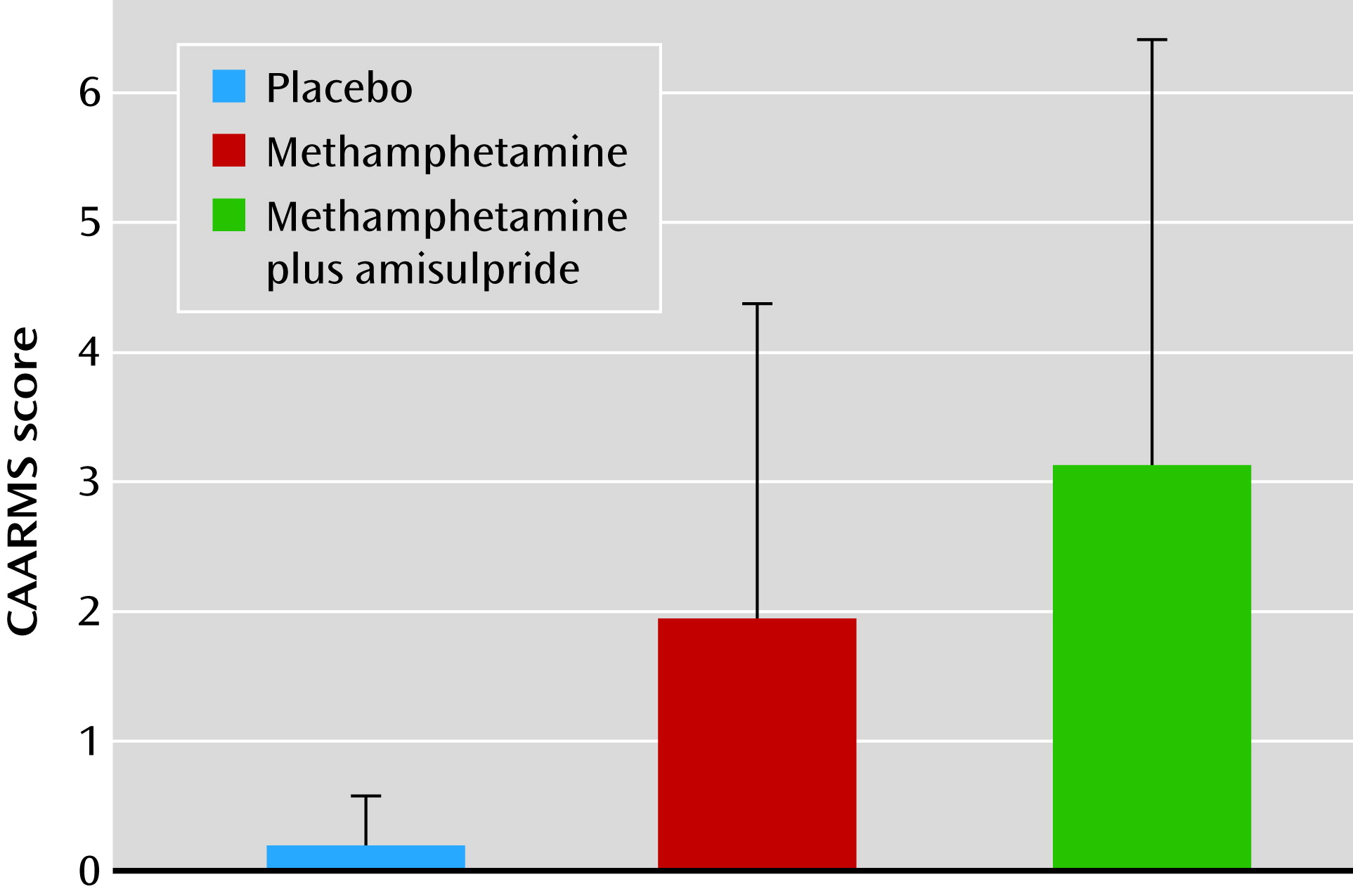

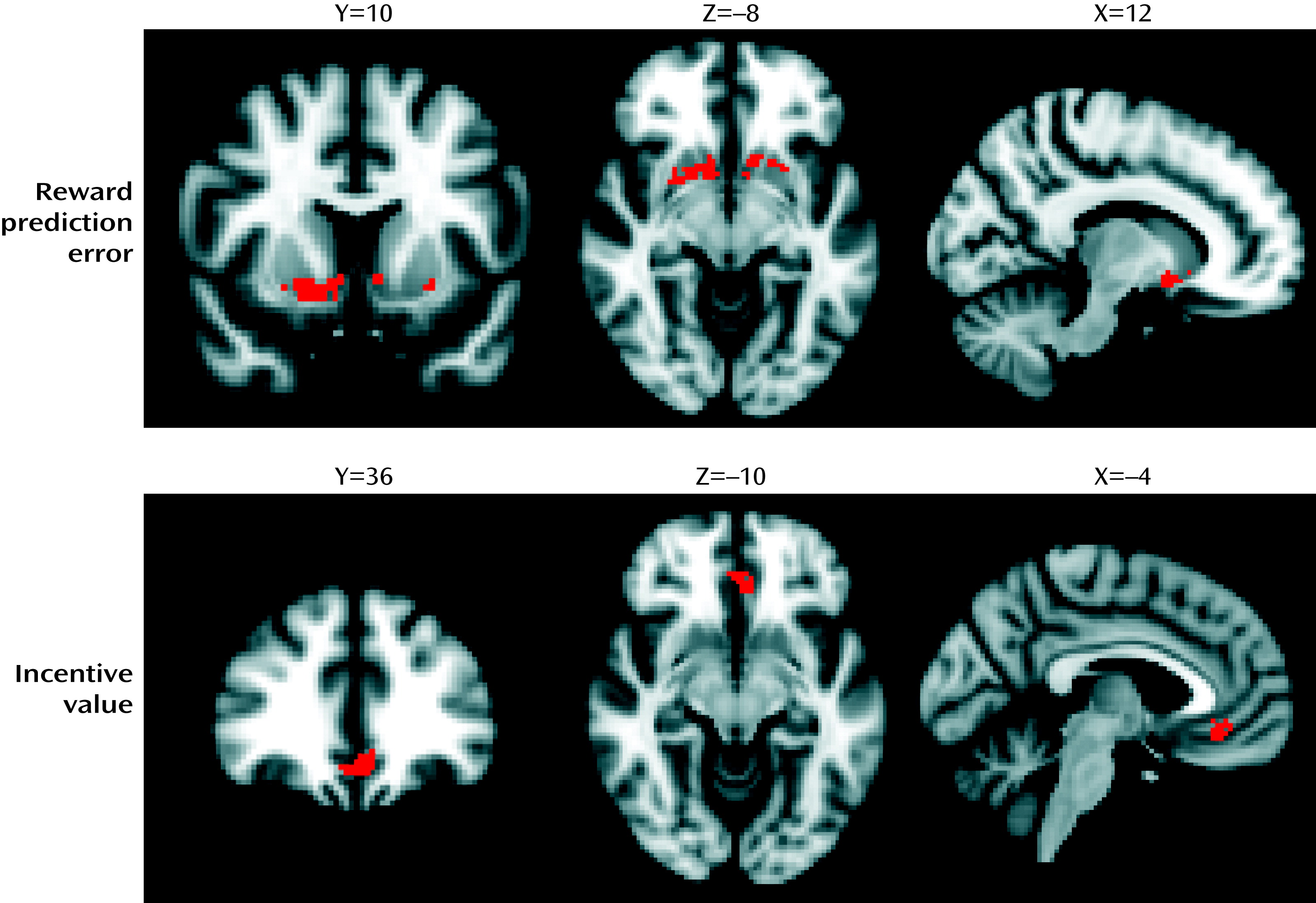

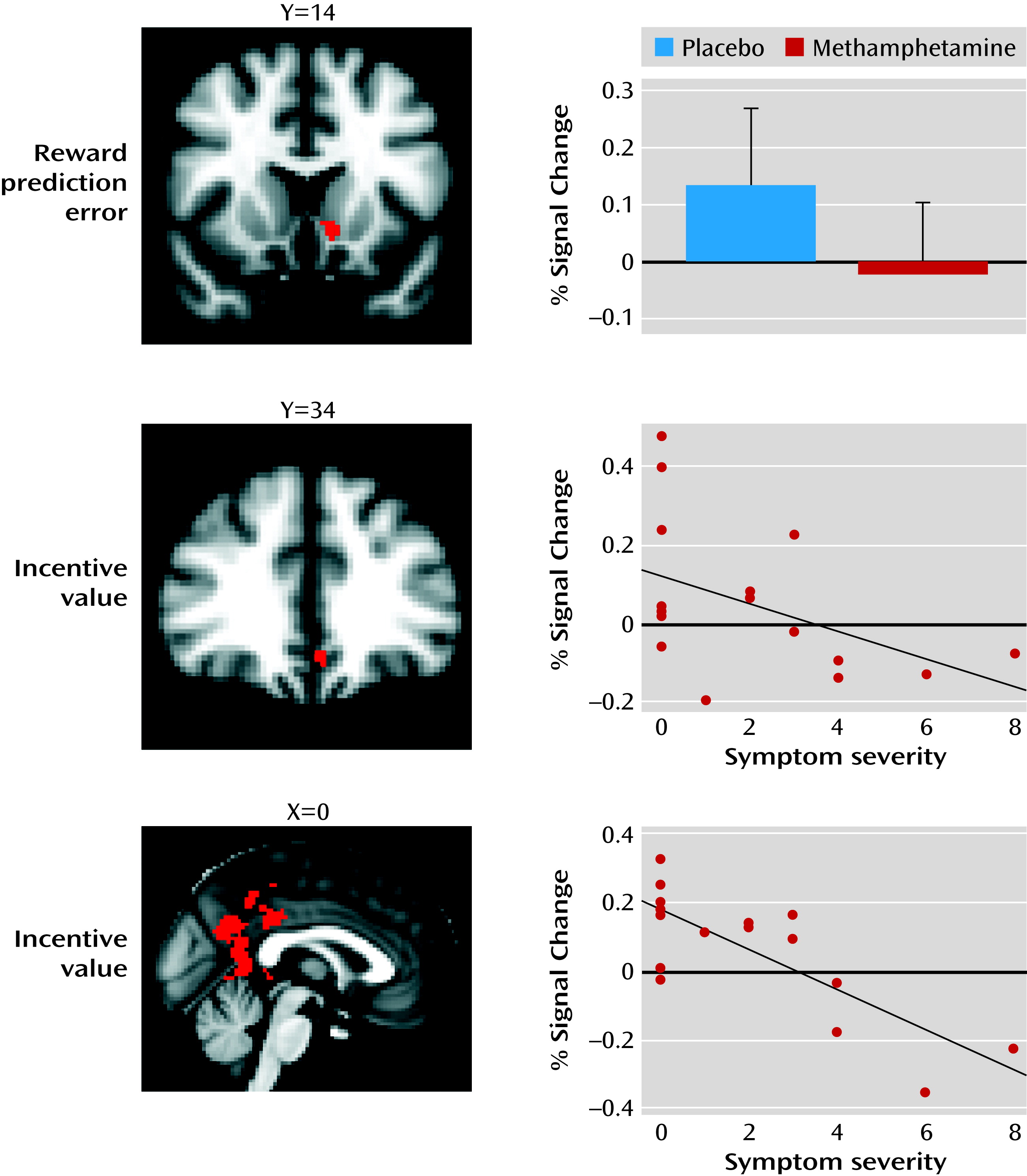

Our study yielded the following findings: 1) intravenous methamphetamine induced mild psychotic symptoms in healthy volunteers; 2) methamphetamine significantly attenuated the reward prediction error signal in the limbic striatum and significantly attenuated the incentive value signal in the ventromedial prefrontal cortex; 3) methamphetamine induced behavioral changes in learning, leading to lower learning rates during reward-related reinforcement learning; 4) the degree to which methamphetamine disrupted the encoding of incentive values in the ventromedial prefrontal cortex correlated with the degree to which the drug induced mild psychotic symptoms; 5) the degree to which methamphetamine disrupted the encoding of incentive values in the posterior cingulate correlated with the degree to which the drug induced mild psychotic symptoms; and 6) pretreatment with amisulpride did not alter symptoms or the ventromedial prefrontal cortex incentive value signal, but it did alter the relationship between the ventromedial prefrontal cortex incentive value signal and mild psychotic symptoms.

According to an influential account of psychotic symptom formation, a disturbance in the ways that affected individuals evaluate stimuli and learn associations leads to mistaken evaluation of irrelevant phenomena as motivationally salient and to faulty association of unconnected ideas and events, ultimately leading to the emergence of characteristic alterations in perceptions and beliefs (8, 9, 26). In this study, we show that a drug intervention that induces psychotic symptoms is also associated with disruption of frontal and striatal neural learning signals. Moreover, the degree to which methamphetamine disrupted representations of incentive value in the ventromedial prefrontal cortex was correlated with the degree to which it induced psychotic symptoms, shedding light on the mechanisms of how amphetamines cause psychosis and increasing support for the argument that brain mechanisms of learning about incentive value and motivational importance are involved in the pathogenesis of psychotic symptoms in schizophrenia.

Considerable evidence has implicated frontal lobe function as being critical in the pathophysiology of schizophrenia (27). Previous research has extensively demonstrated the importance of the ventromedial prefrontal cortex in the representation of action value in rewarding events (23, 28, 29). Here, we suggest an implication of neurochemical disruption of prefrontal value computation: the generation of psychotic symptoms. This is in keeping with previous evidence demonstrating an association between medial frontal lobe function during learning and psychotic symptoms in schizophrenia, although as far as we are aware, a specific disruption of cortical incentive value signaling has yet to be described in schizophrenia (30). An additional novel finding of our study is a link between disruption of incentive value signaling in the posterior cingulate and the psychotogenic effects of methamphetamine. Although we did not have a specific hypothesis about the effects of methamphetamine in this region, we note that this region has previously been shown to encode information about reward value (31) and that fMRI studies of memory and learning have documented posterior cingulate dysfunction in actively psychotic patients and ketamine-induced psychotic states (11, 32).

Behavioral analyses confirmed that methamphetamine mildly disrupted learning. While the number of correct decisions was not affected by methamphetamine, we found a deleterious effect of methamphetamine on learning rates in reward trials. We speculate that methamphetamine-induced disruption of monoamine signaling led to increasing levels of uncertainty and a consequent deleterious effect on learning. Impairments in the precision of the brain computations underlying learning introduce uncertainty into environmental appraisal, which, according to Bayesian accounts of belief updating, may lead to small prediction errors being given undue weight and consequent false inference (26, 33). Abnormal decision making under uncertainty is an important factor predisposing individuals to delusion formation in psychotic illness (34). Recent accounts of dopamine function in learning emphasize that dopamine neuron firing encodes not only information about expected value and prediction error but also information about their precision (35), such as the variance.
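One common way to formalize the idea that uncertainty changes the weight given to prediction errors is the Gaussian (Kalman filter) belief update, in which the effective learning rate is the ratio of the belief's variance to the sum of that variance and the observation noise. The sketch below is an illustration of that general principle under stated assumptions; it is not the analysis performed in this study, and the function name, parameter names, and numerical values are hypothetical.

```python
def precision_weighted_update(mean, variance, observation, observation_noise):
    """Gaussian belief update with an uncertainty-dependent learning rate.

    mean, variance: current belief about the expected outcome.
    observation_noise: assumed variance of the outcome around its true mean.
    Returns (new_mean, new_variance, gain), where gain is the weight
    applied to the prediction error on this trial.
    """
    gain = variance / (variance + observation_noise)
    prediction_error = observation - mean
    new_mean = mean + gain * prediction_error
    new_variance = (1.0 - gain) * variance
    return new_mean, new_variance, gain


if __name__ == "__main__":
    # The same small prediction error (0.2) under a precise and an imprecise belief.
    for label, belief_variance in [("precise belief", 0.1), ("imprecise belief", 3.0)]:
        new_mean, _, gain = precision_weighted_update(
            mean=0.0, variance=belief_variance, observation=0.2, observation_noise=1.0
        )
        print(f"{label}: gain={gain:.3f}, updated mean={new_mean:.3f}")
```

Under these assumptions, the less precise the current belief, the larger the gain, so the same small prediction error produces a larger change in the belief.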

Our study shows that methamphetamine, a drug known to increase synaptic dopamine levels, affects both behavioral and brain representations of learning parameters along with a link to mental state changes that are typical of the early stages of psychosis. Thus, our results are consistent with the theory that a hyperdopaminergic state leads to psychotic phenomenology because of disruption in dopamine’s role in evaluation and learning of associations of stimuli. However, as methamphetamine affects not only dopamine but also norepinephrine and, to a lesser extent, serotonin, our study allows us to draw direct inferences about how amphetamines may impair decision making and induce psychotic symptoms via a “hypermonoaminergic” state, but not about a hyperdopaminergic state specifically. Pretreatment with 400 mg amisulpride before administration of methamphetamine had no effect on symptoms or brain response, and thus we cannot conclusively demonstrate that methamphetamine’s effects on brain reward learning signals were mediated by stimulation of dopamine D2 receptors. Indeed, the lack of effect of amisulpride may suggest an alternative explanation, that methamphetamine’s effects on symptoms and brain learning signals may have been mediated by nondopaminergic mechanisms, such as its effects on norepinephrine release, or possibly through dopaminergic effects on dopamine D1 receptors, which would not have been blocked by amisulpride. Amisulpride pretreatment did modulate the relationship between methamphetamine-induced alterations in prefrontal function and psychotic symptoms. This suggests that although amisulpride does not normalize methamphetamine’s deleterious effects on ventromedial prefrontal incentive value signaling, it may reduce the tendency of these ventromedial prefrontal disruptions to manifest in psychotic symptoms. 
The subtle effects of amisulpride in this study make it hard to draw conclusive interpretations concerning the relative contributions of norepinephrine and dopamine to the results. If we had used a higher dose of amisulpride, it might have resulted in clearer results, though at the risk of possibly inducing Parkinsonian side effects in the volunteers.

To our knowledge, only one previous study has examined the effect of a prodopaminergic drug on brain representations of reward learning parameters in healthy humans; that study found that levodopa improved striatal representations of reward prediction error during learning (21). We now show that an amphetamine-induced hypermonoaminergic state can be associated with impairments in both frontal and striatal representations of incentive value and reward prediction error. The divergence between our results and those of the previous study can be reconciled by considering that levodopa, being a dopamine precursor, facilitates stimulus-locked dopamine release. Methamphetamine, however, can cause stimulus-independent release of monoamines through its actions on the dopamine, norepinephrine, and serotonin transporters and the vesicular monoamine transporter-2, changing the balance of phasic and tonic release of monoamines, thus reducing the signal-to-noise ratio during learning and potentially reducing frontostriatal transmission via stimulation of presynaptic dopamine D2 receptors (36–38). This finding is highly relevant in understanding both how stimulant intoxication may lead to maladaptive learning and induce psychiatric symptoms (16) and how a hyperdopaminergic state in psychotic illness could be accompanied by impaired associative learning (39, 40).

In summary, our study provides new evidence of the role of monoaminergic frontostriatal function in underpinning feedback-based learning in humans. We show, for the first time, that a drug-induced state can disrupt neural representations of computational parameters necessary for reinforcement learning, while causing a reduced learning rate; these findings have implications for understanding decision-making impairments seen in amphetamine intoxication. The finding that the degree to which methamphetamine induces psychotic symptoms is related to the degree to which the drug affects the encoding of incentive value in the frontal and cingulate cortices is consistent with theories implicating abnormal mechanisms of learning and incentive valuation in the pathogenesis of psychosis.