To the Editor: Depression and anxiety are leading causes of disability worldwide, yet most people with these conditions do not receive treatment (1). Technology-based interventions have the potential to reduce this treatment gap (1–3). The potential advantages, including low cost, widespread availability, low stigma, and an emerging evidence base, have spurred research into the development of smartphone-based mental health interventions (4). However, research on the real-world reach of these interventions has been scarce; identifying whether they expand access to treatment is considered one of the top 10 priorities in digital mental health research (5).

As a step toward this goal, we examined the reach of smartphone applications (apps) for depression and anxiety. We searched for “depression” and “anxiety” in the Google Play Store and identified the top 50 apps for each search, hereafter referred to as “depression apps” and “anxiety apps.” We limited our analyses to apps offering treatment, services, or skill-building (see the Details About Results section in the online supplement) and acquired data on downloads, average daily active users (unique users who opened the app on a given day), and monthly active users (unique users who opened the app in a given month). Data were collected from Mobile Action and SimilarWeb, two companies that offer usage estimates for commercially available apps (see the Details About Methodology section in the online supplement); we restricted our search to the Google Play Store because SimilarWeb offers data only for Android apps.

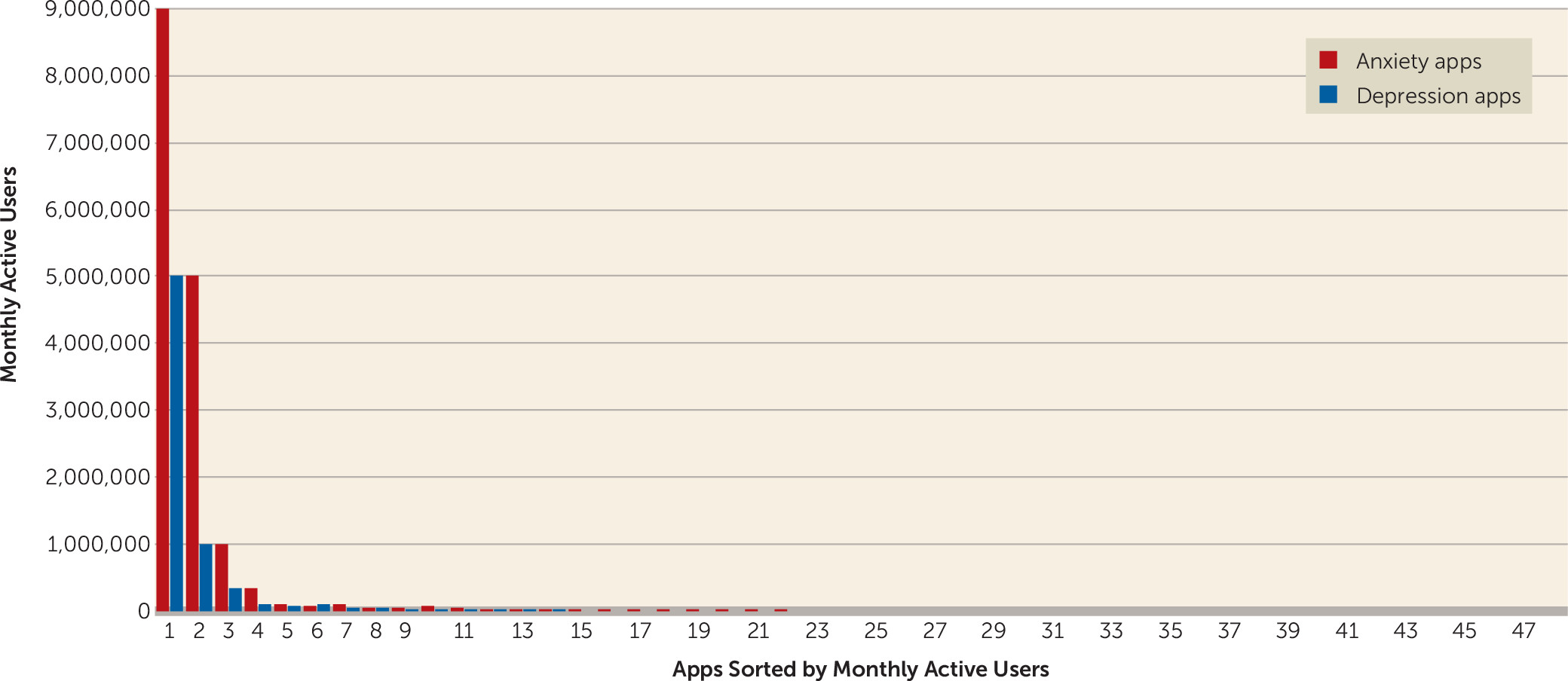

Across a 1-month period, the depression and anxiety apps averaged about 10,000 downloads, several thousand daily active users, and a few hundred thousand monthly active users (see the Details About Results section in the online supplement). A handful of apps account for nearly all the activity: the top three apps for depression (Headspace, Youper, and Wysa) and for anxiety (Calm, Headspace, and Youper) were responsible for about 90% of downloads, daily active users, and monthly active users. In addition, 63% of depression apps and 56% of anxiety apps had no monthly active users (Figure 1). These findings have at least three implications for future research.
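The concentration figures reported here reduce to two simple proportions: the share of total activity contributed by the three most-used apps, and the share of apps with no monthly active users. The Python sketch below illustrates that arithmetic on invented numbers only. The app names, user counts, and the helper functions `top_k_share` and `share_with_zero` are hypothetical; they do not reproduce the study's data, the usage estimates from Mobile Action or SimilarWeb, or the authors' analysis.

```python
"""Sketch: quantifying how concentrated app usage is.

All app names and user counts below are hypothetical placeholders,
not data from this study.
"""


def top_k_share(counts: dict[str, int], k: int = 3) -> float:
    """Fraction of the total count contributed by the k largest apps."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    top = sorted(counts.values(), reverse=True)[:k]
    return sum(top) / total


def share_with_zero(counts: dict[str, int]) -> float:
    """Fraction of apps whose count is exactly zero."""
    if not counts:
        return 0.0
    return sum(1 for c in counts.values() if c == 0) / len(counts)


# Hypothetical monthly active users (MAU) for ten apps.
monthly_active_users = {
    "app_a": 500_000,
    "app_b": 300_000,
    "app_c": 100_000,
    "app_d": 60_000,
    "app_e": 40_000,
    "app_f": 0,
    "app_g": 0,
    "app_h": 0,
    "app_i": 0,
    "app_j": 0,
}

print(f"Top-3 share of MAU: {top_k_share(monthly_active_users):.0%}")
print(f"Apps with no MAU:   {share_with_zero(monthly_active_users):.0%}")
```

With these made-up counts the script prints a top-3 share of 90% and a zero-MAU share of 50%. The same two functions could be applied separately to downloads, daily active users, and monthly active users, which is why they take a generic mapping of app to count rather than a fixed metric.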

First, extreme variations in app usage mean that analyses of app content alone cannot tell us much about the content most users experience. Several such analyses have appeared in recent years. For example, one review reported that only 7% of 208 commercially available mental health apps are based on meditation (6). Does this mean that few app users are exposed to meditation options? Our data suggest otherwise. We found that two meditation apps, Headspace and Calm, account for more than 50% of users of depression apps and anxiety apps. This example highlights a broader point: an important distinction should be made between content that is available on the app store and the content that people commonly use. Notably, some recent reviews have limited analyses to the top search results for a given search term (7, 8). However, our results show that popularity varies even within the top search results, suggesting that search rank cannot be substituted for usage data. Instead, reviews that incorporate user data are needed.

Second, our findings have implications for the evaluation and monitoring of mental health apps. The American Medical Association, APA, and the U.S. Food and Drug Administration have begun developing standards for evaluating mental health apps to provide guidance to consumers and clinicians. Given that there are more than 10,000 commercially available mental health apps and more than 45 app evaluation frameworks (9, 10), efforts to rigorously evaluate large numbers of apps could lead to excessive costs, low-quality evaluations, or delayed rollout. Usage findings could be used to determine which apps warrant the effort that will be required to implement rigorous evaluation frameworks. A case can be made for prioritizing evaluation of the apps that are being used at rates substantial enough to have the potential for greatest impact. Importantly, apps with large numbers of users may not necessarily be effective; further evaluations of these apps could incorporate data from clinical trials or compare app content with established evidence-based practices (7).

Third, our findings highlight a critical question for app developers and researchers: How can they build apps that people will use? The growing optimism that apps can address the mental health treatment gap (1–3) has led many research teams to develop apps, and some have encouraged practitioners to independently develop their own apps (11). Our findings call for caution and indicate that the majority of mental health apps fail to gain traction. Although some apps have the potential to bridge the treatment gap, further research incorporating user data may clarify which apps have actually reached people who previously lacked access to services.

Overall, a small number of apps have attracted large numbers of active users and substantial market penetration, meeting one criterion for a meaningful public health impact. Discerning what gives these apps such appeal, and thus potential for real impact, will be an important goal for research in the years ahead.

Acknowledgments

The authors acknowledge Scott Mulligan, Matthew Ventures, Benjamin Edwards, and Black Vein Productions for their support in identifying application analytics providers and interpreting application analytics data, as well as Michelle Shih for reviewing and editing the manuscript.